Notes:

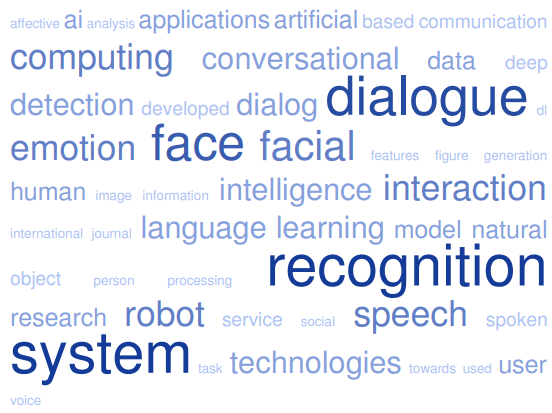

Facial recognition uses artificial intelligence (AI) to identify individuals from their facial features. A system captures an image or video of a person’s face, extracts a representation of it, and compares that representation against a database of known faces to determine the person’s identity.
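The comparison step can be illustrated with a minimal sketch. It assumes each face has already been converted to a numeric vector (an "embedding") by some trained model, which is not shown here. The names `cosine_similarity`, `identify`, `gallery`, and the threshold of 0.8 are hypothetical choices for illustration, not part of any particular library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(probe, gallery, threshold=0.8):
    """Return the best-matching name, or None if nothing reaches the threshold."""
    best_name, best_score = None, threshold
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Toy 3-dimensional vectors (assumed values); real embeddings are much longer.
gallery = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 0.2, 0.95]}

known_face = identify([0.88, 0.15, 0.02], gallery)
unknown_face = identify([0.5, 0.5, 0.5], gallery)
print(known_face, unknown_face)
```

The threshold matters in practice: without it, every probe would be assigned to whichever known face happens to be closest, even when the person is not in the database at all.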

Expression recognition, also known as facial expression analysis, is a related technology and a form of emotion recognition that uses AI to interpret facial expressions and infer a person’s emotional state. It analyzes the movements and positions of facial muscles and features, such as the eyebrows, mouth, and eyes, to detect emotions such as happiness, sadness, anger, surprise, or disgust.

Facial recognition and expression recognition are distinct technologies, but both apply AI and machine learning to data from a person’s face. Facial recognition is used primarily for identification, while expression recognition is used to interpret emotional states. The two can be combined to estimate both who a person is and how they appear to feel.

Expression recognition can be coordinated with a dialog system so that a chatbot responds more effectively to a user’s emotional state. For example, if the chatbot detects that a user is frustrated or angry, it could respond in a calmer, more empathetic manner to de-escalate the situation. Conversely, if it detects that a user is happy or excited, it could respond with more enthusiasm and positivity.
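As a rough sketch of that coordination, the snippet below maps a detected emotion to a response style. It assumes an upstream recognizer (not shown) that returns an emotion label and a confidence score. The label set, the `RESPONSE_STYLES` table, the 0.6 cutoff, and the canned prefixes are all illustrative assumptions.

```python
# Hypothetical mapping from detected emotion to a response style.
RESPONSE_STYLES = {
    "anger": "calming",
    "frustration": "calming",
    "happiness": "enthusiastic",
    "excitement": "enthusiastic",
}

# Hypothetical sentence openers for each style.
PREFIXES = {
    "calming": "I'm sorry this has been difficult. ",
    "enthusiastic": "That's great! ",
    "neutral": "",
}

def choose_style(emotion, confidence, min_confidence=0.6):
    """Fall back to a neutral style when the recognizer is unsure."""
    if confidence < min_confidence:
        return "neutral"
    return RESPONSE_STYLES.get(emotion, "neutral")

def respond(message, emotion, confidence):
    """Prepend a style-appropriate opener to the system's reply."""
    return PREFIXES[choose_style(emotion, confidence)] + message

print(respond("Let's try resetting your password.", "frustration", 0.8))
print(respond("Let's try resetting your password.", "frustration", 0.3))
```

Falling back to a neutral style at low confidence is a deliberate choice here: reacting to a misread emotion can be worse than not reacting at all.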

Emotion corpora, also known as emotion datasets, are collections of data used to train and evaluate emotion recognition systems. Emotion lexicons are a related but distinct resource: word lists in which each entry is associated with one or more emotions. Emotion recognition, which is broader than facial expression analysis, uses AI to interpret facial expressions, vocal patterns, or written language to determine a person’s emotional state.

Emotion corpora typically consist of large collections of annotated data in which each data point is labeled with the emotional state it represents. They can include text, audio, or video, and are used to train machine learning algorithms to recognize and classify emotions.
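To make the idea of annotated data concrete, here is a minimal sketch of how such a corpus might be represented. The `Example` record, its field names, the file paths, and the five sample entries are invented for illustration and do not come from any real dataset.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Example:
    modality: str  # "text", "audio", or "video"
    content: str   # raw text, or a path to a media file
    label: str     # the annotated emotion

# A tiny invented corpus; real corpora contain thousands of examples or more.
corpus = [
    Example("text", "I can't believe this broke again.", "anger"),
    Example("text", "Thank you, that worked perfectly!", "happiness"),
    Example("audio", "clips/0001.wav", "sadness"),
    Example("video", "clips/0002.mp4", "surprise"),
    Example("text", "This is wonderful news.", "happiness"),
]

# The label distribution shows whether some emotions are under-represented.
label_counts = Counter(example.label for example in corpus)
print(label_counts)
```

Checking the label distribution first is a common sanity step, since a corpus dominated by one emotion tends to produce a classifier biased toward it.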

The role of emotion corpora is to supply large, diverse sets of examples for training and evaluating emotion recognition systems. Because they cover a wide range of emotions and the contexts in which those emotions are expressed, they can help improve accuracy and reliability.

By training and evaluating on such corpora, researchers and developers can measure how accurately a system recognizes emotions across different contexts, rather than assuming that it does. This can make emotion recognition systems more useful and supports the development of more sophisticated, human-like AI systems.
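Evaluation against a labeled corpus can be sketched as follows, assuming a held-out set of gold labels and a system's predictions for the same items. The helper `per_emotion_recall` and the sample labels are hypothetical and serve only to show the calculation.

```python
from collections import defaultdict

def per_emotion_recall(gold, predicted):
    """Fraction of items of each gold emotion that the system labeled correctly."""
    totals, correct = defaultdict(int), defaultdict(int)
    for g, p in zip(gold, predicted):
        totals[g] += 1
        if g == p:
            correct[g] += 1
    return {label: correct[label] / totals[label] for label in totals}

# Invented held-out labels and system predictions.
gold = ["anger", "anger", "happiness", "sadness", "happiness"]
predicted = ["anger", "sadness", "happiness", "sadness", "happiness"]

recall = per_emotion_recall(gold, predicted)
accuracy = sum(g == p for g, p in zip(gold, predicted)) / len(gold)
print(recall, accuracy)
```

Reporting a score per emotion alongside overall accuracy helps reveal cases where a system does well on frequent emotions but poorly on rare ones.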

See also:

100 Best Faceposer Videos | 100 Best Faceshift Videos

An open-source dialog system with real-time engagement tracking for job interview training applications

Z Yu, V Ramanarayanan, P Lange… – … Social Interaction with …, 2019 – Springer

… such as face recognition, head tracking, etc. have improved. Those technologies are now robust enough to tolerate a fair amount of noise in the visual and acoustic background [3, 7]. So it is now possible to incorporate these technologies into spoken dialog systems to make the …

Emotion recognition based preference modelling in argumentative dialogue systems

N Rach, K Weber, A Aicher… – 2019 IEEE …, 2019 – ieeexplore.ieee.org

… ”A human-computer dialogue system for educational … IRIS.” 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (2009): 1-8. [37] B. Amos, B. Ludwiczuk, M. Satyanarayanan, ”Openface: A general-purpose face recognition library with …

Does gender matter? towards fairness in dialogue systems

H Liu, J Dacon, W Fan, H Liu, Z Liu, J Tang – arXiv preprint arXiv …, 2019 – arxiv.org

… 3]. In other words, AI techniques may make decisions that are skewed towards certain groups of people in these applications [4]. In the field of computer vision, some face recognition algorithms fail to … Table 1: Examples of Gender and Racial Biases in Dialogue Systems …

From smart to personal environment: integrating emotion recognition into smart houses

D Fedotov, Y Matsuda, W Minker – 2019 IEEE International …, 2019 – ieeexplore.ieee.org

… They also can be extracted in real-time with open source software openSMILE [32]. When several people are living together and use the same dialogue system, a face recognition and speaker diarization is required before the feature extraction …

Data fusion methods in multimodal human computer dialog

Y Ming-Hao, TAO Jian-Hua – Virtual Reality & Intelligent Hardware, 2019 – Elsevier

… After decades of development, the man-machine dialogue system has developed from the early telephone voice system, such as … technology contribute to largely improvement for single channel behavior perception, including speech recognition15, 16, face recognition17, 18 …

Learning emotion recognition and response generation for a service robot

JY Huang, WP Lee, BW Dong – IFToMM International Symposium on …, 2019 – Springer

… Essentially, the dialogue system includes a knowledge base with domain questions and the corresponding answers, and the dialogue service is to … on a newly developed emotion-aware dialoguing service, in which the robot can identify users (by face recognition) and their …

Say what i want: Towards the dark side of neural dialogue models

H Liu, T Derr, Z Liu, J Tang – arXiv preprint arXiv:1909.06044, 2019 – arxiv.org

… invalid. Second, when trying to manipulate a dialogue system released by others, it is impractical for us to interact with it for unlimited times. Based … mistakes. Besides, Sharif et al. [23] focus on attacking face recognition models; Xie et al. [24 …

Evaluating Methods for Emotion Recognition based on Facial and Vocal Features.

M Ley, M Egger, S Hanke – AmI (Workshops/Posters), 2019 – ceur-ws.org

… [13] M. Ley, Emotion recognition from facial recognition and bio signal … [27] A. Haag, S. Goronzy, P. Schaich, and J. Williams, Emotion Recognition Using Bio-sensors: First Steps towards an Automatic System, Tutorial and research workshop on affective dialogue systems, p. 36 …

A Voice Interactive Multilingual Student Support System using IBM Watson

K Ralston, Y Chen, H Isah… – 2019 18th IEEE …, 2019 – ieeexplore.ieee.org

… [21] proposed and evaluated a novel neural network architecture for an emotion-sensitive neural chat-based dialogue system, optimized on the … 3. The intelligent robotic system is clearly separated into two main parts namely dynamic face recognition and voice communication …

AMUSED: A Multi-Stream Vector Representation Method for Use in Natural Dialogue

G Kumar, R Joshi, J Singh, P Yenigalla – arXiv preprint arXiv:1912.10160, 2019 – arxiv.org

… 4.4 TRIPLET LOSS Triplet loss has been successfully used for face recognition (Schroff et al., 2015) … Such a study helps us understand the impact of different modules on a human based conversation. Dialogue system proposed by Zhang et al …

Deep unknown intent detection with margin loss

TE Lin, H Xu – arXiv preprint arXiv:1906.00434, 2019 – arxiv.org

… 1 Introduction In the dialogue system, it is essential to identify the unknown intents that have never appeared in the training set … Weiyang Liu, Yandong Wen, Zhiding Yu, Ming Li, Bhiksha Raj, and Le Song. 2017. Sphereface: Deep hypersphere embedding for face recognition …

Annotation-efficient approaches towards real-time emotion recognition

IP Lajos – 2019 – ritsumei.repo.nii.ac.jp

… Dialogue systems, for example, are often applied as telephone-customer-service agents and can rely only on audio features. Even in the case of computer games, where facial recognition is often feasible, a shadow on the user’s face, unleveled position of the camera, or the …

Mining Polysemous Triplets with Recurrent Neural Networks for Spoken Language Understanding

V Vukotić, C Raymond – 2019 – hal.archives-ouvertes.fr

… To address this issue, in the task of face recognition and person re-identification, an architecture consisting of 3 instances of … MEDIA (Méthodologie d’Evaluation automatique de la compréhension hors et en contexte du DIAlogue – evaluation of man-machine dialogue systems) …

Nadine Humanoid Social Robotics Platform

M Ramanathan, N Mishra, NM Thalmann – Computer Graphics …, 2019 – Springer

… Nadine has been equipped dedicated modules for face recognition, gaze behavior, speech recognition and synthesis, action recognition, object recognition, memory of users, affective system (to model her personality, emotion, mood), data processing or dialog system …

Hierarchical Tensor Fusion Network for Deception Handling Negotiation Dialog Model

K Yoshino, S Sakti, S Nakamura – ahcweb01.naist.jp

… on the success of the negotiation [1]. Thus, it is critical to build an accurate deception detection module when constructing negotiation dialog systems … TFN is reported as the best method for improving the accuracy of face recognition and sentiment analysis tasks, because it can …

Towards visual behavior detection in human-machine conversations

O Roesler, D Suendermann-Oeft – 2019 Joint 8th International …, 2019 – ieeexplore.ieee.org

… Abstract—In this paper, we investigate how multiple conversational behaviors can be detected by automatically analyzing facial expressions in video recordings of users talking to a dialog system … Emotion detection in dialog systems – usecases, strategies and challenges …

Human-robot interaction with smart shopping trolley using sign language: data collection

D Ryumin, D Ivanko, A Axyonov… – 2019 IEEE …, 2019 – ieeexplore.ieee.org

… for further research and experiments on Russian sign language recognition system, which will be embedded into the dialogue system of the … Conference on Artificial Intelligence, 2013 [21] PK Pisharady, P. Vadakkepat, and AP Loh, “Hand posture and face recognition using a …

Towards understanding lifelong learning for dialogue systems

M Cieliebak, O Galibert, JM Deriu – … on Spoken Dialogue Systems …, 2019 – dreamboxx.com

… for dialogue systems, there are also other goals, as we discuss in the following section. In addition, we would like to mention that the concept of a ”task” may cover situations of varying complexity, ranging from single new instances (eg a new person in a face recognition system …

Chatbots as Moral and Immoral Machines

O Bendel – … Artefacts in Machine Ethics. CHI 2019 …, 2019 – robophilosophy.swissbooks.net

… Since I have already illuminated the risks of facial recognition, especially of newer forms associated with physiognomy and biometrics in their delicate form, in a paper and in lectures, it is quite clear to me that with the … Dialogue systems can be easily integrated and networked …

Towards Environment Aware Social Robots using Visual Dialog

A Singh, M Ramanathan, R Satapathy… – aalind0.github.io

… images to perceive its environment and human-robot conversation history as text are combined to develop a Visual Dialog system … 2. The perception layer recognizes various cues from the surrounding environment using modules such as face recognition, gesture recognition …

A fuzzy logic approach to reliable real-time recognition of facial emotions

K Bahreini, W van der Vegt, W Westera – Multimedia Tools and …, 2019 – Springer

… The combination of posture and conversational dialogue systems reveals a modest amount of redundancy among them [17] … After loading images and their related emotion labels from the CK+ database, face recognition and face tracking functionalities from DLIB were used and …

Towards an Interactive Learning Ecosystem: Education in Power Systems?

G Santamaría-Bonfil – researchgate.net

… Tracking devices such as LM or RS300 are 3D cameras which are capable of gesture tracking, face recognition, and estimate affective states by using skeleton, head, facial, hands … These commands are in the form of speech (ie Spoken Dialogue Systems) or text (ie Chatbots) …

Progress of artificial intelligence (AI)

TMI Băjenescu – 2019 – ip-81.180.74.21.utm.renam.md

… the reasoning of human being; biometrics – face recognition; logistics, reliability and accuracy of sensory information; automatic learning, artificial vision, robotics and innovations. The AI progress requires standardization, clear benchmarks (eg, dialogue systems, planning and …

Multimodal dialogue processing for machine translation

A Waibel – The Handbook of Multimodal-Multisensor Interfaces …, 2019 – dl.acm.org

… Field-adaptable and extendable systems. Languages and vocabularies change, and interpreting dialogue systems must evolve alongside such changing languages and vocabularies and adapt to any given dialogue scenario …

Project r-castle: Robotic-cognitive adaptive system for teaching and learning

D Tozadore, AHM Pinto, J Valentini… – … on Cognitive and …, 2019 – ieeexplore.ieee.org

… There are some open-source programmed behaviors (as object and face recognition, web … the specific algorithms to collect the objective measures, those that depend on users’ verbal communication (nW, RWa, Tta) will be provided by the Dialogue System, whereas the …

An overview of machine learning techniques applicable for summarisation of characters in videos

G Nair, KE Johns, A Shyna… – … Conference on Intelligent …, 2019 – ieeexplore.ieee.org

… Face recognition for video surveillance with aligned facial landmarks learning [6] uses active shape model to align and convert the data … Real-Time Speech Emotion and Sentiment Recognition for Interactive Dialogue Systems [25] aims at improving the user experience while …

Privacy concerns of multimodal sensor systems

G Friedland, MC Tschantz – The Handbook of Multimodal-Multisensor …, 2019 – dl.acm.org

… profiling people. Meanwhile, advances in multimedia content analysis (face recognition, speaker verification, location estimation, etc.) provides the ability to analyze and integrate information like never before. While discussion …

A Survey of Artificial Intelligence Techniques on MOOC of Legal Education

Y Weng, X Liang, H Duan, S Gu, N Wang… – National Conference on …, 2019 – Springer

… Entity recognition and relationship extraction of long sentences, automatic generation of knowledge base, multi-round dialogue system, and accurate … A variety of artificial intelligence technologies can be used for this purpose, including face recognition and student portrait …

Artificial intelligence systems for programme production and exchange

BT Series – 2019 – xn—-vmcebbajlc6dj7bxne2c.xn …

… class of algorithms performs generative speech synthesis and enable candidate texts to be generated by the dialogue system and converted … Image and audio analysis technologies including face recognition, scene text detection and audio feature detection will make it possible …

Unobtrusive Vital Data Recognition by Robots to Enhance Natural Human–Robot Communication

G Bieber, M Haescher, N Antony, F Hoepfner… – … , Societal and Ethical …, 2019 – Springer

… signal. Therefore, face recognition and head tracking technologies support the readjustment of the ROI and the assessment of a change in color … system. In Tutorial and Research Workshop on Affective Dialogue Systems (pp. 36–48) …

Human-Augmented Robotic Intelligence (HARI) for Human-Robot Interaction

V Mruthyunjaya, C Jankowski – Proceedings of the Future Technologies …, 2019 – Springer

… For the video input, the image processing executes tasks such as face tracking, face recognition, facial features extraction, object detection … From a spoken dialogue system point of view, the spoken language understanding has three main components: (a) domain classification …

13 Older adults’ experiences with Pepper humanoid robot

A Poberznik, S Merilampi – Tutkimusfoorumi – theseus.fi

… Pepper quickly scanned the subject’s face and matched the photo with the existing photo database using its facial recognition function … Towards Metrics of Evaluation of Pepper Robot as a Social Companion for the Elderly: 8th International Workshop on Spoken Dialog Systems …

Study on the application of cloud computing and speech recognition technology in English teaching

L Wei – Cluster Computing, 2019 – Springer

… Experimental results show that the efficiency of the man–machine dialogue system with each link based on cloud computing is improved … 1–8 (2015). 2. Hossain, MS, Muhammad, G.: Cloud-assisted speech and face recognition framework for …

A Supplementary Feature Set for Sentiment Analysis in Japanese Dialogues

PL Ihasz, M Kovacs, I Piumarta… – ACM Transactions on …, 2019 – dl.acm.org

… Dialogue systems, for example, are often used as telephone-customer-service agents, thus they can rely only on audio features. Even in the case of computer games, where facial recognition is often feasible, a shadow on the user’s face, an un-leveled camera or the presence …

Efficient spatial temporal convolutional features for audiovisual continuous affect recognition

H Chen, Y Deng, S Cheng, Y Wang, D Jiang… – Proceedings of the 9th …, 2019 – dl.acm.org

… ACM, Nice, France, 8 pages. https://doi.org/10.1145/3347320.3357690 1 INTRODUCTION Automatic affective dimension recognition is an essential capability for a vast range of real-life applications such as human-robot interaction and dialogue systems [20, 21] …

Emoty: an emotionally sensitive conversational agent for people with neurodevelopmental disorders

F Catania, N Di Nardo, F Garzotto… – Proceedings of the 52nd …, 2019 – 128.171.57.22

… Emoty is a voice-based Italian speaking Dialog System able to converse with the users in ordinary natural language and to entertain them with small talks and educational games … Emoty is a goal oriented, domain restricted and proactive Dialog System …

ULearn: understanding and reacting to student frustration using deep learning, mobile vision and NLP

L Grewe, C Hu – Signal Processing, Sensor/Information Fusion …, 2019 – spiedigitallibrary.org

… Regarding speech, a survey of research on emotion detection can be found in [7]. Related to speech is dialog systems and in [7] the authors discuss steps in research they are taking to attempt to create dialog systems that facilitate self-learning …

FinBrain: when finance meets AI 2.0

X Zheng, M Zhu, Q Li, C Chen, Y Tan – Frontiers of Information Technology …, 2019 – Springer

… demonstrated that deep learning provides a powerful tool for undertaking the main challenges in face recognition, including reducing … 2. Dialogue management and generation Practical dialogue systems consist of a natural language understanding module, a natural language …

Study of speech enabled healthcare technology

S Debnath, P Roy – International Journal of Medical …, 2019 – inderscienceonline.com

… They have used SRT for developing spoken dialogue system aiming to support home healthcare services … Classify different type and severity of voice pathologies of dysphonic patients using ASR. 2.7 Cloud assisted speech and face recognition framework for healthcare (2015) …

The role of speech technology in biometrics, forensics and man-machine interface.

S Singh – International Journal of Electrical & Computer …, 2019 – search.ebscohost.com

… speech, but the speech generated by the machine lacks individuality, expression and the communicative intent and the dialogue systems of the … Haptics is another field of science, which lends fusion to well between emotional recognition of facial recognition and facial features …

Bridging the Gap between Robotic Applications and Computational Intelligence in Domestic Robotics

J Zhong, T Han, A Lotfi, A Cangelosi… – 2019 IEEE Symposium …, 2019 – ieeexplore.ieee.org

… applications. [Table residue: column headers Virtual IoT Interactive Service; rows SLAM and Navigation, Object Recognition, Face Recognition, Action Recognition, Emotion Recognition, Speech Recognition, Dialog System; cell marks not recoverable] …

Time to Go ONLINE! A Modular Framework for Building Internet-based Socially Interactive Agents

M Polceanu, C Lisetti – Proceedings of the 19th ACM International …, 2019 – dl.acm.org

… diverse modules like new characters, data-driven behavior models (using for example TensorFlow.js), dialogue systems or even … Compute-intensive functionality (speech/face recognition) can be done asynchronously via web services or with functionalities built into the browser …

Communication Effiency and User Experience Analysis of Visual and Audio Feedback Cues in Human and Service Robot Voice Interaction Cycle

H Song, JTC Tan, Y Xing, G Hou – 2019 WRC Symposium on …, 2019 – ieeexplore.ieee.org

… In the above voice dialogue system, although the basic interaction cycle can be realized, in actual life, in order to ensure the smooth progress of the conversation between the person and the … In Figure 3, Kinect is used for navigation; Astra is used for face recognition and tracking …

A Survey on Virtual Personal Assistant

S Modhave, S Soniminde, A Mogarge, MK Tajane… – academia.edu

… The dialogue system is one of a functioning territory that numerous organizations use to structure and improve their new frameworks … E. Face Detection module A Face recognition module persistently filters the video contribution from an associated webcam and, at whatever point …

Comparison and efficacy of synergistic intelligent tutoring systems with human physiological response

F Alqahtani, N Ramzan – Sensors, 2019 – mdpi.com

… or slow down the exercise. Therefore, facial recognition has been cited as one of the more important aspects of estimating psychological, physiological and physiological indicators in a subject [47]. This method provides a contactless …

From the Inside Out: A Literature Review on Possibilities of Mobile Emotion Measurement and Recognition.

M Meyer, P Helmholz, M Rupprecht, J Seemann… – Bled …, 2019 – researchgate.net

… Furthermore, this research should give an overview of the evolution and current types of emotion detection (eg biofeedback, facial recognition) as well as of previous research results. 3 Theoretical Background … 1. Optical emotion recognition (Face recognition) Quantity: 12 …

Advanced Programming of Intelligent Social Robots

D Malerba, A Appice, P Buono, G Castellano… – Journal of e-Learning …, 2019 – je-lks.com

… 2018). Other common computer vision tasks are face recognition and detection of facial expressions and emotions, which are useful to convey the user’s feelings to the robot … Fig. 1 – Overview of a generic dialog system …

Communicating with SanTO–the first Catholic robot

G Trovato, F Pariasca, R Ramirez… – 2019 28th IEEE …, 2019 – ieeexplore.ieee.org

… A dialogue system, integrated within the multimodal communication consisting of vision, touch, voice and lights, drives the interaction with the users … with SanTO is initiated by connecting the LED candle, with no other interfaces other than touch, voice and face recognition …

MuMMER: Socially Intelligent Human-Robot Interaction in Public Spaces

ME Foster, B Craenen, A Deshmukh, O Lemon… – arXiv preprint arXiv …, 2019 – arxiv.org

… Figure 5: Architecture of the Dialogue system. The blue parts on the left represent the task management and execution system, the green parts on the right represent Alana as the dialogue system … Openface: A general-purpose face recognition library with mobile applications …

Situated interaction

D Bohus, E Horvitz – The Handbook of Multimodal-Multisensor Interfaces …, 2019 – dl.acm.org

… The earliest attempts at dialog between computers and people were text-based dialog systems, such as Eliza [Weizenbaum 1966], a pattern-matching chat-bot that emulated a psychotherapist, and SHRDLU [Winograd 1971], a natural language understanding system that …

End-to-end facial and physiological model for Affective Computing and applications

J Comas, D Aspandi, X Binefa – arXiv preprint arXiv:1912.04711, 2019 – arxiv.org

… Thus, we may have a less noisy features. This has been shown to improve the model estimates on other computing fields, such as facial recognition [43], object classification [44], music generation [45], data compression [18], dimensionality reduction [19] etc …

Interaction with Robots

G Skantze, J Gustafson, J Beskow – The Handbook of Multimodal …, 2019 – books.google.com

… For a longtime, spoken dialogue systems developed in research labs and employed in the industry also lacked any physical … Language Understanding (NLU) Bystander Addressee Side participant Cameras Face detection Gaze/Head pose tracking Face recognition Objects of …

Developing context-aware dialoguing services for a cloud-based robotic system

JY Huang, WP Lee, TA Lin – IEEE Access, 2019 – ieeexplore.ieee.org

… The most common way of achieving natural language-based human-robot interactions is to develop a dialogue system as a vocal interactive interface between them …

Future English learning: Chatbots and artificial intelligence

NY Kim, Y Cha, HS Kim – Multimedia-Assisted Language …, 2019 – pdfs.semanticscholar.org

… CSIEC is an intelligent web-based human-computer dialogue system with natural language and a learning assessment system for learners and teachers … Lim et al. (2018) developed an AI face recognition program based on “facial landmarks” to improve English pronunciation …

Entity resolution for noisy ASR transcripts

A Raghuvanshi, V Ramakrishnan, V Embar… – Proceedings of the …, 2019 – aclweb.org

… Our dialog system consists of a set of classifiers, information retrieval components and a dialogue manager as described in Raghuvanshi et … The caller’s identity can be determined by a variety of methods including authentication, device pairing, face recognition, or speaker …

A COMMUNICATIVE ROBOT TO LEARN ABOUT US AND THE WORLD

S Baez – dialog-21.ru

… 6, 7, (2015). 2. Amos, B. et al.: OpenFace: A general-purpose face recognition library with mobile applications … (2016). 9. Lowe, R. et al.: The ubuntu dialogue corpus: A large dataset for research in unstructured multi-turn dialogue systems. arXiv preprint arXiv:1506.08909 …

Stepped Warm-Up–The Progressive Interaction Approach for Human-Robot Interaction in Public

M Zhao, D Li, Z Wu, S Li, X Zhang, L Ye, G Zhou… – … Conference on Human …, 2019 – Springer

… 1). Xiaodu is benefited from the AI techniques (eg, NLP, dialogue system, speech recognition) of Baidu, and is able to communicate smoothly with users in multiple aspects, such as communicating emotions … Facial Expressions, Voice and Face Recognition in Near Field …

Studying affective tutoring systems for mathematical concepts

D Mastorodimos… – Journal of Educational …, 2019 – journals.sagepub.com

Students face difficulties in learning mathematical processes. As a result, they have negative emotions toward mathematics. The use of technology is employed to change the student’s attitude toward…

Session 1A: Social Media

M Guan, M Cha, Y Li, Y Wang, J Yu, M Yokoyama… – computer.org

… 262 ,Katsurou Takahashi and Hiroaki Ohshima ,Question Understanding based on Sentence Embedding on Dialog Systems for Banking ,Service … 288 ,Sang Le, Yuyang Dong, Hanxiong Chen, and Kazutaka Furuse ,Session 7B: Face Recognition & Speech ,Real-Time Facial …

An ICA-based method for stress classification from voice samples

D Palacios, V Rodellar, C Lázaro, A Gómez… – Neural Computing and …, 2019 – Springer

… a hot topic nowadays for its potential application to intelligent systems in different fields such as neuromarketing, dialogue systems, friendly robotics … in several fields of research, for instance in segmentation and clustering of medical images [22] or in face recognition [23], among …

Multimodal sentiment analysis: A survey and comparison

R Kaur, S Kautish – International Journal of Service Science …, 2019 – igi-global.com

… recognition system realistic to the HRI has been designated. Their grind has been realistic in a general interface (or dialog) system, called RDS. Using … contained in the audio data and movement of face, ie expressions sent by visual data. Detection named as human face recognition plays a …

A review of computational approaches for human behavior detection

S Nigam, R Singh, AK Misra – Archives of Computational Methods in …, 2019 – Springer

Computer vision techniques capable of detecting human behavior are gaining interest. Several researchers have provided their review on behavior detection, however most of the reviews are focused on…

Multi-device digital assistance

RW White, A Fourney, A Herring, PN Bennett… – Communications of the …, 2019 – dl.acm.org

… The user-facing camera (webcam, infrared camera) on many laptops and tablets can add vision-based skills such as emotion detection and face recognition to smart … 7. Pardal JP and Mamede NJ Starting to cook a coaching dialogue system in the Olympus framework …

2Multimodal

G Skantze, J Gustafson, J Beskow – The Handbook of Multimodal-Multisensor … – dl.acm.org

… For a long time, spoken dialogue systems developed in research labs and employed in the industry also lacked any … Speech Recognition (ASR) Natural Language Understanding (NLU) Cameras Face detection Gaze/Head pose tracking Face recognition Gesture recognition …

An Active Learning Paradigm for Online Audio-Visual Emotion Recognition

I Kansizoglou, L Bampis… – IEEE Transactions on …, 2019 – ieeexplore.ieee.org

Creating Dynamic Robot Utterances in Human-Robot Social Interaction: Comparison of a Selection-Based Approach and a Neural Network Approach on Giving Robot …

P Andersson, ES Ko – 2019 – diva-portal.org

… it will be investigated by focusing on the dialogue state tracker and dialogue policy of the dialogue system. Users will communicate with the robot through a console in which the user is to give input as directed. Other human behaviors such as voice and facial recognition will not …

Empirical study and improvement on deep transfer learning for human activity recognition

R Ding, X Li, L Nie, J Li, X Si, D Chu, G Liu, D Zhan – Sensors, 2019 – mdpi.com

… Some researchers studied the switching of conversation models in different scenarios within NLP (Natural Language Processing), so that the dialogue system can satisfy the needs of users [15]. We focus on the transfer learning based on features …

Ocean Mammalian sound recognition based on feature fusion

X Xing – Revista Cientifica-Facultad de Ciencias Veterinarias, 2019 – cientificajournal.com

… The direction of research is also increasingly focused on oral dialogue system … For example, face recognition, content-based image retrieval and other fields have corresponding application research results, but the research in speech recognition is still scarce …

Assistive Robot with Action Planner and Schedule for Family

S Konngern, N Kaibutr, N Konru… – … Conference on Ubi …, 2019 – ieeexplore.ieee.org

… Those are: ? DialogSytem – control and command dialog system. ? MotionControl … tasD. The face recognition feature on Zenbo will also be used to find users in a desires room as to increase the accuracy of a person that needs to be identified …

Context Awareness and Ambient Intelligence

A Przegalinska – Wearable Technologies in Organizations, 2019 – Springer

… Facebook uses AI for facial recognition to make its applications better than humans in identifying if two pictures are of the same person … 2010. “Ambient Intelligence Interaction via Dialogue Systems.” In Ambient Intelligence, edited by Felix Jesus Villanueva, 109–123 …

Apprentice of Oz: human in the loop system for conversational robot wizard of Oz

A Shin, JH Oh, J Lee – 2019 14th ACM/IEEE International …, 2019 – ieeexplore.ieee.org

… Conversational robots (CR) are robots that inherit the conversational abilities of a conversational agent, a human- like dialog system that interacts … component is designed to be extendable, it can be customized to fit particular experiments, such as using face recognition in the …

ALMA MATER STUDIORUM–BOLOGNA UNIVERSITY CESENA CAMPUS

D Maltoni, A Bernardino, I Lirussi – amslaurea.unibo.it

… While the trigger with the face-recognition for the approach has a backup way to be executed (with the button pressing), this activity does … This chapter will concern the dialog system, or conversational agent, implemented in the robot to find a suitable reply to the user sentence …

Text-Based Emotion Analysis: Feature Selection Techniques and Approaches

P Apte, SS Khetwat – Emerging Technologies in Data Mining and …, 2019 – Springer

… Emotion analysis can be done using facial recognition systems. Some authors have used speech patterns to observe the tone of the voice to do this task … Emotion analysis can help us in building the review summarization, dialogue system, and review ranking systems …

Mobile interaction and security issues

P Preelakha – …, 2019 – 122.154.0.175

… fingerprint. This paper will explore some notable examples including Touchscreen, Voice-Based Interaction, Iris Recognition, Face Recognition, Fingerprint Recognition, and Mobile Device Peripherals … Spoken dialogue systems which utilize speech for both input and output …

Multimodal conversational interaction with robots

G Skantze, J Gustafson, J Beskow – The Handbook of Multimodal …, 2019 – dl.acm.org

… For a long time, spoken dialogue systems developed in research labs and employed in the industry also lacked any physical embodiment … Cameras Face detection Gaze/Head pose tracking Face recognition Gesture recognition Object tracking …

GLOBAL JOURNAL OF ENGINEERING SCIENCE AND RESEARCHES

CS Mahalakshmi, T Sharmila, S Priyanka, MR Sastry… – gjesr.com

… keyword. conversational interface artificial conversation entities. Chatbot are typically used in dialogue systems for various practical purposes. Some chatbot … us. Chatbots can use visual information, such as facial recognition. The chatbots …

Emotional State Analysis Using Handheld Portable ECG Device

NY Khan, N Al Mahmood, H Kamal… – 2019 IEEE …, 2019 – ieeexplore.ieee.org

… Certain methods have been put forward to analyze and understand human emotions such as using facial recognition, speech recognition … sensors: First Steps towards an Automatic System,” In: André E., Dybkjær L., Minker W., Heisterkamp P. (eds) Affective Dialogue Systems …

Commercialization of multimodal systems

PR Cohen, R Tumuluri – The Handbook of Multimodal-Multisensor …, 2019 – dl.acm.org

… Whereas Mabu reminds users to take their medication, Pillo™ from PilloHealth is a pill dispensing robot that uses voice and face recognition to coordinate pill delivery to the right user … X Head pose X Object recognition X Person detection X X X Face recognition X X X X …

Cognitive computing on unstructured data for customer co-innovation

S Chen, J Kang, S Liu, Y Sun – European Journal of Marketing, 2019 – emerald.com

… Limited analysis. Because the technology basis of cognitive computing is machine learning and deep learning primally designed to make sense of massive amounts of data in special issue such as health care, facial recognition and so on (Trees, 2017) …

Cognitive checkpoint: Emerging technologies for biometric-enabled watchlist screening

SN Yanushkevich, KW Sundberg, NW Twyman… – Computers & …, 2019 – Elsevier

Subjective and Objective Measures

HA Ferreira, M Saraiva – Emotional Design in Human-Robot Interaction, 2019 – Springer

… Valence. Facial mimicry. Video facial recognition; EMG. Speech … In: Tutorial and research workshop on affective dialogue systems. Springer, Berlin, Heidelberg, pp 36–48. Hartridge H, Thompson LC (1948) Methods of investigating eye movements …

Conversational Interfaces for Explainable AI: A Human-Centred Approach

SF Jentzsch, S Höhn, N Hochgeschwender – International Workshop on …, 2019 – Springer

… autonomously. To do so, several crucial components such as navigation, path planning, speech and face recognition are required and integrated on the robot … 4. Inferring implications for an implementation in a dialogue system. Three …

Follow the Attention: Combining Partial Pose and Object Motion for Fine-Grained Action Detection

MMK Moghaddam, E Abbasnejad, J Shi – arXiv preprint arXiv:1905.04430, 2019 – arxiv.org

Follow the Attention: Combining Partial Pose and Object Motion for Fine-Grained Action Detection M. Mahdi Kazemi M. Ehsan Abbasnejad Javen Shi The Australian Institute for Machine Learning, The University of Adelaide …

Inferring Emotions from Touching Patterns

M Hashemian, R Prada, PA Santos… – 2019 8th …, 2019 – ieeexplore.ieee.org

… 15, no. 2, pp. 99–117, 2012. [4] A. Haag, S. Goronzy, P. Schaich, and J. Williams, “Emotion recognition using bio-sensors: First steps towards an automatic system,” in Tutorial and research workshop on affective dialogue systems. Springer, 2004, pp. 36–48 …

In bot we trust: A new methodology of chatbot performance measures

A Przegalinska, L Ciechanowski, A Stroz, P Gloor… – Business Horizons, 2019 – Elsevier

… Several real-world cases have shown the tremendous power that AI represents for companies. Facebook uses AI for facial recognition to make its applications better than humans at determining if two pictures are of the same person …

Living with Artificial Intelligence–Developing a Theory on Trust in Health Chatbots

W Wang, K Siau – researchgate.net

… AI applications include self-driving cars, chatbots, and humanoid robots that apply face recognition, speech recognition, natural language … Since natural language dialogues are more natural than graphic-based interfaces, spoken dialogue systems are the primary interaction …

Artificial intelligence in education

W Holmes, M Bialik, C Fadel – Boston: Center for …, 2019 – curriculumredesign.org

… Eg, http://www.predpol.com 18 Eg, https://www.cbp.gov/newsroom/national-media-release/cbp-deploys-facial-recognition-biometric-technology … papers will confirm, AIED includes everything from AI-driven, step-by-step personalized instructional and dialogue systems, through AI …

Wayfinding

D Sato, H Takagi, C Asakawa – Web Accessibility, 2019 – Springer

… Strothotte et al. (1996) developed a dialogue system to help wayfinding for blind users. This research may be the first significant attempt in the direction … On the Web, the system fully integrates face recognition (Schroff et al. 2015; Taigman et al …

The impact of robots, artificial intelligence, and service automation on service quality and service experience in hospitality

N Naumov – Robots, Artificial Intelligence, and Service Automation …, 2019 – emerald.com

… Automated border control e-gates and facial recognition systems. Computers & Security, 62, 49–72 … In G. Geunbae Lee, H. Kook Kim, M. Jeong, & J. Kim (Eds.), Natural language dialog systems and intelligent assistants (pp. 233–239) …

Ordinal Triplet Loss: Investigating Sleepiness Detection from Speech.

P Wu, SK Rallabandi, AW Black… – …, 2019 – pdfs.semanticscholar.org

… Paralinguistic information also has applications in other domains of speech processing such as dialog systems, speech synthesis, voice conversion, and more … [28] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering …

Simulators’ mimetic behaviours with automated simulation tools in wizard of oz study

KKFXM Zhong, XLWGX LI – ijds.yuntech.edu.tw

… three simulators for speech recognition, face recognition, and mouse control, respectively (Salber & Coutaz, 1993) … The simulators usually mimic a human-like spoken dialogue system in this study. Here, the ‘human-like spoken dialogue system’ is proposed for several reasons …

Eye-tracking introduction considerations in vestibular telerehabilitation in Latvia

A Gorbunovs, Z Timsans, I Grada – Periodicals of Engineering and …, 2019 – pen.ius.edu.ba

… Eye-tracking Facial recognition Gaze data Postural balance Telerehabilitation … of Human Eye Gaze,” in The 4th IEEE tutorial and research workshop on Perception and Interactive Technologies for Speech-Based Systems: Perception in Multimodal Dialogue Systems, 2008, pp …

Impact of artificial intelligence on businesses: from research, innovation, market deployment to future shifts in business models

N Soni, EK Sharma, N Singh, A Kapoor – arXiv preprint arXiv:1905.02092, 2019 – arxiv.org

… Chung 2011). More than human-level accuracy has been reported for certain tasks like human face recognition (Taigman 2014), traffic signal recognition (Cireșan 2015), MNIST data … 4. Spotify: Discover weekly playlist 5. KFC: Facial recognition for order prediction …

On the Morality of Artificial Intelligence

A Luccioni, Y Bengio – arXiv preprint arXiv:1912.11945, 2019 – arxiv.org

… can therefore continue perpetuating gender bias in downstream usages in Natural Language Processing (NLP) applications such as dialogue systems … privacy and protection and, on the other hand, more local initiatives such as San Francisco’s Facial Recognition Software Ban …

Analysis and use of the emotional context with wearable devices for games and intelligent assistants

GJ Nalepa, K Kutt, B Giżycka, P Jemioło, S Bobek – Sensors, 2019 – mdpi.com

In this paper, we consider the use of wearable sensors for providing affect-based adaptation in Ambient Intelligence (AmI) systems. We begin with discussion of selected issues regarding the applications of affective computing techniques. We describe our experiments for affect change …

Usability evaluation of spoken humanoid embodied conversational agents in mobile serious games

D Korre – 2019 – era.ed.ac.uk

… Abstract The use of embodied conversational agents (ECAs) and spoken dialogue systems in … and emotions. Despite these theoretical advantages, according to recent studies, the interaction with spoken dialogue systems, either in the form of an embodied agent …

Impact of Industry 4.0 Technologies on the Employment of the People with eye Problems: a Case Study on the Spatial Cognition Within Industrial Facilities

D Zubov, N Siniak, A Grencikova – 2019 – elib.belstu.by

… It helps to build a more appropriate interface and dialogue system … hospitality criterion – the remote localization and obstacle detection meet requirements to the accessibility, safety, and problem solving, the ability to apply other assistive technologies, eg face recognition, is the …

CargoAffect: usage of affective computing to assess stress from truck drivers’ verbal communication

IF Goldstein, LVL Filgueiras – … of the 18th Brazilian Symposium on …, 2019 – dl.acm.org

CargoAffect: Usage Of Affective Computing to Assess Stress from Truck Drivers’ Verbal Communication Igor Fillippe Goldstein CargoX São Paulo, SP, Brasil igor.goldstein@cargox.com.br Lucia Vilela Leite Filgueiras …

Artificial intelligence and radiology in Singapore: championing a new age of augmented imaging for unsurpassed patient care

CJ Liew, P Krishnaswamy, LT Cheng… – Ann Acad Med …, 2019 – researchgate.net

… and test-taking robots that can pass medical examinations.7,8 Some AI technologies such as facial recognition and customised … the breach of large-scale personally identifiable information by Facebook and Cambridge Analytica,10 the development of dialogue systems that are …

A survey on deep learning empowered IoT applications

X Ma, T Yao, M Hu, Y Dong, W Liu, F Wang… – IEEE Access, 2019 – ieeexplore.ieee.org

… Such dialogue system based products would function as the next-generation smart home controller … In the following we present how deep learning is applied to traffic video analytics from the three perspectives: object detection, object tracking, and face recognition …

Learning from Others’ Experience

S Höhn – Artificial Companion for Second Language …, 2019 – Springer

… There are many successful attempts to integrate CA into Human-Computer Interaction (HCI) and dialogue modelling to obtain models for human-robot communication and dialogue systems from naturally occurring interaction data and experiments with robots in the wild …

A reference framework and overall planning of industrial artificial intelligence (I-AI) for new application scenarios

X Zhang, X Ming, Z Liu, D Yin, Z Chen… – The International Journal …, 2019 – Springer

… robot strategy, set up the AI Research Centre, and put forward the concept of “super smart society” and “AI comprehensive development Plan (AIP).” In May 2013, in the “Exo-brain program,” South Korea is expected to develop the natural language dialog system for human …

Deep Exemplar Networks for VQA and VQG

BN Patro, VP Namboodiri – arXiv preprint arXiv:1912.09551, 2019 – arxiv.org

Deep Exemplar Networks for VQA and VQG Badri N. Patro, and Vinay P. Namboodiri, Member, IEEE Abstract: In this paper we consider the problem of solving semantic tasks such as ‘Visual Question Answering’ (VQA …

Equity beyond bias in language technologies for education

E Mayfield, M Madaio, S Prabhumoye… – Proceedings of the …, 2019 – aclweb.org

… But high-profile research has repeatedly shown an amplifying effect of machine learning on concrete real-world outcomes, like racial bias in recidivism prediction in judicial hearings (Corbett-Davies et al., 2017), or disproportionate error from facial recognition for dark skin …

A review of artificial intelligence in the Internet of Things

C González García, ER Núñez Valdéz… – … Journal of Interactive …, 2019 – digibuo.uniovi.es

… The use of Deep Learning allows learning data representations. For example, an image can be represented in many ways, but not all of them facilitate the task of face recognition, so, what it does is try to define which is the best representation method to perform this task …

Design and implementation of embodied conversational agents

F Geraci – 2019 – rucore.libraries.rutgers.edu

… of these are user utterances, their semantic meaning, sentiment on this speech and emotional facial recognition, among others. Chapter 4 describes in detail the utilized mechanisms for parsing speech as input, validating and …

A state-of-the-art survey on deep learning theory and architectures

MZ Alom, TM Taha, C Yakopcic, S Westberg, P Sidike… – Electronics, 2019 – mdpi.com

In recent years, deep learning has garnered tremendous success in a variety of application domains. This new field of machine learning has been growing rapidly and has been applied to most traditional application domains, as well as some new areas that present more opportunities …

Jamura: A Conversational Smart Home Assistant Built on Telegram and Google Dialogflow

S Salvi, V Geetha, SS Kamath – TENCON 2019-2019 IEEE …, 2019 – ieeexplore.ieee.org

… Though regularly utilized as a part of a dialog system for functional purposes similar to client administration or data procurement, a Chatbot can be customized to give some assistance for several intents … [12] A. Patel and A. Verma, “Iot based facial recognition door access …

BACHELORS THESIS

ME Adam – 2019 – researchgate.net

… Chatbots can be defined as ”any software application that engages in a (text-based) dialogue with a human using natural language” [Dal16]; they are further known as: conversational dialogue systems, chatterbots, chat robots, or conversational agents (CAs) respectively …

TrojanNet: Exposing the Danger of Trojan Horse Attack on Neural Networks

C Guo, R Wu, KQ Weinberger – 2019 – openreview.net

… Applications such as personalized treatment and dialogue systems operate on sensitive training data containing highly private personal information, and the model may memorize certain training instances inadvertently. Shokri et al. (2017) and Carlini et al …

Seeker: Real-Time Interactive Search

A Biswas, TT Pham, M Vogelsong, B Snyder… – Proceedings of the 25th …, 2019 – dl.acm.org

Seeker: Real-Time Interactive Search Ari Biswas Amazon Seattle, WA aritrb@amazon.com Thai T. Pham Amazon Seattle, WA phamtha@amazon.com Michael Vogelsong Amazon Seattle, WA vogelson@amazon.com …

PHD THESIS SUMMARY

EAM FLOREA, IA AWADA – upb.ro

University Politehnica of Bucharest Automatic Control and Computers Faculty Computer Science and Engineering Department PHD THESIS SUMMARY Multimodal Interfaces for Active and Assisted Living Systems Thesis Supervisor: Author: Prof. Dr. Eng …

Prototyping relational things that talk: a discursive design strategy for conversational AI systems

B Aga – 2019 – pearl.plymouth.ac.uk

PROTOTYPING RELATIONAL THINGS THAT TALK: A DISCURSIVE DESIGN STRATEGY FOR CONVERSATIONAL AI SYSTEMS by BIRGITTE AGA A thesis submitted to the University of Plymouth in partial fulfilment for the degree of DOCTOR OF PHILOSOPHY …

Ubiquitous Web Accessibility

VI Part – Web Accessibility, 2019 – Springer

… The idea to use conversational interface is not new and has a long history. Strothotte et al. (1996) developed a dialogue system to help wayfinding for blind users … On the Web, the system fully integrates face recognition (Schroff et al. 2015; Taigman et al …

Standardized representations and markup languages for multimodal interaction

R Tumuluri, D Dahl, F Paternò… – The Handbook of …, 2019 – dl.acm.org

… the basic image capture code. Nesting. Components can be nested to create complex modality components, such as face-recognition and voice-authentication combined into one biometric verification. In this way the nested …

FRAMING RISK, THE NEW PHENOMENON OF DATA SURVEILLANCE AND DATA MONETISATION; FROM AN ‘ALWAYS-ON’ CULTURE TO ‘ALWAYS-ON’ …

M CUNNEEN, M MULLINS – Hybrid Worlds – researchgate.net

… Moreover, it is expected that Amazon will add more advanced video capabilities to Alexa devices by late 2018 [2]. Face recognition and behavioural … Gunkel, DJ: Computational Interpersonal Communication: Communication Studies and Spoken Dialogue Systems,(2016) 21 …

Adroitness: An Android-based Middleware for Fast Development of High-performance Apps

OJ Romero, SA Akoju – arXiv preprint arXiv:1906.02061, 2019 – arxiv.org

… byte] Listing 2. Rules for ASR activation On Listing 3, we present a case where Rule1 is activated when DRE receives an event from the Facial Recognition (FR) service (given that camera sensor was previously activated), then it triggers the Emotion Recognition (ER) service …

Internet of Things Anomaly Detection using Multivariate Analysis

S Ezekiel, AA Alshehri, L Pearlstein, XW Wu… – The 3rd ICICPE 2019 … – icicpe.org

… developed an English conversation system for elementary school students by combining scenario-based dialog system and English … 3 Conclusion This paper fused machine translation, voice recognition technology and facial recognition-based emotional analysis technology in …

Sensor-based Human–Process Interaction in Discrete Manufacturing

S Knoch, N Herbig, S Ponpathirkoottam… – Journal on Data …, 2019 – Springer

… An efficient automatic align- ment of capability requirements and resource type warrants is achieved, applying lightweight semantic procedures from transaction processing in dialog systems, such as algorithms for unification and pattern matching, cf. [26] …

Computer mediated reality technologies: A conceptual framework and survey of the state of the art in healthcare intervention systems

Z Ibrahim, AG Money – Journal of Biomedical Informatics, 2019 – Elsevier

A review of modularization techniques in artificial neural networks

M Amer, T Maul – Artificial Intelligence Review, 2019 – Springer

… For example, you may think of decomposing some input image to facilitate face recognition. However, since the process of face recognition is not well understood, it is not clear what decomposition is suitable. Is it segmentation of face parts or maybe some filter transformation …

Real world user model: Evolution of user modeling triggered by advances in wearable and ubiquitous computing

F Cena, S Likavec, A Rapp – Information Systems Frontiers, 2019 – Springer

… sensors (Karami et al. 2016; Khalili et al. 2009). smartphone use (Liao et al. 2015). Affects. emotions. camera-based facial recognition (Affectiva 2017). wearable sensors (Guo et al. 2013). sensors and camera (frustration) (Kapoor et al. 2007). wearable sensors (Kapoor et al. 2007 …

The need for common sense in 21st century mental health

JG Pereira, J Gonçalves, V Bizzari – 2019 – vernonpress.com

… The importance of consistency within services and teams is paramount, as demonstrated by the Open Dialogue system which, to operate in its full potential, should be adopted by the entire geographical catchment area (see Seikkula and Alakare, in this volume) …

Engagement and experience of older people with socially assistive robots in home care

R Khosla, MT Chu, SMS Khaksar, K Nguyen… – Assistive …, 2019 – Taylor & Francis

Progress on robotics in hospitality and tourism: a review of the literature

S Ivanov, U Gretzel, K Berezina, M Sigala… – Journal of Hospitality …, 2019 – emerald.com

… More specifically, the topics covered in these studies included robot appearance; mapping, path planning and navigation; collision/obstacle avoidance; vision calibration and image recognition (including object and facial recognition); object manipulation (eg dishes at a …

Enabling deaf or hard of hearing accessibility in live theaters through virtual reality

MRS Teófilo – 2019 – tede.ufam.edu.br

… That is, recognizing speech of humans talking to machines, either reading out loud in read speech (which simulates the dictation task), or conversing with speech dialogue systems, is relatively easy. Recognizing the speech of two …

Ex Machina Lex: The Limits of Legal Computability

C Markou, S Deakin – Available at SSRN 3407856, 2019 – papers.ssrn.com

… 14 Nonetheless, there are compelling cases for prohibiting AI—broadly conceived—in autonomous weapons, facial recognition—and as the French courts have ruled—the use of state legal data for judicial analytics, an important—and potentially lucrative—LegalTech domain …

ELLIOT JONES NICOLINA KALANTERY

BEN GLOVER – 2019 – demos.co.uk

… on machine learning models.8 Since 2016, Google Translate has been using neural networks to improve translation to and from English and live-translate text.9 Facebook uses facial recognition models to … Uber, for example, has developed the Plato Research Dialogue System …

Visual to text: Survey of image and video captioning

S Li, Z Tao, K Li, Y Fu – IEEE Transactions on Emerging Topics …, 2019 – ieeexplore.ieee.org

… For example, social context [16] is able to enhance the face recognition, and thus further facilitate the following caption generation … Further, Nagel and Zimmermann introduced a dialog system in which visual scenes were depicted [62] …

End-to-end Neural Information Retrieval

W Yang – 2019 – uwspace.uwaterloo.ca

End-to-end Neural Information Retrieval by Wei Yang A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master in Computer Science Waterloo, Ontario, Canada, 2019 © Wei Yang 2019 …

Transfer learning: Domain adaptation

U Kamath, J Liu, J Whitaker – Deep Learning for NLP and Speech …, 2019 – Springer

Domain adaptation is a form of transfer learning, in which the task remains the same, but there is a domain shift or a distribution change between the source and the target. As an example, consider a…

The Development of Telepresence Robot

P Duan – 2019 – search.proquest.com

… in order to introduce customers to the “temperature” service such as dish awareness, historical evaluation, and jokes, a voice recognition dialogue system based on the cloud AI … face recognition and other technologies, telepresence robot can be qualified as a salesperson …

Embodiment in socially interactive robots

E Deng, B Mutlu, M Mataric – arXiv preprint arXiv:1912.00312, 2019 – arxiv.org

Embodiment in Socially Interactive Robots Eric Deng University of Southern California denge@usc.edu Bilge Mutlu University of Wisconsin–Madison bilge@cs.wisc.edu Maja J Matarić University of Southern California mataric@usc.edu December 3, 2019 Abstract …

Augmenting Education: Ethical Considerations for Incorporating Artificial Intelligence in Education

D Remian – 2019 – scholarworks.umb.edu

… Applications available on mobile devices can tap into this potential to identify objects in real- time (Vanitha, Jeeva, & Shriman, 2019). Facial recognition and eye-gaze systems can provide additional data for applications to learn from, as well as open the door to new forms of …

From sci-fi to sci-fact: the state of robotics and AI in the hospitality industry

LN Cain, JH Thomas, M Alonso Jr – Journal of Hospitality and Tourism …, 2019 – emerald.com

… reduced. Using AI assistance, a human serving guests can better personalize their service by being prompted about the guest’s history of preferences and by identifying guests by name through facial recognition technology …

Virtual humans: Today and tomorrow

D Burden, M Savin-Baden – 2019 – books.google.com

… The key mind processes of a virtual human; Kismet robot; Research on dialogue systems for language …

AI in Consulting

M Bayati – 2019 – theseus.fi

AI in Consulting Mutasim Bayati Bachelor’s Thesis Degree Programme in Business Information Technology 2019 Abstract 20.11.2019 Author(s) Mutasim Bayati Degree programme Business Information Technology Report/thesis title AI in Consulting …

Unlocking Data to Improve Public Policy

B Certified, COS Kernels – Communications of the ACM, 2019 – dl.acm.org

Unlocking Data to Improve Public Policy Closing in on Quantum Error Correction Building Certified Concurrent OS Kernels Consumer-Grade Fabrication to Revolutionize Accessibility COMMUNICATIONS OF THE ACM CACM.ACM.ORG 10/2019 VOL.62 NO.10 …

Survey of deep learning and architectures for visual captioning—transitioning between media and natural languages

C Sur – Multimedia Tools and Applications, 2019 – Springer

… prediction mainly for large scale data processing. Improved version of Memory Networks model to reasoning and natural language for building intelligent dialogue system has been described in [150]. It is a framework and a …

A Comprehensive Review of Ethical Frameworks in Natural Language Processing

PF North – 2019 – scss.tcd.ie

… If we train a model to detect criminality through facial recognition, by training a model on classification based on facial features such … al (Braunger et al., 2016) observes similar ethical challenges when making use of crowdsourced material for spoken dialog systems in vehicles …

End-to-end information extraction from business documents

RB Palm – 2019 – core.ac.uk

… The use of deep CNNs have lead to breakthroughs in object detection [Krizhevsky et al., 2012], face recognition [Taigman et al., 2014], image segmentation [Ronneberger et al., 2015], and “ConvNets are now the dominant app…

Towards Diverse Paraphrase Generation Using Multi-Class Wasserstein GAN

Z An, S Liu – arXiv preprint arXiv:1909.13827, 2019 – arxiv.org

… semantic. The automatic paraphrase generation of a given sentence is an important NLP task, which can be applied in many fields such as information retrieval, question answering, text summarization, dialogue system, etc …

Cognitive Computing Recipes

A Masood, A Hashmi – Springer

Cognitive Computing Recipes: Artificial Intelligence Solutions Using Microsoft Cognitive Services and TensorFlow. Adnan Masood, Adnan Hashmi. Foreword by Matt Winkler …

of deliverable First periodic report

J van Loon, H op den Akker, T Beinema, M Broekhuis… – 2019 – council-of-coaches.eu

… Not all doctors will have this time available, but fortunately we are developing a dialogue system with which the end user can interact … Current work is being performed on facial recognition and speech analysis sensors to detect the emotional state of the user …

Quantifying Internal Representation for Use in Model Search

NT Blanchard – 2019 – search.proquest.com

QUANTIFYING INTERNAL REPRESENTATION FOR USE IN MODEL SEARCH A Dissertation Submitted to the Graduate School of the University of Notre Dame in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Nathaniel T. Blanchard …

DATE OF PUBLICATION July 2019

T Walsh, N Levy, G Bell, A Elliott, J Maclaurin… – 2019 – researchgate.net

… 90 3.4.2 The psychological impact of AI 91 3.5 Changing social interactions 93 3.5.1 Spoken and text-based dialogue systems 93 3.5.2 Digital-device-distraction syndrome 94 … It is well known, for example, that smart facial recognition technologies have often been inaccurate …

On the feasibility of transfer-learning code smells using deep learning

T Sharma, V Efstathiou, P Louridas… – arXiv preprint arXiv …, 2019 – arxiv.org

… CNNs have been proven particularly effective for problems of optical recognition and are widely used for image classification and detection [45, 49, 90], segmentation of regions of interest in biological images [43], and face recognition [47, 72] …

AI & Quantum Computing For Finance & Insurance: Fortunes And Challenges For China And America

DKC Lee, P Schulte – 2019 – books.google.com

Singapore University of Social Sciences – World Scientific: Future Economy Series: AI & Quantum Computing for Finance & Insurance: Fortunes and Challenges for China and America Paul SCHULTE David LEE Kuo Chuen …

The secret sharer: Evaluating and testing unintended memorization in neural networks

N Carlini, C Liu, Ú Erlingsson, J Kos… – 28th {USENIX} Security …, 2019 – usenix.org

… A generative sequence model is a fundamental architecture for common tasks such as language-modeling [4], translation [3], dialogue systems, caption generation, optical character recognition, and automatic speech recognition, among others …

A comparative study of social bot classification techniques

F Örnbratt, J Isaksson, M Willing – 2019 – diva-portal.org

… Chatbots (natural language based dialog system) • Spambots (bots that advertise and post spam on online messaging platforms) … recognition where the process is simple for us humans but it is really hard to convert speech and face recognition into a traditional algorithm …

Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. Healthcare Applications: 10th International Conference, DHM …

VG Duffy – 2019 – books.google.com

… 391 Shota Nakatani, Sachio Saiki, Masahide Nakamura, and Kiyoshi Yasuda Design of Coimagination Support Dialogue System with Pluggable Dialogue System – Towards Long-Term Experiment …

Subsidia: Tools and Resources for Speech Sciences

JME Lahoz-Bengoechea, RE Pérez Ramón – 2019 – riuma.uma.es

SUBSIDIA: Tools and resources for speech sciences José María Lahoz-Bengoechea and Rubén Pérez Ramón (Eds.) Universidad de Málaga Copyright: © 2019 Universidad de Málaga …

Contextual language understanding Thoughts on Machine Learning in Natural Language Processing

B Favre – 2019 – hal-amu.archives-ouvertes.fr

… The ELIZA chatbot (Weizenbaum 1976) or contestants to the Loebner Prize competition (Stephens 2004) are dialog systems which rely on conversational tricks in order to evade difficult questions (such as invoking boredom, switching topics, etc.) Machine Translation is …

TEAP 2019 Conference Programme

C Lange-Küttner – Tagung experimentell arbeitender …, 2019 – psycharchives.org

TEAP 2019 Conference Programme Tagung Experimentell Arbeitender Psycholog(inn)en Annual Meeting of Experimental Psychologists Christiane Lange-Küttner TEAP Chair Thanks are due to the Head of …

A theory on deep neural network based vector-to-vector regression with an illustration of its expressive power in speech enhancement

J Qi, J Du, SM Siniscalchi… – IEEE/ACM Transactions on …, 2019 – ieeexplore.ieee.org

… However, the classification tasks, such as speech recognition and face recognition, are bounded vector-to-vector regressions from R^d → [0, 1]^q. In this sense, the classification tasks can be considered as special cases of regression ones …