Notes:

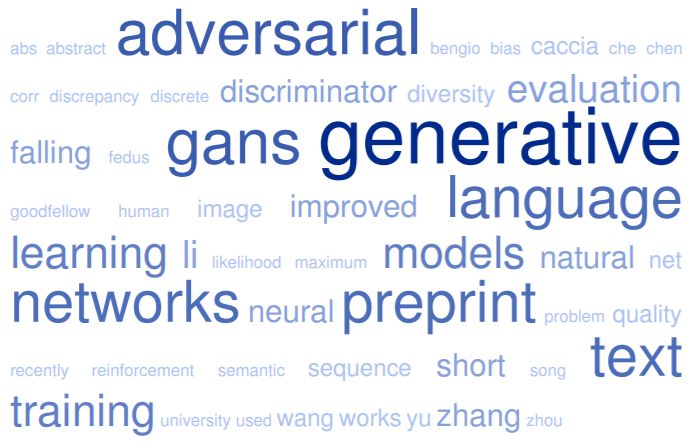

A language GAN (Generative Adversarial Network) is a machine learning model designed to generate natural language text. It trains two neural networks against each other: a generator, which produces text, and a discriminator, which tries to tell generated text apart from real human-written text. Training is adversarial: the generator is updated to produce text the discriminator cannot distinguish from real text, while the discriminator is updated to separate the two as accurately as it can.
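
The two competing goals can be written as a pair of loss functions. The following is a minimal sketch, assuming the discriminator outputs a probability that a text is real; the function names and the non-saturating form of the generator loss are choices made here for illustration, not something the notes specify.

```python
import math

def discriminator_loss(p_real, p_fake):
    """Binary cross-entropy for the discriminator.

    p_real: discriminator outputs on human-written samples (should be near 1).
    p_fake: discriminator outputs on generated samples (should be near 0).
    """
    real_term = sum(math.log(p) for p in p_real) / len(p_real)
    fake_term = sum(math.log(1.0 - p) for p in p_fake) / len(p_fake)
    return -(real_term + fake_term)

def generator_loss(p_fake):
    """Non-saturating generator loss (an assumed variant):
    low when the discriminator rates generated text as real."""
    return -sum(math.log(p) for p in p_fake) / len(p_fake)

# A discriminator that cannot tell the sources apart outputs 0.5 everywhere.
confused = discriminator_loss([0.5, 0.5], [0.5, 0.5])
# A discriminator that separates them well has a much lower loss.
confident = discriminator_loss([0.9, 0.95], [0.1, 0.05])
```

Lowering one loss tends to raise the other, which is what makes the process adversarial.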

In a GAN, the discriminator is a binary classifier that distinguishes generated data from real data. In a language GAN, it is trained on a set of human-written examples and generator samples to classify each text as either real or generated.
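
To make the "discriminator as classifier" idea concrete, here is a minimal sketch that uses logistic regression over bag-of-words counts in place of a neural network. The toy sentences, the feature choice, and all function names are invented for illustration; a practical discriminator would be a trained neural text classifier.

```python
import math

def featurize(text, vocab):
    """Bag-of-words counts over a fixed vocabulary."""
    tokens = text.lower().split()
    return [tokens.count(word) for word in vocab]

def predict(weights, bias, features):
    """Probability that the text is real (human-written)."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def train_discriminator(real_texts, fake_texts, vocab, lr=0.1, epochs=500):
    """Fit logistic regression by stochastic gradient descent on cross-entropy."""
    data = [(featurize(t, vocab), 1.0) for t in real_texts]
    data += [(featurize(t, vocab), 0.0) for t in fake_texts]
    weights, bias = [0.0] * len(vocab), 0.0
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label  # dLoss/dz
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias

# Toy data (hypothetical): "real" text is varied, "generated" text repeats itself.
real_texts = ["the cat sat on the mat", "a dog chased the ball"]
fake_texts = ["the the the the cat", "ball ball the the the"]
vocab = sorted({w for t in real_texts + fake_texts for w in t.split()})

weights, bias = train_discriminator(real_texts, fake_texts, vocab)
scores_real = [predict(weights, bias, featurize(t, vocab)) for t in real_texts]
scores_fake = [predict(weights, bias, featurize(t, vocab)) for t in fake_texts]
```

After training, the scores for the real sentences should sit above 0.5 and those for the repetitive sentences below it.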

The role of the discriminator in a GAN is to give the generator feedback on the quality of its output. For each text the generator produces, the discriminator returns a score estimating how likely that text is to be real. This score is the training signal used to adjust the generator’s parameters and improve the quality of the generated text.
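
One common way to turn the discriminator's score into a parameter update for text is to treat it as a reward and apply a policy-gradient (REINFORCE) step. The notes do not name a specific update rule, so this technique is an assumption here. In the sketch below the generator is a toy unigram model, and `stand_in_discriminator` is a hypothetical fixed scorer rather than a trained network.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "zzz"]  # hypothetical toy vocabulary

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def stand_in_discriminator(tokens):
    """Hypothetical fixed scorer in [0, 1]: fraction of tokens that are not 'zzz'.
    A real discriminator would be a trained classifier."""
    return sum(t != "zzz" for t in tokens) / len(tokens)

def reinforce_step(logits, rng, baseline, seq_len=5, lr=0.2):
    """One REINFORCE update of a unigram generator, using the score as reward."""
    probs = softmax(logits)
    indices = rng.choices(range(len(VOCAB)), weights=probs, k=seq_len)
    reward = stand_in_discriminator([VOCAB[i] for i in indices])
    advantage = reward - baseline
    # Gradient of log p(sequence) w.r.t. logit j is count_j - seq_len * probs[j].
    counts = [indices.count(j) for j in range(len(VOCAB))]
    new_logits = [
        logit + lr * advantage * (counts[j] - seq_len * probs[j])
        for j, logit in enumerate(logits)
    ]
    return new_logits, reward

rng = random.Random(0)
logits = [0.0] * len(VOCAB)  # the generator starts out uniform
baseline = 0.0
initial_p_zzz = softmax(logits)[VOCAB.index("zzz")]
for step in range(2000):
    logits, reward = reinforce_step(logits, rng, baseline)
    baseline = 0.9 * baseline + 0.1 * reward  # running average reduces variance
final_p_zzz = softmax(logits)[VOCAB.index("zzz")]
```

Because sequences containing `zzz` earn lower scores, the generator's probability of emitting it should fall over the course of training.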

- Generative Adversarial Networks (GANs) are machine learning models designed to generate data that is indistinguishable from real data. A generator produces data, and a discriminator tries to distinguish that data from real data. The two networks are trained adversarially: the generator learns to produce data the discriminator cannot tell from real data, while the discriminator learns to tell the two apart accurately.

- A natural language GAN is a GAN designed specifically to generate natural language text. Its generator produces text, and its discriminator tries to distinguish that text from real human-written text.

- A text GAN is a GAN that generates text rather than other types of data. It works the same way as a natural language GAN: a generator and a discriminator are trained against each other so that the generated text is high quality and hard to distinguish from real text.
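
The adversarial process described in the first definition above is usually written as a minimax objective. The notation below is the standard GAN formulation rather than something stated in these notes: $D(x)$ is the discriminator's estimate of the probability that $x$ is real, $G(z)$ is the generator's output for a random input $z$, $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the distribution of the generator's input noise.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones; the generator minimizes $V$ by producing samples that the discriminator rates as real.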

See also:

100 Best Generative Adversarial Network Videos

- Caccia, M., Caccia, L., Fedus, W., Larochelle, H., Pineau, J., & Charlin, L. (2018). Language GANs falling short. arXiv preprint arXiv:1811.02549.

- Chen, X., Cai, P., Jin, P., Wang, H., Du, H., Wang, X., & Chen, J. (2019). The detection of distributional discrepancy for text generation. arXiv preprint arXiv:1912.10176.

- Clark, K., Luong, M. T., & Le, Q. V. (2020). ELECTRA: Pre-training text encoders as discriminators rather than generators. arXiv preprint arXiv:2003.10555.

- d’Autume, C. M., Rosca, M., Rae, J., & Mohamed, S. (2019). Training language GANs from scratch. Advances in Neural Information Processing Systems, 32.

- Fan, X., Zhang, Y., Wang, Z., & Zhou, M. (2019). Adaptive correlated Monte Carlo for contextual categorical sequence generation. arXiv preprint arXiv:1912.13151.

- Fedus, W., Goodfellow, I., & Dai, A. M. (2018). MaskGAN: Better text generation via filling in the _. arXiv preprint arXiv:1801.07736.

- Gui, J., Sun, Z., Wen, Y., Tao, D., & Ye, J. (2020). A Review on Generative Adversarial Networks: Algorithms, Theory, and Applications. arXiv preprint arXiv:2001.06937.

- Haidar, M., Rezagholizadeh, M., Do-Omri, A., & Cardin, S. (2019). Latent code and text-based generative adversarial networks for soft-text generation. arXiv preprint arXiv:1902.01294.

- Hashimoto, T. B., Zhang, H., & Liang, P. (2019). Unifying human and statistical evaluation for natural language generation. arXiv preprint arXiv:1904.02792.

- Holtzman, A., Buys, J., Du, L., Forbes, M., & Choi, Y. (2019). The curious case of neural text degeneration. arXiv preprint arXiv:1904.09751.

- Lee, D., & Choi, H. (2018). Text to game characterization: A starting point for generative adversarial video composition. In 2018 IEEE International Conference on Big Data (Big Data) (pp. 3653-3658). IEEE.

- Liu, G., Hsu, T. M. H., McDermott, M., Boag, W., & Chen, P. Y. (2019). Clinically accurate chest X-ray report generation. arXiv preprint arXiv:1912.10939.

- Lu, S., Yu, L., Feng, S., Zhu, Y., Zhang, W., & Yu, Y. (2018). CoT: Cooperative training for generative modeling of discrete data. arXiv preprint arXiv:1804.03782.

- Scialom, T., Dray, P. A., Lamprier, S., Piwowarski, B., Staiano, J., Aisopos, F., & Lauscher, A. (2020). Discriminative adversarial search for abstractive summarization. arXiv preprint arXiv:2002.10958.

- Semeniuta, S., Severyn, A., & Gelly, S. (2018). On accurate evaluation of GANs for language generation. arXiv preprint arXiv:1806.04936.

- Tevet, G., Habib, G., Shwartz, V., & Berant, J. (2018). Evaluating text GANs as language models. arXiv preprint arXiv:1810.12686.

- Vlachostergiou, A., Caridakis, G., Mylonas, P., & Korakis, T. (2018). Learning representations of natural language texts with generative adversarial networks at document, sentence, and aspect level. Algorithms, 11(10), 164.

- Wu, Q., Li, L., & Yu, Z. (2020). TextGAIL: Generative adversarial imitation learning for text generation. arXiv preprint arXiv:2004.13796.

- Ying, H., Li, D., Li, X., & Li, P. (2020). Meta-CoTGAN: A Meta Cooperative Training Paradigm for Improving Adversarial Text Generation. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence.

- Zhang, R., Chen, C., Gan, Z., Wang, W., Shen, D., Zhang, S., … & Wang, L. (2020). Improving adversarial text generation by modeling the distant future. arXiv preprint arXiv:2005.11672.