Notes:

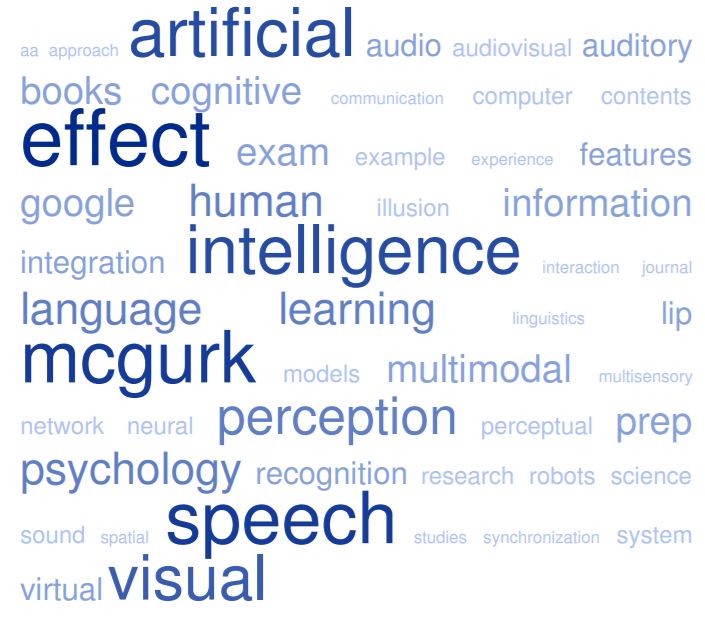

The McGurk Effect is a perceptual phenomenon that demonstrates how visual information can influence the perception of speech sounds. It is named after Harry McGurk, who first described it with John MacDonald in 1976. The effect occurs when a person watches a video of someone articulating one syllable (such as “ga”) while simultaneously hearing a different one (such as “ba”). The person often perceives a third sound that blends the visual and auditory information (such as “da”). This illustrates the influence that visual cues can have on the perception of speech sounds, and highlights the complex interactions between the visual and auditory systems in the brain.
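One way to picture how two conflicting cues can yield a third percept is a multiplicative integration rule, loosely in the spirit of Massaro's fuzzy logical model of perception. The sketch below is only an illustration: the function name `fuse` and all support values are invented for this example, not measured data. It pairs a heard “ba” with a seen “ga”, the pairing used in the classic demonstration.

```python
def fuse(auditory, visual):
    """Multiply per-syllable support from each modality, then normalize to sum to 1."""
    combined = {syllable: auditory[syllable] * visual[syllable] for syllable in auditory}
    total = sum(combined.values())
    return {syllable: value / total for syllable, value in combined.items()}


# Hypothetical support values (0..1) for each candidate syllable.
# The audio track says "ba": strong support for "ba", some for the similar "da".
auditory_ba = {"ba": 0.60, "da": 0.35, "ga": 0.05}
# The lips articulate "ga": the open mouth rules out "ba" but is compatible with "da".
visual_ga = {"ba": 0.02, "da": 0.50, "ga": 0.48}

fused = fuse(auditory_ba, visual_ga)
percept = max(fused, key=fused.get)
print(percept, round(fused[percept], 2))
```

With these made-up numbers, “da” wins because it is the only candidate that both modalities find plausible, even though neither modality ranks it first on its own.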

The McGurk Effect is closely related to lip synchronization, as both involve the integration of visual and auditory information in the perception of speech. Lip synchronization, also known as lip sync, refers to the matching of the movement of an animated or virtual character’s lips with pre-recorded speech.

In lip synchronization, the goal is to create the illusion that the character is speaking the pre-recorded speech by making the lip movements match the speech sounds as closely as possible. However, if the visual and auditory information do not match perfectly, it can create the same kind of perceptual illusion as the McGurk Effect, where the viewer perceives a different sound than what is actually being spoken.

Therefore, the McGurk Effect provides important insights for the development of lip synchronization techniques in animation, computer graphics, and virtual reality. For example, audio-driven facial animation relies on the same audiovisual integration that the McGurk Effect exposes: it uses the audio of a speech recording to drive the animation of the lips, producing realistic lip movements that match the speech sounds.
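As a rough illustration of audio-driven lip animation, the sketch below maps timed phonemes to mouth shapes (visemes) and merges consecutive identical shapes into keyframes. Everything here is an assumption made for the example: the phoneme-to-viseme table is heavily simplified, the names `PHONEME_TO_VISEME`, `Keyframe`, and `phonemes_to_keyframes` are hypothetical, and the timed phonemes are assumed to come from some upstream speech analysis step that is not shown.

```python
from dataclasses import dataclass

# Hypothetical, heavily simplified phoneme-to-viseme table.
PHONEME_TO_VISEME = {
    "b": "closed", "p": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "d": "tongue_alveolar", "t": "tongue_alveolar",
    "g": "back_open", "k": "back_open",
    "a": "open_wide", "o": "rounded", "u": "rounded",
}


@dataclass
class Keyframe:
    start: float  # seconds from the start of the audio
    end: float    # seconds from the start of the audio
    viseme: str   # mouth shape to display during [start, end)


def phonemes_to_keyframes(timed_phonemes):
    """Convert (phoneme, start, end) tuples into merged viseme keyframes."""
    keyframes = []
    for phoneme, start, end in timed_phonemes:
        # Unknown phonemes fall back to a neutral mouth shape.
        viseme = PHONEME_TO_VISEME.get(phoneme, "neutral")
        if keyframes and keyframes[-1].viseme == viseme:
            # Same mouth shape as the previous phoneme: extend that keyframe.
            keyframes[-1].end = end
        else:
            keyframes.append(Keyframe(start, end, viseme))
    return keyframes


timeline = phonemes_to_keyframes(
    [("b", 0.00, 0.08), ("a", 0.08, 0.25), ("m", 0.25, 0.33), ("p", 0.33, 0.40)]
)
for frame in timeline:
    print(f"{frame.start:.2f}-{frame.end:.2f}s {frame.viseme}")
```

Because “m” and “p” share the same closed-lip shape, they collapse into one keyframe. Many distinct sounds look alike on the lips in this way, which is part of why a mismatched audio track can shift what the viewer hears.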

The McGurk Effect is related to virtual humans in the sense that it highlights the importance of accurately synchronizing visual and auditory information in the creation of realistic virtual human characters.

Virtual humans are computer-generated characters that are designed to interact with humans in a natural and believable way. They are often used in fields such as entertainment, education, and communication. In order to create a realistic virtual human, it is important to accurately synchronize the visual and auditory information, as the McGurk Effect illustrates that even small discrepancies can lead to a perception that is different from what was intended.

For example, for virtual humans used in virtual reality or augmented reality, synchronizing the character’s lip movement with the speech audio creates a more realistic and engaging experience for the user. Similarly, for virtual humans used in video games or animations, the same synchronization makes the character more believable.
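A simple way to check synchronization is to compare when each speech sound starts in the audio with when the matching mouth movement starts on screen. The sketch below is a minimal illustration under stated assumptions: the onset times are invented, the function names are hypothetical, and the 200 ms tolerance is borrowed from the integration window mentioned in one of the Massaro snippets listed further down this page. A real production pipeline would likely use a tighter threshold.

```python
# Assumed tolerance in seconds; taken from the ~200 ms integration window
# quoted elsewhere on this page, not from any production standard.
INTEGRATION_WINDOW_S = 0.200


def av_offsets(audio_onsets, visual_onsets):
    """Return visual-minus-audio onset differences, pairing events by position."""
    return [visual - audio for audio, visual in zip(audio_onsets, visual_onsets)]


def out_of_sync(audio_onsets, visual_onsets, tolerance=INTEGRATION_WINDOW_S):
    """Return indices of events whose lip movement leads or lags the audio too much."""
    offsets = av_offsets(audio_onsets, visual_onsets)
    return [index for index, offset in enumerate(offsets) if abs(offset) > tolerance]


# Hypothetical onset times in seconds for three syllables.
audio = [0.00, 0.50, 1.00]
lips = [0.03, 0.78, 1.05]

flagged = out_of_sync(audio, lips)
print(flagged)
```

Here only the second syllable is flagged, because its lip movement starts about 280 ms after the sound, while the other two stay within the assumed window.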

See also:

Automatic Lip Synchronization (Lipsync) | Multimodal Dialog Systems

Speech intelligibility of virtual humans

A Devesse, A Dudek, A van Wieringen… – International journal of …, 2018 – Taylor & Francis

… This is illustrated behaviourally by the McGurk effect where an auditory /bA/ syllable is often perceived as /dA/ when presented together with an incongruent … Direct comparison of speech reading scores on our virtual humans with scores on other virtual human speakers is …

Evaluating effects of listening to content with lip-sync animation on head mounted displays

N Isoyama, T Terada, M Tsukamoto – … and Proceedings of the 2017 ACM …, 2017 – dl.acm.org

… As research on auditory and visual perception, the McGurk effect [1] is a compelling demonstration of how we all use visual speech information … In this paper, we investigate the influence of listening to a voice while watching a lip-sync animation on an HMD in which a …

Sentiment Analysis of Telephone Conversations Using Multimodal Data

AG Logumanov, JD Klenin, DS Botov – International Conference on …, 2018 – Springer

… In this paper, we explore the possibilities of artificial intelligence in the task of multimodal sentiment detection in telephone conversations of a … Multimodal machine learning began in the 1970s [2], with the discovery of the McGurk effect, showing the interaction between hearing …

Audio-visual scene analysis with self-supervised multisensory features

A Owens, AA Efros – Proceedings of the European …, 2018 – openaccess.thecvf.com

… Perhaps the most compelling demonstration of this phenomenon is the McGurk effect [5], an illusion in which visual motion of a mouth changes one’s interpretation of a spoken sound. Hearing can also influence vision: the timing of a sound, for instance, affects whether we …

A Surprising Density of Illusionable Natural Speech

MY Guan, G Valiant – arXiv preprint arXiv:1906.01040, 2019 – arxiv.org

… Thirty-second AAAI conference on artificial intelligence, 2018 … Enhanced audiovisual integration with aging in speech perception: a heightened mcgurk effect in older adults. Frontiers in Psychology, 5:323, 2014 … Synthesizing obama: learning lip sync from audio …

Visual speech recognition for isolated digits using discrete cosine transform and local binary pattern features

A Jain, GN Rathna – 2017 IEEE Global Conference on Signal …, 2017 – ieeexplore.ieee.org

… 371 Page 5. 5. REFERENCES [1] Tiippana, Kaisa.“What is the McGurk Effect?” Frontiers in Psychology 5 (2014): 725. PMC. Web … “Solving Multiclass Learning Problems via Error-correcting Output Codes.” Journal of artificial intelligence research 2 (1995): 263-286 …

Dialogue Writing Itinerary

GS Miggiani – Dialogue Writing for Dubbing, 2019 – Springer

… of lip synchronization further enhances modified auditory perception to the point of possibly causing sporadic misunderstanding or misinterpretation of the dialogue. Phonetic dischrony, that is, mismatched visual and auditory stimuli, provokes a strong McGurk effect on viewers …

Reducing playback rate of audiovisual speech leads to a surprising decrease in the McGurk effect

JF Magnotti, DB Mallick, MS Beauchamp – Multisensory Research, 2018 – brill.com

… Reducing Playback Rate of Audiovisual Speech Leads to a Surprising Decrease in the McGurk Effect. in Multisensory … We report the unexpected finding that slowing video playback decreases perception of the McGurk effect. This reduction …

Book Review in press, American Journal of Psychology.

N Chater, K Edition – 2018 – pdfs.semanticscholar.org

… integrate audible and visible speech when they occur within a reasonable window of time (200 ms) but not when their asynchrony exceeds this value (in the McGurk effect, Massaro, 1998) … Expertise is laid waste by describing how expert systems in Artificial Intelligence failed …

A virtual speaker in noisy classroom conditions: supporting or disrupting children’s listening comprehension?

J Nirme, M Haake, V Lyberg Åhlander… – Logopedics …, 2019 – Taylor & Francis

… The so-called McGurk effect refers to how seeing synchronous lip movements affect perception of spoken syllables [8 … The terms Virtual Humans, Virtual Agents, and Embodied Conversational Agents [10 Cassell J, Pelachaud C, Badler NI, et al … Int J Artificial Intelligence Educ …

Multi-view visual speech recognition based on multi task learning

HJ Han, S Kang, CD Yoo – 2017 IEEE International Conference …, 2017 – ieeexplore.ieee.org

… For instance, different visual cues can provide varied perception of speech, which is known as the McGurk effect[1]. Likewise, visual speech recognition (VSR) can enhance the performance of … [11] Joon Son Chung and Andrew Zisserman, “Out of time: automated lip sync in the …

Multi-task learning of deep neural networks for audio visual automatic speech recognition

A Thanda, SM Venkatesan – arXiv preprint arXiv:1701.02477, 2017 – arxiv.org

… This was inspired by the fact that human perception of speech is dependent on both auditory and visual senses as demonstrated by the famous McGurk effect[1]. Traditionally, AV-ASR systems were implemented using GMM/HMM models [2, 3, 4, 5]. One way(called decision …

Unobtrusive Vital Data Recognition by Robots to Enhance Natural Human–Robot Communication

G Bieber, M Haescher, N Antony, F Hoepfner… – … , Societal and Ethical …, 2019 – Springer

… Since developments in machine learning and artificial intelligence drive the capabilities of robots, new application fields arise, such as that of … of communication modalities enhances the total understanding and reliability of information exchange (eg, McGurk effect) (Nath, 2012) …

Learning weakly supervised multimodal phoneme embeddings

R Chaabouni, E Dunbar, N Zeghidour… – arXiv preprint arXiv …, 2017 – arxiv.org

… doi.org/10.1016%2Fs0163-6383%2884%2980050-8 [3] LD Rosenblum, MA Schmuckler, and JA Johnson, “The mcgurk effect in infants … Signature verification using a”siamese” time delay neural network,” International Journal of Pattern Recognition and Artificial Intelligence, vol …

The Richness of Flatness

D Massaro – 2019 – JSTOR

… integrate audible and visible speech when they occur within a reasonable window of time (200 ms) but not when their asynchrony exceeds this value (in the McGurk effect; Massaro, 1998) … Expertise is laid waste by describing how expert systems in artificial intelligence failed …

Three-Dimensional Joint Geometric-Physiologic Feature for Lip-Reading

J Wei, F Yang, J Zhang, R Yu, M Yu… – … Artificial Intelligence …, 2018 – ieeexplore.ieee.org

… I. INTRODUCTION As the McGurk effect [1] proved that visual information plays an essential role in speech perception, humans obtain linguistic information from not only the auditory modality but also … 2018 IEEE 30th International Conference on Tools with Artificial Intelligence …

Touchless heart rate Recognition by Robots to support natural Human-Robot Communication

G Bieber, N Antony, M Haescher – Proceedings of the 11th PErvasive …, 2018 – dl.acm.org

… Since developments in machine learning and artificial intelligence drive the capabilities of robots, new application fields such as that of … combination of communication modalities enhances the total understanding and reliability of information exchange (eg McGurk-Effect) [3]. We …

Validating a method to assess lipreading, audiovisual gain, and integration during speech reception with cochlear-implanted and normal-hearing subjects using a …

S Schreitmüller, M Frenken, L Bentz, M Ortmann… – Ear and …, 2018 – journals.lww.com

Objectives: Watching a talker’s mouth is beneficial for speech reception (SR) in many communicatio.

A neurorobotic experiment for crossmodal conflict resolution in complex environments

GI Parisi, P Barros, D Fu, S Magg, H Wu… – 2018 IEEE/RSJ …, 2018 – ieeexplore.ieee.org

… Widely studied illusions are the spatial ventriloquism effect [7], where the auditory stimulus is perceptually shifted towards the position of the synchronous visual one, and the McGurk effect [8], in which a mismatched audio-visual stimulus of vocalizing a sound leads to the …

Should Mobile Robots Have a Head?

P Soueres – Biomimetic and Biohybrid Systems: 7th International …, 2018 – books.google.com

… For instance, the McGurk effect [15] and other experiences of sensory enrichment [6, 7, 9] are evidences that the addition or the removal of a sensory modality makes it … Legreneur, P., Laurin, M., Bels, V.: Predator-prey interactions paradigm: a new tool for artificial intelligence …

Happily entangled: prediction, emotion, and the embodied mind

M Miller, A Clark – Synthese, 2018 – Springer

… resulting circular causality between perception and action fits comfortably with many formulations in embodied cognition and artificial intelligence; for example … In the case of the McGurk effect (see McGurk and MacDonald 1976) for example, we allow visual information from a …

Towards reasoning based representations: Deep consistence seeking machine

A Lőrincz, M Csákvári, Á Fóthi, ZÁ Milacski… – Cognitive Systems …, 2018 – Elsevier

… we exploited inference methods based on our knowledge or knowledge bases that point to the strengths of classical artificial intelligence procedures … and component based inferences may become the source of new problems, eg, like in the case of the McGurk effect (McGurk & …

Immersive Spatial Audio Reproduction for VR/AR Using Room Acoustic Modelling from 360° Images

H Kim, L Remaggi, P Jackson… – Proceedings IEEE …, 2019 – epubs.surrey.ac.uk

… Index Terms: Human-centered computing—Human computer interaction (HCI)—Interaction paradigms—Virtual reality; Computing methodologies—Artificial intelligence—Computer vision … such as VR, audio perception is biased by the visual side (eg the McGurk effect [28]) …

Evaluation of Artificial Mouths in Social Robots

Á Castro-González, J Alcocer-Luna… – … on Human-Machine …, 2018 – ieeexplore.ieee.org

… The well-known McGurk Effect proved this in the 1970s [4]. McGurk and MacDonald showed that the perception of speech changed depending on … evaluated the importance of lip-sync in an android robot where the mouth shape changed according to the vowel sounds played …

Teaching Turkish as a foreign language: extrapolating from experimental psychology

D Erdener – Dil ve Dilbilimi Çalışmaları Dergisi, 2017 – dergipark.org.tr

… Asiaee, M., Kivanani, NH & Nourbakhsh, M. (2016). A Comparative Study of McGurk Effect in Persian and Kermanshahi Kurdish … J. & Davis, C. (2001). Repeating and remembering foreign language words: Implications for language teaching system. Artificial Intelligence Review, 16 …

Attention to affective audio-visual information: Comparison between musicians and non-musicians

J Weijkamp, M Sadakata – Psychology of Music, 2017 – journals.sagepub.com

Individuals with more musical training repeatedly demonstrate enhanced auditory perception abilities. The current study examined how these enhanced auditory ski…

Exam Prep for: Substance Abuse and Addiction Treatment…

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: Substance Abuse and Addiction Treatment Practical Application of Counseling Theory MyLab Counseling without Pearson eText – Access Card Package Ebook Edition Page 2. Table …

Effects of Educational Context on Learners’ Ratings of a Synthetic Voice.

NN Chiaráin, AN Chasaide – SLaTE, 2017 – isca-speech.org

… In the context of interactive CALL platforms, where natural language processing/artificial intelligence is used to create chatbot-type platforms where … The phenomenon known as the ‘McGurk Effect’, for example, illustrates the multimodal nature of speech perception since it takes …

Should mobile robots have a head?

F Bailly, E Pouydebat, B Watier, V Bels… – … on Biomimetic and …, 2018 – Springer

… For instance, the McGurk effect [15] and other experiences of sensory enrichment [6, 7, 9] are evidences that the addition or the removal of a sensory modality makes it … Legreneur, P., Laurin, M., Bels, V.: Predator-prey interactions paradigm: a new tool for artificial intelligence …

Audio visual speech recognition with multimodal recurrent neural networks

W Feng, N Guan, Y Li, X Zhang… – 2017 International Joint …, 2017 – ieeexplore.ieee.org

… Even for people with normal hearing, Lipreading can help people to understand speeches, especially in noisy environments [1], [2]. The relationship between audio and visual information can be demonstrated by McGurk effect [3] where conflicting audio and visual stimuli can …

Mylipper: A personalized system for speech reconstruction using multi-view visual feeds

Y Kumar, R Jain, M Salik, R ratn Shah… – … on Multimedia (ISM), 2018 – ieeexplore.ieee.org

… lipreading to better understand speech [4]. An extreme example of the use of visual modality is shown by the McGurk effect [5]. It showed that when subjects were shown /ga/ with the sound of /ba/, most of them perceived it as /da …

Apparent Personality Prediction using Multimodal Residual Networks with 3D Convolution

S Iacob – 2018 – theses.ubn.ru.nl

… Convolution Bachelor Thesis Artificial Intelligence Stefan Iacob – S4575121 Supervisors … The robustness, as well as the failure of such mechanisms can be observed for example in the McGurk effect [2]. In this experiment, subjects have to report what syllable they hear. When …

Privacy-Preserving Adversarial Representation Learning in ASR: Reality or Illusion?

BML Srivastava, A Bellet, M Tommasi… – Annual Conference of …, 2019 – isca-speech.org

… X. Li, S. Chen, J. Zhang, and I. Marsic, “Speech intention classification with multimodal deep learning,” in Canadian Conference on Artificial Intelligence, 2017, pp … [20] K. Sekiyama, “Cultural and linguistic factors in audiovisual speech processing: The McGurk effect in Chinese …

Expectation Learning and Crossmodal Modulation with a Deep Adversarial Network

P Barros, GI Parisi, D Fu, X Liu… – 2018 International Joint …, 2018 – ieeexplore.ieee.org

… In diverse experiments, semantic congruence shows to be important for the unity assumption, as explained in the review of Chen and Spence [9]. They show that in different psychological experiments (spatial and temporal ventriloquist effect and McGurk effect), the fact that the …

Auxiliary loss multimodal GRU model in audio-visual speech recognition

Y Yuan, C Tian, X Lu – IEEE Access, 2018 – ieeexplore.ieee.org

… Furthermore, the other example is that illusion occurs when the auditory component of one sound is paired with the visual component of another sound, leading to the perception of a third sound [1] (this is called McGurk effect) …

Head anticipation during locomotion with auditory instruction in the presence and absence of visual input

F Dollack, M Perusquia-Hernandez… – Frontiers in human …, 2019 – frontiersin.org

… 2 Artificial Intelligence Laboratory, University of Tsukuba, Tsukuba, Japan; 3 NTT Communication Science Laboratories, Atsugi, Japan; 4 Center for Innovative Medicine and Engineering, University of Tsukuba Hospital, Tsukuba, Japan; …

The impact of automatic exaggeration of the visual articulatory features of a talker on the intelligibility of spectrally distorted speech

N Alghamdi, S Maddock, J Barker, GJ Brown – Speech Communication, 2017 – Elsevier

… The illusion of perceiving a new audio signal when listeners are presented with an incongruent audiovisual signal – known as the McGurk effect (McGurk and MacDonald, 1976) – provides compelling evidence of the synergy of audio and visual speech during perception …

Listen to your face: Inferring facial action units from audio channel

Z Meng, S Han, Y Tong – IEEE Transactions on Affective …, 2017 – ieeexplore.ieee.org

… In addition, eyebrow movements and fundamental frequency of voice have been found to be correlated during speech [7]. As demonstrated by the McGurk effect [8], there is a strong correlation between visual and audio information for speech perception …

Exam Prep for: Abnormal Psychology and Modern Life with…

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: Abnormal Psychology and Modern Life with Telecourse Study Guide Ebook Edition Page 2. Table of Contents Title Page Copyright Foundations of Psychology History of Psychology …

40 years of cognitive architectures: core cognitive abilities and practical applications

I Kotseruba, JK Tsotsos – Artificial Intelligence Review, 2018 – Springer

Page 1. Artificial Intelligence Review https://doi.org/10.1007/s10462-018-9646-y … Similarly, the ultimate goal of research in cognitive architectures is to model the human mind, eventually enabling us to build human-level artificial intelligence …

Dialogue Writing for Dubbing: An Insider’s Perspective

GS Miggiani – 2019 – books.google.com

… Part II Strategies and Know-How 3 Dialogue Writing Itinerary 3.1 3.2 3.3 3.4 3.5 3.6 3.7 3.8 3.9 Establishing a Method Impersonating the Dubbing Actors The Rhythmic Framework Inserting Dubbing Notations Isochrony Kinesics and Lip Synchronization Working Methodology …

The categories, frequencies, and stability of idiosyncratic eye-movement patterns to faces

J Arizpe, V Walsh, G Yovel, CI Baker – Vision research, 2017 – Elsevier

… Eckstein, 2013). Even so, there is also evidence of an association between perception of the McGurk Effect and the degree of an individual’s tendency to fixate the mouth of McGurk stimuli (Gurler et al., 2015). Idiosyncratic scanpaths …

Speech Perception in Infants: Propagating the Effects of Language Experience

CT Best – The Handbook of Psycholinguistics, 2018 – Wiley Online Library

… simultaneously-heard vowel (Kuhl & Meltzoff, 1982) even if that vowel is non-native (not previously experienced) (Walton & Bower, 1993), and the McGurk effect, in which a phonetic …

Exam Prep for: Counseling Strategies and Interventions for…

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: Counseling Strategies and Interventions for Professional Helpers Ebook Edition Page 2. Table of Contents Title Page Copyright Foundations of Psychology History of Psychology …

A Brief Survey of Formal and Informal Tools for Prediction

BA Schuetze – 2019 – schu.etze.co

… high. The McGurk effect arises because our prior predictions regarding the sound we should that … For this reason, I fear that this research on the humanization of artificial intelligence may be a passing fad. Page 20. TOOLS FOR PREDICTION 20 Conclusion …

Exam Prep for: Preventing Child and Adolescent Problem…

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: Preventing Child and Adolescent Problem Behavior Evidence-Based Strategies in Schools, Families, and Communities Ebook Edition Page 2. Table of Contents Title Page Copyright …

Crossmodal perception in virtual reality

S Malpica, A Serrano, M Allue, MG Bedia… – Multimedia Tools and …, 2019 – Springer

… Another well-known example is the McGurk effect [33] where lip movements of a subject are integrated with different but similar speech sounds. In this work we first investigate the effect of auditory spatial information on the perception of moving visual stimuli …

Exam Prep for: PSYCHOLOGY & 1KEY COURSECOMPASS & S/GD PKG

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: PSYCHOLOGY & 1KEY COURSECOMPASS & S/GD PKG Ebook Edition Page 2. Table of Contents Title Page Copyright Foundations of Psychology History of Psychology Educational …

Exam Prep for: Nutrition Therapy Advanced Counseling Skills

D Mason – 2019 – books.google.com

Page 1. Exam Preps Exam Prep for: Nutrition Therapy Advanced Counseling Skills Ebook Edition Page 2. Table of Contents Title Page Copyright Foundations of Psychology History of Psychology Educational Psychology …

State of the art on monocular 3D face reconstruction, tracking, and applications

M Zollhöfer, J Thies, P Garrido, D Bradley… – Computer Graphics …, 2018 – Wiley Online Library

Five Decades After Chomsky: An Experienced-Based Awakening

D Massaro – 2017 – JSTOR

… just another language change that children from birth onward might master when embedded in the imminent technology and artificial intelligence; Massaro, 2012a … and the facial movements of the talker are used together to identify a speech syllable (the so-called McGurk effect) …

“Aha” ptics: Enjoying an Aesthetic Aha During Haptic Exploration

C Muth, S Ebert, S Marković, CC Carbon – Perception, 2019 – journals.sagepub.com

Perceptual insight, like recognizing hidden figures, increases the appreciation of visually perceived objects. We examined this Aesthetic Aha paradigm in the ha…

Discussion-facilitator: towards enabling students with hearing disabilities to participate in classroom discussions

MA Alzubaidi, M Otoom – International Journal of Technology …, 2018 – researchgate.net

… Morcillo, JC López, R. Barra-Chicote, R. Córdoba, and R. San-Segundo, “Translating bus information into sign language for deaf people,” Engineering Applications of Artificial Intelligence, 32, pp … “A neural basis for interindividual differences in the McGurk effect, a multisensory …

Association Learning inspired by the Symbol Grounding Problem

F Raue – 2018 – kluedo.ub.uni-kl.de

… C H A P T E R 1 INTRODUCTION The human brain is an essential inspiration for Artificial Intelligence (AI) when designing and creating computational models, eg, HMAX [1] and SpikeNet [2] … tory signals. For example, the McGurk effect [28] shows a perceptual phenomenon that …

Key-postures, trajectories and sonic shapes

RI Godøy – Music and Shape, 2017 – books.google.com

… There is now mounting evidence that the sense modalities work together and complement one another, sometimes even with one sense modality overriding another, resulting in what may be judged as illusions, as in the ‘McGurk effect’ where visual impressions of a speaker’s …

Multisensory feature integration in (and out) of the focus of spatial attention

C Spence, C Frings – Attention, Perception, & Psychophysics, 2019 – Springer

Page 1. 40 YEARS OF FEATURE INTEGRATION: SPECIAL ISSUE IN MEMORY OF ANNE TREISMAN Multisensory feature integration in (and out) of the focus of spatial attention Charles Spence1 & Christian Frings2 © The Psychonomic Society, Inc. 2019 …

An Empirical Evaluation of Convolutional and Recurrent Neural Networks for Lip Reading

KB Heimbach – 2018 – dspace.library.uu.nl

… intelligence. One reason for the increase of this form of automation is the rapid development of research in artificial intelligence, and more specifically, machine learning. Machine … video). This effect was called the McGurk effect. This …

Interaction with Robots

G Skantze, J Gustafson, J Beskow – The Handbook of Multimodal …, 2019 – books.google.com

… Another testament to the strong influence of visual speech and the multimodal nature of speech perception is the McGurk effect [McGurk and … Lip motion is one example of this, where designing a mechatronic system capable of motion rapid enough for lip synchronization has yet …

PROFILING HUMANS FROM THEIR VOICE.

R Singh – 2019 – Springer

… and beyond). Predicated largely on concepts in machine learning and artificial intelligence, this part discusses mechanisms for information discovery, feature engineering, and the deduction of profile parameters from them. It …

Emergent spatio-temporal multimodal learning using a developmental network

D Wang, J Xin – Applied Intelligence, 2019 – Springer

… the “Cocktail party effect”, in which speech affected by surrounding noise was drastically more intelligible if the talker could be seen, provided powerful support that humans used visual information in speech recognition [4]. Similarly, the “McGurk effect,” which presentes …

A behaviorally inspired fusion approach for computational audiovisual saliency modeling

A Tsiami, P Koutras, A Katsamanis, A Vatakis… – Signal Processing …, 2019 – Elsevier

… It can therefore be observed that when multi-modal stimuli are incongruent they can lead to illusionary perception of the multi-modal event, as in the ventriloquist or the McGurk effect [5], while in the opposite case, where the stimuli are synchronized/aligned, they can effectively …

Profiling and Its Facets

R Singh – Profiling Humans from their Voice, 2019 – Springer

… The artificial intelligence (AI) based profiling processes described in Part II of this book follow the top-down approach … A common example of an illusion created by visual detractors is the McGurk effect [168], wherein the same sound may be perceived differently by a listener …

Linguistics: An introduction to language and communication

A Akmajian, AK Farmer, L Bickmore, RA Demers… – 2017 – books.google.com

… Speech Production 403 10.3 Language Comprehension 411 10.4 Special Topics 440 The McGurk Effect 440 Connectionist … anthropology, sociology, language teaching, cognitive psychology, philosophy, computer science, neuroscience, and artificial intelligence, among others …

Gated multimodal units for information fusion

J Arevalo, T Solorio, M Montes-y-Gómez… – arXiv preprint arXiv …, 2017 – arxiv.org

… In an interesting result, Ngiam et al. (2011) were able to mimic a perceptual phenomenon that demonstrates an interaction between hearing and vision in speech perception known as McGurk effect. A similar approach was proposed by Srivastava & Salakhutdinov (2012) …

Identification of Retinal Biomarkers in Alzheimer’s Disease Using Optical Coherence Tomography: Recent Insights, Challenges, and Opportunities

D Cabrera DeBuc, M Gaca-Wysocka… – Journal of clinical …, 2019 – mdpi.com

… It is also worth noting that the use of big data and open-source models taking advantage of artificial intelligence are a critical step for more integrative, data-driven clinical studies to investigate into all the possible biological features involved, as well as the direct connections …

How Optimal Is Word-referent Identification Under Multimodal Uncertainty?

A Fourtassi, MC Frank – 2018 – psyarxiv.com

… to be perceptually correlated. The expectation for this correlation is strong enough that when there is a mismatch between the auditory and visual input, they are still integrated into a unified (but illusory) percept (eg, the McGurk Effect; McGurk & MacDonald, 1976). …

Cross database audio visual speech adaptation for phonetic spoken term detection

S Kalantari, D Dean, S Sridharan – Computer Speech & Language, 2017 – Elsevier

… would recognize it as /da/. This effect is known in the literature as the McGurk effect, and it is a great example to show that human speech perception involves participation of more than just one sense. The first attempt to achieve …

Creativity, information, and consciousness: the information dynamics of thinking

GA Wiggins – Physics of life reviews, 2018 – Elsevier

Limits of perceived audio-visual spatial coherence as defined by reaction time measurements

H Stenzel, J Francombe, PJB Jackson – Frontiers in neuroscience, 2019 – frontiersin.org

The ventriloquism effect describes the phenomenon of audio and visual signals with common features, such as a voice and a talking face merging perceptually into one percept even if they are spatially misaligned. The boundaries of the fusion of spatially misaligned stimuli are …

Multimodal machine learning: A survey and taxonomy

T Baltrušaitis, C Ahuja… – IEEE Transactions on …, 2018 – ieeexplore.ieee.org

… In order for Artificial Intelligence to make progress in understanding the world around us, it needs to be able to interpret and reason about multimodal messages … It was motivated by the McGurk effect [143]—an interaction between hearing and vision during speech perception …

A unified dynamic neural field model of goal directed eye movements

JC Quinton, L Goffart – Connection Science, 2018 – Taylor & Francis

… to drive the field dynamics, this averaging property has been widely exploited in the literature, for instance to model saccadic motor planning (Kopecz & Schöner, 1995) in the context of active vision, or multimodal integration models able to replicate the McGurk effect (Lefort et al …

Qualitative Aspects of the Voice Signal

R Singh – Profiling Humans from their Voice, 2019 – Springer

… This will allow us to continue to leverage human judgment (for which we do not yet have a replacement, despite recent advances in artificial intelligence) along with machine-discovered features, to perfect the processes and outcomes of profiling in the future …

Subjective Consciousness: What am I?

JB Glattfelder – Information—Consciousness—Reality, 2019 – Springer

… Some researchers have used artificial intelligence, specifically, machine learning algorithms, to reconstruct the original picture, given an fMRI scan … The McGurk effect is a fascinating example of how visual information can affect auditory perception (McGurk and MacDonald 1976 …

30 Looking Toward the Future of Cognitive Translation Studies

RM Martín – The handbook of translation and cognition, 2017 – Wiley Online Library

… Connectionism is a series of information-processing approaches to cognition and artificial intelligence that models mental abilities and behavior with simplified circuits of uniform electronic, mathematical units that exhibit learning capabilities in tasks such as face recognition …

Bayesian approaches to autism: Towards volatility, action, and behavior.

CJ Palmer, RP Lawson, J Hohwy – Psychological bulletin, 2017 – psycnet.apa.org

Autism spectrum disorder currently lacks an explanation that bridges cognitive, computational, and neural domains. In the past 5 years, progress has been sought in this area by drawing on Bayesian probability theory to describe both social and nonsocial aspects of autism in terms …

Young infants can learn object and action-words from continuous audiovisual streams

MC Jara González – 2018 – repositorio.uc.cl

Page 1. FACULTY OF SOCIAL SCIENCES SCHOOL OF PSYCHOLOGY YOUNG INFANTS CAN LEARN OBJECT AND ACTION-WORDS FROM CONTINUOUS AUDIOVISUAL STREAMS BY Mª CRISTINA JARA GONZÁLEZ …

Multimodal Depression Detection: An Investigation of Features and Fusion Techniques for Automated Systems

MR Morales – 2018 – academicworks.cuny.edu

… Part of the Artificial Intelligence and Robotics Commons, Clinical Psychology Commons, and the Computational Linguistics Commons … 59 7.1 Ellie the virtual human interviewer …

Entangled predictive brain: emotion, prediction and embodied cognition

MD Miller – 2018 – era.lib.ed.ac.uk

Page 1. This thesis has been submitted in fulfilment of the requirements for a postgraduate degree (eg PhD, MPhil, DClinPsychol) at the University of Edinburgh. Please note the following terms and conditions of use: This work …

Multimodal Representation Learning for Visual Reasoning and Text-to-Image Translation

R Saha – 2018 – search.proquest.com

… It encompasses advanced image processing and artificial intelligence techniques to locate, characterize and recognize objects, regions and their attributes in the image in order … and gestures. One of the seminal works, now known as the McGurk effect (McGurk …

Audio-Visual Speech Recognition Using Lip Movement for Amharic Language

B Belete – 2017 – 213.55.95.56

… In general, the nature of human speech is bimodal. Speech observed by a person depends on audio features, as well as on visual features like lip synchronization or facial expressions. Visual features of speech can compensate for a possible loss in acoustic features of speech …

Gating neural network for large vocabulary audiovisual speech recognition

F Tao, C Busso – IEEE/ACM Transactions on Audio, Speech and …, 2018 – dl.acm.org

Page 1. 1286 IEEE/ACM TRANSACTIONS ON AUDIO, SPEECH, AND LANGUAGE PROCESSING, VOL. 26, NO. 7, JULY 2018 Gating Neural Network for Large Vocabulary Audiovisual Speech Recognition Fei Tao, Student …

The Psychology of Music: A Very Short Introduction

EH Margulis – 2018 – books.google.com

… THE APOCRYPHAL GOSPELS Paul Foster ARCHAEOLOGY Paul Bahn ARCHITECTURE Andrew Ballantyne ARISTOCRACY William Doyle ARISTOTLE Jonathan Barnes ART HISTORY Dana Arnold ART THEORY Cynthia Freeland ARTIFICIAL INTELLIGENCE Margaret A …

Introducing language and cognition: a map of the mind

MS Smith – 2017 – books.google.com

Page 1. INTRODUCING LANGUAGE AND COGNITION A Map of the Mind MICHAEL SHARWOOD SMITH Page 2. Introducing Language and Cognition In this accessible introduction, Michael Sharwood Smith provides a work …

Perceptual Organization: An Integrated Multisensory Approach

S Handel – 2019 – books.google.com

Page 1. Stephen Handel Perceptual Organization An Integrated Multisensory Approach Page 2. Perceptual Organization Page 3. Stephen Handel Perceptual Organization An Integrated Multisensory Approach Page 4. Stephen …

Ways in the Studies of Words: The Methodology and Epistemology of Linguistic Science

GG Dupre – 2019 – escholarship.org

Page 1. UCLA UCLA Electronic Theses and Dissertations Title Ways in the Studies of Words: The Methodology and Epistemology of Linguistic Science Permalink https://escholarship.org/uc/item/34b0h6dm Author Dupre, Gabriel Gagnier Publication Date 2019 …

Multisensory Perception and Communication: Brain, Behaviour, Environment Interaction, and Development in the Early Years

L Gogate – 2020 – books.google.com

… Neural Signatures Lakshmi Gogate Multidisciplinary research across the domains of cognitive development, speech, hearing, and educational sciences, field studies in cultural anthropology, and more recently, empirical research in artificial intelligence, computational modeling …

Linguistics and the explanatory economy

G Dupre – Synthese, 2019 – Springer

Page 1. Synthese https://doi.org/10.1007/s11229-019-02290-x SI: EXPLANATIONS IN COGNITIVE SCIENCE: UNIFICATION VS PLURALISM Linguistics and the explanatory economy Gabe Dupre1 Received: 12 July 2018 / Accepted: 11 June 2019 © Springer Nature BV 2019 …

A Data-Driven Approach For Automatic Visual Speech In Swedish Speech Synthesis Applications

J Hagrot – 2019 – diva-portal.org

Page 1. IN DEGREE PROJECT COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS, STOCKHOLM SWEDEN 2018 A Data-Driven Approach For Automatic Visual Speech In Swedish Speech Synthesis Applications JOEL HAGROT …

Champions of Illusion: The Science Behind Mind-boggling Images and Mystifying Brain Puzzles

S Martinez-Conde, S Macknik – 2017 – books.google.com

Page 1. CHAMPIONS OF ILLUSION: THE SCIENCE BEHIND MIND-BOGGLING IMAGES AND MYSTIFYING BRAIN PUZZLES Susana Martinez-Conde and Stephen Macknik Founders of the Best Illusion of the Year Contest …

Ideophones and the Evolution of Language

J Haiman – 2018 – books.google.com

Page 1. Ideophones and the Evolution of Language John Haiman Page 2. Ideophones and the Evolution of Language Ideophones have been recognized in modern linguistics at least since 1935, but they still lie far outside the …

Colors of Autism Spectrum

A Lőrincz – 2018 – pdfs.semanticscholar.org

Page 1. Colors of Autism Spectrum: A Single Paradigm Explains the Heterogeneity in Autism Spectrum Disorder András Lőrincz Faculty of Informatics Eötvös Loránd University Budapest Hungary ABSTRACT We propose a …

The use of immersive virtual reality in neurorehabilitation and its impact in neuroplasticity

M Matamala Gómez – 2017 – diposit.ub.edu

Page 1. The use of immersive virtual reality in neurorehabilitation and its impact in neuroplasticity Marta Matamala Gómez This doctoral thesis is subject to the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 Spain license …

Calibrating Reality with a Mind’s Mirror Postulate: Towards a Comprehensive Schema for Measuring Personal Presence

ML Price – 2017 – search.proquest.com

… Examples to review later might include the McGurk effect (McGurk & MacDonald, 1976) a powerful multisensory illusion occurring with audiovisual speech or the … According to Baars (2005), the theory is based on an old concept from artificial intelligence, using the metaphor of a …