Notes:

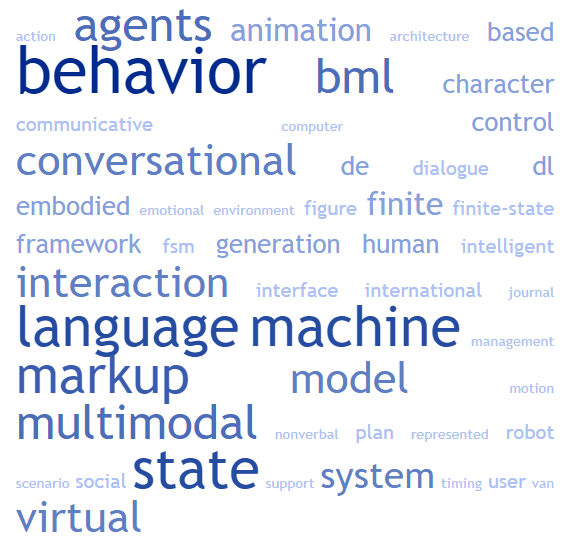

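Behavior Markup Language (BML) is an XML-based language for specifying the multimodal behavior of virtual characters in real-time interactive environments, such as speech, gesture, gaze, head movement, facial expression, and posture. BML is not itself based on state machines, which are models that describe the possible states a system can be in and the transitions between those states. Instead, the two are commonly combined. A dialogue manager or scenario controller implemented as a state machine decides what the character should do next and sends BML to a behavior realizer. The realizer may use state machines of its own, for example to track the progress of each BML block or to drive a game engine's animation state machine.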
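The following is a minimal sketch of a single BML block, built with Python's standard `xml.etree.ElementTree`. It assumes the BML 1.0 namespace. The character id, behavior ids, utterance, and lexeme values (`NOD`, `RAISE_MOUTH_CORNERS`) are illustrative assumptions, and realizers differ in which behaviors and lexemes they actually support. The point is the structure: behaviors are declared side by side and aligned through sync-point references such as `s1:start`, with no states or transitions involved.

```python
import xml.etree.ElementTree as ET

# BML 1.0 namespace, serialized as the default namespace.
BML_NS = "http://www.bml-initiative.org/bml/bml-1.0"
ET.register_namespace("", BML_NS)


def make_greeting_block(block_id: str, character: str, utterance: str) -> str:
    """Build a BML block whose head nod and facial expression are
    synchronized with the speech through sync-point references."""
    bml = ET.Element(f"{{{BML_NS}}}bml", {"id": block_id, "characterId": character})

    # Speech behavior; other behaviors refer to its sync points as "s1:<sync>".
    speech = ET.SubElement(bml, f"{{{BML_NS}}}speech", {"id": "s1"})
    ET.SubElement(speech, f"{{{BML_NS}}}text").text = utterance

    # Head nod that starts when the speech starts (illustrative lexeme).
    ET.SubElement(bml, f"{{{BML_NS}}}head",
                  {"id": "h1", "lexeme": "NOD", "start": "s1:start"})

    # Facial expression held from the start to the end of the speech
    # (illustrative lexeme and amount).
    ET.SubElement(bml, f"{{{BML_NS}}}faceLexeme",
                  {"id": "f1", "lexeme": "RAISE_MOUTH_CORNERS", "amount": "0.7",
                   "start": "s1:start", "end": "s1:end"})

    return ET.tostring(bml, encoding="unicode")


if __name__ == "__main__":
    print(make_greeting_block("bml1", "Alice", "Hello, nice to meet you."))
```

The realizer, not the author of the block, works out the absolute timing. The block only states that the nod and the expression are anchored to the speech.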

In the SAIBA framework, BML sits between the behavior planning and behavior realization stages, so it describes behavior in a form that a realizer can process directly. BML defines a core set of behavior types (for example speech, gesture, gaze, head, face, posture, and locomotion). These can be combined and aligned with one another through synchronization points to create complex, coordinated animations. For example, a virtual character might have a “happy” state and a “sad” state. The state machine specifies those states and the transitions between them, while the BML sent on entering each state specifies the speech, facial expressions, and gestures the character should display.
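The happy/sad example can be sketched as follows. The sketch assumes the common arrangement in which a small finite state machine owns the states and transitions and emits a BML block whenever it enters a state. The class `MoodMachine`, the event names (`bad_news`, `good_news`), the character id, and the lexeme values are hypothetical. Sending the resulting string to an actual realizer is out of scope.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# BML 1.0 namespace, serialized as the default namespace.
BML_NS = "http://www.bml-initiative.org/bml/bml-1.0"
ET.register_namespace("", BML_NS)


def face_block(block_id: str, lexeme: str, amount: float) -> str:
    """Return a BML block containing a single facial-expression behavior."""
    bml = ET.Element(f"{{{BML_NS}}}bml", {"id": block_id, "characterId": "Alice"})
    ET.SubElement(bml, f"{{{BML_NS}}}faceLexeme",
                  {"id": "f1", "lexeme": lexeme, "amount": str(amount)})
    return ET.tostring(bml, encoding="unicode")


@dataclass
class MoodMachine:
    """Hypothetical two-state mood machine. States and transitions live here;
    BML is only the output produced when a state is entered."""
    state: str = "happy"
    # (current state, event) -> next state
    transitions: Dict[Tuple[str, str], str] = field(default_factory=lambda: {
        ("happy", "bad_news"): "sad",
        ("sad", "good_news"): "happy",
    })
    # Facial expression emitted on entering each state (illustrative lexemes).
    on_enter: Dict[str, Tuple[str, float]] = field(default_factory=lambda: {
        "happy": ("RAISE_MOUTH_CORNERS", 0.8),
        "sad": ("LOWER_MOUTH_CORNERS", 0.6),
    })
    blocks_sent: int = 0

    def handle(self, event: str) -> Optional[str]:
        """Apply an event. Return the BML block for the new state, or None
        if the event triggers no transition from the current state."""
        next_state = self.transitions.get((self.state, event))
        if next_state is None:
            return None
        self.state = next_state
        self.blocks_sent += 1  # BML block ids should be unique
        lexeme, amount = self.on_enter[next_state]
        return face_block(f"bml{self.blocks_sent}", lexeme, amount)


if __name__ == "__main__":
    mood = MoodMachine()
    print(mood.handle("bad_news"))  # BML block with a "sad" expression
    print(mood.handle("bad_news"))  # None: no transition defined from "sad"
```

Keeping the transition table separate from the BML templates lets the same machine drive different characters or realizers. BML 1.0 also defines a block-level `composition` attribute (`MERGE`, `APPEND`, or `REPLACE`) that controls how a new block combines with blocks already running. The sketch leaves it at its default.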

See also:

100 Best State Machine Videos | 100 Best Unity3d State Machine Assets | State Machine & Cognitive Architecture | State Machine & Dialog Systems

Animating an Autonomous 3D Talking Avatar

D Borer, D Lutz, M Guay – arXiv preprint arXiv:1903.05448, 2019 – arxiv.org

… Figure 1: Explicitly creating the animation state machine with all connectivity information quickly becomes complex and prone to errors … 2008] and the interface for the action specification is often the Behavior Markup Language BML [Kopp et al. 2006] …

All together now

A Hartholt, D Traum, SC Marsella, A Shapiro… – … Workshop on Intelligent …, 2013 – Springer

… Language [17], and in between the behavior planning and behavior realization phases, called the Behavior Markup Language (BML) [18] … of the NPCEditor which has been extended to incorporate the notion of hierarchical interaction domains, comparable to a state machine …

A Virtual Emotional Freedom Therapy Practitioner

H Ranjbartabar, D Richards – … of the 2016 International Conference on …, 2016 – ifaamas.org

… The dialogue engine sends BML (Behavior Markup Language) message to NVBG (NonVerbal-Behavior-Generator) module containing the line the character will say and for which nonverbal behavior needs to … Figure 2 shows Mecanim’s Animation State Machine created for …

Tactical language and culture training systems: Using AI to teach foreign languages and cultures

WL Johnson, A Valente – AI magazine, 2009 – ojs.aaai.org

… We then turned to a finite-state machine approach that authors can use to specify moderately complex scenarios and that has proved … the production of behavior animation from intent and are exploring the use of representations such as the behavior markup language (BML).6 …

An architecture for personality-based, nonverbal behavior in affective virtual humanoid character

M Saberi, U Bernardet, S DiPaola – Procedia Computer Science, 2014 – Elsevier

… A state machine is a set of input events, output events and states … California’s ITC lab is used as our animation rendering system which provides locomotion, gazing and nonverbal behavior in real time under our scripted control via the Behavior Markup Language (Kopp et al …

A Natural Conversational Virtual Human with Multimodal Dialog System

IR Ali, G Sulong, AH Basori – Jurnal Teknologi, 2014 – journals.utm.my

… It is an extended hierarchical finite state machine (FSM) where an atomic state is represented by a node and super node that … language (FML) is used for encoding the communicative intent without referring to physical realization and the behavior markup language (BML) specifies …

Socially-aware animated intelligent personal assistant agent

Y Matsuyama, A Bhardwaj, R Zhao, O Romeo… – Proceedings of the 17th …, 2016 – aclweb.org

… It is implemented as a finite state machine whose transitions are determined by different kinds of trig … et al., 2004), which tailors a behavior plan (including relevant hand gestures, eye gaze, head nods, etc.) and outputs the plan as BML (Behavior Markup Language), which is a …

An incremental multimodal realizer for behavior co-articulation and coordination

H Van Welbergen, D Reidsma, S Kopp – International Conference on …, 2012 – Springer

… To fulfill the first requirement, AsapRealizer keeps track of each BML block’s state using the block state machine (Fig … AN, Pelachaud, C., Pirker, H., Thórisson, KR, Vilhjálmsson, HH: Towards a Common Framework for Multimodal Generation: The Behavior Markup Language …

A human-centric API for programming socially interactive robots

JP Diprose, B Plimmer, BA MacDonald… – … IEEE Symposium on …, 2014 – ieeexplore.ieee.org

… We use an event driven state machine for this purpose (Table 7), which is popular with other programming tools, including Interaction Composer [7] and Robot Behaviour Description Language [20] … When the QueryEvent fires, the state machine transitions …

Starting a conversation with strangers in virtual reykjavik: Explicit announcement of presence

S Ólafsson, B Bédi, HE Helgadóttir… – Proceedings of 3rd …, 2015 – researchgate.net

… in the SAIBA framework for multimodal generation of communicative behavior for ECAs, as manifested in the Behavior Markup Language (BML) [18 … Figure 5: The ‘approach’ block’s state machine propels the conversation using methods (Initiate and GiveAttention, shown in bold …

Describing and animating complex communicative verbal and nonverbal behavior using Eva-framework

I Mlakar, Z Kačič, M Rojc – Applied artificial intelligence, 2014 – Taylor & Francis

… Novel motion–notation scheme (EVA-Script) presents a migration layer between high-level formal descriptions: Behavior markup language (BML; Kopp et al … 2008) for the intent planning phase and Behavior Markup Language (BML) for the behavior planning phase …

JVoiceXML as a modality component in the W3C multimodal architecture

D Schnelle-Walka, S Radomski… – Journal on Multimodal User …, 2013 – Springer

… SCXML. Within the W3C MMI architecture recommendation, SCXML is suggested as one suitable XML dialect for controller documents. At its core, SCXML is a description of finite state machine with parallel and nested states, as described by Harel et al. [18] …

The W3C multimodal architecture and interfaces standard

DA Dahl – Journal on Multimodal User Interfaces, 2013 – Springer

… Behavior Markup Language (BML) [9] is used to define the behaviors of intelligent agents and the related Functional Markup Language (FML) [10] is used to define what an agent wants to achieve, in terms of actions, goals, and plans …

Multimodal plan representation for adaptable BML scheduling

D Reidsma, H Van Welbergen, J Zwiers – International Workshop on …, 2011 – Springer

… The behavior is specified using the Behavior Markup Language (BML) [8], defining the form of the behavior and the constraints on its timing … 3). A dedicated BML Block management state machine automatically updates the timing of the BML Block Pegs in reaction to behavior …

Animating synthetic dyadic conversations with variations based on context and agent attributes

L Sun, A Shoulson, P Huang, N Nelson… – … and Virtual Worlds, 2012 – Wiley Online Library

… of the linguistic approach is given by Moulin and Rousseau 15, who discussed a conversation model that acts like a finite-state machine bound to two … The most sophisticated are Behavior Markup Language (BML) and Multimodal Presentation Markup Language 3D (MPML3D) …

A real-time architecture for embodied conversational agents: beyond turn-taking

B Nooraei, C Rich, CL Sidner – ACHI, 2014 – Citeseer

… The Petri-net synchronization mechanism described in Section VE is modeled on the Behavior Markup Language (BML) [6] developed for … Case 1) Walking Up to the Agent: The engagement schema (implemented as a state machine) continually polls the motion perceptor …

Standardized representations and markup languages for multimodal interaction

R Tumuluri, D Dahl, F Paternò… – The Handbook of …, 2019 – dl.acm.org

… Application Programming Interface (API). Set of procedures made available by a software application to provide services to external programs. Behavior Markup Language (BML). An XML-based language for describing behaviors that should be realized by animated agents …

Simulating listener gaze and evaluating its effect on human speakers

L Frädrich, F Nunnari, M Staudte, A Heloir – International Conference on …, 2017 – Springer

… movements, which also influence the speed of the gazing behaviour; ii) the timeouts used by the state machine to change … Marshall, A., Pelachaud, C., Pirker, H., Thorisson, K.: Towards a common framework for multimodal generation in ECAs: The behavior markup language …

Flipper 2.0: a pragmatic dialogue engine for embodied conversational agents

J van Waterschoot, M Bruijnes, J Flokstra… – Proceedings of the 18th …, 2018 – dl.acm.org

… turn-taking, back channelling, and grounding. Turn-taking for example can be done by a state machine which regulates turns based on current speech activity of the user and agent. By using this conceptual division between …

When Strangers Meet: Collaborative Construction of Procedural Conversation in Embodied Conversational Agents

S Ólafsson – skemman.is

… The SAIBA framework community defines two representation languages that allow transition from stage (1) to (2) and from (2) to (3), these are the Function Markup Language (FML) and the Behavior Markup Language (BML), respectively. The BML standard version …

Personalized expressive embodied conversational agent EVA

I Mlakar, M Rojc – Proceedings of the 3rd WSEAS international …, 2010 – dl.acm.org

… Kopp, S., Mancini, M., Marsella, S., Marshall, AN, Pelachaud, C., Ruttkay, Z., Thórisson, KR, van Welbergen, H., van der Werf, RJ: The Behavior Markup Language: Recent developments … Finite-state machine based distributed framework DATA for intelligent ambience systems …

(Simulated) listener gaze in real-time spoken interaction

L Frädrich, F Nunnari, M Staudte… – … Animation and Virtual …, 2018 – Wiley Online Library

… the experiment described in this paper capitalizes on previous (experimental) work [3]. The solution employed back then was comparable to available agent control frameworks [16-19] and offered a Behavior Markup Language [20] interface to the … Agent’s behavior state machine …

Nonverbal behavior in multimodal performances

A Cafaro, C Pelachaud, SC Marsella – The Handbook of Multimodal …, 2019 – dl.acm.org

… They are typically biphasic (two movement components), small, low energy, rapid flicks of the fingers or hand” [McNeill 1992]. Behavior Markup Language bml is an XML-like mark up language specially suited for representing communicative behavior …

Towards ECA’s Animation of Expressive Complex Behaviour

I Mlakar, M Rojc – Analysis of Verbal and Nonverbal Communication and …, 2011 – Springer

… The idea of continuity is implemented by performing an animated segment in the form of a finite-state machine (FSM) … Pelachaud, C., Pirker, H., Thórisson, K., Vilhjalmsson, H.: Towards a Common Framework for Multimodal Generation in ECAs: The Behavior Markup Language …

Extending multimedia languages to support multimodal user interactions

ÁLV Guedes, RG de Albuquerque Azevedo… – Multimedia tools and …, 2017 – Springer

… BML (Behavior Markup Language) is an XML description language for controlling the verbal and nonverbal behavior of embodied conversational agents … MMI indicates the use of SCXML (State Machine Notation for Control Abstraction) [47] for control documents, and VoiceXML …

MultiPro: Prototyping Multimodal UI with Anthropomorphic Agents

P Kulms, H van Welbergen, S Kopp – Mensch und Computer 2018 …, 2018 – dl.gi.de

… We have embedded a realizer for BML (Behavior Markup Language) to take care of this and extended it to allow synchronization of the agent’s behavior … datamodel and with it offers the capability of storing, reading, and modifying a set of data that is internal to the state machine …

TTS-driven expressive embodied conversation agent EVA for UMB-SmartTV

M Rojc, M Presker, Z Kačič, I Mlakar – International journal of …, 2014 – academia.edu

Abstract: The main goal of using non-verbal modalities together with the general text-to-speech (TTS) system is to better emulate human-like course of the interaction between users and the UMB-SmartTV platform …

Field trial analysis of socially aware robot assistant

F Pecune, J Chen, Y Matsuyama… – Proceedings of the 17th …, 2018 – researchgate.net

… The Task Reasoner was initially designed as a probabilistic finite state machine whose transitions are governed by a set of rules and … is sent to BEAT, a nonverbal behavior generator [13], and BEAT generates a behavior plan in the BML (Behavior Markup Language) form [20] …

Virtual Reality Game for Social Cue Detection Training

A Þórðarson – 2018 – arithordarson.com

… 3.1 A high level state machine representing the behavior of the characters; 3.2 A screenshot from the application … 3.8 The state machine for the character behavior …

On the use of word embeddings for identifying domain specific ambiguities in requirements

S Mishra, A Sharma – 2019 IEEE 27th International …, 2019 – ieeexplore.ieee.org

… Example sentences from the corpora showing the variation of meaning of CS words machine and device are given below : A1. machine : A finite state machine just looks at the input signal and the current state: it has no stack to work with. A2 …

Timed petri nets for multimodal interaction modeling

C Chao, A Thomaz – ICMI 2012 workshop on speech and gesture …, 2012 – Citeseer

… recently, supervisors for multi-robot control in a robot soccer domain (eg [1]). In HRI, Holroyd has applied them to execution of Behavior Markup Language (BML) [5 … 2.2 Finite state machines One of the most commonly used automata for agent action is the finite state machine …

A model of social explanations for a conversational movie recommendation system

F Pecune, S Murali, V Tsai, Y Matsuyama… – Proceedings of the 7th …, 2019 – dl.acm.org

… Our dialog manager (DM) is designed as a finite state machine that takes the user intent and entity from the NLU as inputs; it … realizer which adds and synchronizes nonverbal behavior with the utterance to generate a behavior plan in the Behavior Markup Language (BML) form …

The TTS-driven affective embodied conversational agent EVA, based on a novel conversational-behavior generation algorithm

M Rojc, I Mlakar, Z Kačič – Engineering Applications of Artificial Intelligence, 2017 – Elsevier

… functions. Communicative functions are represented through Function Markup Language (FML) (Cafaro et al., 2014), while co-verbal behavior is represented through Behavior Markup Language (BML) (Vilhjalmsson et al., 2007) …

Multimodal human-machine interaction including virtual humans or social robots

NM Thalmann, D Thalmann, Z Yumak – SIGGRAPH Asia 2014 Courses, 2014 – dl.acm.org


Usability assessment of interaction management support in LOUISE, an ECA-based user interface for elders with cognitive impairment

P Wargnier, S Benveniste, P Jouvelot… – Technology and …, 2018 – content.iospress.com

… Behavior Markup Language (BML) and/or the Speech Synthesis Markup Language (SSML) (see [42] for the technical details about AISML, as well as its XML schema specification). Given an AISML scenario, LOUISE interaction manager maintains a finite state machine with five …

State of the art in hand and finger modeling and animation

N Wheatland, Y Wang, H Song, M Neff… – Computer Graphics …, 2015 – Wiley Online Library

Abstract The human hand is a complex biological system able to perform numerous tasks with impressive accuracy and dexterity. Gestures furthermore play an important role in our daily interactions, …

Analysis and Exploitation of Synchronized Parallel Executions in Behavior Trees

M Colledanchise, L Natale – arXiv preprint arXiv:1908.01539, 2019 – arxiv.org

… The parallel execution of independent behaviors can arise several concurrency problems in any modeling language and BTs are no exception. However, the parallel composition of BTs is less sensitive to dimensionality problems than a classical finite state machine [12] …

Embodiment, emotion, and chess: A system description

C Becker-Asano, N Riesterer… – Proceedings of the …, 2015 – pdfs.semanticscholar.org

… transitions between the talking head’s facial expressions neutral, sad, happy, and bored are controlled by a state machine that takes … Behavior module to integrate the chess move with emotional states into a behavior description • A Behavior Markup Language (BML) Interpreter …

Exploiting evolutionary algorithms to model nonverbal reactions to conversational interruptions in user-agent interactions

A Cafaro, B Ravenet… – IEEE Transactions on …, 2019 – ieeexplore.ieee.org

… Once a conversation state is marked, a Finite State Machine processes it, as depicted in Figure 3, and triggers the appropriate Annotation Event, which is a pair indicating the layer and corresponding label from our annotation schema …

Comparing and evaluating real time character engines for virtual environments

M Gillies, B Spanlang – Presence: Teleoperators and Virtual …, 2010 – MIT Press

Marco Gillies, Department of Computing, Goldsmiths, University of London, London, UK; Bernhard Spanlang, Departament de LSI, Universitat Politècnica de Catalunya, Spain, and EVENT Lab, Universitat de Barcelona, Spain. Presence, Vol. 19, No …

TTS-driven Embodied Conversation Avatar for UMB-SmartTV

M Rojc, Z Kačič, M Presker, I Mlakar – academia.edu

… [22] M. Rojc, I. Mlakar. Finite-state machine based distributed framework DATA for intelligent ambience systems … [25] H. Vilhjalmsson, N. Cantelmo, J. Cassell, NE Chafai, et al., The behavior markup language: Recent developments and challenges, In proc. of IVA’07 (2007) …

Adapting a virtual advisor’s verbal conversation based on predicted user preferences: A study of neutral, empathic and tailored dialogue

H Ranjbartabar, D Richards, AA Bilgin, C Kutay… – Multimodal …, 2020 – mdpi.com

Virtual agents that improve the lives of humans need to be more than user-aware and adaptive to the user’s current state and behavior. Additionally, they need to apply expertise gained from experience that drives their adaptive behavior based on deep understanding of the user’s …

Time to go ONLINE! A Modular Framework for Building Internet-based Socially Interactive Agents

M Polceanu, C Lisetti – Proceedings of the 19th ACM International …, 2019 – dl.acm.org

… Domain Scenario Authoring Components With EEVA, building scenarios to be interpreted by the application component consists in defining the structure of a state machine (SM) (note … Towards a common framework for multimodal generation: The behavior markup language …

Multimodal plan representation for adaptable BML scheduling

H van Welbergen, D Reidsma, J Zwiers – Autonomous agents and multi …, 2013 – Springer

… The type of behavior, and the constraints on its timing, are specified using the Behavior Markup Language (BML) … A dedicated BML Block management state machine automatically updates the timing of the BML Block Pegs in reaction to behavior plan modifications that occur at …

Multilingual and Multimodal Corpus-Based Text-to-Speech System – PLATTOS

M Rojc, I Mlakar – Ipšić I. (ed.). Speech and Language …, 2011 – books.google.com

… All TTS engine dequeues are empty at the start. Firstly, the tokenizer module starts generating tokens from the input text by using a finite-state machine (FSM) based lexical scanner … Then the FSM compiler is used for the construction of a tokenizer finite-state machine …

W3C based Interoperable Multimodal Communicator

D Park, D Gwon, J Choi, I Lee… – Journal of Broadcast …, 2015 – koreascience.or.kr

… SCXML (State Chart XML) State machine notation for control abstraction. World Wide Web Consortium [3] VoiceXML Voice Extensible Markup Language. World Wide Web Consortium [4] BML Common framework for multimodal generation. The behavior markup language …

A multimodal system for real-time action instruction in motor skill learning

I de Kok, J Hough, F Hülsmann, M Botsch… – Proceedings of the …, 2015 – dl.acm.org

… Then key-postures for the MPs are defined. Motion segmentation works via using a state machine: Each motor action and its MPs are represented as states … It is currently implemented as a finite state machine making decisions based on an information state …

Designing an API at an appropriate abstraction level for programming social robot applications

J Diprose, B MacDonald, J Hosking… – Journal of Visual …, 2017 – Elsevier

… of the specific type of primitive. For example, a finite state machine could be used to create dialogue between a human and a robot, by combining primitives for speaking and listening together. This is similar to the distinction …

Believable Virtual Characters in Human-Computer Dialogs.

Y Jung, A Kuijper, DW Fellner, M Kipp… – Eurographics …, 2011 – michaelkipp.de

… In the CrossTalk and COHIBIT systems, interactive embodied agents are controlled by the so-called sceneflow, an extended hierarchical finite state machine (FSM) where a node represents an atomic state or a supernode containing another FSM [GKKR03] …

Timing in multimodal turn-taking interactions: Control and analysis using timed petri nets

C Chao, AL Thomaz – Journal of Human-Robot Interaction, 2012 – dl.acm.org

… also to present a preliminary implementation of action interruptions based on MNI by extending a finite state machine (Thomaz & … a different approach; Holroyd’s Petri nets are dynamically generated and executed for the realization of Behavior Markup Language (BML), rather …

Extending NCL to support multiuser and multimodal interactions

ÁLV Guedes, RG de Albuquerque Azevedo… – Proceedings of the …, 2016 – dl.acm.org

… SCXML is a state machine-based language responsible for combining multiple input modalities; HTML is responsible for presenting the output modalities. Microsoft [19] and Google [10] propose multiuser support in gaming contexts …

Towards a simple augmented reality museum guide

J Skjermo, MJ Stokes, T Hallgren… – Proceedings of the …, 2010 – ntnuopen.ntnu.no

… The virtual guide’s behaviour was modelled using the state machine shown in Figure 4. The state machine in turn drives the guide’s movement system, animation system and audio … Towards a common framework for multimodal generation: The behavior markup language …

Where to look first? Behaviour control for fetch-and-carry missions of service robots

M Bajones, D Wolf, J Prankl, M Vincze – arXiv preprint arXiv:1510.01554, 2015 – arxiv.org

… and-carry scenario we compare two different implementations within a ROS environment and the SMACH state machine architecture … KR Thórisson, and H. Vilhjálmsson, “Towards a common framework for multimodal generation: The behavior markup language,” in Intelligent …

Now we’re talking: Learning by explaining your reasoning to a social robot

FM Wijnen, DP Davison, D Reidsma, JVD Meij… – ACM Transactions on …, 2019 – dl.acm.org

… The robot’s behaviors were specified using Behavior Markup Language (BML) [Kopp et al … The dialogue engine was constructed as a Finite State Machine (FSM) that modelled the interaction of the child with the learning materials as a collection of states, input events, transitions …

Procedural Reasoning System (PRS) architecture for agent-mediated behavioral interventions

E Begoli – IEEE SOUTHEASTCON 2014, 2014 – ieeexplore.ieee.org

… distribution of the components. We also favored SPADE because of its support of Finite State Machine (FSM) model, extensible knowledge base architecture and support of behavior-specific template handlers. 1) Verbal Behavior …

Timed Petri nets for fluent turn-taking over multimodal interaction resources in human-robot collaboration

C Chao, A Thomaz – The International Journal of Robotics …, 2016 – journals.sagepub.com

The goal of this work is to develop computational models of social intelligence that enable robots to work side by side with humans, solving problems and achieving task goals through dialogue and c…

Authoring multi-actor behaviors in crowds with diverse personalities

M Kapadia, A Shoulson, F Durupinar… – Modeling, Simulation and …, 2013 – Springer

… Behavior State Machine Specification. A behavior state defines an actor’s current goal and objective function. The goal is a desired state the actor must reach; the objective function is a weighted sum of costs the actor must optimize …

Social Spatial Behavior for 3D Virtual Characters

N Karimaghalou – 2013 – summit.sfu.ca

Social Spatial Behavior for 3D Virtual Characters, by Nahid Karimaghalou, B.Sc., University of Tehran, 2007. Thesis in Partial Fulfillment of the Requirements for the Degree of Master of Science in the School of Interactive Arts and Technology …

Case-Based Reasoning in the Cognition-Action Continuum

P Oztürk, A Tidemann – Norwegian Artificial Intelligence …, 2010 – researchgate.net

Norwegian Artificial Intelligence Symposium, Gjøvik, 22 November 2010. Case-Based Reasoning in the Cognition-Action Continuum, Pinar Oztürk and Axel Tidemann …

Qualitative Parameters for Modifying Animated Character Gesture Motion

P Pohl, A Heloir – 2010 – Citeseer

… Control of lip shapes, visualizing the spoken phonemes Table 2.1.: Main behavior categories of the Behavior Markup Language … Figure 2.7.: State machine learned from input data, original motion is from left to right. High probability arcs show cycles of motion primitives …

Interactive virtual characters

D Thalmann, NM Thalmann – SIGGRAPH Asia 2013 Courses, 2013 – dl.acm.org

Interactive Virtual Characters. Nadia Magnenat Thalmann, MIRALab, University of Geneva and NTU, Singapore; Daniel Thalmann, NTU, Singapore and EPFL, Switzerland. Syllabus: Introduction (Daniel Thalmann), 5 minutes …

A transparent and decentralized model of perception and action for intelligent virtual agents

G Anastassakis, T Panayiotopoulos – International Journal on …, 2014 – World Scientific

International Journal on Artificial Intelligence Tools Vol. 23, No. 4 (2014) 1460020 (23 pages) © World Scientific Publishing Company DOI: 10.1142/S0218213014600203 …

The conversational interface

MF McTear, Z Callejas, D Griol – 2016 – Springer

Michael McTear, Zoraida Callejas, David Griol. The Conversational Interface: Talking to Smart Devices …

Creating new technologies for companionable agents to support isolated older adults

CL Sidner, T Bickmore, B Nooraie, C Rich… – ACM Transactions on …, 2018 – dl.acm.org

Creating New Technologies for Companionable Agents to Support Isolated Older Adults. CANDACE L. SIDNER, Worcester Polytechnic Institute; TIMOTHY BICKMORE, Northeastern University; BAHADOR NOORAIE …

Dynamic aspects of character rendering in the context of multimodal dialog systems

YA Jung – 2011 – tuprints.ulb.tu-darmstadt.de

Dynamic Aspects of Character Rendering in the Context of Multimodal Dialog Systems. Dissertation approved by the Department of Computer Science (Fachbereich Informatik) of Technische Universität Darmstadt for the academic degree of Doktor-Ingenieur (Dr.-Ing.) …

The SEMAINE API: A component integration framework for a naturally interacting and emotionally competent Embodied Conversational Agent

M Schröder – 2011 – publikationen.sulb.uni-saarland.de

The SEMAINE API: A Component Integration Framework for a Naturally Interacting and Emotionally Competent Embodied Conversational Agent. Dissertation for the degree of Doctor of Engineering (Doktor der Ingenieurwissenschaften) …

Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots

M McTear – Synthesis Lectures on Human Language …, 2020 – morganclaypool.com

Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots. Synthesis Lectures on Human Language Technologies (Morgan & Claypool) …

A real-time architecture for conversational agents

BN Beidokht – 2012 – core.ac.uk

… By changing realizers we can move the system to another platform. There is a well-known standard for behavior specification called Behavior Markup Language (BML). The idea behind BML was that by conforming to the standard, robotic systems will be inde …

Commercialization of multimodal systems

PR Cohen, R Tumuluri – The Handbook of Multimodal-Multisensor …, 2019 – dl.acm.org

15 Commercialization of Multimodal Systems. Philip R. Cohen, Raj Tumuluri. 15.1 Introduction: This chapter surveys the broad and accelerating commercial activity in building products incorporating multimodal-multisensor interfaces …

Interactive narration with a child: impact of prosody and facial expressions

O Şerban, M Barange, S Zojaji, A Pauchet… – Proceedings of the 19th …, 2017 – dl.acm.org

… Later, DiaWOZ-II proposes a text interface for a tutoring system. [48] creates a web interface to simulate dialogue scenarios in a restaurant. [27] includes a dialogue model based on a finite-state machine, driven by a pilot, with the possibility to add more states at run time …

Scenarios in virtual learning environments for one-to-one communication skills training

R Lala, J Jeuring… – International …, 2017 – educationaltechnologyjournal …

A scenario is a description of a series of interactions between a player and a virtual character for one-to-one communication skills training, where at each step the player is faced with a choice between statements. In this paper, we analyse the characteristics of scenarios and provide …

Supervised Hybrid Expression Control Framework for a Lifelike Affective Avatar

S Lee – 2013 – indigo.uic.edu

… In (b), acquired motion data was processed and retargeted to his avatar model to reconstruct his real body gestures and mannerism … Figure 24. Semi-Deterministic Hierarchical Finite State Machine (SDHFSM) for motion synthesis …

Timing multimodal turn-taking in human-robot cooperative activity

C Chao – 2015 – smartech.gatech.edu

TIMING MULTIMODAL TURN-TAKING IN HUMAN-ROBOT COOPERATIVE ACTIVITY. A Thesis Presented to The Academic Faculty by Crystal Chao In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the School of Interactive Computing …

Multimodal integration for interactive conversational systems

M Johnston – The Handbook of Multimodal-Multisensor Interfaces …, 2019 – dl.acm.org

… A finite-state automaton, or finite-state acceptor is a finite state machine that operates over a single stream of input symbols … A finite-state transducer is a finite-state machine that operates over two streams, an input stream, and an output stream …

Real time animation of virtual humans: a trade-off between naturalness and control

H Van Welbergen, BJH Van Basten… – Computer Graphics …, 2010 – Wiley Online Library

Abstract Virtual humans are employed in many interactive applications using 3D virtual environments, including (serious) games. The motion of such virtual humans should look realistic (or ‘natural’…

Utility Learning, Non-Markovian Planning, and Task-Oriented Programming Language

N Shukla – 2019 – escholarship.org

… Causal And-Or graph (STC-AOG) as a (stochastic) context-free grammar. Doing so reminds us that the STC-AOG has the expressive power to represent tasks in ways a finite-state machine (or regular grammar) may never fully capture …

An eye gaze model for controlling the display of social status in believable virtual humans

M Nixon, S DiPaola, U Bernardet – 2018 IEEE Conference on …, 2018 – ieeexplore.ieee.org

… [29] proposed a parametric gaze and head movement model that is linked with the emotional state machine developed at MIRALab [30], where mood is represented with Mehrabian’s Pleasure-Arousal-Dominance (PAD) Temperament Model [31] …

Extending multimedia languages to support multimodal user interactions

S Colcher – 2017 – maxwell.vrac.puc-rio.br

… BML Behavior Markup Language DOM Document Object Model … MMI [14] MCs with LifeCycle messages DoneNotification with EMMA state machine (SCXML) SCXML (ECMAScript) sequence of LifeCycle messages (optionally inside if-then-else) MCs with LifeCycle messages …

Generation of communicative intentions for virtual agents in an intelligent virtual environment: application to virtual learning environment

B Nakhal – 2017 – tel.archives-ouvertes.fr

… VH Virtual Human MARC Multimodal Affective and Reactive Character FML Function Markup Language BML Behavior Markup Language SAIBA Situation, Agent, Intention, Behavior and Animation FML-APML FML-Affective Presentation Markup Language …

Realization and high level specification of facial expressions for embodied agents

R Paul – 2010 – essay.utwente.nl

… Chapter 3: Behavior Markup Language. This chapter describes Behavior Markup Language (BML), the design of a parser that reads BML and stores it in an internal representation, and includes some words on scheduling of behaviors …

RASCALLI Platform: a dynamic modular runtime environment for agent modeling

C Eis – 2008 – repositum.tuwien.at

RASCALLI Platform: A Dynamic Modular Runtime Environment for Agent Modeling. Diploma thesis (Diplomarbeit) submitted for the academic degree of Diplom-Ingenieur in the Computer Science (Informatik) program by Christian Eis, student ID (Matrikelnummer) 9325145 …

Physical engagement as a way to increase emotional rapport in interactions with embodied conversational agents

IG Sepulveda – 2015 – search.proquest.com

PHYSICAL ENGAGEMENT AS A WAY TO INCREASE EMOTIONAL RAPPORT IN INTERACTIONS WITH EMBODIED CONVERSATIONAL AGENTS IVAN GRIS SEPULVEDA Department of Computer Science Charles Ambler, Ph.D. Dean of the Graduate School …

Physical engagement as a way to increase emotional rapport in interactions with embodied conversational agents

I Gris Sepulveda – 2015 – scholarworks.utep.edu

University of Texas at El Paso DigitalCommons@UTEP Open Access Theses & Dissertations 2015-01-01 Physical Engagement As A Way To Increase Emotional Rapport In Interactions With Embodied Conversational Agents …

A virtual emotional freedom practitioner to deliver physical and emotional therapy

H Ranjbartabar – 2016 – researchonline.mq.edu.au

… procedure … Figure 11: Architecture for virtual EFT practitioner: EFFIE … Figure 12: Mecanim’s Animation State Machine created for Effie … Figure …

User handover in a cross media device environment, a coaching service for physical activity

W Wieringa – 2012 – essay.utwente.nl

USER HANDOVER IN A CROSS MEDIA DEVICE ENVIRONMENT: A Coaching Service for Physical Activity, Wilko Wieringa … Wilko Wieringa: User Handover in a Cross Media Device Environment, A Coaching Service for Physical Activity, © August 2012 …

Learning socio-communicative behaviors of a humanoid robot by demonstration

DC Nguyen – 2018 – hal.archives-ouvertes.fr

… Contents … Appendix A: Sensorimotor Calibration for Pointing (A.1 Comments) … Appendix B: Finite State Machine of the RL/RI scenario (B.1 FSM) …

Modeling Affective System and Episodic Memory for Social Companions

Z Juzheng – 2017 – dr.ntu.edu.sg

… makes decisions with a hierarchical task network (HTN) planner and organizes dialogs with a finite-state-machine (FSM)-based dialog manager. By integrating an emotion module and a database-like memory module, Eva can generate dialogs based on predefined scripts with …

Territoriality and Visible Social Commitment for Virtual Agents

J Rossi, L Veroli, M Massetti – skemman.is

… the agent: even though it is a big advantage, it could be very difficult to define all the relationships between objects, that could be used together, because all the interactions are handled by finite state machine (FSM) or behaviour trees. Due to this …

SimCoach evaluation: a virtual human intervention to encourage service-member help-seeking for posttraumatic stress disorder and depression

D Meeker, JL Cerully, MD Johnson, N Iyer, JR Kurz… – 2015 – apps.dtic.mil

… Abbreviations BML Behavior Markup Language CI confidence interval DCoE Defense Centers of Excellence for Psychological Health and Traumatic Brain Injury DM dialogue management DoD US Department of Defense …

‘Implicit Creation’: Non-Programmer Conceptual Models for Authoring in Interactive Digital Storytelling

UM Spierling – 2012 – pearl.plymouth.ac.uk

‘IMPLICIT CREATION’ – NON-PROGRAMMER CONCEPTUAL MODELS FOR AUTHORING IN INTERACTIVE DIGITAL STORYTELLING by ULRIKE MARTINA SPIERLING A thesis submitted to the University of Plymouth in partial fulfilment for the degree of …

Authoring and Evaluating Autonomous Virtual Human Simulations

MT Kapadia – 2011 – search.proquest.com

… 7.1.2 Specialization … 7.1.3 Behavior State Machine Specification … 7.2 Scenario Generation … The state machine transitions are based on speed thresholds, and the animations are also played at different speeds to match the simulated speed of the agents …
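
The excerpt describes a locomotion state machine: transitions are chosen by speed thresholds, and clip playback is rescaled to the agent's simulated speed. The sketch below shows roughly what that could look like. The threshold values, clip speeds, and function names are hypothetical placeholders and are not taken from Kapadia's thesis.

```python
from typing import Optional

# (state name, minimum speed in m/s to enter it, speed of the authored clip in m/s)
# All numbers are hypothetical placeholders.
LOCOMOTION_STATES: list[tuple[str, float, Optional[float]]] = [
    ("idle", 0.0, None),
    ("walk", 0.1, 1.4),
    ("run", 2.5, 4.0),
]


def select_state(speed: float) -> str:
    """Pick the highest state whose speed threshold the agent has reached."""
    chosen = LOCOMOTION_STATES[0][0]
    for name, threshold, _ in LOCOMOTION_STATES:
        if speed >= threshold:
            chosen = name
    return chosen


def playback_rate(speed: float) -> float:
    """Scale clip playback so the animation matches the simulated speed."""
    state = select_state(speed)
    clip_speed = next(c for n, _, c in LOCOMOTION_STATES if n == state)
    return 1.0 if clip_speed is None else speed / clip_speed


for v in (0.0, 0.7, 1.4, 3.0):
    print(v, select_state(v), round(playback_rate(v), 2))
```

Scaling playback by `speed / clip_speed` keeps the animation roughly in step with the simulated velocity. A fuller system would likely also add hysteresis or blending around each threshold so that an agent hovering near a boundary does not flicker between states.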

Creating 3D Animated Human Behaviors for Virtual Worlds

JM Allbeck – 2009 – Citeseer

… Figure 5: Action hierarchy diagram. … Figure 6: Finite state machine, AgentNet, that processes an agent’s queue of actions. … Figure 7: Finite state machine, PARNet, that operates as the process manager for each agent …

SimCoach Evaluation

D Meeker, JL Cerully, MD Johnson, N Iyer, JR Kurz… – 2015 – rand.org

… Abbreviations BML Behavior Markup Language CI confidence interval DCoE Defense Centers of Excellence for Psychological Health and Traumatic Brain Injury DM dialogue management DoD US Department of Defense …

Conceptual Modeling (CM) for Military Modeling and Simulation (M&S)

NA TREATY – foi.se

… C2IEDM Command and Control Information Exchange Data Model C-BML Coalition Battle Management Language CBML Cell Behavior Markup Language CIM Computation Independent Model CM Conceptual Model, Conceptual Modeling CML Conceptual Modeling …

Motion Capture Based Animation for Virtual Human Demonstrators: Modeling, Parameterization and Planning

Y Huang – 2012 – search.proquest.com

… For instance, Lau and Kuffner [LK05] plan over a behavior-based finite state machine (FSM) of motions [LK06], Choi et al … For explicit behavior modeling, [KKM06] describes Behavior Markup Language (BML) within a framework to unify a multi-modal behavior generation …

Gaze Mechanisms for Situated Interaction with Embodied Agents

S Andrist – 2016 – search.proquest.com

… Behavior Markup Language (BML) (Vilhjálmsson et al., 2007), developed as one of the three stages of SAIBA, defines multimodal behaviors such as gaze, head, face, body, gesture, and speech in a human-readable XML format …
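
The sketch below makes the XML form concrete. It uses Python's standard `xml.etree.ElementTree` to build a small BML block in which a gaze shift and a head nod are synchronized to a speech behavior through sync-point references such as `speech1:start`. It assumes the BML 1.0 namespace and the element and attribute names `bml`, `speech`, `text`, `gaze`, `head`, `characterId`, `influence`, and `lexeme`. Check these against the BML 1.0 specification before relying on them. The character name, gaze target, and IDs are placeholders.

```python
import xml.etree.ElementTree as ET

# Assumed BML 1.0 namespace URI; verify against the specification.
BML_NS = "http://www.bml-initiative.org/bml/bml-1.0"


def q(tag: str) -> str:
    """Qualify a tag name with the BML namespace (ElementTree's {uri}tag form)."""
    return f"{{{BML_NS}}}{tag}"


def build_bml_block(block_id: str, character: str, sentence: str, gaze_target: str) -> str:
    """Return a BML block: speak a sentence, look at a target, nod at the end."""
    ET.register_namespace("", BML_NS)  # serialize BML as the default namespace
    root = ET.Element(q("bml"), {"id": block_id, "characterId": character})

    speech = ET.SubElement(root, q("speech"), {"id": "speech1"})
    ET.SubElement(speech, q("text")).text = sentence

    # Turn eyes and head toward the target when the speech starts.
    ET.SubElement(root, q("gaze"), {
        "id": "gaze1", "target": gaze_target,
        "influence": "HEAD", "start": "speech1:start",
    })
    # Nod once the utterance has ended.
    ET.SubElement(root, q("head"), {"id": "nod1", "lexeme": "NOD", "start": "speech1:end"})
    return ET.tostring(root, encoding="unicode")


print(build_bml_block("bml1", "Alice", "Nice to meet you.", "user"))
```

In this sketch, the behaviors are timed relative to one another through sync points instead of absolute timestamps. This matches the excerpt's description of BML as a declarative, human-readable specification that a separate realizer is left to schedule.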