Notes:

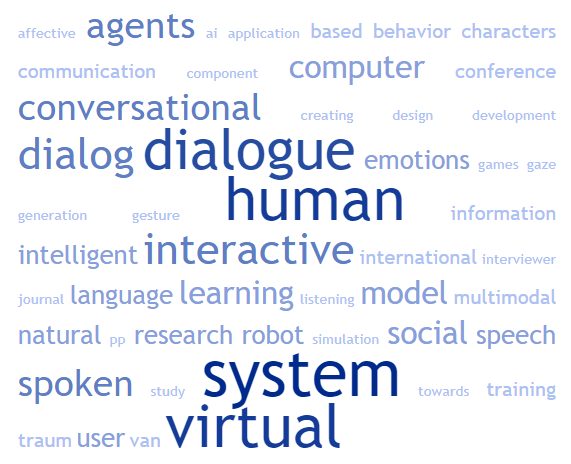

The following references discuss virtual humans and their use in applications such as spoken dialogue systems and interactive narratives. Topics include how a virtual human's personality and emotions affect user experience, the challenges of building realistic virtual humans, the use of virtual humans in training and education, and the role of nonverbal behavior in mediated interaction with virtual humans. Several references also cover methods and technologies from natural language processing and spoken language systems, including techniques for processing and analyzing language data. Some point to future directions for virtual human technology, including affective computing, machine learning, and computer vision.

See also:

100 Best Virtual Human Videos | Multimodal Agents in Unity3d | Virtual Human Toolkit

Assembling the jigsaw: How multiple open standards are synergistically combined in the HALEF multimodal dialog system

V Ramanarayanan, D Suendermann-Oeft… – … Interaction with W3C …, 2017 – Springer

… Prominent examples include: CMU’s Olympus [4]; Alex, by Charles University in Prague [12]; InproTK, an incremental spoken dialog system; OpenDial; the Virtual Human Toolkit [9]; Metalogue, a multimodal dialog system; IrisTK, a multimodal dialog system …

A conversational dialogue manager for the humanoid robot ERICA

P Milhorat, D Lala, K Inoue, T Zhao… – Proceedings of …, 2017 – sap.ist.i.kyoto-u.ac.jp

… discourse features into confidence scoring of intention recognition results in spoken dialogue systems. Speech Communication 48(3-4), 417–436 (2006). DOI 10.1016/j.specom.2005.06.011 15. Leuski, A., Traum, D.: NPCEditor: Creating virtual human dialogue using …

Culture-specific models of negotiation for virtual characters: multi-attribute decision-making based on culture-specific values

E Nouri, K Georgila, D Traum – AI & society, 2017 – Springer

… with people (only with other virtual humans). Unlike the model of Mascarenhas et al. (2009), the MARV model can be used to make a variety of decisions in different contexts. As described in Sect. 4.2, it has been integrated into a virtual human dialog system that supports both …

Laying Down the Yellow Brick Road: Development of a Wizard-of-Oz Interface for Collecting Human-Robot Dialogue

C Bonial, M Marge, A Foots, F Gervits, CJ Hayes… – arXiv preprint arXiv …, 2017 – arxiv.org

… Skantze, G. 2003. Exploring human error handling strategies: Implications for spoken dialogue systems. In ISCA Tutorial and Research Workshop on Error Handling in Spoken Dialogue Systems … Dealing with Doctors: A Virtual Human for Non-team Interaction …

Listen to my body: Does making friends help influence people?

R Artstein, D Traum, J Boberg, A Gainer… – Proceedings of the …, 2017 – people.ict.usc.edu

… Human participants interact with two agents – a Nao robot and a virtual human – in four dialogue scenarios: one involving building familiarity … In dialogue system research, many have contrasted functional or task-oriented dialogue, aimed at joint completion of a specific task …

A roadmap for natural language processing research in information systems

D Liu, Y Li, MA Thomas – … of the 50th …, 2017 – scholarspace.manoa.hawaii.edu

… concerns the refinement and application of NLP techniques to solve real-world problems [3], such as creating spoken dialogue systems [4], speech … for IE and IR, such as a tool for mining Wikipedia [52], automatic case acquisition from texts [53], creating virtual human dialogue [4 …

Perceived emotional intelligence in virtual agents

Y Yang, X Ma, P Fung – Proceedings of the 2017 CHI Conference …, 2017 – dl.acm.org

… 2014. SimSensei Kiosk: A virtual human interviewer for healthcare decision support … 2016. Zara: A Virtual Interactive Dialogue System Incorporating Emotion, Sentiment and Personality Recognition. In Proceedings of COLING 2016, 278-281 …

Computational Approaches to Dialogue

D Traum – The Routledge Handbook of Language and Dialogue, 2017 – books.google.com

… L.-P. (2013), Verbal indicators of psychological distress in interactive dialogue with a virtual human … A., Gerten, J. & Traum, D. (2008), From domain specification to virtual humans: An integrated … J. & Lemon, O. (2006), User simulation for spoken dialogue systems: Learning and …

Selecting and expressing communicative functions in a SAIBA-compliant agent framework

A Cafaro, M Bruijnes, J van Waterschoot… – … on Intelligent Virtual …, 2017 – Springer

… Natural Language Engineering 6(3&4), 323–340 (2000) 9. Leuski, A., Traum, D.: NPCEditor: Creating Virtual Human Dialogue Using Information Retrieval Techniques … ter Maat, M., Heylen, D.: Flipper: An Information State Component for Spoken Dialogue Systems …

SHIHbot: A Facebook chatbot for sexual health information on HIV/AIDS

J Brixey, R Hoegen, W Lan, J Rusow, K Singla… – Proceedings of the 18th …, 2017 – aclweb.org

… Evaluation will include metrics for satisfying social work goals as well as dialogue system goals. 2 Motivation … Anton Leuski and David Traum. 2011. NPCEditor: Creating virtual human dialogue using information retrieval techniques. AI Magazine, 32(2): 42-56 …

Scenarios in virtual learning environments for one-to-one communication skills training

R Lala, J Jeuring… – International …, 2017 – educationaltechnologyjournal …

… Claudio et al. (2015) demonstrate Virtual Humans (VH) in an interactive application, the goal of which is to train and assess self-medication consultation skills … From the selection, we looked at: Dialoguer (www.dialoguer.info). Dialogue System (www.pixelcrushers.com) …

An Incremental Response Policy in an Automatic Word-Game

E Pincus, D Traum – IVA 2017 Workshop on Conversational …, 2017 – people.ict.usc.edu

… In this work we present updates to Mr. Clue, a fully automated embodied dialogue system that plays the role of clue-giver in a word-guessing … iteration of an earlier version of the system [1]. The early version of the system was built on components of the Virtual Human Toolkit [4 …

Lessons in Dialogue System Deployment

A Leuski, R Artstein – Proceedings of the 18th Annual SIGdial Meeting on …, 2017 – aclweb.org

… Abstract We analyze deployment of an interactive dialogue system in an environment where deep technical expertise might not be readily available … The initial NDT system was created from components of the Virtual Human Toolkit (Hartholt et al., 2013) …

A modular, multimodal open-source virtual interviewer dialog agent

K Cofino, V Ramanarayanan, P Lange… – Proceedings of the 19th …, 2017 – dl.acm.org

… Keelan Evanini Educational Testing Service R&D Princeton, NJ kevanini@ets.org ABSTRACT We present an open-source multimodal dialog system equipped with a virtual human avatar interlocutor. The agent, rigged in Blender …

R3D3 in the Wild: Using A Robot for Turn Management in Multi-Party Interaction with a Virtual Human

M Theune, D Wiltenburg, M Bode… – 1st Interaction with …, 2017 – research.utwente.nl

… work has also looked at the dynamics of interaction with multiple virtual humans [7] and … on – attention management and turn-taking in multi-party interaction with a virtual human/robot duo … Nishimura, Y. Todo, K. Yamamoto, and S. Nakagawa, “Chat-like spoken dialog system for a …

Evaluation of Question-Answering System About Conversational Agent’s Personality

H Sugiyama, T Meguro, R Higashinaka – Dialogues with Social Robots, 2017 – Springer

… References. 1. Shibata, M., Nishiguchi, T., Tomiura, Y.: Dialog system for open-ended conversation using web documents … 199–208 (2015). 15. Leuski, A., Traum, D.: Creating virtual human dialogue using information retrieval techniques. AI Mag …

Seyedeh Zahra Razavi

CK Learning, M Learning – cs.rochester.edu

… manager for a virtual conversational agent”, Position paper, in Proceedings, Young Researchers Roundtable on Spoken Dialogue Systems (YRRSDS 2016), ICT, Los Angeles, Sept.16, 2016, pp. 22-23 SZ Razavi and LK Schubert, “An automated virtual human for helping teens …

Knowledge Acquisition

M Blackburn – courses.washington.edu

… Suendermann-Oeft. 2015. HALEF: an open-source standard-compliant telephony-based modular spoken dialog system – A review and an outlook … Update the dialog and style of the virtual human … from the standard questionnaires? Virtual humans all female …

Dialogues with Social Robots

K Jokinen, G Wilcock – Springer

… of Southern California) spoke on The Role of a Lifetime: Dialogue Models for Virtual Human Role-players … Professor Traum presented examples of role-play dialogue systems from a wide variety of activities, genres, and roles, focussing on virtual humans created at the …

Managing Casual Spoken Dialogue Using Flexible Schemas, Pattern Transduction Trees, and Gist Clauses

SZ Razavi, R EDU, LK Schubert, MR Ali, ME Hoque – cs.rochester.edu

… Optimizing the turn-taking behavior of task-oriented spoken dialog systems. ACM Trans. on Speech and Language Processing (TSLP), 9, 1. Razavi, SZ, Ali, MR, Smith, TH, Schubert, LK, & Hoque, ME (2016). The LISSA virtual human and ASD teens: An overview of initial …

Automatic question generation for virtual humans

EL Fasya – 2017 – essay.utwente.nl

… user’s leadership skills in a warzone. Virtual humans can also be implemented in museums … The architecture of a virtual human is more complex than the typical architecture of spoken dialogue systems because it involves more modules such as nonverbal behavior …

Social Gaze Model for an Interactive Virtual Character

B van den Brink, Z Yumak – International Conference on Intelligent …, 2017 – Springer

… Gaze movement is important for modeling realistic social interactions with virtual humans … The Virtual Human Controller receives the information about where to look at from the Engagement … D., Horvitz, E.: Learning to predict engagement with a spoken dialog system in open …

2. History of Conversational Systems

T Nishida – 2017 – ii.ist.i.kyoto-u.ac.jp

… Timeline, 1970–2010: natural language dialogue systems; speech dialogue systems; multimodal dialogue systems; embodied conversational agents / intelligent virtual humans; story understanding systems; conversational systems; transactional systems …

19 Speech Synthesis: State of the Art and Challenges for the Future

K Georgila – Social Signal Processing, 2017 – books.google.com

… 109–112). Georgila, K., Black, AW, Sagae, K., & Traum, D.(2012). Practical evaluation of human and synthesized speech for virtual human dialogue systems. In Proceedings of the International Conference on Language Resources and Evaluation (pp. 3519–3526) …

I Probe, Therefore I Am: Designing a Virtual Journalist with Human Emotions

KK Bowden, T Nilsson, CP Spencer, K Cengiz… – arXiv preprint arXiv …, 2017 – arxiv.org

… 3]. To achieve a natural conversational flow, it is necessary for virtual humans to be … developed by the ARIA-VALUSPA consortium and tasked to produce a virtual human journalist capable of … continuously carried out in parallel to the development of the dialogue system in order …

An Agent-Based Aggression De-escalation Training Application for Football Referees

T Bosse, W van Breda, N van Dijk, J Scholte – Portuguese Conference on …, 2017 – Springer

… suspects [4]. Another project delivered a role-playing session with a virtual human to help officers learn and practice interpersonal and counseling skills [7]. Likewise, virtual humans are used in … to engage in a conversation with a training agent, a dialogue system based on …

To Sum Up

E Weigand – The Routledge Handbook of Language and Dialogue, 2017 – taylorfrancis.com

… These systems are also called virtual humans (Gratch et al … CLASS Workshop on Natural, Intelligent and Effective Interaction in Multimodal Dialogue Systems, Edmonton … Morency, L.-P. (2013), Verbal indicators of psychological distress in interactive dialogue with a virtual human …

Reinforcement Learning Based Conversational Search Assistant

M Aggarwal, A Arora, S Sodhani… – arXiv preprint arXiv …, 2017 – arxiv.org

… dialogue [6]. A set of question answering specific tasks have been developed to test the reasoning and deduction ability of dialogue systems [15 … Consequently, in order to bootstrap the training process we designed a virtual human user to imitate a real human user for the search …

Social influence of humor in virtual human counselor’s self-disclosure

SH Kang, DM Krum, P Khooshabeh… – … and Virtual Worlds, 2017 – Wiley Online Library

… The current study is part of ongoing research investigating virtual humans in counseling interviews and the first step to explore a virtual human’s jokes in the interviews. Future studies would use conversational spontaneous jokes with a machine dialog system …

Joint Learning of Response Ranking and Next Utterance Suggestion in Human-Computer Conversation System

R Yan, D Zhao – Proceedings of the 40th International ACM SIGIR …, 2017 – dl.acm.org

Joint Learning of Response Ranking and Next Utterance Suggestion in Human-Computer Conversation System. Rui Yan, Institute of Computer Science and Technology, Peking University, Beijing 100871, China, ruiyan@pku.edu.cn …

Joint, incremental disfluency detection and utterance segmentation from speech

J Hough, D Schlangen – Proceedings of the 15th Conference of the …, 2017 – aclweb.org

… Joint, Incremental Disfluency Detection and Utterance Segmentation from Speech Julian Hough and David Schlangen Dialogue Systems Group // CITEC // Faculty of Linguistics and Literature Bielefeld University firstname.lastname@uni-bielefeld.de Abstract …

Computing negotiation update semantics in multiissue bargaining dialogues

V Petukhova, H Bunt… – Proceedings of the …, 2017 – pdfs.semanticscholar.org

… development. Most research in human-computer interaction modelling and dialogue systems design so far has been done in the area of task-oriented systems (TOS) with well-defined tasks in restricted domains. Research …

Automatic Measures to Characterise Verbal Alignment in Human-Agent Interaction

GD Duplessis, C Clavel… – 18th Annual Meeting of …, 2017 – hal.archives-ouvertes.fr

… These measures rely on efficient algorithms making an online usage in a dialogue system realistic … For both virtual human agents, wizards were rather free but followed some guidelines. First, the goal in both negotiations is for the agent to win …

Utterance retrieval based on recurrent surface text patterns

GD Duplessis, F Charras, V Letard, AL Ligozat… – … on Information Retrieval, 2017 – Springer

… 363–370 (2005). 5. Gandhe, S., Traum, DR: Surface text based dialogue models for virtual humans … 14, pp. 1188–1196 (2014). 8. Lee, C., Jung, S., Kim, S., Lee, GG: Example-based dialog modeling for practical multi-domain dialog system …

Affect-lm: A neural language model for customizable affective text generation

S Ghosh, M Chollet, E Laksana, LP Morency… – arXiv preprint arXiv …, 2017 – arxiv.org

… is of great importance to understanding spoken language systems, particularly for emerging applications such as dialogue systems and conversational … by Gratch (2014) consists of 70+ hours of dyadic interviews between a human subject and a virtual human, where the …

Challenges in Building Highly Interactive Dialogue Systems

AI Magazine – AI Magazine, 2017 – cs.utep.edu

… SimSensei Kiosk: A Virtual Human Interviewer for Healthcare Decision Support … Policy Committee for Adaptation in Multi-Domain Spoken Dialogue Systems … Can Virtual Humans Be More Engaging Than Real Ones? In Human-Computer Interaction …

User interaction for interactive storytelling

M Cavazza, F Charles – Handbook of Digital Games and Entertainment …, 2017 – Springer

… 3), 204–210 (1997) W. Swartout, J. Gratch, JR Hill, E. Hovy, S. Marsella, J. Rickel et al., Toward virtual humans … tutorial and research workshop on Perception and Interactive Technologies for Speech-Based Systems: Perception in Multimodal Dialogue Systems, Springer-Verlag …

20 Body Movements Generation for Virtual Characters and Social Robots

A Beck, Z Yumak… – Social Signal …, 2017 – books.google.com

… so the movements need to be adapted to the specifics of the virtual human and the … State of the art virtual humans and social robots are not yet able to display … Spoken and multimodal dialog systems and applications–rigid head motion in expressive speech animation: Analysis …

Social Robots and Other Relational Agents to Improve Patient Care

VHS Wang, TF Osborne – Using Technology to Improve Care of …, 2017 – books.google.com

… Psychological Review, 114 (4), 864. Georgila, K., Black, A., Sagae, K., & Traum, DR (2012). Practical evaluation of human and synthesized speech for virtual human dialogue systems (pp. 3519–3526). Retrieved from https://www.researchgate …

The CaMeLi Framework—A Multimodal Virtual Companion for Older Adults

NA Nijdam, D Konstantas – … and Selected Results from the SAI …, 2017 – books.google.com

… Figure 5 illustrates the 3D virtual human, which closely simulates human conversational behaviour through the use of synthesized voice and … Technology (2003) 8. Bickmore, TW, Schulman, D., Sidner, CL: A reusable framework for health counseling dialogue systems based on …

Computational gesture research

S Kopp – Why Gesture?: How the hands function in speaking …, 2017 – books.google.com

… a thorough evaluation study on iconic gestures of a virtual human and, despite … gesturing outperforms average gesturing–evaluating gesture production in virtual humans.” In Proceedings … conversational agents.” In Advances in Natural Multimodal Dialogue Systems, JCJ van …

Towards the Instantaneous Expression of Emotions with Avatars

R Boulic, J Ahn, S Gobron, N Wang, Q Silvestre… – Cyberemotions, 2017 – Springer

… Our chatting system allows users to (1) select their virtual human avatar, (2) move their … Thalmann, D., Boulic, R.: An NVC emotional model for conversational virtual humans in a … André, E., Dybkjær, L., Minker, W., Heisterkamp, P. (eds.) Affective Dialogue Systems: Tutorial and …

An agent-based modeling framework for online collective emotions

D Garcia, A Garas, F Schweitzer – Cyberemotions, 2017 – Springer

… Our agent-based approach is used for the next generation of emotionally reactive dialog systems (Rank et al … Schema of the individual emotions model for virtual humans … Thanks to this, virtual human platforms can display rich facial expressions that cover a large variety of states …

Developing Virtual Patients with VR/AR for a natural user interface in medical teaching

MA Zielke, D Zakhidov, G Hardee… – Serious Games and …, 2017 – ieeexplore.ieee.org

… Complex virtual patient architectures may consist of many different modular components for user input, speech recognition, patient response, animation, audio, and lip syncing that must work in tandem to present a realistic virtual human, potentially making the development of …

A Robot Commenting Texts in an Emotional Way

L Volkova, A Kotov, E Klyshinsky, N Arinkin – Conference on Creativity in …, 2017 – Springer

… (eds.): Affective Dialogue Systems … Springer, Heidelberg (2004). doi:10.1007/978-3-540-24842-2_15. 5. Bergmann, K., Branigan, HP, Kopp, S.: Exploring the alignment space – lexical and gestural alignment with real and virtual humans. Front. ICT …

Simulating listener gaze and evaluating its effect on human speakers

L Frädrich, F Nunnari, M Staudte, A Heloir – International Conference on …, 2017 – Springer

… simulation using eye-tracking and virtual humans. IEEE Transactions on Affective Computing 5(3), 238–250 (2014) 3. Heylen, D., van Es, I., Nijholt, A., van Dijk, B.: Controlling the gaze of conversational agents. In: Advances in Natural Multimodal Dialogue Systems, pp …

SAMBA: A self-aware health monitoring architecture for distributed industrial systems

LC Siafara, HA Kholerdi, A Bratukhin… – … Society, IECON 2017 …, 2017 – ieeexplore.ieee.org

… Systems, 2011. [22] J. Van Oijen, W. Van Doesburg, and F. Dignum. Goal-based communication using bdi agents as virtual humans in training: An ontology driven dialogue system. Springer, 2011. [23] S. Franklin, S. Strain, R. McCall, and B. Baars …

Toward an agent-based framework for human resource management

B Rachid, T Mohamed, KM Ali – Logistics and Supply Chain …, 2017 – ieeexplore.ieee.org

… 8(3), pp. 323-364. [12] J. Oijen, WA van Doesburg, and F. Dignum, Goal-based communications using BDI agents as virtual humans in training: An ontology driven dialogue systems. In J. Dignum (Ed.), Agents for games and simulations II , 2011. pp. 38-52 …

MultiSense—Context-aware nonverbal behavior analysis framework: A psychological distress use case

G Stratou, LP Morency – IEEE Transactions on Affective …, 2017 – ieeexplore.ieee.org

… project [3], the scope of which includes the development of virtual humans that are … of behavioral analysis, we allow external VH architecture components (e.g., the dialog system) to log … For example, in a virtual human avatar scenario, MultiSense can broadcast low level tracking …

AI in Informal Science Education: Bringing Turing Back to Life to Perform the Turing Test

AJ Gonzalez, JR Hollister, RF DeMara, J Leigh… – International Journal of …, 2017 – Springer

… The body of literature on intelligent virtual humans is very large … some historically notable attempts to realize the dream of someday creating a virtual human equal to … With a more sophisticated dialog system than Mel, Sergeant Blackwell’s capabilities for conversation provide the …

The Interface

C Draude – Computing Bodies, 2017 – Springer

… system is formed. What is of major interest for this analysis is how the bridging of this gap – the interface – gains importance and, with the embodied agent/Virtual Human, finally receives a life of its own (at least in concept). In her …

Changing stigmatizing attitudes to mental health via education and contact with embodied conversational agents

J Sebastian, D Richards – Computers in Human Behavior, 2017 – Elsevier

… 2014). In line with social learning theory, Cordar et al. (2015) showed that virtual humans could be used to model good and bad conflict resolution strategies and overcome scheduling issues involved in other forms of training. Fox …

Recurrent Neural Network to Deep Learn Conversation in Indonesian

A Chowanda, AD Chowanda – Procedia Computer Science, 2017 – Elsevier

… 5. Zhu, W., Chowanda, A., Valstar, M.. Topic switch models for dialogue management in virtual humans. In: International Conference on Intelligent Virtual Agents … Serban, IV, Lowe, R., Charlin, L., Pineau, J.. A survey of available corpora for building data-driven dialogue systems …

A robotic couples counselor for promoting positive communication

D Utami, TW Bickmore, LJ Kruger – Robot and Human …, 2017 – ieeexplore.ieee.org

… speaker using both robot gaze and speech-based reference to users’ given names (programmed into the dialogue system prior to … 1) Feelings of no-judgment: Consistent with previous research on increased self-disclosure with virtual humans [Gale,Gratch, 2014], participants …

Programming challenges of chatbot: Current and future prospective

AM Rahman, A Al Mamun… – … Conference (R10-HTC) …, 2017 – ieeexplore.ieee.org

… 48, no. 4, 2005, pp. 612-618. [6] R. Hubal et al., “Avatalk virtual humans for training with computer generated forces,” presented at the Proceedings of CGF-BR … Example-based dialog modeling for practical multi-domain dialog system,” Speech Communication, vol. 51, 2009 …

Towards a spoken dialog system capable of acoustic-prosodic entrainment

A Weise – 2017 – pdfs.semanticscholar.org

Towards a spoken dialog system capable of acoustic-prosodic entrainment. Andreas Weise. A Literature Review (Second Exam) submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy … 2 Entrainment and dialog systems …

Agent-Oriented Methodology for Designing 3D Animated Characters

GLC Wyai, C WaiShiang, N Jali – … , Electronic and Computer …, 2017 – journal.utem.edu.my

… 8, no. 2, pp. 5-10, 2016. [16] O. Van, W. Joost, and D. Frank, “Goal-based communication using BDI agents as virtual humans in training: an ontology driven dialogue system,” in Proc. of Agents for games and simulations II, 2011, pp. 38- 52 …

Ask-Elle is a tutor for learning the higher-order, strongly-typed functional programming language Haskell. It supports the stepwise development of Haskell programs by …

A Gerdes, B Heeren, J Jeuring… – International Journal of …, 2017 – infona.pl

… learning. In particular, tutorial dialogue systems that engage students in natural language dialogue can create rich, adaptive interactions. A promising approach to increasing the effectiveness of these systems is to adapt not only …

Remembering a Conversation–A Conversational Memory Architecture for Embodied Conversational Agents

M Elvir, AJ Gonzalez, C Walls, B Wilder – Journal of Intelligent …, 2017 – degruyter.com

… By virtue of their embodiment, ECAs can be more lifelike than disembodied chatbots. Virtual humans are ECAs designed to closely resemble human beings – often, specific individuals. Therefore, ECAs and specifically virtual humans are the focus of our discussion here …

Techniques for Adaptive Graphics Applications Synthesis Based on Variability Modeling Technology and Graph Theory

A Bershadsky, A Bozhday, Y Evseeva… – Conference on Creativity …, 2017 – Springer

… Computer 37(7), 56–64 (2004). 3. Oijen, J.: Goal-based communication using BDI agents as virtual humans in training: an ontology driven dialogue system. In: Dignum, F. (ed.) Agents for Games and Simulations II, pp. 38–52 …

The Spoken Wikipedia Corpus Collection

T Baumann, A Köhn, F Hennig – nats-www.informatik.uni-hamburg.de

LRE Journal manuscript. The Spoken Wikipedia Corpus Collection: Harvesting, Alignment and an Application to Hyperlistening. Timo Baumann · Arne Köhn · Felix Hennig …

DialPort, Gone Live: An Update After A Year of Development

K Lee, T Zhao, Y Du, E Cai, A Lu, E Pincus… – Proceedings of the 18th …, 2017 – aclweb.org

… The current database has just over 100 restaurants and is implemented using the multi-domain statistical dialogue system toolkit PyDial (Ultes et al … since the original Mr. Clue listens for VH messages (a variant of ActiveMQ messaging used by the Virtual Human Toolkit (Hartholt …

Multimodal HCI: exploratory studies on effects of first impression and single modality ratings in retrospective evaluation

B Weiss, I Wechsung, S Hillmann, S Möller – Journal on Multimodal User …, 2017 – Springer

… also in a brief passive scenario (i.e., continuing watching the virtual human) differed significantly … it has been shown that differences in personality/emotions of virtual humans affect retrospective … the usage, are related to ratings of the whole spoken dialog system collected after …

Towards adaptive social behavior generation for assistive robots using reinforcement learning

J Hemminghaus, S Kopp – Proceedings of the 2017 ACM/IEEE …, 2017 – dl.acm.org

Towards Adaptive Social Behavior Generation for Assistive Robots Using Reinforcement Learning. Jacqueline Hemminghaus, CITEC, Bielefeld University, 33619 Bielefeld, Germany, jhemming@techfak.uni-bielefeld.de …

A data-driven passing interaction model for embodied basketball agents

D Lala, T Nishida – Journal of Intelligent Information Systems, 2017 – Springer

… This is not to say that the other modalities are unnecessary. If the goal is an ideal virtual human, then their combination with the full body is crucial … Gratch et al. (2002) have argued that virtual humans must draw … 2003) adopted JAT as the basis for a spoken dialogue system …

Semantic Comprehension System for F-2 Emotional Robot

A Kotov, N Arinkin, A Filatov, L Zaidelman… – First International Early …, 2017 – Springer

… 2, 3, 4, 5]. In order to minimize numerous shortcomings of this approach researchers often use the bag-of-n-grams – an unordered set of tuples consisting of n consecutive words [2, 6]. Dialogue systems also often … In: Modeling Communication with Robots and Virtual Humans, pp …

Exceptionally social: Design of an avatar-mediated interactive system for promoting social skills in children with autism

B Nojavanasghari, CE Hughes… – Proceedings of the 2017 …, 2017 – dl.acm.org

… With current advances in affective computing, computer vision, dialogue systems and machine learning [8,25,32] it is feasible to assist the interactors by automating or providing … SimCoach: an intelligent virtual human system for providing healthcare information and support …

Crowd-sourced design of artificial attentive listeners

C Oertel, P Jonell, D Kontogiorgos… – INTERSPEECH …, 2017 – isca-speech.org

… A. Suri, D. Traum, R. Wood, Y. Xu, A. Rizzo, and L.-P. Morency, “SimSensei Kiosk: A virtual human interviewer for … and prediction of morphological patterns of backchannels for attentive listening agents,” in International Workshop on Spoken Dialogue Systems, 2016, Conference …

Automatic assessment of depression based on visual cues: A systematic review

A Pampouchidou, P Simos, K Marias… – IEEE Transactions …, 2017 – ieeexplore.ieee.org

Computational Study of Primitive Emotional Contagion in Dyadic Interactions

I Hupont, C Clavel, M Chetouani – IEEE Transactions on …, 2017 – computer.org

Emotion-Based 3D CG Character Behaviors

K Kaneko, Y Okada – Encyclopedia of Computer Graphics and Games, 2017 – Springer

… The authors provided a generic model for personality, mood, and emotion simulation for conversational virtual humans … The model was applied to their prototype application which has a dialogue system and a talking head with synchronized speech and facial expressions …

In dialogue with an avatar, language behavior is identical to dialogue with a human partner

E Heyselaar, P Hagoort, K Segaert – Behavior research methods, 2017 – Springer

… The authors explain this as: “when social cues and presence as created by a virtual human come into play, automatic social reactions appear to override the initial beliefs in shaping lexical alignment” (p. 9). Therefore, previous studies comparing human and computer …

Jee haan, I’d like both, por favor: Elicitation of a Code-Switched Corpus of Hindi–English and Spanish–English Human–Machine Dialog

V Ramanarayanan, D Suendermann-Oeft – Proc. Interspeech 2017, 2017 – oeft.de

… framework (see Figure 1). Where there are multiple academic (Olympus [28], Alex [29], Virtual Human Toolkit [30], OpenDial2, etc.) and industrial (Voxeo3, Alexa 4, etc.) implementations of spoken and multimodal dialog systems, many of these often use special ar …

A multifaceted study on eye contact based speaker identification in three-party conversations

Y Ding, Y Zhang, M Xiao, Z Deng – … of the 2017 CHI Conference on …, 2017 – dl.acm.org

… 39, 11, 6, 14]. To improve the quality of mediated interaction, tremendous progresses have been made to synthesize nonverbal behaviors for virtual humans as the speaker or a listener in a conversation. Often, the synthesized …

Real-time Virtual Collaborative Environment Designed for Risk Management Training: Communication and Decision Making (Environnement

MV Minville – 2017 – core.ac.uk

Thesis submitted for the degree of Doctor of the Université de Toulouse, awarded by Université Paul Sabatier, presented and defended by Catherine Pons Lelardeux on 17 October 2017. Real-time Virtual Collaborative Environment …

ARENA simulation model of a conversational character’s speech system

Y Alvarado, G Claudia, V Gil Costa… – Computer Science & … – sedici.unlp.edu.ar

… This paper describes an analysis of a conversational character spoken dialogue system using a discrete event simulator … Keywords: Virtual Reality (VR), Virtual Humans, Conversational Characters, Natural User Interface (NUI), Speech Interface, Conversational Interaction …

Text-based Healthcare Chatbots Supporting Patient and Health Professional Teams: Preliminary Results of a Randomized Controlled Trial on Childhood …

T Kowatsch, M Nißen, CHI Shih… – Persuasive …, 2017 – research-collection.ethz.ch

… In: Kuppevelt, JCJ, Bernsen, NO, Dybkjær, L. (eds.) Advances in Natural Multimodal Dialogue Systems, vol. 30, pp. 23–54 … Interacting with Computers 22, 289-298 (2010). 6. Boukricha, H., Wachsmuth, I.: Modeling Empathy for a Virtual Human: How, When and to What Extent …

Zooming in: studying collective emotions with interactive affective systems

M Skowron, S Rank, D Garcia, JA Hołyst – Cyberemotions, 2017 – Springer

… of the user, represented by an avatar (male or female according to the user’s gender), interacting with a virtual human (male bartender) … In this round of experiments, three distinct affective profiles were implemented for the dialog system—labeled as positive, negative and neutral …

VR|ServE: A Software Toolset for Service Engineering Using Virtual Reality

P Westner, S Hermann – Serviceology for Smart Service System, 2017 – Springer

… also the requirement for an interface for easy process navigation (eg select current process step to visualize) or text input for our dialog system … For the 3D representation of a role (virtual human model), setting position was one parameter, but also the gesture, pose and direction …

Augmenting group medical visits with conversational agents for stress management behavior change

A Shamekhi, T Bickmore, A Lestoquoy… – International Conference …, 2017 – Springer

… ACM (2016). 11. Bickmore, T., Giorgino, T.: Health dialog systems for patients and consumers … Meeker, D.: SimCoach evaluation: a virtual human intervention to encourage service-member help-seeking for posttraumatic stress disorder and depression …

Exploring the Dynamics of Relationships Between Expressed and Experienced Emotions

R Srinivasan, A Chander, CL Dam – International Conference on Intelligent …, 2017 – Springer

… M.: Virtual character personality influences participants attitudes and behavior – an interview with a virtual human character about her social anxiety. Front. Robot. AI 2(1) (2015). 22. Ward, N., DeVault, D.: Challenges in building highly interactive dialog systems …

Processing negative emotions through social communication: Multimodal database construction and analysis

N Lubis, M Heck, S Sakti, K Yoshino… – … (ACII), 2017 Seventh …, 2017 – ieeexplore.ieee.org

… Towards this direction, in the future we hope to utilize the corpus for developing a dialogue system with an emotionally intelligent dialogue strategy … T. Dey, E. Fast, A. Gainer, K. Georgila, J. Gratch, A. Hartholt, M. Lhommet et al., “Simsensei kiosk: A virtual human interviewer for …

Investigating the role of social eye gaze in designing believable virtual characters

M Nixon – 2017 – summit.sfu.ca

… model. Most specifically, I investigated the ability to send social signals related to status through a virtual human’s eye gaze. Gaze is a critical component of social exchanges, and … I have constructed a verbal-conceptual computational model of gaze for virtual humans …

When Less is More: Studying the Role of Functional Fidelity in a Low Fidelity Mixed-Reality Tank Simulator

C Neubauer, P Khooshabeh, J Campbell – International Conference on …, 2017 – Springer

… to users in the form of a mechanical noise as the turret moves back and forth and lastly the loader, who is represented as a virtual human should yell … 1. Robinson, SM, Roque, A., Vaswani, A., Traum, D.: Evaluation of a spoken dialogue system for virtual reality call for fire training …

Unsupervised text classification for natural language interactive narratives

J Bellassai, AS Gordon… – Proceedings of the …, 2017 – pdfs.semanticscholar.org

… Related Work: Natural language processing in interactive narratives has historically shared many of the methods and technologies of research in natural language dialogue systems … Toward a new generation of virtual humans for interactive experiences …

Attentive listening system with backchanneling, response generation and flexible turn-taking

D Lala, P Milhorat, K Inoue, M Ishida… – Proceedings of the 18th …, 2017 – aclweb.org

… However, we improve the precision of taking the turn, which is critical in spoken dialogue systems, from 0.428 to 0.624 … Rizzo, and Louis-Philippe Morency. 2014. SimSensei Kiosk: A Virtual Human Interviewer for Healthcare Decision Support …

Exploring variation of natural human commands to a robot in a collaborative navigation task

M Marge, C Bonial, A Foots, C Hayes, C Henry… – Proceedings of the First …, 2017 – aclweb.org

… To collect training data for a dialogue system and to investigate possible communication changes over time, we developed a Wizard-of-Oz study that (a) simulates a robot’s limited understanding, and (b) collects dialogues where human participants build a progressively …

A Conversational Agent for the Improvement of Human Reasoning Skills

L Wartschinski, NT Le, N Pinkwart – Bildungsräume 2017, 2017 – dl.gi.de

… [BL12] Banchs, RE; Li, H.: IRIS: a chat-oriented dialogue system based on the vector space model. In: Proceedings of the ACL System Demonstrations, 2012. [BSY09] Bickmore, T.; Schulman, D.; Yin, L.: Engagement vs. deceit: Virtual humans with human autobiographies …

Spoken dialogue systems: architectures and applications

JM Olaso Fernández – 2017 – addi.ehu.es

Spoken Dialogue Systems: Architectures and Applications. Doctoral Thesis. Advisor … 2 Spoken Dialogue Systems. 1 Overview. SDSs can be defined as informatics systems that allow humans to interact with such systems using natural language …

Chatbots for troubleshooting: A survey

C Thorne – Language and Linguistics Compass, 2017 – Wiley Online Library

… They can be seen as a restricted kind of dialog system that deals with written rather than spoken language … Their features will be discussed within the broader context of chatbots and text-based dialog systems. It is structured as follows …

Interactive Cognitive Systems and Social Intelligence

P Langley – IEEE Intelligent Systems, 2017 – computer.org

1541-1672/17/$33.00 © 2017 IEEE. IEEE Intelligent Systems, published by the IEEE Computer Society. Interactive Cognitive Systems and Social Intelligence. Pat Langley, Institute for the Study of Learning and Expertise. This article reviews the cognitive systems …

A_ij = 0.

A Listeners – Cyberemotions, 2017 – Springer

… Based on modality, the dialog systems can be divided into the following groups: text-based, spoken dialog system, graphical user … Virtual Characters: articulated bodies animated using a computer. Virtual Humans (VH): virtual characters with a human shape …

A Comparative Meta-Analysis of Research on Embodied Pedagogical Agents (PAs)

H Darwish – researchgate.net

A Comparative Meta-Analysis of Research on Embodied Pedagogical Agents (PAs). Dr. Hamda Darwish, Assistant Professor, Suhar College of Applied Sciences. Abstract: This paper reports a comparative meta-analysis study of empirical studies …

Computing Bodies: Gender Codes and Anthropomorphic Design at the Human-Computer Interface

C Draude – 2017 – Springer

… The Turing Test, as founding myth of artificial intelligence research, is reread against the background of its gendered coding and in terms of its implications for the research field of the Virtual Human. Although it is not actively intended within the field, Virtual Humans hold a …

Listening Intently: Towards a Critical Media Theory of Ethical Listening

J Feldman – 2017 – search.proquest.com

Sponsoring Committee: Professor Martin Scherzinger, Chairperson; Professor Mara Mills, Chairperson; Professor Helen Nissenbaum. Listening Intently: Towards a Critical Media Theory of Ethical Listening. Jessica Feldman …

Combining CNNs and Pattern Matching for Question Interpretation in a Virtual Patient Dialogue System

L Jin, M White, E Jaffe, L Zimmerman… – Proceedings of the 12th …, 2017 – aclweb.org

… Copenhagen, Denmark, September 8, 2017. ©2017 Association for Computational Linguistics. Combining CNNs and Pattern Matching for Question Interpretation in a Virtual Patient Dialogue System. Lifeng Jin, Michael White, Evan …

Effects of Voice-Chat Conditions on Korean EFL Learners’ Affective Factors

NY Kim – 2017 – db.koreascholar.com

… They are frequently utilized with an avatar — a virtual human — as a conversational participant … Stewart and File (2007) introduce a computer dialogue system, Let’s Chat, encouraging learners to rehearse conversations without a human partner …

Progress in Artificial Intelligence: 18th EPIA Conference on Artificial Intelligence, EPIA 2017, Porto, Portugal, September 5-8, 2017, Proceedings

E Oliveira, J Gama, Z Vale, HL Cardoso – 2017 – books.google.com

Eugénio Oliveira, João Gama, Zita Vale, Henrique Lopes Cardoso (Eds.). Progress in Artificial Intelligence: 18th EPIA Conference on Artificial Intelligence, EPIA 2017, Porto, Portugal, September 5–8, 2017, Proceedings …

Embeddings Based Dialogue System for Variety of Responses

Y MIKAMI, M HAGIWARA – Transactions of Japan Society of Kansei …, 2017 – jstage.jst.go.jp

… Embeddings Based Dialogue System for Variety of Responses. Yoshitaka MIKAMI and Masafumi HAGIWARA … Abstract: In this paper, we propose an embeddings based dialogue system for variety of responses …

Interactive narration with a child: impact of prosody and facial expressions

O Şerban, M Barange, S Zojaji, A Pauchet… – Proceedings of the 19th …, 2017 – dl.acm.org

Interactive Narration with a Child: Impact of Prosody and Facial Expressions. Ovidiu Şerban, Normandie Univ, INSA Rouen Normandie, LITIS, 76800 Saint-Étienne-du-Rouvray, France. Mukesh Barange, Normandie Univ …

Utterance Behavior of Users While Playing Basketball with a Virtual Teammate.

D Lala, Y Li, T Kawahara – ICAART (1), 2017 – sap.ist.i.kyoto-u.ac.jp

Utterance Behavior of Users While Playing Basketball with a Virtual Teammate. Divesh Lala, Yuanchao Li and Tatsuya Kawahara. Graduate School of Informatics, Kyoto University, Kyoto, Japan; Japan Society …

Dealing with the emotions of Non Player Characters

A Baffa, P Sampaio, B Feijó, M Lana – sbgames.org

Dealing with the emotions of Non Player Characters. Augusto Baffa, Pedro Sampaio, Bruno Feijó, Mauricio Lana. PUC-Rio, Departamento de Informática, ICAD/VisionLab, Brazil. Abstract: Usually in games, the interaction …

Using data visualizations to foster emotion regulation during self-regulated learning with advanced learning technologies

R Azevedo, M Taub, NV Mudrick, GC Millar… – Informational …, 2017 – Springer

… Figure 10.1a illustrates the use of a heat map to show a learner that a source of frustration is their lack of attention to the intelligent virtual human as the learner tended to allocate most of their attention to the last paragraph followed by the first and second paragraphs and the …

Communication system and team situation awareness in a multiplayer real-time learning environment: application to a virtual operating room

CP Lelardeux, D Panzoli, V Lubrano, V Minville… – The Visual …, 2017 – Springer

… constraint and the main difficulty is to propose interactions that can allow humans to naturally interact and communicate with virtual humans as in … Spoken dialogue systems have been defined as computer systems with which humans interact on a turn-by-turn basis and in which …

A theoretical framework for conversational search

F Radlinski, N Craswell – Proceedings of the 2017 Conference on …, 2017 – dl.acm.org

… Even in closed domain dialog systems, additional work is needed to make the turn-taking behavior of the system more flexible and efficient [27] … Initiative and Engaging Behavior A number of authors have studied how a “virtual human” should behave [6, 38] …

Human–Robot Interaction

G Piumatti, ML Lupetti, F Lamberti – 2017 – content.taylorfrancis.com

… A specific application consists in putting the screen in place of the robot’s head to display a virtual human face … More sophisticated interfaces are usually integrated in a dialog system, where both speech recognition and speech synthesis take place …

Conversational agents can provide formative assessment, constructive learning, and adaptive instruction

A Graesser, B McDaniel – The Future of Assessment, 2017 – taylorfrancis.com

… Technical advances in the last decade have encouraged researchers to escalate efforts to build robust dialogue systems (Graesser, Van Lehn, Rose, Jordan, & Harter, 2001; Gratch et al., 2002; Larsson & Traum, 2000; Rich & Sidner, 1998; Rickel, Lesh, Rich, Sidner, & …

A conventional dialogue model based on dialogue patterns

GD Duplessis, A Pauchet, N Chaignaud… – … Journal on Artificial …, 2017 – World Scientific

International Journal on Artificial Intelligence Tools, Vol. 26, No. 1 (2017) 1760009 (23 pages). © World Scientific Publishing Company. DOI: 10.1142/S0218213017600090. A Conventional Dialogue Model Based on Dialogue Patterns …

Dialogue with computers

P Piwek – Dialogue across Media, 2017 – books.google.com

… Drawing on this analysis, we propose that the uptake of future generations of more powerful dialogue systems will depend on whether they are self-validating. A … systems. We will see that there is a large variety of dialogue systems …

Conversational Agents Can Provide Formative Assessment, Constructive Learning, and Adaptive Instruction

CA Dwyer – The Future of Assessment, 2017 – taylorfrancis.com

… Technical advances in the last decade have encouraged researchers to escalate efforts to build robust dialogue systems (Graesser, Van Lehn, Rose, Jordan, & Harter, 2001; Gratch et al., 2002; Larsson & Traum, 2000; Rich & Sidner, 1998; Rickel, Lesh, Rich, Sidner, & …

Using Gross’ Emotion Regulation Theory to Advance Affective Computing

L Oostveen – 2017 – dspace.library.uu.nl

Master Artificial Intelligence, Utrecht University. Master Thesis: Using Gross’ Emotion Regulation Theory to Advance Affective Computing. Author: Lucy van Oostveen. First Supervisor: prof. dr. John-Jules Meyer. Second Examiner: dr …

Functional and temporal relations between spoken and gestured components of language

KI Kok – International Journal of Corpus Linguistics, 2017 – jbe-platform.com

… constructed for a different purpose. It was mostly oriented towards the design of virtual avatars and automated dialogue systems (Bergmann & Kopp 2009, Bergmann et al. 2010, Kopp et al. 2008, Lücking et al. 2010). A feature of the …

Explicit feedback from users attenuates memory biases in human-system dialogue

D Knutsen, L Le Bigot, C Ros – International Journal of Human-Computer …, 2017 – Elsevier

… Participants interacted over the phone with a simulated dialogue system. … 3.3.3. Simulated dialogue system. The dialogue system included four components: a welcome component, an interaction component, a closing component and a help component …

Modelling Engagement in Multi-Party Conversations

C Oertel – KTH, Stockholm, Sweden, 2017 – diva-portal.org

… Weilhammer, K., Oertel, C., Siegemund, R., Sá, R., Batliner, A., Hönig, F., & Nöth, E. (2009). A Spoken Dialog System for Learners of English. Paper presented at the International Workshop on Speech and Language Technology in Education, University of Birmingham, UK …

The Cognitive Psychology of Speech-Related Gesture

P Feyereisen – 2017 – taylorfrancis.com

The Cognitive Psychology of Speech-Related Gesture. Why do we gesture when we speak? The Cognitive Psychology of Speech-Related Gesture offers answers to this question while introducing …

Dialog for natural language to code

S Chaurasia – 2017 – repositories.lib.utexas.edu

… the generation of fully executable code from their initial description. 1.3 Dialog Systems: Another line of research that has recently garnered increasing attention is … Dialog System. 3.1 Chapter Overview: We propose a text-based dialog system with which users can converse using …

Sound synthesis for communicating nonverbal expressive cues

FA Martín, Á Castro-González, MÁ Salichs – IEEE Access, 2017 – ieeexplore.ieee.org

Received November 24, 2016, accepted December 29, 2016, date of publication February 1, 2017, date of current version March 13, 2017. Digital Object Identifier 10.1109/ACCESS.2017.2658726. Sound Synthesis for Communicating Nonverbal Expressive Cues …

Incorporating android conversational agents in m-learning apps

D Griol, JM Molina, Z Callejas – Expert systems, 2017 – Wiley Online Library


Poster Chair

T Van Rompay – … and implementation of personalized technologies to … – ris.utwente.nl

… Persuasive Dialogue System for Energy Conservation. Jean-Baptiste Corrégé, Céline Clavel, Nicolas Sabouret, Emmanuel Hadoux, Anthony Hunter, & Mehdi Ammi. LIMSI, CNRS, Université Paris-Saclay, F-91405 Orsay, France; Department of Computer Science …

Using Cognitive Models

S Kopp, K Bergmann – The Handbook of Multimodal-Multisensor …, 2017 – books.google.com

… For example, multimodal speech-gesture behavior has proven to be beneficial for virtual humans and socially expressive robots … Similarly, Putze and Schultz [2009] employed cognitive modeling components in adaptive dialogue systems for in-car information applications, to ex …

Virtual Reality and Game Mechanics in Generalized Social Phobia Treatment

KJ Niechwiadowicz – 2017 – essay.utwente.nl

… Intimacy: informal setting with friends and neighbors; the patient has to make contact with the virtual humans (pre-recorded real humans) all gathered in one room, introduce him/herself, speak about the room and later on answer questions from the guests …

Gaze and filled pause detection for smooth human-robot conversations

M Bilac, M Chamoux, A Lim – Humanoid Robotics (Humanoids) …, 2017 – ieeexplore.ieee.org

… Thirdly, we present our HOMAGE turn-taking system that combines our filler detection method and human gaze information into the dialogue system … 2014. SimSensei Kiosk: A virtual human interviewer for healthcare decision support …

CHATBOT: Architecture, design, and development

J Cahn – University of Pennsylvania School of Engineering and …, 2017 – cahn.io

… Page 3. 3 1. Introduction Chatbots are “online human-computer dialog system[s] with natural language.” [1] The first conceptualization of the chatbot is attributed to Alan Turing, who asked “Can machines think?” in 1950. [3] Since …

Communication skills training exploiting multimodal emotion recognition

K Bahreini, R Nadolski, W Westera – Interactive Learning …, 2017 – Taylor & Francis

Communication skills training exploiting multimodal emotion recognition. Kiavash Bahreini, Rob Nadolski and Wim Westera. Faculty of Psychology and Educational Sciences, Welten Institute, Research Centre for Learning …

Painting Pictures with Words-From Theory to System

R Coyne – 2017 – search.proquest.com

… the future. Ulysse [Godreaux et al., 1999] is an interactive spoken dialog system used to navigate in virtual worlds. It consists … action verb. The system blends animation channels to animate virtual human characters. CONFUCIUS leverages …

Game AI Pro 3: Collected Wisdom of Game AI Professionals

S Rabin – 2017 – taylorfrancis.com

Game AI Pro 3: Collected Wisdom of Game AI Professionals. Edited by Steve Rabin. CRC Press, Taylor & Francis Group, 6000 Broken Sound Parkway NW, Suite 300, Boca …

Transparency in Robot Autonomy

MM Veloso – Workshop–Beneficial AI, 2017 – futureoflife.org

1/5/17. Manuela M. Veloso. Joint work with Francesca Rossi, Stephanie Rosenthal, Sai Selvaraj, Vittorio Perera. Machine Learning Department, Computer Science Department, Robotics Institute, School of Computer Science, Carnegie Mellon University …