Notes:

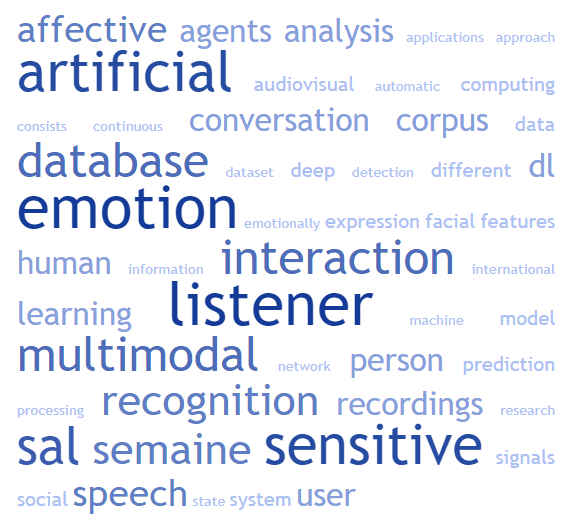

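SEMAINE (Sustained Emotionally coloured Machine-human Interaction using Nonverbal Expression) was a research project that aimed to build a Sensitive Artificial Listener (SAL). SAL is a multimodal dialogue system that can engage a person in a sustained, emotionally coloured conversation. It relies mainly on non-verbal skills rather than on full language understanding. The project was funded by the European Commission and coordinated by DFKI (the German Research Center for Artificial Intelligence), working with several other European institutions.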

One of the key goals of the SEMAINE project was an agent that could perceive and respond to non-verbal signals, such as facial expressions, head movements and tone of voice, in addition to the words spoken. From these signals the SAL system estimated user states such as emotion, interest and engagement. It responded through one of four characters with distinct, emotionally stereotyped personalities, and each character tries to draw the user toward its own emotional state.

The SEMAINE system combined real-time signal analysis with interpretation, dialogue management and behaviour generation for an embodied agent. For example, the open-source openSMILE toolkit for acoustic feature extraction and emotion recognition came out of the project. The data used to design and train these components came from the SEMAINE database, which was recorded in Wizard-of-Oz fashion: in most sessions a human operator played the part of the SAL agent while talking with a user.
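The perceive, interpret and respond cycle described above can be illustrated with a toy rule-based listener in Python. This is a minimal sketch under stated assumptions, not the SEMAINE implementation, which was a distributed set of components. The `Frame` input format, the smoothing factor `alpha`, the pause threshold `min_pause` and the action labels are all hypothetical. The per-frame valence and arousal values are assumed to come from upstream recognizers.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Frame:
    """One analysis frame in a hypothetical input format."""
    user_speaking: bool  # output of a voice activity detector
    valence: float       # estimated valence, assumed in [-1, 1]
    arousal: float       # estimated arousal, assumed in [-1, 1]


def choose_action(valence: float, arousal: float) -> str:
    """Pick a hypothetical listener action from smoothed affect estimates."""
    if valence < 0:
        return "concerned_nod"
    return "smile_and_nod" if arousal >= 0 else "slow_nod"


def listener_actions(frames: Sequence[Frame], alpha: float = 0.3,
                     min_pause: int = 5) -> List[Tuple[int, str]]:
    """Return (frame_index, action) pairs for a toy perceive-interpret-respond loop."""
    v_smooth = a_smooth = 0.0
    pause = 0
    user_has_spoken = False
    actions: List[Tuple[int, str]] = []
    for i, f in enumerate(frames):
        # Interpret: an exponential moving average damps frame-level noise.
        v_smooth = alpha * f.valence + (1 - alpha) * v_smooth
        a_smooth = alpha * f.arousal + (1 - alpha) * a_smooth
        if f.user_speaking:
            user_has_spoken = True
            pause = 0
            continue
        pause += 1
        # Respond: at most once per user turn, after a long enough pause.
        if user_has_spoken and pause == min_pause:
            actions.append((i, choose_action(v_smooth, a_smooth)))
            user_has_spoken = False
    return actions
```

In this example, ten frames of speech with mildly positive estimates are followed by six silent frames. The call `listener_actions([Frame(True, 0.5, 0.4)] * 10 + [Frame(False, 0.5, 0.4)] * 6)` returns `[(14, "smile_and_nod")]`, because the pause counter reaches `min_pause` on the fifth silent frame. A real listener would also time backchannels during the user's speech, not only at pauses.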

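- Emotion modeling is the process of creating computational models of human emotions and of how they are expressed and perceived. It is a central topic in affective computing and is closely tied to artificial intelligence (AI) and natural language processing (NLP) research. It involves developing algorithms and systems that can recognize, interpret and respond to emotions in human language and behavior. Models are usually either categorical, using discrete labels such as anger or joy, or dimensional, using continuous values along axes such as valence and arousal.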

- Semaine corpus is the dataset of conversations collected and annotated as part of the SEMAINE project. Users talked with a Sensitive Artificial Listener that, in most recordings, was simulated by a human operator (Wizard-of-Oz). As a result, the corpus captures natural, emotionally coloured interaction. It is a resource for researchers studying emotion recognition and non-verbal behavior. Its annotations include continuous ratings of affective dimensions such as valence and arousal (activation), together with metadata describing the sessions.

- Semaine database is the name under which the SEMAINE recordings and their annotations are distributed (semaine-db.eu). In practice, "corpus", "database" and "dataset" refer to the same collection. The software developed by the project is distributed separately (semaine.opendfki.de).

- Semaine dataset is another name for the Semaine corpus.

- Social embodiment is the process of giving a virtual or artificial agent a sense of social presence or identity. Social embodiment is often used to create more realistic and engaging virtual assistants or chatbots, and it can involve designing the agent to have a specific appearance, personality, or social role.

- Virtual listener is a conversational agent designed primarily to listen. While the user talks, it produces listener feedback such as nods, smiles and short vocalizations ("mm-hmm"), and it says just enough to keep the user talking. The term is used mostly in research on more natural and engaging conversational agents, and the Sensitive Artificial Listener built in SEMAINE is a prominent example. Related techniques also appear in chatbots and virtual assistants.
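Continuous, dimensional annotation of the kind described for the Semaine corpus is typically turned into training targets in two steps. First, the traces from several raters are combined. Second, for classification, the result is discretized. One of the studies listed on this page, for example, quantized valence and arousal into discrete levels. The Python sketch below shows both steps under simple assumptions: all traces share a sampling rate and time alignment, values lie in [-1, 1], and bins have equal width. The function names are hypothetical, and plain averaging is only one of several ways to fuse raters.

```python
from typing import List, Sequence


def mean_trace(traces: Sequence[Sequence[float]]) -> List[float]:
    """Average several raters' time-aligned traces sample by sample."""
    if not traces:
        raise ValueError("need at least one trace")
    n = min(len(t) for t in traces)  # ignore samples beyond the shortest trace
    return [sum(t[i] for t in traces) / len(traces) for i in range(n)]


def quantize(values: Sequence[float], levels: int,
             lo: float = -1.0, hi: float = 1.0) -> List[int]:
    """Map each value to an integer level 0..levels-1 using equal-width bins."""
    if levels < 1:
        raise ValueError("levels must be positive")
    width = (hi - lo) / levels
    out = []
    for v in values:
        v = min(max(v, lo), hi)           # clip out-of-range ratings
        idx = int((v - lo) / width)
        out.append(min(idx, levels - 1))  # v == hi belongs to the top bin
    return out


# Example: two hypothetical valence traces from two raters.
gold = mean_trace([[0.0, 0.5, 1.0], [0.2, 0.3, 0.6]])
print([round(x, 2) for x in gold])  # [0.1, 0.4, 0.8]
print(quantize(gold, levels=4))     # [2, 2, 3]
```

Equal-width bins keep the level boundaries fixed across recordings. Quantile-based bins would instead balance the number of samples per level, at the cost of boundaries that depend on the data.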

Resources:

- baum2.bahcesehir.edu.tr .. multilingual audio-visual affective face database

- belfast-naturalistic-db.sspnet.eu .. belfast naturalistic database

- image.ntua.gr/ermis .. emotionally rich man-machine intelligent system

- emotion-research.net .. association for the advancement of affective computing

- hoques.com/mach .. my automated conversation coach

- sail.usc.edu/creativeit .. modeling creative and emotive improvisation in theatre performance

- semaine.opendfki.de .. code of the semaine system

- semaine-db.eu .. database collected for the semaine-project

- semaine-project.eu .. eu sensitive agent project

See also:

100 Best Emotion Recognition Videos | 100 Best Sensitive Artificial Listener Videos

Eliciting positive emotion through affect-sensitive dialogue response generation: A neural network approach

N Lubis, S Sakti, K Yoshino, S Nakamura – Proceedings of the AAAI …, 2018 – ojs.aaai.org

… corpus of spontaneous human spoken interaction. The SEMAINE database consists of dialogues between a user and a Sensitive Artificial Listener (SAL) in a Wizard-of-Oz fashion (McKeown et al. 2012). A SAL is a system capa …

Algorithm Awareness: Towards a Philosophy of Artifactuality

G Gramelsberger – Affective Transformations. Politics–Algorithms …, 2020 – books.google.com

… affective state” (Picard 1995, 24; 2000; Clough 2007). A recent application of affective computing is SEMAINE, an EU-project devised to create a Sensitive Artificial Listener. This artificial listener can “interact with humans with …

Personality analysis of embodied conversational agents

S Castillo, P Hahn, K Legde… – Proceedings of the 18th …, 2018 – dl.acm.org

… How do auditory and visual information contribute to the perception of an ECA’s personality? 2 EVALUATION FRAMEWORK For our experiments we used a standardized personality questionnaire on a state-of-the-art ECA. Sensitive Artificial Listener (SAL) …

Aff-Wild: Valence and Arousal ‘In-the-Wild’ Challenge

S Zafeiriou, D Kollias, MA Nicolaou… – Proceedings of the …, 2017 – openaccess.thecvf.com

… EmoPain [4] and UNBC-McMaster [27] for analysis of pain, the RU-FACS database consisting of subjects participating in a false opinion scenario [5] and the SEMAINE [27] corpus which contains recordings of subjects interacting with a Sensitive Artificial Listener (SAL) under …

Optimizing Neural Response Generator with Emotional Impact Information

N Lubis, S Sakti, K Yoshino… – 2018 IEEE Spoken …, 2018 – ieeexplore.ieee.org

… The corpora are described in this section. 5.1. Wizard-of-Oz interaction with a sensitive artificial listener The SEMAINE database consists of dialogues between a user and a Sensitive Artificial Listener (SAL) in a Wizard-of-Oz fashion [21] …

Learning for multimodal and affect-sensitive interfaces

Y Panagakis, O Rudovic, M Pantic – … of Emotion and Cognition-Volume 2, 2018 – dl.acm.org

Learning for Multimodal and Affect-Sensitive Interfaces. Yannis Panagakis, Ognjen Rudovic, Maja Pantic. 3.1 Introduction Humans perceive the world by combining acoustic, visual, and tactile stimuli from their senses …

Empathy framework for embodied conversational agents

ÖN Yalçın – Cognitive Systems Research, 2020 – Elsevier

… empathy behavior. Sensitive Artificial Listener (SAL) (Schroder et al., 2012) is a multimodal dialogue system that is capable of nonverbal interaction based on speech, head movement and facial expressions of the user. This …

Humanized Artificial Intelligence: What, Why and How

C Miao, Z Zeng, Q Wu, H Yu, C Leung – International Journal of Information …, 2018 – intjit.org

… McRorie et.al investigated how behaviors are influenced by personalities and constructed a Sensitive Artificial listener which can reflect its personality credibly as prescribed (McRorie, Sneddon, de Sevin, Bevacqua, & Pelachaud, 2009) …

Circle of Emotions in Life: Emotion Mapping in 2Dimensions

TS Saini, M Bedekar, S Zahoor – … of the 9th International Conference on …, 2017 – dl.acm.org

… A four-factor solution including cohesive-flexibility, chaos, disengagement, and modified enmeshment appeared more suitable [11]. Figure 12, demonstrates that the Sensitive Artificial Listener (SAL) project is involved in ways to elicit different emotions from humans …

Construction of English-French Multimodal Affective Conversational Corpus from TV Dramas

S Novitasari, QT Do, S Sakti, DP Lestari… – Proceedings of the …, 2018 – aclweb.org

… Another is the SEMAINE Database, an emotion-rich conversational database, which was carefully constructed by recording interactions between Sensitive Artificial Listener (SAL) and users; each recording was transcribed and annotated with the actor’s emotions (McKeown et al …

Predicting Arousal and Valence from Waveforms and Spectrograms Using Deep Neural Networks.

Z Yang, J Hirschberg – INTERSPEECH, 2018 – cs.columbia.edu

… annotation granularity of all publicly-available corpora. In SEMAINE recording, two speakers in each conversation are a user and an ‘operator’ who simulates a Sensitive Artificial Listener (SAL) agent. The goal of the operator is to …

AlloSat: a new call center French corpus for satisfaction and frustration analysis

M Macary, M Tahon, Y Estève… – Proceedings of The 12th …, 2020 – aclweb.org

… SEMAINE is composed of simulated conversations between a human user and a machine through Sensitive Artificial Listener (SAL) scenarios (Douglas-Cowie et al., 2008) and SEWA consists of discussions on commercials between two persons, talking about the ads they …

Multimodal big data affective analytics

NJ Shoumy, L Ang, DMM Rahaman – Multimodal Analytics for Next …, 2019 – Springer

… They collected data from Sensitive Artificial Listener Database (SAL-DB) for emotion prediction and used Bidirectional Long Short-Term Memory Neural Networks (BLSTM-NNs) and Support Vector Regression (SVR) for classification …

Estimation of affective dimensions using CNN-based features of audiovisual data

R Basnet, MT Islam, T Howlader, SMM Rahman… – Pattern Recognition …, 2019 – Elsevier

… Imitating this task, however, is vastly challenging for a machine. In the recent years, an increasing demand of using the ‘sensitive artificial listener’ has been observed in order to tailor services as per the emotional state of an user …

Get your virtual hands off me!–developing threatening IVAs using haptic feedback

L Goedschalk, T Bosse, M Otte – Benelux Conference on Artificial …, 2017 – Springer

… For example, the Sensitive Artificial Listener paradigm enables studying the effect of agents with different personalities on human interlocutors, which provided evidence that IVAs with an angry attitude indeed trigger different (subjective and behavioural) responses than agents …

Prediction-based learning for continuous emotion recognition in speech

J Han, Z Zhang, F Ringeval… – 2017 IEEE international …, 2017 – ieeexplore.ieee.org

… For instance, the work in [9] has compared the performance of SVR and Bidirectional Long Short-Term RNNs (BLSTM-RNNs) for the continuous prediction of arousal and valence on the Sensitive Artificial Listener database, and the results indicate that the latter performed …

On the recognition of spontaneous emotions in spoken Chinese

W Huang, H Zhong, W Wang… – … Conference on Security …, 2017 – ieeexplore.ieee.org

… The Sensitive Artificial Listener (SAL) corpus contains 4 hours of recordings made with the SAL technique [7]. Four participants are recorded by a camcorder while having a conversation sampled at 20 kHz resolution with an experimenter who pretends to be an automatic agent …

Continuous facial expression recognition for affective interaction with virtual avatar

Z Shang, J Joshi, J Hoey – 2017 IEEE International Conference …, 2017 – ieeexplore.ieee.org

… The Semaine database [9] provides extensive annotated audio and visual recordings of a person interacting with an emotionally limited avatar, or sensitive artificial listener (SAL), to study natural social behavior in human interac …

Strength modelling for real-world automatic continuous affect recognition from audiovisual signals

J Han, Z Zhang, N Cummins, F Ringeval… – Image and Vision …, 2017 – Elsevier

… For instance, the work in [21] compared the performance of SVR and Bidirectional LSTM-RNNs (BLSTM-RNNs) on the Sensitive Artificial Listener database [20], and the results indicate that the latter performed better on a reduced set of 15 acoustic Low-Level-Descriptors (LLD) …

The NoXi database: multimodal recordings of mediated novice-expert interactions

A Cafaro, J Wagner, T Baur, S Dermouche… – Proceedings of the 19th …, 2017 – dl.acm.org

… A large audio-visual dataset created originally as part of an iterative approach to building virtual agents that can engage a person in a sustained and emotionally-colored conversation, the SEMAINE dataset was collected using the Sensitive Artificial Listener (SAL) paradigm …

A Methond of Building Phoneme-Level Chinese Audio-Visual Emotional Database

X Zhang, S Li, Y Wang, S Li – 2020 International Conference on …, 2020 – ieeexplore.ieee.org

… In 2012, Gary McKeown et al. established SEMAINE [15] by establishing a Sensitive Artificial Listener (SAL) to enable continuous and emotional dialogues. The database is marked with five core dimensions and four optional categories …

Robust modeling of epistemic mental states

A Rahman, ASMI Anam, M Yeasin – Multimedia Tools and Applications, 2020 – Springer

This work identifies and advances some research challenges in the analysis of facial features and their temporal dynamics with epistemic mental states in d.

AST-Net: An Attribute-based Siamese Temporal Network for Real-Time Emotion Recognition.

SH Wang, CT Hsu – BMVC, 2017 – bmva.org

… and artificially intelligent agents. This recording scenario is called Sensitive Artificial Listener (SAL) technique [15] and can stimulate richer and natural emotion changes of humans through the conversations. The other dataset …

Multi-task deep neural network with shared hidden layers: Breaking down the wall between emotion representations

Y Zhang, Y Liu, F Weninger… – 2017 IEEE international …, 2017 – ieeexplore.ieee.org

… The Belfast Sensitive Artificial Listener (SAL) is a subset of the HUMAINE database [28, 29] containing audiovisual recordings from natural human-computer conversations. The SmartKom (Smart) corpus [30] features spontaneous speech produced …

Context modeling for cross-corpus dimensional acoustic emotion recognition: Challenges and mixup

D Fedotov, H Kaya, A Karpov – International Conference on Speech and …, 2018 – Springer

… Sustained Emotionally coloured Machine-human Interaction using Nonverbal Expression) database was collected within a project, where the aim was to build a system that could engage a person in a sustained conversation with a Sensitive Artificial Listener (SAL) agent …

Virtually bad: a study on virtual agents that physically threaten human beings

T Bosse, T Hartmann, RAM Blankendaal… – Proceedings of the …, 2018 – researchgate.net

… Other research has focused more explicitly on the impact of emotional agents on humans in interpersonal settings. For example, the Sensitive Artificial Listener paradigm enables researchers to investigate the effect of agents with different personalities on human interlocutors …

EmoLiTe—A database for emotion detection during literary text reading

R Wegener, C Kohlschein, S Jeschke… – … and Demos (ACIIW), 2017 – ieeexplore.ieee.org

… research. Previous work done on multi-modal emotion databases includes the SEMAINE database [5]. It is an audiovisual databases which includes recordings between real users and a sensitive artificial listener (SAL). The …

Affective rating ranking based on face images in arousal-valence dimensional space

G Xu, H Lu, F Zhang, Q Mao – Frontiers of Information Technology & …, 2018 – Springer

… (2008) used the sensitive artificial listener (SAL) database, quantized the annotations of valence and arousal into four and seven levels respectively, and adopted the conditional random fields (CRFs) and support vector machine (SVM) to predict the quantized affective labels …

A radial base neural network approach for emotion recognition in human speech

L Hussain, I Shafi, S Saeed, A Abbas, IA Awan… – IJCSNS, 2017 – researchgate.net

… In addition, Sensitive Artificial Listener (SAL) database is used for emotion recognition which contains the natural colour speech and helps the recognition rate from high to low valence state [6]. The multimedia contents from the user are guessed through Bio inspired multimedia …

Comparative study on normalisation in emotion recognition from speech

R Böck, O Egorow, I Siegert, A Wendemuth – International Conference on …, 2017 – Springer

… emotions. The quality of emotional content spans a much broader variety than in emoDB. The Belfast Sensitive Artificial Listener (SAL) (cf. [6]) corpus contains 25 audio-visual recordings from four speakers (two female). The …

Implementing social smart environments with a large number of believable inhabitants in the context of globalization

A Osherenko – Cognitive Architectures, 2019 – Springer

… 3.1.1 Emotions. Emotions should be considered in a believable SSE, as the many scenarios of intercultural communication show [5, 7, 24, 36, 41, 45, 46, 47, 50]. Emotion-related data is acquired in our approach from the audio-visual Sensitive Artificial Listener (SAL) corpus [14] …

Perceptual similarity between piano notes: Simulations with a template-based perception model

AO Vecchi, A Kohlrausch – arXiv preprint arXiv:2005.09768, 2020 – arxiv.org

arXiv:2005.09768v1 [eess.AS] 19 May 2020. Perceptual similarity between piano notes: Simulations with a template-based perception model. Alejandro Osses Vecchi, Armin Kohlrausch. Human-Technology …

Affect-lm: A neural language model for customizable affective text generation

S Ghosh, M Chollet, E Laksana, LP Morency… – arXiv preprint arXiv …, 2017 – arxiv.org

… matic Stress Disorder). SEMAINE dataset: SEMAINE (McKeown et al., 2012) is a large audiovisual corpus consisting of interactions between subjects and an operator simulating a SAL (Sensitive Artificial Listener). There are a …

Analysis of Depression Based on Facial Cues on A Captured Motion Picture

BG Dadiz, N Marcos – … on Signal and Image Processing (ICSIP), 2018 – ieeexplore.ieee.org

… It is openly available for scientific research purposes from http://semaine-db.eu. The scenario used in the documentation is called the Sensitive Artificial Listener (SAL) technique. It includes a user interacting with emotionally stereotypical characters …

Estimating Speaker’s Engagement from Non-verbal Features Based on an Active Listening Corpus

L Zhang, HH Huang, K Kuwabara – International Conference on Social …, 2018 – Springer

… For example, when the user looks in bad mood, showing the agent’s concern on the user by saying “Are you OK?” like human do. The SEMAINE project [12, 14] was launched to build a Sensitive Artificial Listener (SAL). SAL …

Sewa db: A rich database for audio-visual emotion and sentiment research in the wild

J Kossaifi, R Walecki, Y Panagakis… – IEEE transactions on …, 2019 – ieeexplore.ieee.org

… watching audio-visual clips or interacting with a manipulated system [11], [12], and (iii) spontaneous behaviour – natural interactions between individuals, or between a human and a machine, are collected in a given context, eg, chatting with a sensitive artificial listener [7], or …

Multimodal Analysis and Estimation of Intimate Self-Disclosure

M Soleymani, K Stefanov, SH Kang, J Ondras… – 2019 International …, 2019 – dl.acm.org

Multimodal Analysis and Estimation of Intimate Self-Disclosure. Mohammad Soleymani, University of Southern California Institute for Creative Technologies, Los Angeles, CA, USA, soleymani@ict.usc.edu. Kalin Stefanov …

The problem of the adjective

J Feldman – Transposition Musique et Sciences Sociales, 2017 – academia.edu

… 22 The next year, 2009, The SEMAINE project32 released a report of its research towards designing SAL, a “Sensitive Artificial Listener.” One outcome of this research is openSMILE technology, an open-source “speech analysis technology” for determining emotion.33 …

Implementing Social Smart Environments with a Large Number of Believable Inhabitants in the Context

A Osherenko – Cognitive Architectures, 2018 – books.google.com

… cultural communication show [5, 7, 24, 36, 41, 45–47, 50]. Emotion-related data is acquired in our approach from the audio-visual Sensitive Artificial Listener (SAL) corpus [14]. The SAL corpus is a set of affective NL dialogues …

Recognizing emotionally coloured dialogue speech using speaker-adapted dnn-cnn bottleneck features

K Mukaihara, S Sakti, S Nakamura – International Conference on Speech …, 2017 – Springer

… nonverbal expression (SEMAINE). The SEMAINE material [8] was developed specifically to address the task of achieving emotion-rich interaction with an automatic agent, called a sensitive artificial listener, (SAL). Each user interacts …

OCAE: Organization-controlled autoencoder for unsupervised speech emotion analysis

S Wang, C Soladie, R Seguier – 2019 5th International …, 2019 – ieeexplore.ieee.org

… 2) SEMAINE database: It has created a large audiovisual database to build Sensitive Artificial Listener (SAL) agents that can engage a person in a sustained, emotionally colored conversation [27]. It has 15 subjects, a total of 141 instances …

LSTM Based Cross-corpus and Cross-task Acoustic Emotion Recognition.

H Kaya, D Fedotov, A Yesilkanat… – …, 2018 – pdfs.semanticscholar.org

… of 21.3, almost equally distributed in gender (10 males, 13 females) and have three different mother tongues: French (17), Italian (3) and German (3). SEMAINE database was collected during an interaction in English between users and Sensitive Artificial Listener (SAL), which …

Domain Adaptation for Speech Based Emotion Recognition

M Abdelwahab – 2019 – utd-ir.tdl.org

… RBF Radial basis function ReLU rectified linear unit ResNet deep residual network RMSE root mean square error SAL sensitive artificial listener SMO sequential minimal optimization SPS syllables per second SVM support vector machine …

Multimodal big data affective analytics: A comprehensive survey using text, audio, visual and physiological signals

NJ Shoumy, LM Ang, KP Seng, DMM Rahaman… – Journal of Network and …, 2020 – Elsevier

Challenges and applications in multimodal machine learning

T Baltrušaitis, C Ahuja, LP Morency – … of Emotion and Cognition-Volume 2, 2018 – dl.acm.org

Part I: Multimodal Signal Processing and Architectures. Challenges and Applications in Multimodal Machine Learning. Tadas Baltrušaitis, Chaitanya Ahuja, Louis-Philippe Morency. 1.1 …

Eliciting positive emotional impact in dialogue response selection

N Lubis, S Sakti, K Yoshino, S Nakamura – Advanced Social Interaction …, 2019 – Springer

… In this section, we describe in detail the SEMAINE database and highlight the qualities that make it suitable for our study. The SEMAINE database consists of dialogues between a user and a Sensitive Artificial Listener (SAL) in a Wizard-of-Oz fashion [10] …

Facial emotion recognition: A survey and real-world user experiences in mixed reality

D Mehta, MFH Siddiqui, AY Javaid – Sensors, 2018 – mdpi.com

Extensive possibilities of applications have made emotion recognition ineluctable and challenging in the field of computer science. The use of non-verbal cues such as gestures, body movement, and facial expressions convey the feeling and the feedback to the user. This discipline …

A review of affective computing: From unimodal analysis to multimodal fusion

S Poria, E Cambria, R Bajpai, A Hussain – Information Fusion, 2017 – Elsevier

Continuous affect recognition with different features and modeling approaches in evaluation-potency-activity space

Z Shang – 2017 – uwspace.uwaterloo.ca

… This database provides extensive annotated audio and visual recordings of a person interacting with an emotionally limited agent, or sensitive artificial listener (SAL), to study natural social behavior in human interaction. The Semaine database provides three SAL scenarios …

Interactive narration with a child: impact of prosody and facial expressions

O Şerban, M Barange, S Zojaji, A Pauchet… – Proceedings of the 19th …, 2017 – dl.acm.org

Interactive Narration with a Child: Impact of Prosody and Facial Expressions. Ovidiu Şerban, Normandie Univ, INSA Rouen Normandie, LITIS, 76800 Saint-Étienne-du-Rouvray, France. Mukesh Barange, Normandie Univ …

EVIDENCE OF RECENT SOUND CHANGE IN MODERN HEBREW–A SHIFT IN VOWEL PERCEPTION

N Amir, EG Cohen, Y Reshef, E Gonen – change – researchgate.net

Real-time sensing of affect and social signals in a multimodal framework: a practical approach

J Wagner, E André – The Handbook of Multimodal-Multisensor Interfaces …, 2018 – dl.acm.org

… VAM corpus [Grimm et al. 2008] consisting of 12 hours of recordings of the German TV talk-show “Vera am Mittag” (Vera at noon); the SAL (Sensitive Artificial Listener) corpus [Douglas-Cowie et al. 2008] consisting of 11 hours …

How is emotion change reflected in manual and automatic annotations of different modalities?

Y Xiang – 2017 – essay.utwente.nl

… QOQ – quasi-open quotient PSP – parabolic spectral parameter MDQ – maxima dispersion quotient SAL – sensitive artificial listener QA – quantitive agreement GCI – Glottal Closure Instants TE-MFCC – Alternative MFCCs extracted from True-Envelope spectral representation …

Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers

MB Akçay, K Oğuz – Speech Communication, 2020 – Elsevier

Multimodal-multisensor affect detection

SK D’Mello, N Bosch, H Chen – … , and Detection of Emotion and Cognition …, 2018 – dl.acm.org

Multimodal-Multisensor Affect Detection. Sidney K. D’Mello, Nigel Bosch, Huili Chen. 6.1 Introduction Imagine you are interested in analyzing the emotional responses of a person in some interaction context (ie, with …

Predicting depression and emotions in the cross-roads of cultures, para-linguistics, and non-linguistics

H Kaya, D Fedotov, D Dresvyanskiy, M Doyran… – Proceedings of the 9th …, 2019 – dl.acm.org

… human interactions. It consists of three parts, representing interaction between a user and a sensitive artificial listener (SAL) on three levels. In our work, we use the solid SAL part, in which a human-operator simulates an agent. It …

Poppy as advanced rubber ducky

KT Kruiff – 2018 – essay.utwente.nl

… 2.1.3 Sensitive Artificial Listener It is a framework for virtual agents that are made to listen and react to a person talking with verbal and nonverbal communication. It has been developed with natural human computer interaction in mind …

Multimodal behavioral and physiological signals as indicators of cognitive load

J Zhou, K Yu, F Chen, Y Wang, SZ Arshad – The Handbook of …, 2018 – dl.acm.org

Part III: Multimodal Processing of Cognitive States. Multimodal Behavioral and Physiological Signals as Indicators of Cognitive Load. Jianlong Zhou, Kun Yu, Fang Chen, Yang Wang, Syed Z. Arshad …

An investigation of partition-based and phonetically-aware acoustic features for continuous emotion prediction from speech

Z Huang, J Epps – IEEE Transactions on Affective Computing, 2018 – ieeexplore.ieee.org

… The Sustained Emotionally colored Machine-human Interaction using Nonverbal Expression (SEMAINE) corpus [37] is an English spontaneous audio-visual dataset collected in the Sensitive Artificial Listener (SAL) scenario, where a person engages in emotionally coloured …

Automatic generation of actionable feedback towards improving social competency in job interviews

SK Nambiar, R Das, S Rasipuram… – Proceedings of the 1st …, 2017 – dl.acm.org

… over a human peer [21]. The Sensitive Artificial Listener (SAL) is a real time interactive multimodal dialogue system that focuses primarily on emotional and non-verbal interaction capabilities [19]. The SAL system uses visual …

A facial-expression monitoring system for improved healthcare in smart cities

G Muhammad, M Alsulaiman, SU Amin… – IEEE …, 2017 – ieeexplore.ieee.org

… Emotional Speech (DES) database, the EMO-DB, the eNTERFACE (eNTER), the Airplane Behaviour Corpus (ABC), the Speech Under Simulated and Actual Stress (SUSAS) database, the Audiovisual Interest Corpus (AVIC), the Belfast Sensitive Artificial Listener (SAL), the …

Harnessing ai for augmenting creativity: Application to movie trailer creation

JR Smith, D Joshi, B Huet, W Hsu, J Cota – Proceedings of the 25th ACM …, 2017 – dl.acm.org

… There is almost as many feature sets are there are datasets used for its training (Berlin Speech Emotion Database [4], eNTERFACE [24], Airplane Behaviour Corpus [28], Audio Visual Interest Corpus [30], Belfast Sensitive Artificial Listener [8] and Vera-Am-Mittag [13]) …

Prediction of emotion change from speech

Z Huang, J Epps – Frontiers in ICT, 2018 – frontiersin.org

… The SEMAINE corpus 1 is a widely used and reasonably large English spontaneous audiovisual database collected in the Sensitive Artificial Listener (SAL) scenario where a person engages in emotionally colored interactions with one of four emotional operators …

Modeling emotion in complex stories: the Stanford Emotional Narratives Dataset

D Ong, Z Wu, ZX Tan, M Reddan… – IEEE Transactions …, 2019 – ieeexplore.ieee.org

… The first two challenges [13], [14] had researchers predict valence over time on the SEMAINE dataset [15], which consists of recordings of volunteers interacting with a “Sensitive Artificial Listener”, an artificial agent programmed to respond in emotional stereotypes (eg, happy …

Statistical selection of CNN-based audiovisual features for instantaneous estimation of human emotional states

R Basnet, MT Islam, T Howlader… – … Conference on New …, 2017 – ieeexplore.ieee.org

… seconds as per the frame-rate. Thus, the proposed instantaneous emotion prediction technique can be effective in developing real-time sensitive artificial listener (SAL) agents. 6. Conclusion Automatic prediction of emotional states …

Classifying multimodal data

E Alpaydin – The Handbook of Multimodal-Multisensor Interfaces …, 2018 – dl.acm.org

Classifying Multimodal Data. Ethem Alpaydin. 2.1 Introduction Multimodal data contains information from different sources/sensors that may carry complementary information and, as such, combining these different modal …

A comprehensive survey on automatic facial action unit analysis

R Zhi, M Liu, D Zhang – The Visual Computer, 2020 – Springer

Facial Action Coding System is the most influential sign judgment method for facial behavior, and it is a comprehensive and anatomical system which could e.

A Multimodal Facial Emotion Recognition Framework through the Fusion of Speech with Visible and Infrared Images

MFH Siddiqui, AY Javaid – Multimodal Technologies and Interaction, 2020 – mdpi.com

The exigency of emotion recognition is pushing the envelope for meticulous strategies of discerning actual emotions through the use of superior multimodal techniques. This work presents a multimodal automatic emotion recognition (AER) framework capable of differentiating between …

Multimodal learning analytics: Assessing learners’ mental state during the process of learning

S Oviatt, J Grafsgaard, L Chen, X Ochoa – The Handbook of Multimodal …, 2018 – dl.acm.org

Multimodal Learning Analytics: Assessing Learners’ Mental State During the Process of Learning. Sharon Oviatt, Joseph Grafsgaard, Lei Chen, Xavier Ochoa. 11.1 Introduction Today, new technologies are making …

Deep learning for multisensorial and multimodal interaction

G Keren, AED Mousa, O Pietquin, S Zafeiriou… – The Handbook of …, 2018 – dl.acm.org

In recent years, neural networks have demonstrated …

Multimodal user state and trait recognition: An overview

B Schuller – The Handbook of Multimodal-Multisensor Interfaces …, 2018 – dl.acm.org

It seems intuitive, if not obvious …

Multimodal analysis of social signals

A Vinciarelli, A Esposito – … , Architectures, and Detection of Emotion and …, 2018 – dl.acm.org

One of the earliest books dedicated to the communication between living beings is The Expression of the Emotions in Man and Animals by Charles Darwin …

Literature Survey on Emotion Recognition for Social Signal Processing

A Vijayalakshmi, P Mohanaiah – Advances in Communication, Signal …, 2020 – Springer

… 2010. Nicolaou et al. [85]. Valence, arousal. 1. Mel filter bank coefficients. 2. Corners of the eyebrows (4 points), eyes (8 points), nose (3 points), mouth (4 points), and chin (1 point). HMM, SVM, Panic particle filtering tracking. Sensitive artificial listener (SAL). 2010. Jiang et al. [39] …

Emotion recognition from speech

A Wendemuth, B Vlasenko, I Siegert, R Böck… – Companion …, 2017 – Springer

… They used four emotional terms to discern emotions. The annotation process was conducted by nine labelers assessing complete utterances. The Belfast Sensitive Artificial Listener corpus (SAL) is built from emotionally colored multimodal conversations …

HEU Emotion: a large-scale database for multimodal emotion recognition in the wild

J Chen, C Wang, K Wang, C Yin, C Zhao, T Xu… – Neural Computing and …, 2021 – Springer

… High-quality recording was made by 5 high frame rate, high-resolution cameras, and 4 microphones. A total of 959 conversations with 150 participants and 4 Sensitive Artificial Listener (SAL) characters were recorded, each lasting approximately 5 minutes …

Multimodal deception detection

M Burzo, M Abouelenien, V Perez-Rosas… – The Handbook of …, 2018 – dl.acm.org

Deception is defined as an intentional attempt to mislead others [Depaulo et al. 2003] …

Deep affect prediction in-the-wild: Aff-wild database and challenge, deep architectures, and beyond

D Kollias, P Tzirakis, MA Nicolaou… – International Journal of …, 2019 – Springer

… scenario (Bartlett et al. 2006) and the SEMAINE corpus (McKeown et al. 2012) which contains recordings of subjects interacting with a Sensitive Artificial Listener (SAL) in controlled conditions. All the above databases have …

Perspectives on predictive power of multimodal deep learning: surprises and future directions

S Bengio, L Deng, LP Morency, B Schuller – The Handbook of …, 2018 – dl.acm.org

In this multidisciplinary discussion among experts …

Digital humanity: the temporal and semantic structure of dynamic conversational facial expressions

S Castillo Alejandre – 2019 – d-nb.info

… 5.2.1 Sensitive Artificial Listener (SAL) … SSq: Sum of Squared Errors; SEM: Standard Error of the Mean; MoCap: Motion Capture; SAL: Sensitive Artificial Listener; FFMRF: Five-Factor Model Rating Form; MPI: Max Planck Institute; BTU: Brandenburg Technical University …

Multimodal sentiment analysis

S Poria, A Hussain, E Cambria – 2018 – Springer

Socio-Affective Computing, Volume 8. Series editors: Amir Hussain, University of Stirling, Stirling, UK; Erik …

Building naturalistic emotionally balanced speech corpus by retrieving emotional speech from existing podcast recordings

R Lotfian, C Busso – IEEE Transactions on Affective Computing, 2017 – ieeexplore.ieee.org

… Variations of this technique include creating hypothetical situations (IEMOCAP database [10]), conversation over video conference while completing a collaborative task (RECOLA database [11]) or eliciting emotions with sensitive artificial listener (SAL) (SEMAINE database [12 …

Hilbert–Huang–Hurst-based non-linear acoustic feature vector for emotion classification with stochastic models and learning systems

V Vieira, R Coelho, FM de Assis – IET Signal Processing, 2020 – IET

… speech. The SEMAINE database features 150 participants (undergraduate and postgraduate students from eight different countries). The sensitive artificial listener (SAL) scenario was used in conversations in English. Interactions …

How do users perceive multimodal expressions of affects?

JC Martin, C Clavel, M Courgeon, M Ammi… – The Handbook of …, 2018 – dl.acm.org

In their guidelines …

Detecting Deepfakes Using Emotional Irregularities

AF Murray – 2020 – search.proquest.com

AffWild Net and Aff-Wild Database

A Benroumpi, D Kollias – arXiv preprint arXiv:1910.05376, 2019 – arxiv.org

… 2.2.1 SEMAINE In paper [9], SEMAINE has created a large audiovisual database as part of an iterative approach to building agents that can engage a person in a sustained, emotionally colored conversation, using the Sensitive Artificial Listener (SAL) [10] paradigm …

Investigating multi-modal features for continuous affect recognition using visual sensing

H Wei – 2018 – doras.dcu.ie

Haolin Wei, B.Sc. (Hons). A dissertation submitted in fulfilment of the requirements for the award of Doctor of Philosophy (Ph.D.) to Dublin City University …

Construction of Spontaneous Emotion Corpus from Indonesian TV Talk Shows and Its Application on Multimodal Emotion Recognition

N Lubis, D Lestari, S Sakti, A Purwarianti… – … on Information and …, 2018 – search.ieice.org

IEICE Trans. Inf. & Syst., Vol. E101-D, No. 8, August 2018, p. 2092. Nurul …

Speech based Continuous Emotion Prediction: An investigation of Speaker Variability and Emotion Uncertainty.

T Dang – 2018 – unsworks.unsw.edu.au

… PLSDR: Partial Least Square Dimension Reduction; RNN: Recurrent Neural Network; RVM: Relevance Vector Machines; SAL: Sensitive Artificial Listener; SDC: Shifted Delta Coefficients; SVR: Support Vector Regression; UBM: Universal Background Model …

Automatic Analysis of Voice Emotions in Think Aloud Usability Evaluation: A Case of Online Shopping

S Soleimani – 2019 – leicester.figshare.com

Thesis submitted for the degree of Doctor of Philosophy in Computer Science by Samaneh Soleimani, Department of Informatics, February 8, 2019 …

Objective Human Affective Vocal Expression Detection and Automatic Classification with Stochastic Models and Learning Systems

V Vieira, R Coelho, F Assis – arXiv preprint arXiv:1910.01967, 2019 – arxiv.org

Non-acted multi-view audio-visual dyadic interactions. Project non-verbal emotion recognition in dyadic scenarios and speaker segmentation

P Lázaro Herrasti – 2019 – diposit.ub.edu

Automatic recognition of self-reported and perceived emotions

B Zhang, EM Provost – Multimodal Behavior Analysis in the Wild, 2019 – Elsevier

… The FAU-Aibo dataset collected the interactions between children and a robot dog [6]. The Sensitive Artificial Listener (SAL) portion of the SEMAINE dataset used four agents with different characters played by human operators for eliciting different emotional responses from the …

Opinion Analysis in Interactions: From Data Mining to Human-Agent Interaction

C Clavel – 2019 – books.google.com

Affective facial computing: Generalizability across domains

JF Cohn, IO Ertugrul, WS Chu, JM Girard… – … Behavior Analysis in the …, 2019 – Elsevier

Deep representation learning in speech processing: Challenges, recent advances, and future trends

S Latif, R Rana, S Khalifa, R Jurdak, J Qadir… – arXiv preprint arXiv …, 2020 – arxiv.org

… SEMAINE [68] English Audio-Visual 150 participants and 959 conversations An induced corpus recorded to build sensitive artificial listener agents that can engage a person in a sustained and emotionally coloured conversation …

Literature Survey and Datasets

S Poria, A Hussain, E Cambria – Multimodal Sentiment Analysis, 2018 – Springer

… This dataset was developed in 2007 by McKeown et al. [226]. It is a large audiovisual database created for building agents that can engage a person in a sustained and emotional conversation using Sensitive Artificial Listener (SAL) [95] paradigm …

First impressions: A survey on vision-based apparent personality trait analysis

JCSJ Junior, Y Güçlütürk, M Pérez… – IEEE Transactions …, 2019 – ieeexplore.ieee.org

Movement affect estimation in motion capture data

D Li – 2018 – summit.sfu.ca

… Their data comes from the Sensitive Artificial Listener Database [15], which consists of audiovisual recordings of participant interaction with an operator under the role of four different personalities (happy, gloomy, angry, and pragmatic) …

A Survey on Image Acquisition Protocols for Non-posed Facial Expression Recognition Systems

P Saha, D Bhattacharjee, BK De, M Nasipuri – Multimedia Tools and …, 2019 – Springer

Several research methodologies and human face image databases have been developed based on deliberately produced facial expressions of prototypical emotion.

Machine Learning Solutions for Emotional Speech: Exploiting the Information of Individual Annotations

R Lotfian – 2018 – utd-ir.tdl.org

Approved by supervisory committee: Carlos Busso (Chair), John HL Hansen, Yang Liu, PK Rajasekaran …

Machine Learning Methods for Social Signal Processing

O Rudovic, MA Nicolaou, V Pavlovic – Social signal processing, 2017 – books.google.com

… 1 Besides SEMAINE, other examples of databases which incorporate continuous annotations include the Belfast Naturalistic Database, the Sensitive Artificial Listener (Douglas-Cowie et al., 2003; Cowie, Douglas-Cowie, & Cox, 2005), and the CreativeIT database (Metallinou et …

Listening Intently: Towards a Critical Media Theory of Ethical Listening

J Feldman – 2017 – search.proquest.com

Sponsoring committee: Professor Martin Scherzinger (Chairperson), Professor Mara Mills (Chairperson), Professor Helen Nissenbaum …

Novel Frameworks for Attribute-Based Speech Emotion Recognition using Time-continuous Traces and Sentence-Level Annotations

S Parthasarathy – 2019 – utd-ir.tdl.org

Approved by supervisory committee: Carlos Busso (Chair) …

Computational study of primitive emotional contagion in dyadic interactions

G Varni, I Hupont, C Clavel… – IEEE Transactions on …, 2017 – ieeexplore.ieee.org

Speech Based Emotion and Emotion Change in Continuous Automatic Systems

Z Huang – 2018 – researchgate.net

… RVM-SR: RVM-Staircase Regression; RRMSE: Relative Root Mean Square Error; SAL: Sensitive Artificial Listener; SFS: Sequential Forward Selection; SVM: Support Vector Machines; SVR: Support Vector Regression; UBM: Universal Background Model; VB: Variational Bayesian …

Emotion Recognition from Speech with Acoustic, Non-Linear and Wavelet-based Features Extracted in Different Acoustic Conditions

JCV Correa – 2019 – core.ac.uk

… As an example, the European SEMAINE project, a sensitive artificial listener (SAL) able to sustain a conversation with a human using … Semaine [24]: This database was created by the European Semaine project in order to build a Sensitive Artificial Listener …

Automated Deep Audiovisual Emotional Behaviour Analysis in the Wild

J Han – 2020 – opus.bibliothek.uni-augsburg.de

Inaugural dissertation for the degree of Doctor of Natural Sciences (Dr. rer. nat.) in the Faculty of Applied Computer Science, University of Augsburg, by Jing Han, 2020 …

A Review on MAS-Based Sentiment and Stress Analysis User-Guiding and Risk-Prevention Systems in Social Network Analysis

G Aguado, V Julián, A García-Fornes, A Espinosa – Applied Sciences, 2020 – mdpi.com

In the current world we live immersed in online applications, being one of the most present of them Social Network Sites (SNSs), and different issues arise from this interaction. Therefore, there is a need for research that addresses the potential issues born from the increasing user …

Aff-Wild Database and AffWildNet

M Liu, D Kollias – arXiv preprint arXiv:1910.05318, 2019 – arxiv.org

… colored Machine-human Interaction using Nonverbal Expression (SEMAINE) database (McKeown et al., 2012) is an annotated audio-video database consisting of the recorded conversations between participants and an operator simulating a Sensitive Artificial Listener (SAL) …

Cross-domain AU detection: Domains, learning approaches, and measures

IO Ertugrul, JF Cohn, LA Jeni, Z Zhang… – 2019 14th IEEE …, 2019 – ieeexplore.ieee.org

Itir Onal Ertugrul (1), Jeffrey F. Cohn (2), László A. Jeni (1), Zheng Zhang (3), Lijun Yin (3) and Qiang Ji (4); (1) Robotics Institute, Carnegie Mellon …

Using a PCA-based dataset similarity measure to improve cross-corpus emotion recognition

I Siegert, R Böck, A Wendemuth – Computer Speech & Language, 2018 – Elsevier

A Video Database for Analyzing Affective Physiological Responses

M Granero Moya – 2019 – upcommons.upc.edu

… in emotion classification performance. SEMAINE [17] is a large audiovisual database of face recordings as a part of an iterative approach to building Sensitive Artificial Listener (SAL) agents. High-quality recordings total of …

Combining Physiological Data and Context Information as an Input for Mobile Applications

C Stockhausen – 2017 – d-nb.info

Dissertation submitted for the degree of Doctor of Natural Sciences to Department 12 of the Johann Wolfgang Goethe-Universität in Frankfurt am Main …

EmoAssist: emotion enabled assistive tool to enhance dyadic conversation for the blind

A Rahman, ASMI Anam, M Yeasin – Multimedia Tools and Applications, 2017 – Springer

Multimed Tools Appl (2017) 76:7699–7730, DOI 10.1007/s11042-016-3295-4. AKM Mahbubur Rahman (1), ASM Iftekhar Anam (2), Mohammed Yeasin (2) …

Learning Hierarchical Emotion Context for Continuous Dimensional Emotion Recognition From Video Sequences

Q Mao, Q Zhu, Q Rao, H Jia, S Luo – IEEE Access, 2019 – ieeexplore.ieee.org

Interpretable Deep Neural Networks for Facial Expression and Dimensional Emotion Recognition in-the-wild

V Richer, D Kollias – arXiv preprint arXiv:1910.05877, 2019 – arxiv.org

… The SEMAINE (Sustained Emotionally coloured Machine-human Interaction using Nonverbal Expression) dataset consists of audiovisual interactions between a human and a machine [26], using the Sensitive Artificial Listener method (SAL) [48] …

Crossing domains for AU coding: Perspectives, approaches, and measures

IO Ertugrul, JF Cohn, LA Jeni, Z Zhang… – IEEE transactions on …, 2020 – ieeexplore.ieee.org

IEEE Transactions on Biometrics, Behavior, and Identity Science, Vol. 2, No. 2, April 2020, p. 158. Itir Onal Ertugrul, Member …