Notes:

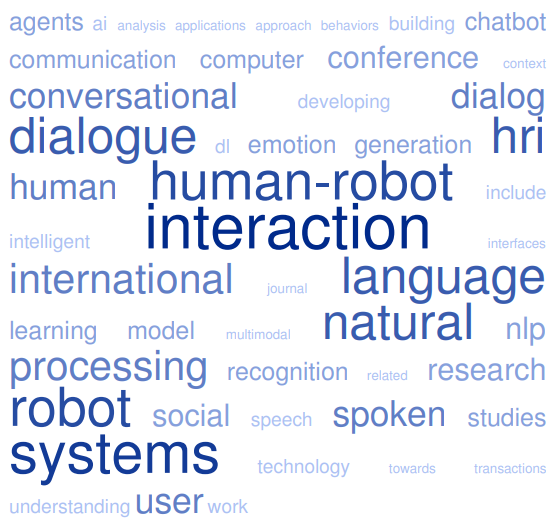

Human-robot interaction (HRI) is the study of how humans and robots interact and communicate with each other. It involves the design, development, and evaluation of systems and technologies that enable humans and robots to interact and collaborate in a natural and intuitive way.

Dialog systems are software systems that are designed to engage in natural language conversations with humans. They are often used in HRI applications to enable robots to communicate with humans in a natural and intuitive way.

In HRI applications, a dialog system can work in either direction: it can let a robot answer questions or provide information to a human user, or it can let the robot ask questions and request information from the user.
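The two directions of use described above can be illustrated with a minimal sketch. This is a toy rule-based example, not a real HRI framework: the class `RobotDialog`, its canned answers, and the slot names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RobotDialog:
    """Toy dialog manager: answers known questions and asks for missing information."""

    # Hypothetical knowledge base the robot can answer from.
    answers: dict = field(default_factory=lambda: {
        "what is your name": "I am a demo robot.",
        "what can you do": "I can answer simple questions.",
    })
    # Hypothetical pieces of information the robot wants from the user.
    slots: dict = field(default_factory=lambda: {"name": None, "destination": None})

    def respond(self, utterance: str) -> str:
        """Robot answers a human question (robot as information provider)."""
        key = utterance.lower().strip(" ?!.")
        return self.answers.get(key, "Sorry, I do not know that.")

    def next_question(self):
        """Robot asks for the first missing slot (robot as information seeker)."""
        for slot, value in self.slots.items():
            if value is None:
                return f"Could you tell me your {slot}?"
        return None  # nothing left to ask

    def fill(self, slot: str, value: str) -> None:
        """Store the user's reply for a slot."""
        self.slots[slot] = value
```

A real system would replace the exact-match lookup with speech recognition and natural language understanding; the sketch only shows how answering and asking can live in one dialog manager.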

A counseling agent is a type of artificial intelligence (AI) system that is designed to provide emotional support, guidance, and counseling to humans. Counseling agents are often used in mental health and well-being applications, where they can offer emotional support, stress management, and problem-solving guidance.

HRI techniques can be combined with counseling agents so that a robot can communicate with humans in a natural and intuitive way while providing emotional support and guidance.

For example, a counseling agent might be implemented as a robot or virtual assistant that is designed to provide emotional support and guidance to humans. The robot or virtual assistant could use HRI techniques to communicate with humans in a natural and intuitive way, such as by using natural language dialog or facial expressions.

Resources:

- icsoro.org .. International Conference on Social Robotics (ICSR)

Wikipedia:

References:

- Integrating CALL Systems with Chatbots as Conversational Partners (2017)

- Artificial Cognitive Systems: A Primer (2014)

See also:

100 Best Pepper Robot Videos | 100 Best Robot Speech Synthesis Videos | 100 Best Social Robotics Videos | Cognitive Architecture & Robotics | Empathy & Robots | Robot Control Schemes | ROILA (Robot Interaction Language) | YARP (Yet Another Robot Platform)

Human-Augmented Robotic Intelligence (HARI) for Human-Robot Interaction

V Mruthyunjaya, C Jankowski – Proceedings of the Future Technologies …, 2019 – Springer

… system discussed in this architecture is from the task-oriented spoken dialogue system architecture [2, 3 … During a human-robot interaction, the robot receives audio and video signals from a human … modules may exist depending on the design requirement and the nature of HRI …

Spoken dialogue system for a human-like conversational robot ERICA

T Kawahara – … International Workshop on Spoken Dialogue System …, 2019 – Springer

… This is the motivation of our Symbiotic Human-Robot Interaction (HRI) project sponsored by the … While dialogue systems have been investigated for casual interviews [8], a job interview is … This is a serious problem in human-robot interaction, but circumvented in smartphones by …

Designing a Personality-Driven Robot for a Human-Robot Interaction Scenario

HB Mohammadi, N Xirakia, F Abawi… – … on Robotics and …, 2019 – ieeexplore.ieee.org

… and S. Shen, “No joking aside–using humor to establish sociality in hri,” in 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), IEEE, 2014, pp … 30] B. Thomson, Dialogue System Theory … in Human-Robot Interaction at the AISB Convention 2015, 2015 …

Language-capable robots may inadvertently weaken human moral norms

RB Jackson, T Williams – … on Human-Robot Interaction (HRI), 2019 – ieeexplore.ieee.org

… In this paper, we show how this may occur, using clarification request generation in current dialog systems as a motivating example … Index Terms—Natural Language Generation; Robot Ethics; Human-Robot Interaction I. INTRODUCTION AND MOTIVATION …

Constructing human-robot interaction with standard cognitive architecture.

K Jokinen – AIC, 2019 – ceur-ws.org

… a valuable first step to achieve natural dialogue interactions in HRI, and make … In: Natural Interaction with Robots, Knowbots and Smartphones: Putting Spoken Dialogue Systems into Practice … Towards shared attention through geometric reasoning for human robot interaction …

Stepped Warm-Up–The Progressive Interaction Approach for Human-Robot Interaction in Public

M Zhao, D Li, Z Wu, S Li, X Zhang, L Ye, G Zhou… – … Conference on Human …, 2019 – Springer

… Human-Robot Interaction (HRI) Initiating interaction Progressive interaction approach Reactive approach Facial expression … stage, as if it failed, the following human-robot interaction would seem … is benefited from the AI techniques (eg, NLP, dialogue system, speech recognition …

Predicting Object Affordances within a Continuous Dialogue State Update Process for Human-Robot-Interaction

J Hough, L Jamone – researchgate.net

… potentially unfamiliar objects in a human-robot dialogue system with incremental … capabilities, which are designed for real-world human-robot interaction (HRI) need to … TYPES-AS-CLASSIFIERS FOR AFFORDANCE PREDICTION IN HUMAN-ROBOT INTERACTION Typical raw …

Towards Environment Aware Social Robots using Visual Dialog

A Singh, M Ramanathan, R Satapathy… – aalind0.github.io

… a research field that combines computer vision and natural language processing techniques … robot conversation history as text are combined to develop a Visual Dialog system … user that ultimately leads to a more satisfying human-robot interaction (HRI) experience (Breazeal …

Toward morally sensitive robotic communication

RB Jackson – … Conference on Human-Robot Interaction (HRI), 2019 – ieeexplore.ieee.org

… Index Terms—Natural Language Generation; Robot Ethics; Human-Robot Interaction … Specifically, current dialogue systems request clarification as soon as ambiguity is identified within … Kramer, and D. Anderson, “First steps toward natural human-like HRI,” Autonomous Robots …

Human Robot Intera

K Technic – researchgate.net

… Since HRI depends on (sometimes natural) h communication knowledge, many aspects are … Mallios, 2018, Virtual doctor: an intelligent human-computer dialogue system for quick … Avoidance System for Indoor Mobile Robots based on Human-Robot Interaction, 9th International …

Natural language generation for social robotics: opportunities and challenges

ME Foster – … Transactions of the Royal Society B, 2019 – royalsocietypublishing.org

… will be similar to the GIVE challenge, but will incorporate aspects of situated human–robot interaction … well as significant research from the related area of spoken dialogue systems that using … Social robotics—and HRI more generally—presents a particularly challenging and rich …

Project r-castle: Robotic-cognitive adaptive system for teaching and learning

D Tozadore, AHM Pinto, J Valentini… – … on Cognitive and …, 2019 – ieeexplore.ieee.org

… IN Human-Robot Interaction (HRI), it is notorious that more adaptive techniques and human-like … In HRI area, in children educational domain using cognitive systems, one example of … verbal communication (nW, RWa, Tta) will be provided by the Dialogue System, whereas the …

A review of methodologies for natural-language-facilitated human–robot cooperation

R Liu, X Zhang – International Journal of Advanced Robotic …, 2019 – journals.sagepub.com

Natural-language-facilitated human–robot cooperation refers to using natural language to facilitate interactive information sharing and task executions with a common goal constraint between robots …

Making Pepper Understand and Respond in Romanian

D Tufis, VB Mititelu, E Irimia, M Mitrofan… – … on Control Systems …, 2019 – ieeexplore.ieee.org

… Humanoid Robot Pepper at a Belgian Chocolate Shop”, HRI ’18 Companion: 2018 ACM/IEEE International Conference on Human-Robot Interaction Companion, March 2018 … Proceedings of the 8th International Workshop on Spoken Dialog Systems, IWSDS 2017 …

Latent character model for engagement recognition based on multimodal behaviors

K Inoue, D Lala, K Takanashi, T Kawahara – … on Spoken Dialogue System …, 2019 – Springer

… In: Proceedings of the HRI, pp 305–311 … Sun M, Zhao Z, Ma X (2017) Sensing and handling engagement dynamics in human-robot interaction involving peripheral … AW, Rudnicky AI (2016) A Wizard-of-Oz study on a non-task-oriented dialog systems that reacts …

Who is responsible for a dialogue breakdown? an error recovery strategy that promotes cooperative intentions from humans by mutual attribution of …

T Uchida, T Minato, T Koyama… – Frontiers in Robotics and AI, 2019 – frontiersin.org

… language understanding, which requires sophisticated technologies of natural language processing (eg, context … Also in human-robot interaction, the robot’s self-disclosure behaviors encourage reciprocal self … However, no current dialogue systems apply the rule of reciprocity to …

Robot Semantic Protocol (RoboSemProc) for Semantic Environment Description and Human–Robot Communication

NTM Saeed, MF Kazerouni, M Fathi… – International Journal of …, 2019 – Springer

… humans has brought forth some new fields, for example, robot-robot interaction (RRI) and human–robot interaction (HRI) … vided, where the authors refer to HRI as “a field of study dedicated to … 3. The need for a dialog system for communication between the robot and humans in …

Aquaticus: publicly available datasets from a marine human-robot teaming testbed

M Novitzky, P Robinette, MR Benjamin… – … -Robot Interaction …, 2019 – ieeexplore.ieee.org

… complexity low (no need for physical interaction) and focused on human-robot interaction with fully … The questionnaire includes the Trust Perception Scale – HRI survey [12], Robot Liking … is triggered by a different button which activates speech recognition and a dialogue system …

Delivering Cognitive Behavioral Therapy Using A Conversational SocialRobot

F Dino, R Zandie, H Abdollahi, S Schoeder… – arXiv preprint arXiv …, 2019 – arxiv.org

… improvement of their verbal and nonverbal socioemotional capabilities enriches human-robot interaction (HRI) … robotics, Socially Assistive Robotics (SAR), especially strengthens HRI due to … interest as natural language generation in modern dialog systems creates conversation …

On perceived social and moral agency in natural language capable robots

RB Jackson, T Williams – … Dark Side of Human-Robot Interaction …, 2019 – researchgate.net

… recent studies show how adversarial contexts or inputs can cause dialogue systems to generate … It is common for human-robot interaction researchers to run experiments and report results without … in a hybrid deliberative/reactive robot architecture,” in Proceedings of HRI, 2008 …

Developing a virtual reality wildfire simulation to analyze human communication and interaction with a robotic swarm during emergencies

P Chaffey, R Artstein, K Georgila… – Proceedings of the …, 2019 – www-scf.usc.edu

… In ACM SIGCHI/SIGART International Conference on Human-Robot Interaction (HRI). Bowling, Michael and Manuela Veloso, 2002 … Journal of Human-Robot Interaction, 2:103–129 … ScoutBot: A dialogue system for collaborative navigation …

Enactivism and Robotic Language Acquisition: A Report from the Frontier

F Förster – Philosophies, 2019 – mdpi.com

… versa, insights and detailed observations of cognitively oriented human–robot interaction may inform … enactivist tenets from the perspective of a practising HRI and interaction … Robotics, in contrast to disembodied natural language processing (NLP) research, has the advantage …

A natural language corpus of common grounding under continuous and partially-observable context

T Udagawa, A Aizawa – Proceedings of the AAAI Conference on Artificial …, 2019 – aaai.org

… our task to be a fundamental testbed for developing dialogue systems with so … of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, HRI ’15, 271 … of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 787 …

Evaluation of the Naturalness of Chatbot Applications

A Atiyah, S Jusoh, F Alghanim – 2019 IEEE Jordan International …, 2019 – ieeexplore.ieee.org

… There are two areas of AI that played a big role in chatbots: Natural Language Processing (NLP) and Machine … of applications that can be used to deploy conversational agents, such as: Spoken Dialogue Systems, Chatbots, Human-Robot Interaction (HRI) such as …

Multimodal conversational interaction with robots

G Skantze, J Gustafson, J Beskow – The Handbook of Multimodal …, 2019 – dl.acm.org

… For a long time, spoken dialogue systems developed in research labs and employed in the industry also lacked any physical embodiment … In this chapter we focus on an important emerging application area for conversational interfaces: human-robot interaction …

Exploring Interaction with Remote Autonomous Systems using Conversational Agents

DA Robb, J Lopes, S Padilla, A Laskov… – Proceedings of the …, 2019 – dl.acm.org

… Evaluation of non-task oriented social dialog systems, on the other hand, is a new emerging challenge, as there is no clear measure for task … Figure 2. System architecture of the MIRIAM CA. NLP/G are Natural Language Processing/Generation …

Can a Humanoid Robot be part of the Organizational Workforce? A User Study Leveraging Sentiment Analysis

N Mishra, M Ramanathan, R Satapathy… – 2019 28th IEEE …, 2019 – ieeexplore.ieee.org

… Human-robot interaction (HRI) studies are usually conducted in controlled settings or pre-defined tasks … discussed here will help the future generation of humanoid robots to enhance HRI … to handle informal texts and common sense reasoning for an effective dialogue system …

Walking partner robot chatting about scenery

T Sono, S Satake, T Kanda, M Imai – Advanced Robotics, 2019 – Taylor & Francis

… scenes? ACM/IEEE Int. Conf. on Human–Robot Interaction (HRI2017), Aula der Wissenschaft, Vienna, Austria; 2017. p. 313–322]. Our main contribution is the whole chat system, which includes the user’s involvement estimation …

Ensemble-based deep reinforcement learning for chatbots

H Cuayáhuitl, D Lee, S Ryu, Y Cho, S Choi, S Indurthi… – Neurocomputing, 2019 – Elsevier

… In our learning scenario the agent-environment interactions consist of agent-data interactions – there is no user simulator as in task-oriented dialogue systems [7], [8]. During each verbal contribution and during training, the DRL agents 1 …

Knowledge Creation Model for Emotion Based Response Generation for AI

UK Premasundera, MC Farook – 2019 19th International …, 2019 – ieeexplore.ieee.org

… Available: 10.1109/hri.2016.7451870 … Loo and M. Seera, “Personality affected robotic emotional model with associative memory for human-robot interaction”, Neurocomputing, vol … Y. Bengio, A. Courville and J. Pineau, “Building End-To-End Dialogue Systems Using Generative …

Development of an Effective Information Media Using Two Android Robots

T Nishio, Y Yoshikawa, K Ogawa, H Ishiguro – Applied Sciences, 2019 – mdpi.com

… S.; Kawahara, T.; Okuno, HG Flexible Guidance Generation Using User Model in Spoken Dialogue Systems … In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction—HRI ’07, Arlington, VA … Available online: http://nlp.ist.i.kyoto-u.ac.jp/index.php …

Atmosphere Sharing with TV Chat Agents for Increase of User’s Motivation for Conversation

S Nishimura, M Kanbara, N Hagita – International Conference on Human …, 2019 – Springer

… 1. Kanda, T., Sato, R., Saiwaki, N., Ishiguro, H.: A longitudinal field trial for human-robot interaction in an elementary school … K., Tokoyo, T., Masui, Y., Matsuo, N., Kikuchi, H.: Factors of interaction in the spoken dialogue system with high … In: Proceedings of the HRI 2010, pp …

Interactive Question-Posing System for Robot-Assisted Reminiscence from Personal Photos

YL Wu, E Gamborino, LC Fu – IEEE Transactions on Cognitive …, 2019 – ieeexplore.ieee.org

… to communicate in human-human interactions, due to limitations in natural language processing, most of the … to be able to handle the responses from the user, dialogue systems often pose … based approaches: 1) Data-Driven Approaches In the more general NLP literature, there …

Expressing reactive emotion based on multimodal emotion recognition for natural conversation in human–robot interaction

Y Li, CT Ishi, K Inoue, S Nakamura… – Advanced …, 2019 – Taylor & Francis

… In: Proceeding 7th International Workshop on Spoken Dialogue Systems; 2016 … Expressing a reaction with appropriate emotion is necessary in human–robot interaction (HRI), but so far this has been difficult to achieve, partly because the systems are insensitive to the emotions …

Human-inspired socially-aware interfaces

D Schiller, K Weitz, K Janowski, E André – International Conference on …, 2019 – Springer

… Various attempts have been made to implement empathic behaviors in computer-based dialogue systems … In: ACM/IEEE International Conference on Human-Robot Interaction, (HRI), Bielefeld, Germany, pp … In: Wilks, Y. (ed.) Natural Language Processing, vol. 8, pp. 131–142 …

Multi-party Turn-Taking in Repeated Human–Robot Interactions: An Interdisciplinary Evaluation

M Żarkowski – International Journal of Social Robotics, 2019 – Springer

… [45] present the effect of long-term repeated human–robot interaction on the … found, neither in the case of human–robot turn-taking, nor in the case of multi-party HRI … The basic capabilities of EMYS use a spoken dialog system for a conversational agent that relies on mostly on …

The empathic project: mid-term achievements

MI Torres, JM Olaso, C Montenegro… – Proceedings of the 12th …, 2019 – dl.acm.org

… The project focuses on evidence-based, user-validated research and integration of intelligent technology, and context sensing methods through automatic voice, eye and facial analysis, integrated with visual and spoken dialogue system capabilities …

Early detection of user engagement breakdown in spontaneous human-humanoid interaction

AB Youssef, C Clavel, S Essid – IEEE Transactions on Affective …, 2019 – ieeexplore.ieee.org

… a supervised classification system for forecasting a potential user engagement breakdown in human-robot interaction … The communication breakdown in spoken dialogue systems was studied in [39 … behave differently during social norm violations and technical failures in HRI [36 …

Conversational Interfaces for Explainable AI: A Human-Centred Approach

SF Jentzsch, S Höhn, N Hochgeschwender – International Workshop on …, 2019 – Springer

… 4. Inferring implications for an implementation in a dialogue system … 676–682. IEEE (2017) … Langley, P.: Explainable agency in human-robot interaction. In: AAAI Fall Symposium Series … referential practices and interactional dynamics in real world HRI …

A social robot in a shopping mall: studies on acceptance and stakeholder expectations

M Niemelä, P Heikkilä, H Lammi, V Oksman – … of Human-Robot Interaction, 2019 – Springer

… communication, and algorithms related to face and expression recognition and natural language processing … all functions, and small consumer-delighting gestures in the human–robot interaction would be … For instance, a dialog system solution called Alana was able to keep up …

Natural language interactions in autonomous vehicles: Intent detection and slot filling from passenger utterances

E Okur, SH Kumar, S Sahay, AA Esme… – arXiv preprint arXiv …, 2019 – arxiv.org

… passenger intents and extracting relevant slots are important building blocks towards developing contextual dialogue systems for natural … LSTM) networks [7] are widely-used for temporal sequence learning or time-series modeling in Natural Language Processing (NLP) …

Resilient chatbots: repair strategy preferences for conversational breakdowns

Z Ashktorab, M Jain, QV Liao, JD Weisz – … of the 2019 CHI Conference on …, 2019 – dl.acm.org

… Brennan derived a theory-driven model for a spoken dialog system to explicitly indicate in … They found NLP errors – misunderstanding a user’s utterance – to be the most … The related human-robot interaction (HRI) community has studied designs to mitigate the negative effects of …

Human-Inspired Socially-Aware Interfaces

K Janowski, E André – researchgate.net

… ACM/IEEE International Conference on Human-Robot Interaction, (HRI), Bielefeld, Germany … Takayama, L., Pantofaru, C.: Influences on proxemic behaviors in human-robot interaction … Neumann, H., Pieraccini, R., Weber, M. (eds.) Perception in Multimodal Dialogue Systems …

Dempster-shafer theoretic resolution of referential ambiguity

T Williams, F Yazdani, P Suresh, M Scheutz… – Autonomous …, 2019 – Springer

… ambiguity as well. This approach is uniquely tailored to human–robot interaction (HRI) contexts, as it produces human-preferred clarification requests that conform with the pragmatics of human–robot dialogue. The remainder of …

Entertaining and opinionated but too controlling: a large-scale user study of an open domain Alexa prize system

KK Bowden, J Wu, W Cui, J Juraska… – Proceedings of the 1st …, 2019 – dl.acm.org

… Natural Language and Dialogue Systems †Human Computer … centered computing → Field studies; Natural language interfaces; User centered design; • Computing methodologies → Discourse, dialogue and pragmatics; Intelligent agents; Natural language processing; …

Interaction Guidelines for Personal Voice Assistants in Smart Homes

T Huxohl, M Pohling, B Carlmeyer… – 2019 International …, 2019 – ieeexplore.ieee.org

… Birte Carlmeyer Applied Informatics Group Dialogue System Group CITEC – Bielefeld University Bielefeld … to have more natural interactions with PVAs, the natural language processing needs to … well known in the human-agent and human-robot interaction community, dialogue is …

Situated interaction

D Bohus, E Horvitz – The Handbook of Multimodal-Multisensor Interfaces …, 2019 – dl.acm.org

… The earliest attempts at dialog between computers and people were text-based dialog systems, such as Eliza [Weizenbaum 1966], a pattern-matching chat-bot that emulated a psychotherapist, and SHRDLU [Winograd 1971], a natural language understanding system that …

Query Generation for Resolving Ambiguity in User’s Command for a Mobile Service Robot

K Morohashi, J Miura – 2019 European Conference on Mobile …, 2019 – ieeexplore.ieee.org

… This paper deals with HRI in such a situation … Dialog systems are also used for grounding physical entity like an object or a specific space and/or resolving … and N. Roy, “Clarifying commands with information-theoretic human-robot dialog,” J. of Human-Robot Interaction, vol …

Conversational Interfaces for Health: Bibliometric Analysis of Grants, Publications, and Patents

Z Xing, F Yu, J Du, JS Walker, CB Paulson… – Journal of medical …, 2019 – jmir.org

… conversational interfaces (CIs) enables users to talk to a machine [1]. Using conventional pattern match or natural language processing, CIs simulate … from previously published works that include CI-related terms [1,2,21,26], such as spoken dialogue system, conversational agent …

A short survey on chatbot technology: failure in raising the state of the art

FS Marcondes, JJ Almeida, P Novais – International Symposium on …, 2019 – Springer

… is referring to the whole chatbot-stack (that includes natural language processing features, knowledge … Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, HRI 2017 … Zhou, M., Zhou, J., Li, Z.: Building task-oriented dialogue systems for online …

Towards Multimodal Understanding of Passenger-Vehicle Interactions in Autonomous Vehicles: Intent/Slot Recognition Utilizing Audio-Visual Data

E Okur, SH Kumar, S Sahay, L Nachman – arXiv preprint arXiv:1909.13714, 2019 – arxiv.org

… the vehicle) are important building blocks towards developing contextual dialog systems for natural … Wild Workshop, 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2018 … In Natural Language Processing and Chinese Computing, pages 3–15, Cham …

Machines getting with the program: Understanding intent arguments of non-canonical directives

WI Cho, YK Moon, S Moon, SM Kim, NS Kim – arXiv preprint arXiv …, 2019 – arxiv.org

Machines Getting with the Program: Understanding Intent Arguments of Non-Canonical Directives Won Ik Cho1, Young Ki Moon2*, Sangwhan Moon3*, Seok Min Kim1, Nam Soo Kim1 Department of Electrical and Computer …

Improving grounded natural language understanding through human-robot dialog

J Thomason, A Padmakumar, J Sinapov… – … on Robotics and …, 2019 – ieeexplore.ieee.org

… We hope that our agent and learning strategies for an end-to-end dialog system with perceptual connections to the … Toward understanding natural language directions,” in Proceedings of the 5th ACM/IEEE International Conference on Human-robot Interaction, ser. HRI ’10, 2010 …

Smooth turn-taking by a robot using an online continuous model to generate turn-taking cues

D Lala, K Inoue, T Kawahara – 2019 International Conference on …, 2019 – dl.acm.org

… have been improvements for spoken dialogue systems in terms of natural language processing [29], there … and we would also need a robust conversational spoken dialogue system which at … In 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) …

Working Together with Conversational Agents: The Relationship of Perceived Cooperation with Service Performance Evaluations

G Laban, T Araujo – International Workshop on Chatbot Research and …, 2019 – Springer

… Given ongoing advances in natural language processing and artificial intelligence, it is often … W.: Natural language sales assistant – a web-based dialog system for online … In: International Conference on Human-Robot Interaction (HRI), Workshop on Human Robot Collaboration …

Designing for health chatbots

A Fadhil, G Schiavo – arXiv preprint arXiv:1902.09022, 2019 – arxiv.org

… Evaluating low-level dialog systems, measuring accuracy and user satisfaction are examples of NLP complications that dialog systems encounter (Schumaker 2007, Bickmore 2006, Shawar 2002, Shawar 2007, Radziwill 2017, Marietto 2013) …

Teaching Robots Behaviors Using Spoken Language in Rich and Open Scenarios

V Paléologue – 2019 – hal.archives-ouvertes.fr

… Using automatic speech transcription and natural language processing, our system recognizes unpredicted teachings of new behaviors, and explicit requests to perform them … 1.2.5 Human-Robot Interaction … 4.4.3 Rules for HRI Tasks …

Low Data Dialogue Act Classification for Virtual Agents During Debugging

AE Wood – 2019 – pdfs.semanticscholar.org

… [93] found only four publicly-available WoZ datasets (more are held privately) suitable for building dialog systems – and none related to Software Engineering … starting around the year 2013. This shift reflects the trend across many areas of NLP and …

Machine Learning from Casual Conversation

A Mohammed Ali – 2019 – stars.library.ucf.edu

… Figure 6.1: Establishing the Connection with Stanford Core NLP Server … Figure 6.2: Training and Testing Naïve Bayes Classifier, Decision Tree Classifier (DT) and Max Entropy Classifier Respectively …

Toward AI Systems that Augment and Empower Humans by Understanding Us, our Society and the World Around Us

J Crowley, AP O’Sullivan, A Nowak, C Jonker… – 2019 – humane-ai.eu

… of areas including Machine Learning, Computer Vision, Robotics, Human Computer Interaction, Natural Language Processing and Conversational AI … Breazeal2016] have recently surveyed research trends in social robotics and its application to human-robot interaction (HRI) …

Multimodal sentiment analysis: A survey and comparison

R Kaur, S Kautish – International Journal of Service Science …, 2019 – igi-global.com

… al., 2013), a multimodal and spontaneous sentiment recognition system realistic to the HRI has been … designated. Their grind has been realistic in a general interface (or dialog) system, called RDS … it makes use of techniques for Data Mining and Natural Languages Processing (NLP) devoted to …

Computational Framework for Facilitating Intimate Dyadic Communication

D Utami – 2019 – repository.library.northeastern.edu

… challenges and provide novel contributions to both HRI and health informatics … dissertation, including the Furhat robot and the IrisTK dialog system … The majority of Human-Robot Interaction (HRI) studies are done in a single interaction, with few exceptions …

Opinion Analysis in Interactions: From Data Mining to Human-Agent Interaction

C Clavel – 2019 – books.google.com

… 1.3.2. The WoZ H–A negotiation corpus … 1.3.3. The UE-HRI human–robot corpus … Later, I extended my field of study from acoustic analysis to natural language processing in the context of opinion analysis studies carried out at EDF Lab …

Learning from Others’ Experience

S Höhn – Artificial Companion for Second Language …, 2019 – Springer

… be required to make the desired models part of Communicative ICALL applications or integrate them into dialogue systems and conversational agents. We will discuss the required NLP tools and knowledge bases for the respective research part in Parts II and III in order to …

Techné: Research in Philosophy and Technology

J Seibt, WCRMB Social – Techné: Research in Philosophy …, 2019 – researchportal.helsinki.fi

… Human-Robot Interaction research (officially abbreviated as ‘HRI’) and research in Social Robotics have been studying the emotional dimension of human interactions with robots in empirical regards for over two decades (see, eg, Fellous and Arbib 2005; Novikova and Watts …

A taxonomy of social cues for conversational agents

J Feine, U Gnewuch, S Morana, A Maedche – International Journal of …, 2019 – Elsevier

FRAMING RISK, THE NEW PHENOMENON OF DATA SURVEILLANCE AND DATA MONETISATION; FROM AN ‘ALWAYS-ON’CULTURE TO ‘ALWAYS-ON’ …

M CUNNEEN, M MULLINS – Hybrid Worlds – researchgate.net

… Matuszek, C.: Grounded Language Learning: Where Robotics and NLP Meet (2018) 33 … We argue that the current status quo for dialogue systems in autonomous agents can (1 … In this experiment you will read about a hypothetical human-robot interaction scenario, and will be …

Music, search, and IoT: How people (really) use voice assistants

T Ammari, J Kaye, JY Tsai, F Bentley – ACM Transactions on Computer …, 2019 – dl.acm.org

… a variety of bodies of work and various disciplines such as artificial intelligence, computer science, natural language processing, human–robot interaction, and social … CA or IPAs are built on top of spoken dialogue systems … Hari M 25 WA No (AA, 1), (GH, 1) Smart lights, Nest …

Collaborative user responses in multiparty interaction with a couples counselor robot

D Utami, T Bickmore – … on Human-Robot Interaction (HRI), 2019 – ieeexplore.ieee.org

… Keywords— Human-Robot Interaction, User Studies, Multiparty Interaction, Collaborative Responses, Turn Taking … Several computational models have been developed to enable humans to engage in conversational turn-taking with dialogue systems, virtual agents and robots …

From rituals to magic: Interactive art and HCI of the past, present, and future

M Jeon, R Fiebrink, EA Edmonds, D Herath – International Journal of …, 2019 – Elsevier

Speech emotion recognition using deep learning techniques: A review

RA Khalil, E Jones, MI Babar, T Jan, MH Zafar… – IEEE …, 2019 – ieeexplore.ieee.org

… for human listeners to react [4]–[6]. Some applications include dialogue systems for spoken … based classification such as natural language processing (NLP) and SER … include speech emotion recognition, speech and image recognition, natural language processing, and pattern …

Strong and simple baselines for multimodal utterance embeddings

PP Liang, YC Lim, YHH Tsai, R Salakhutdinov… – arXiv preprint arXiv …, 2019 – arxiv.org

… 2.3 Strong Baseline Models A recent trend in NLP research has been geared towards building simple but strong baselines (Arora et al., 2017; Shen et al., 2018; Wieting and Kiela, 2019; Denkowski and Neubig, 2017). The …

On the Impact of Voice Encoding and Transmission on the Predictions of Speaker Warmth and Attractiveness

LF Gallardo, R Sanchez-Iborra – ACM Transactions on Knowledge …, 2019 – dl.acm.org

… 2 RELATED WORK A rapidly growing interest in human-computer interactions and spoken dialog systems has been observed in the last couple of years … Adaptive human-machine spoken dialog systems are already able to react to changes in users’ emotions or attitudes …

From sci-fi to sci-fact: the state of robotics and AI in the hospitality industry

LN Cain, JH Thomas, M Alonso Jr – Journal of Hospitality and Tourism …, 2019 – emerald.com

… psychology (behaviorism and cognitive science); computer engineering (programmability and Turing test); linguistics (natural language processing and knowledge … created a robotic service or rService paradigm that help to examine the human–robot Interaction (HRI) (Pan, et al …

Speech Recognition Engine using ConvNet for the development of a Voice Command Controller for Fixed Wing Unmanned Aerial Vehicle (UAV)

CMJ Galangque, SA Guirnaldo – 2019 12th International …, 2019 – ieeexplore.ieee.org

… He implemented an NLP system built to interpret primitive and high-level air traffic control commands … “The WITAS Multi-Modal Dialogue System I.” In proceedings from Eurospeech, 2001 … In International Conference on Human-Robot Interaction (HRI) …

Design and Analysis of a Human-Machine Interaction System for Researching Human’s Dynamic Emotion

X Sun, Z Pei, C Zhang, G Li… – IEEE Transactions on …, 2019 – ieeexplore.ieee.org

… Today’s dialog systems are becoming increasingly more intelligent and can be applied to complex tasks, such as health-care … two-layer fuzzy support vector regression-Takagi–Sugeno (TLFSVR-TS) model for emotion understanding in human–robot interaction (HRI), where the …

PathBot: An Intelligent Chatbot for Guiding Visitors and Locating Venues

K Mabunda, A Ade-Ibijola – 2019 6th International Conference …, 2019 – ieeexplore.ieee.org

… A chatbot-based robot control architecture for conversational human-robot interactions. HRI 2018 Workshop on Social Human-Robot Interaction of Human-Care Service Robots, 2018 … Example-based dialog modeling for practical multi-domain dialog system …

A survey on deep learning empowered IoT applications

X Ma, T Yao, M Hu, Y Dong, W Liu, F Wang… – IEEE Access, 2019 – ieeexplore.ieee.org

… Such dialogue system based products would function as the next-generation smart … functionalities including the localization, navigation, map building, human-robot interaction, object recognition, and … the assisted robot to improve and evaluate Human-Robot Interaction (HRI) …

Text and voice-enabled chatbot enhancing the user experience in career counselling domain

A Banerjee – 2019 – esource.dbs.ie

… aids in the process of career counselling. Using the help of AIML and NLP the chatbot becomes … 2.2 Speech-to-Text Technology has advanced ten folds in the area of speech recognition intertwining our lives closer to spoken dialogue systems, such as chatbots …

How to Survive a Robot Invasion: Rights, Responsibility, and AI

DJ Gunkel – 2019 – books.google.com

… Today an increasingly significant portion of this work can be performed by a speech dialogue system (SDS), like Google Duplex … The three technologies include: 1) natural language processing (NLP) applications like Siri, Alexa, or Google Home …

Improving Social Awareness Through DANTE: A Deep Affinity Network for Clustering Conversational Interactants

M Swofford, JC Peruzzi, N Tsoi, S Thompson… – arXiv preprint arXiv …, 2019 – arxiv.org

… Finally, we demonstrate the practicality of our approach in a human-robot interaction scenario … group interactions through proxemics analysis within HCI [10, 15, 31, 42], computer vision [3, 22, 27, 48], social signal processing [13, 47], and even natural language processing [67] …

Mutual Reinforcement Learning

S Roy, E Kieson, C Abramson, C Crick – arXiv preprint arXiv:1907.06725, 2019 – arxiv.org

… how motivations and reward signals can be used as a channel to impact human-robot partnerships in an HRI setting, simultaneously … feedback-based human-robot interaction, demonstrating that if humans are guided by a robot at an interpersonal level, it increases the …

Embodied contextualization: Towards a multistratal ontological treatment

JA Bateman, M Pomarlan, G Kazhoyan – Applied Ontology, 2019 – content.iospress.com

… the paper will not address the particular demands of human-robot interaction (HRI), although we … Many approaches are currently exploring HRI using a variety of methods (eg … the history of development for ‘interface’ ontologies within natural language processing systems, which …

Redirecting player speech: Lexical entrainment to challenging words in human–computer dialogue

A Bergqvist – 2019 – diva-portal.org

… However, the magnitude of the variety poses a challenge for the computer science area of natural language processing … In a well-documented approach to dealing with the diversity, the words that a dialogue system can understand are embedded in its output speech …

Exploring Users’ Perception of Chatbots in a Mobile Commerce Environment: Creating a Better User Experience by Implementing Anthropomorphic Visual and …

LM Assink – 2019 – essay.utwente.nl

… However, definitions of chatbot applications have been around for a longer period of time. In fact, Eliza was one of the first chatbots in the 1960s, which is one of the earliest Natural Language Applications (NLP) by using simple pattern matching and a template-based response …

Trusting virtual agents: The effect of personality

MX Zhou, G Mark, J Li, H Yang – ACM Transactions on Interactive …, 2019 – dl.acm.org

… 10:5 matches their own personality [68]. However, in human-robot interaction, studies show conflicting results. For example, Lee et al … Third, the engine must allow easy integration of third-party conversational technologies, eg, Google Natural Language Processing (NLP) API …

This is the author’s version of a work that was published in the following source

J Feine, U Gnewuch, S Morana, A Maedche – 2019 – researchgate.net

… build on similar technology (ie, natural language processing), they differ considerably in their … One of the most prominent spoken-dialog system projects were ATIS (Air Travel Information … and Moon, 2000). In the context of human-robot interaction (HRI), Lobato et al …

Robot conversation strategy for indicated object recognition: coordinating alignment mechanism and gender differences

???? – doshisha.repo.nii.ac.jp

… language processing to calculate the ambiguity of referencing and request additional information [9] … dialogue systems [26, 21, 27, 28, 29] and robots [30, 31]. Through alignment, humans … clarifies users’ indications through human–robot interaction, and we bring up the …

An Active Learning Paradigm for Online Audio-Visual Emotion Recognition

I Kansizoglou, L Bampis… – IEEE Transactions on …, 2019 – ieeexplore.ieee.org

… Abstract—The advancement of Human-Robot Interaction (HRI) drives research into the development of advanced emotion identification architectures that fathom audio-visual (AV) modalities of human emotion. State-of-the …

Neural-network-based Memory for a Social Robot: Learning a Memory Model of Human Behavior from Data

M Doering, T Kanda, H Ishiguro – … on Human-Robot Interaction (THRI), 2019 – dl.acm.org

… 2 RELATED WORKS 2.1 Memory for Social Robots There are several fields of study related to modeling memory for human-robot interaction … User modeling is used in dialog systems, and more recently HRI, to model users’ beliefs, goals, and plans such that the system …

Artificial Companion for Second Language Conversation

S Höhn – 2019 – Springer

… for this research and makes generalisations necessary for an operationalisation of the findings in a dialogue system … CALL technology extended with Natural Language Processing (NLP) techniques became a new research field called Intelligent Computer-Assisted Language …

Proactive Communication in Human-Agent Teaming

EM van Zoelen – 2019 – dspace.library.uu.nl

… The focus is for a large part on dialogue systems, while the work done in this thesis is not aimed at creating a dialogue system. However, looking at human-agent communication systems and algorithms, dialogue systems are a large part of the existing research …

Robot Data-Driven Imitation Learning of Human Social Behavior with Time-Persistent Interaction Context

M Robert Doering – 2019 – ir.library.osaka-u.ac.jp

… The research question we sought to answer with this work is whether it is possible to build a memory system for human-robot interaction in a data-driven way … User modeling is used in dialog systems, and more recently HRI, to model users’ beliefs, goals, and plans such …

Miscommunication detection and recovery in situated human–robot dialogue

M Marge, AI Rudnicky – ACM Transactions on Interactive Intelligent …, 2019 – dl.acm.org

… 3 RELATED WORK Much of this research builds on related work on robots that ask for help, symbol grounding for natural language interpretation, dialogue systems that support human–robot interaction (HRI), and error handling in human–computer dialogue …