Notes:

Automatic lip synchronization is the process of generating lip movements for a digital avatar or computer-generated character so that they match the audio of a given speech recording. It is typically achieved by combining computer graphics with speech analysis and artificial intelligence techniques.

To produce automatic lip sync, a digital model of the character’s face is built, and its lip movements are then synchronized with the speech audio using a process called “speech-driven facial animation.” The audio is analyzed to identify the phonemes being spoken, and each phoneme is mapped to a mouth shape, or viseme, that drives the lip movements. The mapping is many-to-one: phonemes such as /p/, /b/ and /m/ usually share the same viseme.
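The phoneme-to-viseme step can be sketched in Python as follows. This is a minimal illustration, assuming a phoneme recognizer or forced aligner has already produced timed, ARPAbet-style phoneme labels; the table is a hypothetical, abbreviated Preston Blair-style mouth set (the kind of set offered by tools such as Papagayo), not a complete or standard mapping.

```python
from dataclasses import dataclass

# Hypothetical, abbreviated phoneme-to-viseme table (ARPAbet-style labels).
# Real systems use larger, language-specific tables.
PHONEME_TO_VISEME = {
    "P": "MBP", "B": "MBP", "M": "MBP",
    "F": "FV", "V": "FV",
    "AA": "AI", "AE": "AI", "AY": "AI", "AH": "AI",
    "EH": "E", "EY": "E", "IY": "E",
    "OW": "O", "AO": "O",
    "UW": "U", "W": "WQ",
    "L": "L",
    "SIL": "rest",
}
DEFAULT_VISEME = "etc"  # catch-all for phonemes not listed above


@dataclass
class Keyframe:
    time: float   # seconds from the start of the audio
    viseme: str


def phonemes_to_keyframes(phonemes):
    """Map (phoneme, start_s, end_s) tuples to viseme keyframes.

    Consecutive phonemes that map to the same viseme are merged so the
    mouth is not keyed to the same shape twice in a row.
    """
    keyframes = []
    for phoneme, start, _end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme.upper(), DEFAULT_VISEME)
        if keyframes and keyframes[-1].viseme == viseme:
            continue  # same mouth shape as the previous key: skip it
        keyframes.append(Keyframe(start, viseme))
    return keyframes
```

For example, the timed sequence M, B, AA produces only two keys, "MBP" at the start of M and "AI" at the start of AA, because M and B share a viseme.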

There are several approaches to speech-driven facial animation, including rule-based approaches, which use predefined rules to map phonemes to lip movements, and data-driven approaches, which use machine learning algorithms to learn the relationship between phonemes and lip movements from a large dataset of annotated speech and facial movements.
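To make the data-driven idea concrete, here is a minimal sketch that swaps in k-nearest-neighbour regression, the simplest learned mapping, for the neural networks most modern systems use. The training pairs, feature layout, and mouth parameters (`jaw_open`, `lip_width`) are hypothetical toy values, not real measurements.

```python
import math

# Hypothetical toy "annotated dataset": each entry pairs an acoustic feature
# vector for one audio frame with mouth parameters (jaw_open, lip_width)
# measured from video of the same frame. Values are made up for illustration.
TRAINING_PAIRS = [
    ((0.1, 0.0, 0.0), (0.0, 0.5)),
    ((0.9, 0.2, 0.1), (0.8, 0.6)),
    ((0.5, 0.8, 0.1), (0.3, 0.9)),
    ((0.4, 0.1, 0.9), (0.4, 0.2)),
]


def predict_mouth(features, pairs=TRAINING_PAIRS, k=2):
    """k-nearest-neighbour regression from audio features to mouth parameters.

    Averages the mouth parameters of the k training frames whose acoustic
    features are closest (Euclidean distance) to the query frame.
    """
    nearest = sorted(pairs, key=lambda p: math.dist(features, p[0]))[:k]
    n_params = len(nearest[0][1])
    return tuple(sum(m[i] for _, m in nearest) / len(nearest) for i in range(n_params))
```

A rule-based system would replace `predict_mouth` with a fixed lookup table; the data-driven version instead lets the recorded examples define the mapping, so adding more annotated frames changes its behaviour without editing any rules.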

Once lip synchronization is complete, the resulting animation gives the digital avatar natural-looking speech. This is used in a variety of applications, such as video games, virtual assistants, and animated films.

Real-time lip sync is the process of synchronizing a digital character’s lip movements with an audio input while the audio is being spoken or streamed. The lip movements are updated continuously as the audio input changes, which lets the character respond naturally to live speech rather than only to pre-recorded tracks.

To achieve real-time lip sync, the system must analyze the incoming audio with low latency, typically in short frames of a few tens of milliseconds, and map the phonemes being spoken to the appropriate lip movements as they arrive. This can be done using a combination of predefined rules and machine learning algorithms trained on a large dataset of annotated speech and facial movements.
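A minimal real-time sketch follows. Note that it swaps in a simpler technique than the text describes: instead of recognizing phonemes, it drives a single jaw-open parameter from the loudness of each incoming audio frame, an approach often used as a fallback when phoneme recognition is too slow or unavailable. The sample rate, frame size, thresholds, and smoothing factor are assumptions to tune per project.

```python
import math
from typing import Iterable, Iterator, Sequence

SAMPLE_RATE = 16_000   # assumed input rate (Hz)
FRAME_SIZE = 320       # 20 ms per frame at 16 kHz: 320 / 16000 = 0.02 s


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one frame of samples in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def stream_mouth_open(frames: Iterable[Sequence[float]],
                      noise_floor: float = 0.02,
                      full_open: float = 0.3,
                      smoothing: float = 0.5) -> Iterator[float]:
    """Yield a jaw-open value in [0, 1] for each incoming audio frame.

    Loudness above noise_floor is scaled so that an RMS of full_open maps to
    a fully open mouth; an exponential moving average damps frame-to-frame
    jitter. Each frame is handled as soon as it arrives, so the added latency
    is one frame (20 ms here) plus whatever the audio driver adds.
    """
    value = 0.0
    for frame in frames:
        level = frame_rms(frame)
        target = min(1.0, max(0.0, (level - noise_floor) / (full_open - noise_floor)))
        value = smoothing * value + (1.0 - smoothing) * target
        yield value
```

Feeding it one 320-sample frame at a time from an audio callback yields one mouth value every 20 ms, which a renderer can sample at its own frame rate.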

Real-time lip sync can be used in a variety of applications, such as virtual assistants, video conferencing software, and live performance capture. It is an important tool for creating realistic and engaging digital experiences, as it allows digital characters to speak and express themselves in a more natural and lifelike manner.

Resources:

- Anime Studio .. proprietary vector-based 2D animation software

- Annosoft .. desktop application for media developers and artists

- Autodesk .. software for the architecture, engineering, and entertainment industries

- Blender .. open-source 3D computer graphics software toolset

- Cinema4d .. a 3D modeling, animation, motion graphics and rendering application

- Daz3d .. rigged 3D human models, associated accessory content and software

- Flash .. content created on the Adobe Flash platform

- iClone .. real-time 3D animation and rendering software

- Papagayo .. free lip-syncing software written in Python

- Synfig .. open-source 2D vector graphics and timeline-based computer animation software

- ToonBoom .. animation production and storyboarding software

- Unity3d .. game engine for building high-quality 3D and 2D games

Wikipedia:

See also:

Lip Synchronization Meta Guide

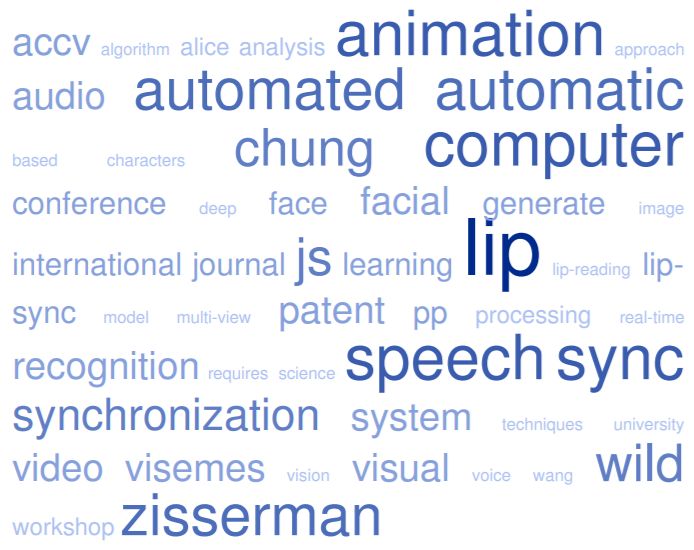

Out of time: automated lip sync in the wild

JS Chung, A Zisserman – Asian Conference on Computer Vision, 2016 – Springer

The goal of this work is to determine the audio-video synchronisation between mouth motion and speech in a video. We propose a two-stream ConvNet architecture that enables the mapping between the sound and the mouth images to be trained end-to-end from …
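The offset search that such a two-stream network enables can be sketched as below. This assumes per-frame audio and mouth-image embeddings have already been computed by a trained network (not shown); the code only scans candidate shifts, picks the one with the smallest mean embedding distance, and reports median-minus-minimum distance as a confidence score. Treat the details, including the `max_offset` window and the sign convention, as assumptions rather than the paper's exact procedure.

```python
import math
import statistics
from typing import Sequence, Tuple

Vector = Sequence[float]


def mean_distance(audio: Sequence[Vector], video: Sequence[Vector], offset: int) -> float:
    """Mean Euclidean distance between video frame t and audio frame t + offset."""
    dists = [math.dist(video[t], audio[t + offset])
             for t in range(len(video)) if 0 <= t + offset < len(audio)]
    return sum(dists) / len(dists) if dists else math.inf


def estimate_av_offset(audio: Sequence[Vector], video: Sequence[Vector],
                       max_offset: int = 15) -> Tuple[int, float]:
    """Return (best_offset, confidence) over offsets in [-max_offset, max_offset].

    A positive offset means the matching audio arrives later than the video
    (audio lags). Confidence is the median distance minus the minimum, so a
    sharp, unambiguous minimum scores high.
    """
    offsets = range(-max_offset, max_offset + 1)
    dists = [mean_distance(audio, video, o) for o in offsets]
    best = min(range(len(dists)), key=dists.__getitem__)
    finite = [d for d in dists if math.isfinite(d)]
    return offsets[best], statistics.median(finite) - dists[best]
```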

Development of real-time lip sync animation framework based on viseme human speech

LN Hoon, WY Chai, KAAA Rahman – Archives of Design …, 2014 – dev02.dbpia.co.kr

… 12 Lewis, J. (1991). Automated lip-sync: Background and techniques. The Journal of Visualization and Computer Animation, 2(4), 118-122 … 1366-1369). IEEE. 21 Zorić, G., Čereković, A., & Pandžić, I. (2008). Automatic Lip Synchronization by Speech Signal Analysis …

Exploring Localization for Mouthings in Sign Language Avatars

R Wolfe, T Hanke, G Langer, E Jahn, S Worseck… – researchgate.net

… Similar to manually-produced animation, automated lip sync requires a sound track and visemes to generate a talking figure, but it differs in its representation of visemes. The automation strategies fall into two categories, based on the avatar’s representation of visemes …

Development of Real-Time Lip Sync Animation Framework Based On

L Hoon, W Chai, K Rahman – 2014 – aodr.org

… 12 Lewis, J. (1991). Automated lip-sync: Background and techniques. The Journal of Visualization and Computer Animation, 2(4), 118-122 … 1366-1369). IEEE. 21 Zorić, G., Čereković, A., & Pandžić, I. (2008). Automatic Lip Synchronization by Speech Signal Analysis …

Korean speech recognition using phonemics for lip-sync animation

SM Hwang, BH Song, HK Yun – … Science, Electronics and …, 2014 – ieeexplore.ieee.org

… of lip animation. In this research, a real time processed automatic lip sync algorithm for virtual characters as the animation key in digital contents is studied by considering Korean vocal sound system. The proposed algorithm …

Game Design and Appeal: designing for the user

MJ Chavez, MH Keat – … on Computer Games Multimedia and Allied …, 2014 – researchgate.net

… The characters utilize automated lip-sync techniques to generate phonemes and matching Visemes in an auto lip-synced talking character (Graf and Petajan 2000) … We generate the audio and then using automated lip-sync software generate a first pass of key-frame targets …

Speaker adaptive real-time Korean single vowel recognition for an animation producing

HK Yun, SM Hwang, BH Song – Network Security and …, 2015 – books.google.com

… In this research, a real time processed automatic lip sync algorithm for virtual characters as the animation key in digital content is studied by considering Korean vocal sound system … Automatic Lip Sync Solution for Virtual Characters in 3D Animations, ICCT2013, vol. 2, no. 1, pp …

LipSync Generation Based on Discrete Cosine Transform

N Xie, T Yuan, M Nakajima – … International (NicoInt), 2017, 2017 – ieeexplore.ieee.org

… [2] J. Lewis, “Automated lip-sync: Background and techniques,” Journal of Visualization and Computer Animation, vol. 2, no. 4, pp. 118–122, 1991. [Online] … 41, no. 4, pp. 603–623, 2003. [7] CJ Kohnert and SK Semwal, “Automatic lip-synchronization using linear prediction of …

Speaker Adaptive Real-Time Korean Single Vowel Recognition for an Animation Producing

SM Whang, BH Song, HK Yun – … and Innovation in Future Computing and …, 2014 – Springer

… of lip animation. In this research, a real time processed automatic lip sync algorithm for virtual characters as the animation key in digital contents is studied by considering Korean vocal sound system. The proposed algorithm …

Analysis of audio and video synchronization in TV digital broadcast devices

S Kunić, Z Šego, BZ Cihlar – ELMAR, 2017 International …, 2017 – ieeexplore.ieee.org

… As it shown in Fig.5, after the A/V sync delay detection, automatic lip sync error correction is provide in the A/V sync corrector, which is consisted of audio and video variable delays and circuit for sync adjustment and correction audio and video signals again “in sync” [8 …
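The correction stage described above, where a variable delay is applied to whichever signal leads, can be sketched with a FIFO buffer. This is a hypothetical illustration operating on lists of frames, assuming the skew has already been measured in whole frames; real broadcast hardware works on continuous signals.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def delay_stream(items: Iterable[T], delay_frames: int,
                 fill: Optional[T] = None) -> Iterator[Optional[T]]:
    """Delay a stream by a fixed number of frames using a FIFO buffer.

    The first delay_frames outputs are `fill` (e.g. silence or a held frame);
    after that, each output is the input received delay_frames steps earlier.
    """
    buffer: Deque[Optional[T]] = deque([fill] * delay_frames)
    for item in items:
        buffer.append(item)
        yield buffer.popleft()


def correct_av_skew(audio: Sequence[T], video: Sequence[T],
                    skew_frames: int) -> Tuple[List[Optional[T]], List[Optional[T]]]:
    """Delay whichever stream leads. skew_frames > 0 means the audio is early."""
    if skew_frames > 0:
        return list(delay_stream(audio, skew_frames)), list(video)
    return list(audio), list(delay_stream(video, -skew_frames))
```

Because each output is drawn from the buffer, a stream delayed by n frames emits n `fill` frames first and, in this finite-list version, leaves its last n frames in the buffer; in a live stream those frames simply emerge later.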

Application of visual speech synthesis in therapy of auditory verbal hallucinations

K Sorokosz – 2018 International Interdisciplinary PhD …, 2018 – ieeexplore.ieee.org

… with the patient. The proposed system integrates the XFace face model, synthetic voice generated by text-to-speech systems available on the market and the custom automatic lip synchronization algorithm. The article describes …

Shape synchronization control for three-dimensional chaotic systems

Y Huang, Y Wang, H Chen, S Zhang – Chaos, Solitons & Fractals, 2016 – Elsevier

… [17,18]. Automatic lip-synchronization technique [19] is also hot pints, which the computer-based facial animation can be automatically tackled from the identify lip shapes for a given speed sequence by using speech analysis …

Reform or Ruin? Proposals to Amend Section 101

JL Contreras – 2017 – dc.law.utah.edu

… v. Bandai Namco Games Am. Inc., 837 F.3d 1299 (Fed. Cir. 2016) • (patents directed to processes for automated lip synchronization animation methods were not directed to patent-ineligible abstract ideas). • Rapid Litig. Mgmt. Ltd. v. CellzDirect, Inc., 827 F.3d 1042 (Fed. Cir …

Rap music video generator: Write a script to make your rap music video with synthesized voice and CG animation

M Hayashi, S Bachelder, M Nakajima… – … (GCCE), 2017 IEEE …, 2017 – ieeexplore.ieee.org

… The section which is not surrounded by those “start” and “end” is regarded as an ordinary acting. B. The Animation The TVML engine generates CG animation in real-time. The engine has an automatic lip sync function, so that you do not need to deal with the lip sync …

Patentable Subject Matter: Alice Does Not Permit the Dead to Frolic in a 3D Wonderland

HJ Wu – SMUL Rev., 2016 – HeinOnline

… claims appeared to be tangible and related to a specific technological process, did not claim a monopoly on the entire field of animating the human mouth during speech, and did not cover “prior art methods of computer assisted, but non-automated, lip synchronization for three …

JALI: an animator-centric viseme model for expressive lip synchronization

P Edwards, C Landreth, E Fiume, K Singh – ACM Transactions on …, 2016 – dl.acm.org

… Viseme model (Section 3). We then show how the JALI Viseme model can be constructed over a typical FACS-based 3D facial rig and transferred across such rigs (Section 4). Section 5 provides system implementation details for our automated lip-synchronization approach …

The Muses of Poetry-In search of the poetic experience

D Arellano, S Spielmann… – Symposium on Artificial …, 2013 – animationsinstitut.de

… 3.4.2 Speech Synthesis To create the voice of the character we used a third-party voice synthesizer, provided by SVOX. It produces not only a more natural voice, but also the visemes information required by Frapper to generate automatic lip-sync …

Loose lips sync ships

S Dawson – Connected Home Australia, 2013 – search.informit.com.au

… it. If not go up or down by 25ms. But a solution should already have been in place. HDMI 1.3 implements an automatic lip sync feature. The TV uses this to tell the device supplying its image how much delay it imposes. That device …
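The compensation that an automatic lip sync feature enables reduces to simple arithmetic: once the display reports how much it delays the picture, the audio is held back by the difference. The sketch below assumes both latencies are already known in milliseconds; it does not model how HDMI actually encodes or transports the reported value.

```python
def audio_delay_samples(video_latency_ms: float, audio_latency_ms: float,
                        sample_rate_hz: int = 48_000) -> int:
    """Extra audio delay (in samples) needed to line the sound up with the picture.

    If the display adds more video latency than the audio path adds, the
    source (or AV receiver) holds the audio back by the difference. A
    negative delay is never applied: audio that is already late stays as is.
    """
    extra_ms = max(0.0, video_latency_ms - audio_latency_ms)
    return round(extra_ms * sample_rate_hz / 1000)
```

For instance, a display adding 100 ms of video latency over an audio path adding 20 ms needs 80 ms of extra audio delay, or 3,840 samples at 48 kHz.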

Rapid DCT-based LipSync generation algorithm for game making

N Xie, T Yuan, N Chen, X Zhou, Y Wang… – SIGGRAPH ASIA 2016 …, 2016 – dl.acm.org

… The authors are grateful to 3D artists who helped for creating the 3D models. References LEWIS, J. 1991. Automated lip-sync: Background and techniques. Journal of Visualization and Computer Animation 2, 4, 118–122. LIU, B., AND DAVIS, TA 2013 …

Feature extraction methods LPC, PLP and MFCC in speech recognition

N Dave – International journal for advance research in …, 2013 – researchgate.net

… IEEE, International Conference on Multimedia & Expo ICME 2005. [6] Goranka Zoric, “Automatic Lip Synchronization by Speech Signal Analysis,” Master Thesis, Faculty of Electrical Engineering and Computing, University of Zagreb, Zagreb, Oct-2005 …

Out of Band SCTE 35

R Franklin, A Young – SMPTE 2017 Annual Technical …, 2017 – ieeexplore.ieee.org

… point. This not only solves the core problem but provides many side benefits including automatic lip sync error correction, enabling …

Epson EH-TW6600 home theatre projector

S Dawson – Connected Home Australia, 2014 – search.informit.com.au

… The projector imposed a little delay on the image – perhaps 70 to 100ms according to my AV sync tests. However it supports the automatic lip sync adjustment available on many home theatre receivers, so it’s worth switching that feature on in your receiver …

Animation in Context: The Outcome of Targeted Transmedia Authorship

MJ Chavez – TECHART: Journal of Arts and Imaging Science, 2014 – thetechart.org

… The characters utilize automated lip-sync techniques to generate phonemes and matching visemes in an auto lip-synced talking character [6]. (Graf and Petajan 2000) In addition, the primary focus of our efforts has been a short-form animation entitled “The Adventures of Barty …

Lipreading using deep bottleneck features for optical and depth images

S Tamura, K Miyazaki, S Hayamizu – avsp2017.loria.fr

… 4. Acknowledgment A part of this work was supported by JSPS KAKENHI Grant No. 16H03211. 5. References [1] JS Chung et al., “Out of time: automated lip sync in the wild,” Proc. ACCV2016 Workshop W9 (2016). [2] T.Saitoh, “Efficient face model for lip reading,” Proc …

VisemeNet: Audio-Driven Animator-Centric Speech Animation

Y Zhou, S Xu, C Landreth, E Kalogerakis, S Maji… – arXiv preprint arXiv …, 2018 – arxiv.org

… Deep learning-based speech animation. Recent research has shown the potential of deep learning to provide a compelling solution to automatic lip-synchronization simply using an audio signal with a text transcript [Taylor et al. 2017], or even without it [Karras et al. 2017] …

Automatic Speech Recognition System Using MFCC And DTW For Marathi Isolated Words

KR Ghule, RR Deshmukh – 2015 – pdfs.semanticscholar.org

… Issue 12, December 2013 [2] Goranka Zoric, “Automatic Lip Synchronization by Speech Signal Analysis”, Master Thesis, Faculty of Electrical Engineering and Computing, University of Zagreb, Zagreb, Oct-2005. [3] Lahouti, F …

A Text-Based Chat System Embodied with an Expressive Agent

L Alam, MM Hoque – Advances in Human-Computer Interaction, 2017 – hindawi.com

… [23] is a solution to stream videos in real-time for instant messaging and/or video-chat applications developed by a company named Charamel. It provides real-time 3D avatar rendering with direct video-stream output and automatic lip synchronization live via headset …

Intelligent Expression Blending For Performance Driven Facial Animation

A Khanam, DRM MUFIT – 2018 – 111.68.101.240

INTELLIGENT EXPRESSION BLENDING FOR PERFORMANCE DRIVEN FACIAL ANIMATION by Assia Khanam A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Software Engineering) at the …

Talking Face Generation by Adversarially Disentangled Audio-Visual Representation

H Zhou, Y Liu, Z Liu, P Luo, X Wang – arXiv preprint arXiv:1807.07860, 2018 – arxiv.org

Talking Face Generation by Adversarially Disentangled Audio-Visual Representation Hang Zhou Yu Liu Ziwei Liu Ping Luo Xiaogang Wang The Chinese University of Hong Kong Abstract Talking face generation aims …

Audio and visual modality combination in speech processing applications

G Potamianos, E Marcheret, Y Mroueh, V Goel… – The Handbook of …, 2017 – dl.acm.org

… 504, 505 [doi>10.1109/83.605417]. 36. JS Chung and A. Zisserman. 2017a. Out of time: automated lip sync in the wild. In C.-S. Chen, J. Lu, and K.-K. Ma, editors, Computer Vision—ACCV 2016 Workshops, Part II, vol. LNCS 10117, pp. 251–263 …

A Model of Indonesian Dynamic Visemes From Facial Motion Capture Database Using A Clustering-Based Approach

SS Arifin, MH Muljono – pdfs.semanticscholar.org

… visemes to sound off articulation. Up to now, there is no established Indonesian dynamic viseme standard defined. A research conducted by [6] uses the dataset driven approach to automatic lip sync. The method is used in this …

The Effects of Talking-Head with Various Realism Levels on Students’ Emotions in Learning

AZ Mohamad Ali, MN Hamdan – Journal of Educational …, 2017 – journals.sagepub.com

The aim of this study was to evaluate the effects of various realistic levels of talking-head on students’ emotions in pronunciation learning. Four talking-head…

A Model of Indonesian Dynamic Visemes From Facial Motion Capture Database Using A Clustering-Based Approach.

S Sumpeno, M Hariadi – IAENG International Journal of …, 2017 – search.ebscohost.com

… visemes to sound off articulation. Up to now, there is no established Indonesian dynamic viseme standard defined. A research conducted by [6] uses the dataset driven approach to automatic lip sync. The method is used in this …
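The clustering-based approach these papers mention can be illustrated with plain k-means over lip-shape feature vectors, with each resulting cluster treated as a candidate viseme class. This is a generic stand-in, not the specific method or features used in the cited work; the two-dimensional features (for example lip width and lip opening from motion capture markers) are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iterations: int = 50,
           seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Plain k-means: returns (centroids, cluster label for each point)."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(list(points), k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each lip-shape sample joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # assignments are stable
        centroids = new_centroids
    return centroids, labels
```

Each centroid can then be inspected to see which phonemes' frames fell into it, which is how a cluster becomes a proposed viseme class.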

Multi-view visual speech recognition based on multi task learning

HJ Han, S Kang, CD Yoo – Image Processing (ICIP), 2017 IEEE …, 2017 – ieeexplore.ieee.org

… Challenges. Asian Conference on Computer Vision (ACCV), 2016. [11] Joon Son Chung and Andrew Zisserman, “Out of time: automated lip sync in the wild,” in Workshop on Multi-view Lip-reading, ACCV, 2016. [12] Iryna …

Effects of verbal Components in 3D talking-head on pronunciation learning among non-native speakers.

M Ali, A Zamzuri, K Segaran, TW Hoe – Journal of Educational Technology & …, 2015 – JSTOR

… The 3D talking-head animation was only limited to lip syncing and basic facial expressions. The automated-lip-sync technic using the special plug-in in the 3D software was utilized to synchronize the lip movement with audio …

Towards building Indonesian viseme: A clustering-based approach

S Sumpeno, M Hariadi – Computational Intelligence and …, 2013 – ieeexplore.ieee.org

… 2011. [3] Goranka Zoric, Igor S. Pandzic, “Automatic Lip Sync and Its Use in The New Multimedia Services for Mobile Devices”, Proceedings of the 8th International Conference on Telecomunication ConTEL, 2005. [4] Mohamaad …

A new chaotic secure communication scheme based on shape synchronization

Y Huang, Y Wang – Control and Decision Conference (2014 …, 2014 – ieeexplore.ieee.org

A new chaotic secure communication scheme based on shape synchronization Yuan-yuan Huang1 2, Yin-he Wang1, 1 School Of Automation, Guangdong University of Technology, Guangzhou 510006; E-mail: snailhyy@126.com …

After Two Years, Alice Is Still Stifling Life Sciences Patents; But the Tide May Be Shifting at the Federal Circuit

LC Chen – Biotechnology Law Report, 2016 – liebertpub.com

… common characteristics, calling it a genus, and noting, “genus claims create a greater risk of preemption … [but] this does not mean they are unpatentable.” The limitations of the claimed genus “prevent preemption of all processes for achieving automated lip-synchronization of 3 …

Interoperability: voice and audio standards for Space missions

O Peinado – 14th International Conference on Space Operations, 2016 – arc.aiaa.org

… Automatic lip sync can be made possible if timestamps are used in the video and voice systems; however, this requires special equipment. Ideally, the synchronization of the video and audio should be done onboard and sent embedded from space …
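When both streams carry timestamps from a shared clock, measuring lip sync error amounts to matching each video frame to the nearest audio chunk and reading off the difference. A minimal sketch, assuming sorted presentation timestamps in seconds; the function name and return layout are hypothetical.

```python
import bisect
from typing import List, Sequence, Tuple


def pair_by_timestamp(video_ts: Sequence[float],
                      audio_ts: Sequence[float]) -> List[Tuple[int, int, float]]:
    """For each video frame, find the audio chunk with the nearest timestamp.

    Both lists are presentation timestamps in seconds, sorted ascending and
    taken from a shared clock. Returns (video_index, audio_index, skew_s)
    where skew_s = audio_time - video_time.
    """
    if not audio_ts:
        return []
    pairs = []
    for vi, vt in enumerate(video_ts):
        j = bisect.bisect_left(audio_ts, vt)
        # The nearest audio timestamp is either just before or just after vt.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(audio_ts)]
        ai = min(candidates, key=lambda k: abs(audio_ts[k] - vt))
        pairs.append((vi, ai, audio_ts[ai] - vt))
    return pairs
```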

Computer Aided Articulatory Tutor: A scientific study

A Rathinavelu, H Thiagarajan – International Journal of …, 2014 – computingonline.net

… No.3, May/June 2005, pp. 341-352. [19] Lewis, J. Automated Lip-Sync: Background and Techniques, The Journal of Visualization and Computer Animation, 2:118-122, 1991. [20] Parent, R, Computer Animation Algorithms and …

Analysis of the Indonesian vowel/e/for lip synchronization animation

A Rachman, R Hidayat… – … , Computer Science and …, 2017 – ieeexplore.ieee.org

… formant trait. REFERENCES [1] S.-M. Hwang, H.-K. Yun, and B.-H. Song, “Automatic lip sync solution for virtual characters in 3D animations,” in International Conference on Convergence Technology 2(1), 2013, pp. 432–433. [2 …

Evaluation of Artificial Mouths in Social Robots

Á Castro-González, J Alcocer-Luna… – … on Human-Machine …, 2018 – roboticslab.uc3m.es

Evaluation of artificial mouths in social robots Álvaro Castro-González, Jonatan Alcocer-Luna, Maria Malfaz, Fernando Alonso-Martín, and Miguel A. Salichs …

Speaker Verification using Convolutional Neural Networks

H Salehghaffari – arXiv preprint arXiv:1803.05427, 2018 – arxiv.org

… convolutional nets,” arXiv preprint arXiv:1405.3531, 2014. [30] JS Chung and A. Zisserman, “Out of time: automated lip sync in the wild,” in Workshop on Multi-view Lip-reading, ACCV, 2016. [31] S. Chopra, R. Hadsell, and Y …

Audiovisual Synchrony Detection with Optimized Audio Features

S Sieranoja, M Sahidullah, T Kinnunen, J Komulainen… – cs.joensuu.fi

… of 12th International Conference on Information Fusion, 2009, pp. 2255–2262. 2 [20] JS Chung and A. Zisserman, “Out of time: Automated lip sync in the wild,” in Computer Vision – ACCV 2016 Workshops. Springer, 2017, pp. 251–263. 2 …

LipNet: Sentence-level lipreading

YM Assael, B Shillingford, S Whiteson… – arXiv …, 2016 – innovators-guide.ch

… Lip reading in the wild. In Asian Conference on Computer Vision, 2016a. JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In Workshop on Multi-view Lip-reading, ACCV, 2016b. M. Cooke, J. Barker, S. Cunningham, and X. Shao …

You said that?

JS Chung, A Jamaludin, A Zisserman – arXiv preprint arXiv:1705.02966, 2017 – arxiv.org

… In: Proc. ACCV (2016) [5] Chung, JS, Zisserman, A.: Out of time: automated lip sync in the wild. In: Workshop on Multi-view Lip-reading, ACCV (2016) [6] Fan, B., Wang, L., Soong, FK, Xie, L.: Photo-real talking head with deep bidirectional lstm …

Evaluation of MFCC for speaker verification on various windows

A Jain, OP Sharma – Recent Advances and Innovations in …, 2014 – ieeexplore.ieee.org

… 6, pp.I-6, 2011. [18] G. Zorić, “Automatic Lip Synchronization by Speech Signal Analysis”, Master Thesis, Department of Telecommunications, Faculty of Electrical Engineering and Computing, University of Zagreb, Croatia, 2005. K. Elissa, ‘Title of paper if known, ” unpublished …

Visual Speech Enhancement using Noise-Invariant Training

A Gabbay, A Shamir, S Peleg – arXiv preprint arXiv:1711.08789, 2017 – arxiv.org

… Lip reading sentences in the wild. arXiv:1611.05358, 2016. [6] JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In ACCV’16, pages 251–263, 2016. [7] M. Cooke, J. Barker, S. Cunningham, and X. Shao …

VoxCeleb: a large-scale speaker identification dataset

A Nagrani, JS Chung, A Zisserman – arXiv preprint arXiv:1706.08612, 2017 – arxiv.org

… video,” Image and Vision Computing, vol. 27, no. 5, 2009. [36] JS Chung and A. Zisserman, “Out of time: automated lip sync in the wild,” in Workshop on Multi-view Lip-reading, ACCV, 2016. [37] K. Chatfield, K. Simonyan, A. Vedaldi …

On the evaluation of inversion mapping performance in the acoustic domain.

K Richmond, ZH Ling, J Yamagishi, B Uria – INTERSPEECH, 2013 – Citeseer

… feedback system,” in Proc. Interspeech, 2011, pp. 589–592. [4] J. Lewis, “Automated lip-sync: Background and techniques,” The Journal of Visualization and Computer Animation, vol. 2, no. 4, pp. 118–122, 1991. [5] C. Bregler …

Visual Speech Enhancement

A Gabbay, A Shamir, S Peleg – Proceedings of Interspeech (in press), 2018 – cs.huji.ac.il

… [24] J. Ngiam, A. Khosla, M. Kim, J. Nam, H. Lee, and AY Ng, “Multimodal deep learning,” in ICML’11, 2011, pp. 689–696. [25] JS Chung and A. Zisserman, “Out of time: automated lip sync in the wild,” in ACCV’16, 2016, pp. 251–263 …

Isolated Iqlab checking rules based on speech recognition system

B Yousfi, AM Zeki, A Haji – Information Technology (ICIT), 2017 …, 2017 – ieeexplore.ieee.org

… COST Action, vol. 249, 2000. [14] G. Zorić, “Automatic lip synchronization by speech signal analysis.” Fakultet elektrotehnike i računarstva, Sveučilište u Zagrebu, 2005. [15] V. Madisetti and D. Williams, Digital signal processing handbook on CD-ROM. CRC Press, 1999 …

A Bibliography of The Journal of Visualization and Computer Animation

NHF Beebe – 2017 – ctan.math.utah.edu

… CODEN JVCAEO. ISSN 1049-8907 (print), 1099-1778 (electronic). Lewis:1991:ALS [29] J. Lewis. Automated lip-sync: Background and techniques. The Journal of Visualization and Computer Animation, 2(4):118–122, October–December 1991. CODEN JVCAEO …

Data-Driven Approach to Synthesizing Facial Animation Using Motion Capture

K Ruhland, M Prasad… – IEEE computer graphics …, 2017 – ieeexplore.ieee.org

… base. Furthermore, the use of automated lip-sync software (from audio) is already a commonplace approach to speeding up animation production of lip movement, so we do not attempt to tackle this particular issue here. Animation …

LipNet: End-to-end sentence-level lipreading

YM Assael, B Shillingford, S Whiteson… – arXiv preprint arXiv …, 2016 – arxiv.org

… JS Chung and A. Zisserman. Lip reading in the wild. In Asian Conference on Computer Vision, 2016a. JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In Workshop on Multi-view Lip-reading, ACCV, 2016b. J. Chung, C. Gulcehre, K. Cho, and Y. Bengio …

Lip Reading Sentences in the Wild.

JS Chung, AW Senior, O Vinyals, A Zisserman – CVPR, 2017 – openaccess.thecvf.com

Lip Reading Sentences in the Wild Joon Son Chung1 joon@robots.ox.ac.uk Andrew Senior2 andrewsenior@google.com Oriol Vinyals2 vinyals@google.com Andrew Zisserman1,2 az@robots.ox.ac.uk 1 Department …

Evaluating automatic speech recognition-based language learning systems: a case study

J van Doremalen, L Boves, J Colpaert… – Computer Assisted …, 2016 – Taylor & Francis

Patenting Inventions Related to Non-Impact Printing in Light of the Recent US Supreme Court Case: Alice Corp. v. CLS Bank

SM Slomowitz, GA Greene… – NIP & Digital Fabrication …, 2015 – ingentaconnect.com

… While the patents do not preempt the field of automatic lip synchronization for computer-generated 3D animation, they do preempt the field of such lip synchronization using a rules-based morph target approach.” Even this argument strains credulity …

Look, listen and learn

R Arandjelovic, A Zisserman – 2017 IEEE International …, 2017 – openaccess.thecvf.com

… Combining labeled and unlabeled data with co-training. In Computational learning theory, 1998. [6] JS Chung and A. Zisserman. Out of time: Automated lip sync in the wild. In Workshop on Multi-view Lip-reading, ACCV, 2016. [7] C. Doersch, A. Gupta, and AA Efros …

Let me Listen to Poetry, Let me See Emotions.

D Arellano, C Manresa-Yee, V Helzle – J. UCS, 2014 – jucs.org

… properties. Another important feature of Frapper is the automatic lip-sync. Thus, having previously defined the poses for each viseme, these are displayed when the corresponding viseme is triggered by the TTS. Additional …
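The triggering scheme described here, where a predefined pose is shown when the TTS engine reports the corresponding viseme, can be sketched as a time lookup over viseme events. Frapper’s actual interface is not described in the snippet, so the event format, the `rest` default pose, and the linear blend are assumptions for illustration.

```python
import bisect
from typing import Sequence, Tuple

# (start_time_s, viseme_name) events, as a TTS engine might report them.
VisemeEvent = Tuple[float, str]


def pose_at(events: Sequence[VisemeEvent], t: float,
            blend_s: float = 0.05) -> Tuple[str, str, float]:
    """Return (previous_viseme, current_viseme, weight) to display at time t.

    events must be sorted by start time. The current pose fades in linearly
    over blend_s seconds after its event fires; weight is the share of the
    current pose (1.0 once the blend has finished).
    """
    times = [start for start, _ in events]
    i = bisect.bisect_right(times, t) - 1
    if i < 0:
        return ("rest", "rest", 1.0)  # before the first event: neutral mouth
    previous = events[i - 1][1] if i > 0 else "rest"
    weight = min(1.0, (t - events[i][0]) / blend_s) if blend_s > 0 else 1.0
    return (previous, events[i][1], weight)
```

A renderer would call `pose_at` once per displayed frame and mix the two predefined poses by `weight`, so the mouth follows the TTS timeline regardless of the rendering frame rate.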

Irrational Science Breeds Irrational Law

C Small – Emory LJ, 2017 – HeinOnline

… See id. at 1315 (“The limitations in claim 1 prevent preemption of all processes for achieving automatic lip-synchronization of 3-D characters.”); see also Robert R. Sachs, McRO: Preemption Matters After All, BLSKI BLOG, (Sept …

Combining Multiple Views for Visual Speech Recognition

M Zimmermann, MM Ghazi, HK Ekenel… – arXiv preprint arXiv …, 2017 – arxiv.org

Combining Multiple Views for Visual Speech Recognition Marina Zimmermann1, Mostafa Mehdipour Ghazi2, Hazım Kemal Ekenel3, Jean-Philippe Thiran1 1 Signal Processing Laboratory (LTS5), Ecole Polytechnique …

VoxCeleb2: Deep Speaker Recognition

JS Chung, A Nagrani, A Zisserman – arXiv preprint arXiv:1806.05622, 2018 – arxiv.org

… BMVC., 2017. [37] JS Chung and A. Zisserman, “Out of time: automated lip sync in the wild,” in Workshop on Multi-view Lip-reading, ACCV, 2016. [38] JS Chung and A. Zisserman, “Learning to lip read words by watching videos,” CVIU, 2018 …

Comparison of voice systems for Human Space Flight and Satellite Missions

O Peinado, D Feeney – 2018 SpaceOps Conference, 2018 – arc.aiaa.org

… An automatic lip sync can be made possible if timestamps are used in the video and voice systems; however, this requires special equipment. Ideally, the synchronization of the video and audio should be done onboard and sent embedded from space …

From Benedict Cumberbatch to Sherlock Holmes: Character identification in TV series without a script

A Nagrani, A Zisserman – arXiv preprint arXiv:1801.10442, 2018 – arxiv.org

… In Proceedings of the British Machine Vision Conference, 2014. [6] JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In Workshop on Multi-view Lip-reading, ACCV, 2016. [7] RG Cinbis, JJ Verbeek, and C. Schmid …

The Conversation: Deep Audio-Visual Speech Enhancement

T Afouras, JS Chung, A Zisserman – arXiv preprint arXiv:1804.04121, 2018 – arxiv.org

… BMVC., 2017. [38] JS Chung, A. Nagrani, , and A. Zisserman, “Voxceleb2: Deep speaker recognition,” in arXiv, 2018. [39] JS Chung and A. Zisserman, “Out of time: automated lip sync in the wild,” in Workshop on Multi-view Lip-reading, ACCV, 2016 …

End-to-End Multi-View Lipreading

S Petridis, Y Wang, Z Li, M Pantic – arXiv preprint arXiv:1709.00443, 2017 – arxiv.org

… In Asian Conference on Computer Vision, pages 87–103. Springer, 2016. [8] JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In Workshop on Multiview Lipreading, Asian Conference on Computer Vision, pages 251–263. Springer, 2016 …

Innovative Accounting Interviewing: A Comparison of Real and Virtual Accounting Interviewers

M Pickard, RM Schuetzler, J Valacich, DA Wood – 2018 – papers.ssrn.com

… audio generation software. In both treatment cases, Visage Technologies’™ Real-Time Automatic Lip Sync module was used to sync the ECA’s lips to the audio files. We used Singular Inversions’ FaceGen Modeller to generate ECA models for the …

Real-time Rendering Facial Skin Colours to Enhance Realism of Virtual Human

MHA Alkawaz – 2013 – eprints.utm.my

REAL-TIME RENDERING OF FACIAL SKIN COLOURS TO ENHANCE REALISM OF VIRTUAL HUMAN MOHAMMAD HAZIM AMEEN ALKAWAZ A dissertation submitted in partial fulfillment of the requirements for the award of the degree of …

X2Face: A network for controlling face generation by using images, audio, and pose codes

O Wiles, A Koepke, A Zisserman – arXiv preprint arXiv:1807.10550, 2018 – arxiv.org

… In: NIPS (2016) 8. Chung, JS, Senior, A., Vinyals, O., Zisserman, A.: Lip reading sentences in the wild. In: Proc. CVPR (2017) 9. Chung, JS, Zisserman, A.: Out of time: automated lip sync in the wild. In: Workshop on Multi-view Lip-reading, ACCV (2016) 10 …

Word Spotting in Silent Lip Videos

A Jha, VP Namboodiri, CV Jawahar – 2018 IEEE Winter Conference …, 2018 – computer.org

… Lip reading in the wild. In ACCV, 2016. [12] JS Chung and A. Zisserman. Out of time: automated lip sync in the wild. In ACCV, 2016. [13] M. Cooke, J. Barker, S. Cunningham, and X. Shao. An audio-visual corpus for speech perception and automatic speech recognition …

Seeing voices and hearing faces: Cross-modal biometric matching

A Nagrani, S Albanie… – Proceedings of the IEEE …, 2018 – openaccess.thecvf.com

Seeing Voices and Hearing Faces: Cross-modal biometric matching Arsha Nagrani Samuel Albanie Andrew Zisserman VGG, Dept. of Engineering Science, University of Oxford {arsha, albanie, az}@robots.ox.ac.uk Abstract …

Co-Training of Audio and Video Representations from Self-Supervised Temporal Synchronization

B Korbar, D Tran, L Torresani – arXiv preprint arXiv:1807.00230, 2018 – arxiv.org

… Ucf101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402, 2012. [27] Joon Son Chung and Andrew Zisserman. Out of time: automated lip sync in the wild. In Asian Conference on Computer Vision, pages 251–263. Springer, 2016 …

Impacting the social presence of virtual agents by scaling the fidelity of their speech and movement

J Fietkau – 2015 – en.julian-fietkau.de

Impacting the social presence of virtual agents by scaling the fidelity of their speech and movement Julian Fietkau February 19th, 2015 Master’s thesis for the purpose of attaining the academic degree Master of Science. (M.Sc.) First supervisor: Prof …

Speech-driven talking face using embedded confusable system for real time mobile multimedia

PY Shih, A Paul, JF Wang, YH Chen – Multimedia tools and applications, 2014 – Springer

This paper presents a real-time speech-driven talking face system which provides low computational complexity and smoothly visual sense. A novel embedded confusable system is proposed to generate an e.

Audio-visual scene analysis with self-supervised multisensory features

A Owens, AA Efros – arXiv preprint arXiv:1804.03641, 2018 – arxiv.org

Audio-Visual Scene Analysis with Self-Supervised Multisensory Features Andrew Owens Alexei A. Efros UC Berkeley Abstract. The thud of a bouncing ball, the onset of speech as lips open — when visual and audio …

The use of articulatory movement data in speech synthesis applications: An overview—Application of articulatory movements using machine learning algorithms—

K Richmond, Z Ling, J Yamagishi – Acoustical Science and …, 2015 – jstage.jst.go.jp

The use of articulatory movement data in speech synthesis applications: An overview – Application of articulatory movements using machine learning algorithms – Korin Richmond, Zhenhua Ling and Junichi Yamagishi …

Large-Scale Visual Speech Recognition

B Shillingford, Y Assael, MW Hoffman, T Paine… – arXiv preprint arXiv …, 2018 – arxiv.org

Large-Scale Visual Speech Recognition Brendan Shillingford, Yannis Assael, Matthew W. Hoffman, Thomas Paine, Cían Hughes, Utsav Prabhu, Hank Liao, Hasim Sak, Kanishka Rao, Lorrayne Bennett, Marie Mulville …

Learnable PINs: Cross-Modal Embeddings for Person Identity

A Nagrani, S Albanie, A Zisserman – arXiv preprint arXiv:1805.00833, 2018 – arxiv.org

Learnable PINs: Cross-Modal Embeddings for Person Identity Arsha Nagrani, Samuel Albanie, Andrew Zisserman VGG, Department of Engineering Science, Oxford {arsha,albanie,az}@robots.ox.ac.uk Abstract. We …

An Implementation of isiXhosa Text-to-Speech Modules to Support e-Services in Marginalized Rural Areas

OP Kogeda, S Mhlana, T Mamello, T Olwal – User-Centric Technology …, 2014 – Springer

Information and communication technology (ICT) projects are being initiated and deployed in marginalized areas to help improve the standard of living for community members. This has led to a new field.

Computer Facial Animation: A Review

HY Ping, LN Abdullah… – … Journal of Computer …, 2013 – pdfs.semanticscholar.org

… Computer, pp. 105-116, 1996. [9] K. Waters and T. Levergood, “DECface: An automatic lip-synchronization algorithm for synthetic faces,” Technical Report 93/4, Digital Equipment Corp, Cambridge Research Laboratory, 1993. [10] B …

Emotion Recognition in Speech using Cross-Modal Transfer in the Wild

S Albanie, A Nagrani, A Vedaldi… – arXiv preprint arXiv …, 2018 – arxiv.org

Emotion Recognition in Speech using Cross-Modal Transfer in the Wild Samuel Albanie*, Arsha Nagrani*, Andrea Vedaldi, Andrew Zisserman Visual Geometry Group, Department of Engineering Science, University of Oxford {albanie,arsha,vedaldi,az}@robots.ox.ac.uk …

Fractality of Patentability under the New Subject Matter Eligibility Scheme

R Sadr, EJ Zolotova – NEULJ, 2017 – HeinOnline

NORTHEASTERN UNIVERSITY LAW REVIEW Fractality of Patentability under the New Subject Matter Eligibility Scheme Reza Sadr, Ph.D.*, Esther J. Zolotova** * Patent Counsel, Pierce Atwood LLP https://www.pierceatwood …

Speaker Inconsistency Detection in Tampered Video

P Korshunov, S Marcel – 2018 – publications.idiap.ch

… 75, no. 9, pp. 5329–5343, May 2016. [6] JS Chung and A. Zisserman, “Out of time: Automated lip sync in the wild,” in Computer Vision, ACCV 2016 Workshops. Cham: Springer International Publishing, 2017, pp. 251–263. [7 …

Exploring the Abstract: Patent Eligibility Post Alice Corp v. CLS Bank

J Clizer – Mo. L. Rev., 2015 – HeinOnline

NOTE Exploring the Abstract: Patent Eligibility Post Alice Corp v. CLS Bank Alice Corp. Pty. Ltd. v. CLS Bank International, 134 S. Ct. 2347 (2014). JOHN CLIZER* I. INTRODUCTION Most Americans are probably aware of the patent system …

Amending Alice: Eliminating the Undue Burden of Significantly More

MR Woodward – Alb. L. Rev., 2017 – HeinOnline

AMENDING ALICE: ELIMINATING THE UNDUE BURDEN OF “SIGNIFICANTLY MORE” Michael R. Woodward* INTRODUCTION In 2014, the Supreme Court decided Alice Corp. v. CLS Bank, significantly expanding …

Audio-driven facial animation by joint end-to-end learning of pose and emotion

T Karras, T Aila, S Laine, A Herva… – ACM Transactions on …, 2017 – dl.acm.org

Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion TERO KARRAS, NVIDIA TIMO AILA, NVIDIA SAMULI LAINE, NVIDIA ANTTI HERVA, Remedy Entertainment JAAKKO LEHTINEN, NVIDIA and Aalto University 1 Emotional state …

Exploring the Abstract: Patent Eligibility Post Alice Corp v. CLS Bank

J Clizer – Missouri Law Review, 2015 – scholarship.law.missouri.edu

Missouri Law Review …

Repurposing hand animation for interactive applications

SW Bailey, M Watt, JF O’Brien – … on Computer Animation, 2016 – pdfs.semanticscholar.org

Eurographics / ACM SIGGRAPH Symposium on Computer Animation (2016) Ladislav Kavan and Chris Wojtan (Editors) Repurposing Hand Animation for Interactive Applications Stephen W. Bailey, Martin Watt, James F. O’Brien …

Face Mask Extraction in Video Sequence

Y Wang, B Luo, J Shen, M Pantic – arXiv preprint arXiv:1807.09207, 2018 – arxiv.org

Face Mask Extraction in Video Sequence Yujiang Wang · Bingnan Luo · Jie Shen · Maja Pantic Abstract Inspired by the recent development of deep network-based methods in semantic image segmentation, we …

Enhanced Facial Expression Using Oxygenation Absorption of Facial Skin

MH Ameen – 2015 – eprints.utm.my

ENHANCED FACIAL EXPRESSION USING OXYGENATION ABSORPTION OF FACIAL SKIN MOHAMMAD HAZIM AMEEN A thesis submitted in fulfilment of the requirements for the award of the degree of Doctor of Philosophy (Computer science) Faculty of Computing …

The 101 Conundrum: Creating a Framework to Solve Problems Surrounding Interpretation of 35 USC Sec. 101

R Mazzola – Chi.-Kent J. Intell. Prop., 2014 – HeinOnline

THE 101 CONUNDRUM: CREATING A FRAMEWORK TO SOLVE PROBLEMS SURROUNDING INTERPRETATION OF 35 USC § 101 ROBERT MAZZOLA* INTRODUCTION Section 101 cases are a particularly vexing …

After B&B Hardware, What is the Full Scope of Estoppel Arising From a PTAB Decision in District Court Litigation?

GG Drutchas, JL Lovsin – mbhb.com

After B&B Hardware, What is the Full Scope of Estoppel Arising From a PTAB Decision in District Court Litigation? By Grantland G. Drutchas and James L. Lovsin The America Invents Act (AIA) created several adjudicative …

Audio and Visual Speech Recognition Recent Trends

LH Wei, SK Phooi, A Li-Minn – Intelligent Image and Video …, 2013 – igi-global.com

Chapter 2 Lee Hao Wei, Sunway University, Malaysia; Seng Kah Phooi, Sunway University, Malaysia …

Two Stepping with Alice in Justice Stevens’ Shadow

LR Sears – J. Pat. & Trademark Off. Soc’y, 2017 – HeinOnline

Two Stepping with Alice in Justice Stevens’ Shadow L. Rex Sears, Ph.D., JD* Abstract Alice Corp. Pty. Ltd. v. CLS Bank International articulated a two-step procedure for determining patent eligibility: “[f]irst, we determine …

Patentable Subject Matter After Alice-Distinguishing Narrow Software Patents from Overly Broad Business Method Patents

O Zivojnovic – Berkeley Tech. LJ, 2015 – HeinOnline

PATENTABLE SUBJECT MATTER AFTER ALICE-DISTINGUISHING NARROW SOFTWARE PATENTS FROM OVERLY BROAD BUSINESS METHOD PATENTS Ognjen Zivojnovic For the last forty years, software has perplexed patent law …

3d convolutional neural networks for cross audio-visual matching recognition

A Torfi, SM Iranmanesh, N Nasrabadi, J Dawson – IEEE Access, 2017 – ieeexplore.ieee.org

Detection and Analysis of Content Creator Collaborations in YouTube Videos using Face-and Speaker-Recognition

M Lode, M Örtl, C Koch, A Rizk, R Steinmetz – arXiv preprint arXiv …, 2018 – arxiv.org

Detection and Analysis of Content Creator Collaborations in YouTube Videos using Face- and Speaker-Recognition Moritz Lode, Michael Örtl, Christian Koch, Amr Rizk, Ralf Steinmetz Technische Universität …

Towards AmI Systems Capable of Engaging in ‘Intelligent Dialog’ and ‘Mingling Socially with Humans’

SE Bibri – The Human Face of Ambient Intelligence, 2015 – Springer

This chapter seeks to address computational intelligence in terms of conversational and dialog systems and computational processes and methods to support complex communicative tasks. In so doing, it.

Survey on Automatic Lip-Reading in the Era of Deep Learning

A Fernandez-Lopez, F Sukno – Image and Vision Computing, 2018 – Elsevier

Gating Neural Network for Large Vocabulary Audiovisual Speech Recognition

F Tao, C Busso – IEEE/ACM Transactions on Audio, Speech and …, 2018 – dl.acm.org

IEEE/ACM TRANSACTIONS ON AUDIO, SPEECH, AND LANGUAGE PROCESSING, VOL. 26, NO. 7, JULY 2018 Gating Neural Network for Large Vocabulary Audiovisual Speech Recognition Fei Tao, Student …

Sketch-based Facial Modeling and Animation: an approach based on mobile devices

ALVF Orvalho – 2013 – repositorio-aberto.up.pt

FACULDADE DE ENGENHARIA DA UNIVERSIDADE DO PORTO Sketch-based Facial Modeling and Animation: an approach based on mobile devices Ana Luísa de Vila Fernandes Orvalho Mestrado Integrado em Engenharia Eletrotécnica e de Computadores …

Legislative Action—Not Further Judicial Action—is Required to Correct the Determination of Patentable Subject Matter in Regard to 35 USC § 101

MW Hrozenchik – 2018 – search.proquest.com

Legislative Action – Not Further Judicial Action – is Required to Correct the Determination of Patentable Subject Matter in Regard to 35 USC § 101 By Mark William Hrozenchik BEEE, May 1984, Stevens Institute of Technology …

Deep Lipreading

MC Doukas – 2017 – imperial.ac.uk

IMPERIAL COLLEGE LONDON DEPARTMENT OF COMPUTING Deep Lipreading Author: Michail-Christos Doukas Supervisor: Stefanos P. Zafeiriou Submitted in partial fulfillment of the requirements for the MSc degree …