Notes:

NVIDIA Omniverse Audio2Face is an application that uses deep learning to generate 3D facial animation from audio input. It can be used to animate 3D characters in virtual reality or other 3D environments, or to produce video content with realistic lip sync.

To use Audio2Face, you provide an audio clip of speech and a 3D face model to animate. The model does not have to depict the speaker; it can be any character mesh that has been set up for the tool. The system analyzes the audio and generates an animation of the model whose lip movements and facial expressions match the speech.
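
As a rough illustration of how per-frame animation data can drive a face mesh, the sketch below applies blendshape weights to a neutral mesh: each vertex is the neutral position plus a weighted sum of per-shape offsets. This is a generic blendshape formula, not Audio2Face's internal method or API; the shape names (`jawOpen`, `mouthSmile`), the two-vertex mesh, and the weight values are all hypothetical and chosen only to keep the example small.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Return neutral + sum(weight * delta) for every vertex."""
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        # Each blendshape stores one offset per vertex of the neutral mesh.
        for i, (dx, dy, dz) in enumerate(deltas[name]):
            result[i][0] += weight * dx
            result[i][1] += weight * dy
            result[i][2] += weight * dz
    return [(x, y, z) for x, y, z in result]


# Hypothetical two-vertex "mouth": lower lip at the origin, upper lip above it.
NEUTRAL: List[Vec3] = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

# Hypothetical blendshape offsets (assumed names, not taken from any real rig).
DELTAS: Dict[str, List[Vec3]] = {
    "jawOpen": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],
    "mouthSmile": [(0.5, 0.0, 0.0), (0.5, 0.0, 0.0)],
}

# One weight dictionary per animation frame, as an audio-driven tool might export.
FRAMES: List[Dict[str, float]] = [
    {"jawOpen": 0.0, "mouthSmile": 0.0},
    {"jawOpen": 0.5, "mouthSmile": 0.2},
]

animation = [apply_blendshapes(NEUTRAL, DELTAS, frame) for frame in FRAMES]

for index, mesh in enumerate(animation):
    print(f"frame {index}: {mesh}")
```

With all weights at zero the mesh stays in its neutral pose; as `jawOpen` rises, the lower vertex moves down while the upper one stays put. A real pipeline would read the weights from an exported animation file and apply them in the target engine rather than in plain Python.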

One potential use for Audio2Face is creating realistic avatars for virtual reality or online communication. Animating a 3D face model from a person's voice can make the experience more lifelike and immersive for the user. Audio2Face could also be used to improve the realism of animated characters in video games or other media, or to produce more natural-looking lip sync for video content.

Resources:

See also:

100 Best UnrealEngine MetaHuman Videos | Lip Synchronization Meta Guide | Speech Synthesis Meta Guide

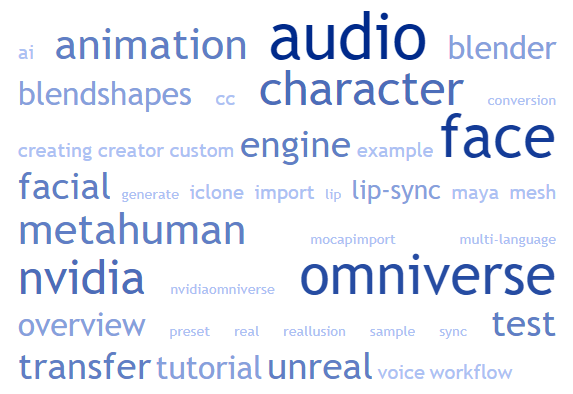

- NVIDIA Audio2Face – Adding Blendshapes to Paragon Aurora – First Results

- Nvidia Omniverse Audio2face to Maya to Unreal Engine Metahuman

- AI-Powered Facial Animation with Omniverse Audio2Face

- Nvidia Omniverse Audio2face to Unreal Engine Metahuman Tutorial

- BlendShape Generation in Omniverse Audio2Face

- Overview of Streaming Audio Player in Omniverse Audio2Face

- Incorporating Facial Animation using Audio2Face

- Unreal Engine Cinematic Video Metahuman+Audio2face

- NVidia Omniverse audio2face quick sample

- Unreal Engine 4 27+Metahuman+Replica+Audio2Face

- Hot Knife Audio2Face

- MetaHuman example with Audio2Face (UE5)

- Nvidia Audio2Face – test

- iClone x Omniverse Audio2Face – Language Independent Facial & Lip-sync Animation from Voice

- Character Creator con animación de Omniverse Audio2Face

- Omniverse Audio2Face Englisch and German Test

- Character Lip Sync Tutorial with Nvidia Omniverse – Audio2Face

- Omniverse Audio2Face to Reallusion iClone to Unreal Engine MetaHuman Workflow

- Testing Unreal Engine’s Metahuman with NVIDIA’s Audio2Face

- Audio2Face to Metahuman: Unreal Engine + NVIDIA Omniverse & RTXGI

- Nvidia Audio2face to Metahuman Test

- Omniverse Audio2face Metahuman Tutorial

- Overview of the Omniverse Audio2Face to Metahuman Pipeline with Unreal Engine 4 Connector

- Audio2Face

- Audio2Face 3D NVidia Real Time Voice to 3D Lip sync.

- audio2Face mine still not working

- My iClone CC3 character animated in Onmiverse Audio2Face in real time!

- BlendShapes Part 4: Custom Character Conversion and Connection in Omniverse Audio2Face

- BlendShapes Part 3: Solver Parameters and Preset Adjustment in Omniverse Audio2Face

- BlendShapes Part 2: Conversion and Weight Export in Omniverse Audio2Face

- BlendShapes Part 1: Importing a Mesh in Omniverse Audio2Face

- Facial Animation & Multi-Language Lip-Sync in Omniverse Audio2Face with Reallusion Character Creator

- Character Transfer Part 7: Creating Presets in Omniverse Audio2Face

- Audio2face testing in blender (eevee)

- Character Creator x Omniverse Audio2Face – Facial Animation & Multi-Language Lip-Sync Through AI

- Tutorial: Lipsync animations in Blender using Audio2face. Example on CC3 character

- Audio2face test on a CC3 Character, inside Blender

- Alien Nvidia Audio2Face test

- NVIDIA Omniverse Audio2Face with Resemble AI’s Synthetic Voices Demo

- EARFQUAKE but Audio2Face animation

- Nvidia’s Omniverse – Audio2Face Comparison with my custom Metahuman text to animation project

- Audio2face import problems, workaround tip

- Audio2face + Deeplabcut Test in Blender

- MocapImport 0.71 – Audio2face to Blender – Tutorial

- MocapImport 0.7 – Audio2face to Blender

- Code PREVIEW – Tutorial How to import Audio2face to Blender

- Metahuman + NVIDIA Omniverse Audio2Face (Maya)

- Omniverse Audio2Face ~ using AI to Generate Facial Animation & Dialogue Lip-sync from Audio

- Creating Facial #Animation using #Audio2Face in #NVIDIAOmniverse is so easy a kid could do it

- How to Use Audio2Face in NVIDIA Omniverse Machinima

- Character Transfer: Maya Workflow Example in Omniverse Audio2Face

- Creating Facial #Animation using #Audio2Face in #NVIDIAOmniverse

- Overview Part 1: Application Overview for NVIDIA Omniverse Audio2Face

- Character Transfer Part 6: Custom Mesh in Omniverse Audio2Face

- Character Transfer Part 4: Post Wrap in Omniverse Audio2Face

- Character Transfer Part 3: Mesh Fitting in Omniverse Audio2Face

- Overview Part 2: Recorder and Live Mode in NVIDIA Omniverse Audio2Face

- Character Transfer Part 1: Overview in Omniverse Audio2Face

- Character Transfer Part 2: User Interface and Samples in Omniverse Audio2Face

- Character Transfer Part 5: Iterative Workflow in Omniverse Audio2Face