Notes:

Affective computing is a subfield of artificial intelligence that focuses on designing and developing systems that can recognize, interpret, and respond to human emotions. Affective computing systems typically use technologies such as natural language processing (NLP), machine learning (ML), and computer vision to analyze and interpret emotional cues in human behavior, such as facial expressions, voice, and body language.
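
As a concrete illustration of analyzing emotional cues in text, here is a minimal sketch of lexicon-based valence scoring, assuming a tiny hand-made lexicon. Each word is looked up, a preceding negator flips its sign, and the matched scores are averaged. The lexicon values, the negator list, and the function name `valence_score` are hypothetical. A real system would use a curated lexicon such as SentiWordNet (listed under Resources) or a trained model.

```python
import re

# Hypothetical toy lexicon: word -> valence in [-1, 1].
# Values are illustrative only, NOT taken from SentiWordNet or any real lexicon.
VALENCE_LEXICON = {
    "happy": 0.8,
    "great": 0.7,
    "love": 0.9,
    "sad": -0.7,
    "angry": -0.8,
    "terrible": -0.9,
    "tired": -0.3,
}

# Hypothetical negators: each flips the sign of the next lexicon word that follows it.
NEGATORS = {"not", "no", "never", "don't"}


def valence_score(text: str) -> float:
    """Average valence of lexicon words in `text`, with crude negation handling.

    Returns 0.0 (neutral) when no lexicon word is found.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    scores = []
    negate = False
    for token in tokens:
        if token in NEGATORS:
            negate = True
            continue
        if token in VALENCE_LEXICON:
            value = VALENCE_LEXICON[token]
            scores.append(-value if negate else value)
            negate = False  # negation applies to one lexicon word only
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    for sentence in ["I am so happy today", "I am not happy", "What a terrible, sad day"]:
        print(f"{sentence!r}: {valence_score(sentence):+.2f}")
```

Averaging keeps the score within the lexicon's [-1, 1] range regardless of text length. The negation rule is deliberately crude and misreads phrases such as "not only happy".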

The goal of affective computing is to enable machines to understand and respond to human emotions in a natural and intuitive way. This involves creating systems that can accurately detect and interpret emotional cues, and then generate appropriate responses based on the detected emotions.
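
The detect-then-respond loop described above can be sketched as a small response policy, assuming some upstream recognizer already returns a probability for each emotion label. The labels, the reply templates, `choose_response`, and its `threshold` parameter are hypothetical and chosen only for illustration.

```python
from typing import Dict

# Hypothetical reply templates keyed by emotion label (illustrative only).
RESPONSES: Dict[str, str] = {
    "joy": "That's great to hear! Want to tell me more?",
    "sadness": "I'm sorry you're feeling down. Is there anything I can do?",
    "anger": "I can tell this is frustrating. Let's try to sort it out together.",
    "neutral": "Okay. How can I help?",
}


def choose_response(probabilities: Dict[str, float], threshold: float = 0.6) -> str:
    """Return a reply for the most likely detected emotion.

    `probabilities` maps emotion labels to scores from some upstream recognizer.
    If the top score is below `threshold`, or the label has no template,
    the detection is treated as unreliable and the neutral reply is used.
    """
    if not probabilities:
        return RESPONSES["neutral"]
    label, score = max(probabilities.items(), key=lambda item: item[1])
    if score < threshold or label not in RESPONSES:
        label = "neutral"
    return RESPONSES[label]


if __name__ == "__main__":
    print(choose_response({"joy": 0.80, "sadness": 0.15, "anger": 0.05}))
    print(choose_response({"joy": 0.40, "sadness": 0.35, "anger": 0.25}))
```

Falling back to a neutral reply when confidence is low is one simple way to avoid reacting strongly to a misread emotion. This matters because inferring emotion from behavioral cues can be unreliable (see the 2019 review under References).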

Affective computing has a wide range of potential applications, including virtual assistants, social robots, and education and healthcare technology. For example, an affective computing system could be used to develop a virtual assistant that can detect and respond to a user’s emotional state, or a social robot that can provide emotional support to elderly or disabled individuals.

Overall, affective computing is an emerging field focused on systems that understand and respond to human emotions in a natural, intuitive way, with potential applications across many domains.

- Affect analysis is the process of analyzing and interpreting the emotions of a person or group of people. This can be done through various methods, such as analyzing facial expressions, vocal tones, and written or spoken language.

- Emotional AI, or Emotional Artificial Intelligence, refers to artificial intelligence systems that are designed to recognize, interpret, and respond to human emotions. These systems may use various technologies, such as machine learning algorithms and natural language processing, to analyze emotional data and generate appropriate responses.

- Emotional data refers to data that relates to or reflects the emotions of a person or group of people. This data can be collected through various methods, such as surveys, interviews, or by analyzing facial expressions and vocal tones.

- Emotional input refers to the process of providing emotional data to an artificial intelligence system. This can be done through various methods, such as providing written or spoken responses, or by using sensors to collect data about facial expressions and vocal tones.

- Emotional output refers to the process of generating responses based on emotional data. This can be done through various methods, such as generating written or spoken responses, or by using actuators to drive physical outputs, such as a robot's facial expressions or gestures.

- Emotional recognition refers to the ability of an artificial intelligence system to recognize and interpret the emotions of a person or group of people. This can be done through various methods, such as analyzing facial expressions and vocal tones, or by analyzing written or spoken language.

- Neural emotion recognition refers to the use of neural networks (computational models loosely inspired by networks of biological neurons) to recognize and interpret human emotions from inputs such as facial images, speech, or text.

- Neural emotion synthesis refers to the use of neural networks to generate responses based on emotional data. This can be done through various methods, such as generating written or spoken responses, or by driving actuators that produce physical outputs, such as a robot's facial expressions or gestures.

- Real-time emotional stream refers to the continuous flow of emotional data that is collected and analyzed in real time. This data can be used to generate real-time responses or to track changes in emotions over time.
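
To illustrate the neural emotion recognition entry above, here is the smallest possible case: a single-layer network (a linear layer followed by softmax, equivalent to multinomial logistic regression) trained by stochastic gradient descent in pure Python. The two input features (smile intensity and brow lowering), the toy training data, and all names are hypothetical. Practical systems use much deeper networks over raw images, audio, or text.

```python
import math
import random
from typing import List, Sequence

LABELS = ["joy", "anger", "neutral"]

# Hypothetical toy features per face: [smile intensity, brow lowering], each in [0, 1].
TRAIN_X = [
    [0.9, 0.1], [0.8, 0.0], [0.7, 0.2],  # joy
    [0.1, 0.9], [0.0, 0.8], [0.2, 0.7],  # anger
    [0.1, 0.1], [0.2, 0.2], [0.0, 0.1],  # neutral
]
TRAIN_Y = [0, 0, 0, 1, 1, 1, 2, 2, 2]  # indices into LABELS


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxClassifier:
    """Single-layer neural network: logits = W @ x + b, followed by softmax."""

    def __init__(self, n_features: int, n_classes: int, seed: int = 0) -> None:
        rng = random.Random(seed)  # fixed seed for reproducible initialization
        self.W = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in range(n_classes)]
        self.b = [0.0] * n_classes

    def predict_proba(self, x: Sequence[float]) -> List[float]:
        logits = [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(self.W, self.b)]
        return softmax(logits)

    def predict(self, x: Sequence[float]) -> int:
        probs = self.predict_proba(x)
        return max(range(len(probs)), key=probs.__getitem__)

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int],
            lr: float = 0.5, epochs: int = 500) -> "SoftmaxClassifier":
        # Per-example stochastic gradient descent on the cross-entropy loss.
        for _ in range(epochs):
            for x, target in zip(X, y):
                probs = self.predict_proba(x)
                for k in range(len(self.W)):
                    # d(loss)/d(logit_k) = p_k - 1 if k is the true class, else p_k.
                    grad = probs[k] - (1.0 if k == target else 0.0)
                    for j, xj in enumerate(x):
                        self.W[k][j] -= lr * grad * xj
                    self.b[k] -= lr * grad
        return self


if __name__ == "__main__":
    model = SoftmaxClassifier(n_features=2, n_classes=len(LABELS)).fit(TRAIN_X, TRAIN_Y)
    for face in ([0.85, 0.05], [0.05, 0.85]):
        print(face, LABELS[model.predict(face)])
```

Adding one or more hidden layers with a nonlinearity would turn this into a deeper network able to learn non-linear decision boundaries. The single layer is kept here so that the gradient step stays readable.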
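
The real-time emotional stream entry above can be illustrated with a sketch that smooths a stream of per-frame valence readings using an exponential moving average and flags large shifts. The function `smooth_stream`, its `alpha` (smoothing weight) and `shift` (change threshold) parameters, and the choice of valence as the tracked quantity are assumptions made for illustration.

```python
from typing import Iterable, Iterator, Optional, Tuple


def smooth_stream(values: Iterable[float], alpha: float = 0.3,
                  shift: float = 0.4) -> Iterator[Tuple[float, bool]]:
    """Yield (smoothed_valence, changed) for each incoming valence reading.

    Smoothing is an exponential moving average: s_t = alpha * x_t + (1 - alpha) * s_{t-1}.
    `changed` is True when the smoothed value has moved by more than `shift`
    since the last reported change (a crude mood-shift detector).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: Optional[float] = None
    reference: Optional[float] = None
    for x in values:
        smoothed = x if smoothed is None else alpha * x + (1 - alpha) * smoothed
        if reference is None:
            reference = smoothed  # first reading sets the baseline
            changed = False
        else:
            changed = abs(smoothed - reference) > shift
            if changed:
                reference = smoothed  # re-anchor after reporting a shift
        yield smoothed, changed


if __name__ == "__main__":
    readings = [0.5] * 5 + [-0.8] * 10  # hypothetical: a positive mood that turns negative
    for t, (value, changed) in enumerate(smooth_stream(readings)):
        print(t, round(value, 3), "SHIFT" if changed else "")
```

A smaller `alpha` suppresses noise from individual frames but reacts more slowly to genuine mood changes. Because the reference point is reset only when a shift is reported, a large, gradual transition may be flagged more than once.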

Resources:

- affectiva.com .. emotion recognition software and analysis

- aesuli/sentiwordnet .. sentiment lexicon

Wikipedia:

- Affective computing

- Artificial empathy

- Category:Affective computing

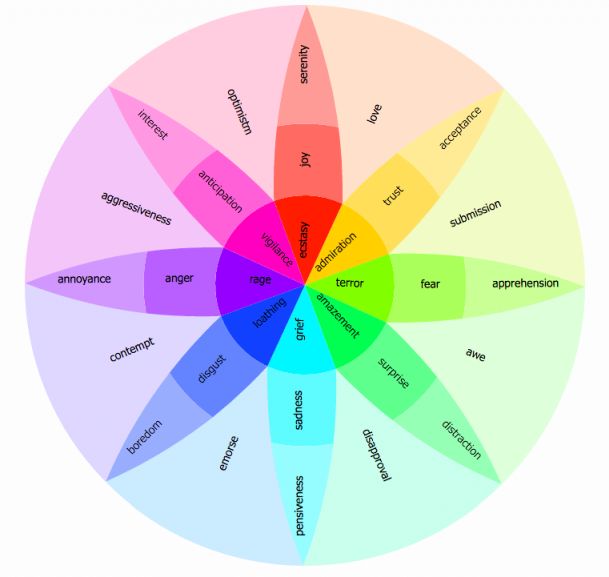

- Contrasting and categorization of emotions

- Emotion recognition

- Emotional intelligence

- Rapport

- Sentiment analysis

- Social emotional development

- Valence (psychology)

References:

- Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements (2019)

- Affective Computing and Sentiment Analysis (2011)

- Emotion-Oriented Systems: The Humaine Handbook (2011)

See also:

100 Best Emotion Recognition Videos | 100 Best Sentiment Analysis Videos | Affect Listener & Dialog Systems | Affective Dialog Systems | Chatbots & Sentiment Analysis 2018 | Emotional Intelligence & Dialog Systems 2017 | Sentiment Analysis & Dialog Systems 2017 | Sentiment Analysis Tools & Dialog Systems | SentiWordNet & Dialog Systems

- Artificial Empathy

- Computational Humor

- Emotion Engines

- Emotion Synthesis

- Emotional Agents

- Empathy & Robots

- Gamygdala Emotion Engine

- Humor Recognition

- Sarcasm Recognition