Notes:

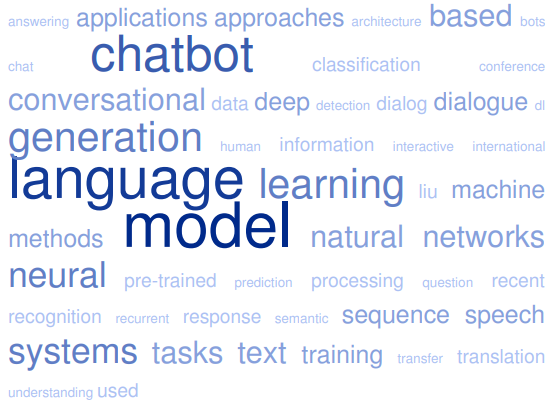

A statistical language model (SLM) is a probabilistic model that assigns a likelihood to a sequence of words (a string) in a language. For a given string s, it estimates the probability P(s) that s occurs in the language. The model is typically trained on a large corpus of text, and it estimates P(s) from the frequencies of words and word combinations observed in that corpus.
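As a rough illustration of estimating P(s) from corpus frequencies, the sketch below fits a bigram model by maximum likelihood and multiplies the conditional probabilities of each word given the previous one. The three-sentence corpus, the `<s>` and `</s>` boundary tokens, and the function names are assumptions made for this example; a practical model would also need smoothing so that unseen word pairs do not receive zero probability.

```python
from collections import Counter

def train_bigram(sentences):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    context_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        # Hypothetical boundary tokens mark sentence start and end.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1
    return context_counts, bigram_counts

def sentence_probability(sentence, context_counts, bigram_counts):
    """Estimate P(s) as a product of unsmoothed bigram probabilities."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if context_counts[prev] == 0:
            return 0.0  # context never seen in training
        prob *= bigram_counts[(prev, word)] / context_counts[prev]
    return prob

# Toy corpus, invented for illustration only.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
context_counts, bigram_counts = train_bigram(corpus)
p_seen = sentence_probability("the cat sat", context_counts, bigram_counts)
p_unseen = sentence_probability("the dog ran", context_counts, bigram_counts)
print(p_seen, p_unseen)
```

Here "the dog ran" gets probability zero because the pair ("dog", "ran") never occurs in the toy corpus, which is the motivation for the smoothing techniques used in practical n-gram models.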

SLMs are commonly used in natural language processing (NLP) tasks, such as speech recognition, machine translation, and text generation. They are also used in other applications where it is important to predict the likelihood of a given sequence of words occurring, such as in information retrieval or summarization. SLMs can be designed to operate at different levels of granularity, such as at the level of individual words, phrases, or sentences. They can also be designed to take into account various contextual factors, such as the words that come before or after a given word in a sentence, or the overall structure of a sentence.

- Anonymized language modeling: An anonymized language model is a type of statistical language model that is designed to protect the privacy of individuals by removing personal identifying information from the text used to train the model. This can involve replacing names and other identifying information with placeholder tokens, or using techniques such as data perturbation to obscure sensitive information. The goal of anonymized language modeling is to allow the model to be trained on real-world text data while protecting the privacy of individuals whose words or actions are captured in that data.

- Attention-based language models: Attention-based language models are a type of neural network-based language model that utilize an attention mechanism to focus on specific parts of the input when generating output. These models can be trained to attend to different parts of the input depending on the task at hand, allowing them to selectively incorporate relevant context or information from different parts of the input. Attention-based language models are commonly used in tasks such as machine translation and text summarization, where it is important to be able to selectively incorporate information from different parts of the input.

- Bidirectional language modeling: Bidirectional language models are a type of statistical language model that are designed to take into account the context of a given word or phrase by considering both the words that come before and after it in a sentence. This allows the model to better capture the meaning and context of a given word or phrase, as it is able to incorporate information from both the preceding and following words in the sentence. Bidirectional language models are commonly used in tasks such as speech recognition and machine translation, where it is important to be able to accurately capture the context of a given word or phrase.

- Character-level language modeling: A character-level language model is a statistical language model that is trained on individual characters rather than on words or phrases. It predicts the likelihood of the next character given the characters that come before it in a sequence. Because any string can be spelled out character by character, such models avoid most out-of-vocabulary problems, which makes them useful for morphologically rich languages and for languages that are written without spaces between words, such as Chinese or Japanese.

- Contextual language modeling: Contextual language modeling is a type of statistical language model that takes into account the context in which a given word or phrase occurs when predicting the likelihood of that word or phrase occurring. This can involve considering the words that come before or after a given word in a sentence, or the overall structure of the sentence. Contextual language models are often used in tasks such as speech recognition and machine translation, where it is important to be able to accurately capture the meaning and context of a given word or phrase.

- Deep neural language model: A deep neural language model is a type of statistical language model that is based on a deep neural network. This type of model is able to learn complex relationships between the words in a language by training on a large corpus of text data and using multiple layers of neurons to process the data. Deep neural language models are often able to achieve high accuracy on language modeling tasks and have been used in a variety of natural language processing (NLP) applications, such as speech recognition and machine translation.

- Discourse language modeling: Discourse language modeling is a type of statistical language model that is designed to take into account the context and structure of a conversation or discourse when predicting the likelihood of a given word or phrase occurring. This can involve considering the words and phrases that have been used in previous turns in the conversation, as well as the overall structure and purpose of the conversation. Discourse language models are often used in tasks such as dialogue systems and chatbots, where it is important to be able to accurately capture the context and structure of a conversation.

- Hierarchical-RNN-based language modeling: Hierarchical-RNN-based language models are a type of statistical language model that are based on a type of neural network known as a hierarchical recurrent neural network (RNN). These models are able to learn complex relationships between words in a language by processing the input data at multiple levels of granularity, such as at the level of individual words, phrases, or sentences. Hierarchical-RNN-based language models are often used in tasks such as machine translation and text summarization, where it is important to be able to capture the structure and meaning of a text at multiple levels of granularity.

- LSTM language models: LSTM language models are a type of statistical language model that are based on a type of neural network known as a long short-term memory (LSTM) network. LSTM networks are able to learn and remember long-term dependencies between words in a language, which makes them well-suited for language modeling tasks. LSTM language models are often used in tasks such as speech recognition and machine translation, where it is important to be able to accurately capture the meaning and context of a given word or phrase.

- LSTM-based language models: LSTM-based language models are a type of statistical language model that are based on a long short-term memory (LSTM) network. Like other LSTM models, these models are able to learn and remember long-term dependencies between words in a language, which makes them well-suited for language modeling tasks. LSTM-based language models may be used in a variety of natural language processing (NLP) applications, such as speech recognition and machine translation.

- LVCSR language modeling: LVCSR stands for large vocabulary continuous speech recognition, and LVCSR language modeling refers to the process of developing statistical language models for the purpose of recognizing continuous speech with a large vocabulary. This type of language modeling typically involves training a statistical model on a large corpus of transcribed speech data in order to learn the probability distribution of words and phrases occurring in spoken language. LVCSR language models are often used in tasks such as speech recognition and speech-to-text transcription, where it is important to be able to accurately recognize a wide range of words and phrases in continuous speech.

- Low-resource language modeling: Low-resource language modeling refers to the process of developing statistical language models in situations where there is a limited amount of data available for training. This can be a challenge in languages with small amounts of available text data or in situations where there is a need to develop a language model for a specific domain or task, but there is not enough data available to train a model using traditional methods. Low-resource language modeling often involves using techniques such as transfer learning and data augmentation to make the most of the available data.

- M-gram language modeling: An M-gram language model (more commonly called an n-gram model) is a statistical language model that estimates the probability of each word from the M-1 words that precede it, so that the probability of a sentence is a product over overlapping sequences of M words. The M-gram probabilities are usually estimated from counts in a large corpus of text, with smoothing to handle sequences that never occur in the training data. M-gram language models are often used in natural language processing (NLP) tasks such as machine translation, where it is important to capture local dependencies between words.

- Natural language modelling: Natural language modelling is a subfield of natural language processing (NLP) that involves developing statistical models that can process and understand natural language data, such as text or speech. Natural language modelling involves learning the probability distribution of words, phrases, and sentences occurring in a language, and can be used for tasks such as machine translation, speech recognition, and text classification.

- Neural language model: A neural language model is a type of statistical language model that is based on a neural network. Neural language models are able to learn complex relationships between words in a language by training on a large corpus of text data and using multiple layers of neurons to process the data. Neural language models are often able to achieve high accuracy on language modeling tasks and have been used in a variety of NLP applications, such as speech recognition and machine translation.

- Online language modeling: Online language modeling refers to the process of developing a statistical language model that can learn and adapt to new data in real-time. This type of language modeling is often used in tasks such as speech recognition, where the model needs to be able to recognize and understand words and phrases as they are being spoken. Online language models are able to continuously update their probability distributions based on new data, which allows them to adapt and improve their performance over time.

- Personalized language modeling: Personalized language modeling refers to the process of developing a statistical language model that is tailored to a specific individual or group of individuals. This type of language model takes into account the unique characteristics and language use of the individual or group, and is able to generate more accurate and personalized predictions as a result. Personalized language models are often used in tasks such as language generation, where it is important to be able to generate text that is tailored to the individual user’s style and preferences.

- Phonotactic language modeling: Phonotactic language modeling refers to the process of developing a statistical language model that takes into account the phonotactic constraints of a language. Phonotactic constraints refer to the rules governing the way sounds can be combined in a language, and a phonotactic language model is able to model these constraints in order to better understand and predict the structure of spoken language. Phonotactic language models are often used in tasks such as speech recognition, where it is important to be able to accurately recognize and understand spoken words and phrases.

- Pitman-Yor language modeling: A Pitman-Yor language model is a Bayesian statistical language model based on the Pitman-Yor process, a stochastic process that generalizes the Dirichlet process and produces distributions with power-law behavior, similar to the word frequencies observed in natural language. In the hierarchical Pitman-Yor language model, the distribution over the next word given a context is smoothed toward the distribution for a shorter context, which yields an n-gram model closely related to interpolated Kneser-Ney smoothing. Pitman-Yor language models have been used in tasks such as machine translation, where well-smoothed n-gram probabilities are important.

- Probabilistic language modeling: Probabilistic language modeling refers to the process of developing a statistical model that predicts the probability of a sequence of words occurring in a language. Such models are typically used in tasks such as speech recognition, machine translation, and language generation. They are usually fitted with statistical techniques such as maximum likelihood estimation, using a large corpus of text to learn the probability distribution of words and sequences of words in a language.

- Recurrent neural network language modeling: Recurrent neural network (RNN) language modeling refers to the process of developing a statistical language model using a recurrent neural network (RNN). RNNs are a type of artificial neural network that are particularly well suited for modeling sequential data, such as text. In RNN language modeling, the RNN is trained to predict the next word in a sequence based on the words that have come before it. RNN language models are often used in tasks such as machine translation, language generation, and speech recognition.

- Recurrent-neural-network-based language model: A recurrent-neural-network-based language model is a statistical language model that is based on a recurrent neural network (RNN). As with RNN language modeling, a recurrent-neural-network-based language model is trained to predict the next word in a sequence based on the words that have come before it, and is often used in tasks such as machine translation, language generation, and speech recognition.

- RNN language models: RNN language models are statistical language models that are based on recurrent neural networks (RNNs). As with other types of RNN language models, RNN language models are trained to predict the next word in a sequence based on the words that have come before it, and are often used in tasks such as machine translation, language generation, and speech recognition.

- RNN based language modeling: RNN based language modeling refers to the process of developing a statistical language model using a recurrent neural network (RNN). As with other types of RNN language models, RNN based language models are trained to predict the next word in a sequence based on the words that have come before it, and are often used in tasks such as machine translation, language generation, and speech recognition.

- Self-organized language modeling: Self-organized language modeling refers to the process of developing a statistical language model that is able to learn and adapt to new data without explicit supervision. This type of language model is able to learn the structure of a language and the relationships between words and sequences of words from a large corpus of text, and is able to adapt to new data as it becomes available. Self-organized language models are often used in tasks such as machine translation, language generation, and speech recognition, and are particularly useful in situations where the available data is limited or noisy.

- Spoken language modeling: Spoken language modeling refers to the process of developing a statistical language model that is specifically designed to model spoken language. This type of language model is often used in tasks such as speech recognition, where it is used to predict the words that were spoken based on a sequence of acoustic signals. Spoken language models may be based on a variety of different types of statistical models, such as hidden Markov models (HMMs) or recurrent neural networks (RNNs).

- Statistical language modeling: Statistical language modeling refers to the process of developing a probability distribution over sequences of words that reflects how frequently those sequences occur as sentences in a language. Statistical language models are often used in tasks such as machine translation, language generation, and speech recognition, where they are used to predict the most likely sequence of words given a particular context. Statistical language models may be based on a variety of different types of statistical models, such as n-gram models or recurrent neural networks (RNNs).

- Stochastic language modeling: Stochastic language modeling refers to the process of developing a statistical language model that is based on a stochastic process, or a process that involves randomness or uncertainty. Stochastic language models are often used in tasks such as machine translation, language generation, and speech recognition, where they are used to predict the most likely sequence of words given a particular context. Stochastic language models may be based on a variety of different types of statistical models, such as hidden Markov models (HMMs) or recurrent neural networks (RNNs).

- Syntactic language modeling: Syntactic language modeling refers to the process of developing a statistical language model that takes into account the syntactic structure of a language, that is, the way in which words are arranged into phrases and sentences. Syntactic language models are used in natural language processing tasks to predict the most likely sequence of words given a particular context and to identify the roles that different words play in a sentence. Syntactic language models may be based on a variety of different types of statistical models, such as n-gram models or recurrent neural networks (RNNs).

- Syntactico-statistical language modeling: Syntactico-statistical language modeling refers to the process of developing a statistical language model that takes into account both the syntactic structure of a language and the statistical characteristics of the language. Syntactico-statistical language models are used in natural language processing tasks to predict the most likely sequence of words given a particular context and to identify the roles that different words play in a sentence. Syntactico-statistical language models may be based on a variety of different types of statistical models, such as n-gram models or recurrent neural networks (RNNs).

- Turn-based language modeling: Turn-based language modeling refers to the process of developing a statistical language model that is specifically designed to model language in a turn-based context, such as a conversation between two people. Turn-based language models are often used in tasks such as dialogue systems, where they are used to predict the most likely response given a particular turn in the conversation. Turn-based language models may be based on a variety of different types of statistical models, such as hidden Markov models (HMMs) or recurrent neural networks (RNNs).
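Several of the entries above (M-gram, character-level, and probabilistic language modeling) describe count-based models. The following minimal sketch combines them as a character-level bigram model with add-k smoothing, evaluated by perplexity. The training string, the function names, and the choice of add-k smoothing are assumptions for illustration; it is not the method of any particular toolkit listed on this page.

```python
import math
from collections import Counter

def train_char_bigram(text):
    """Return bigram counts, context counts, and the character vocabulary."""
    vocab = sorted(set(text))
    bigrams = Counter(zip(text, text[1:]))
    contexts = Counter(text[:-1])
    return bigrams, contexts, vocab

def char_prob(prev, char, bigrams, contexts, vocab, k=1.0):
    """Add-k smoothed estimate of P(char | prev).

    Assumes char is in vocab; otherwise the estimates for a given
    context no longer sum to one.
    """
    return (bigrams[(prev, char)] + k) / (contexts[prev] + k * len(vocab))

def perplexity(text, bigrams, contexts, vocab, k=1.0):
    """Exponentiated average negative log-probability per predicted character."""
    log_sum = 0.0
    for prev, char in zip(text, text[1:]):
        log_sum += math.log(char_prob(prev, char, bigrams, contexts, vocab, k))
    return math.exp(-log_sum / (len(text) - 1))

# Toy training string, invented for illustration only.
bigrams, contexts, vocab = train_char_bigram("abababab")
ppl_typical = perplexity("abab", bigrams, contexts, vocab)
ppl_atypical = perplexity("aabb", bigrams, contexts, vocab)
print(ppl_typical, ppl_atypical)
```

Lower perplexity means the model finds the text more predictable, so a string that follows the alternating pattern of the training data scores better than one that breaks it.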

Resources:

- cs.cmu.edu/~mccallum/bow .. toolkit for statistical language modeling

- cmuslm .. cmu statistical language modeling toolkit

- univ-lemans.fr/cslm .. continuous space language model toolkit

- jlm.ipipan.waw.pl .. journal of language modelling

- github.com/mitlm/mitlm .. mit language modeling toolkit

- speech.sri.com/projects/srilm .. sri language modeling toolkit

Wikipedia:

- Language Model

- Long short-term memory

- Text mining (aka Text analysis)

- Word2vec

See also:

OpenOME (Organization Modelling Environment) | Rule-based Language Modeling | Tool for Agent Oriented Modeling for Eclipse (TAOM4E)

Deep learning based chatbot models

R Csaky – arXiv preprint arXiv:1908.08835, 2019 – arxiv.org

… These chatbot programs are very similar in their core, namely that they all use hand-written rules to … Section 3.3.3. In [Ramachandran et al., 2016] a seq2seq model is pretrained as a language model and in order to avoid overfitting, monolingual language modeling losses are …

Unified language model pre-training for natural language understanding and generation

L Dong, N Yang, W Wang, F Wei, X Liu… – Advances in Neural …, 2019 – papers.nips.cc

… This paper presents a new UNIfied pre-trained Language Model (UNILM) that can be fine-tuned for both natural language understanding and generation tasks. The model is pre-trained using three types of language modeling tasks: unidirectional, bidirectional, and sequence …

Machine Reading Comprehension for Answer Re-Ranking in Customer Support Chatbots

M Hardalov, I Koychev, P Nakov – Information, 2019 – mdpi.com

… Recent advances in deep neural networks, language modeling and language … vectors are learned activation functions of the internal states of a deep bi-directional language model … focus primarily on word overlap and measure the similarity between the chatbot’s response and …

#MeTooMaastricht: Building a chatbot to assist survivors of sexual harassment

T Bauer, E Devrim, M Glazunov, WL Jaramillo… – … Conference on Machine …, 2019 – Springer

… Large neural networks have been trained on general tasks such as language modelling and then fine-tuned for classification tasks … In our project we used approach based on universal language model fine-tuning for named entity recognition, namely, pre-trained … 3.4 Chatbot …

Multi-Turn Response Selection in Retrieval-Based Chatbots with Iterated Attentive Convolution Matching Network

H Wang, Z Wu, J Chen – Proceedings of the 28th ACM International …, 2019 – dl.acm.org

… aims at generating a response token by token according to conditional probabilistic language models (LM … translation task, it has also been widely applied in generation-based chatbot system … Early studies of retrieval-based chatbots focus on single-turn conversation, which only …

Multi-task learning with language modeling for question generation

W Zhou, M Zhang, Y Wu – arXiv preprint arXiv:1908.11813, 2019 – arxiv.org

… In details, we first feed the input sequence into the language modeling layer to get a sequence of … In future work, we will adopt the auxiliary language modeling task to other neural generation systems to … From eliza to xiaoice: challenges and opportunities with social chatbots …

Computational argumentation synthesis as a language modeling task

R El Baff, H Wachsmuth, K Al Khatib, M Stede… – Proceedings of the 12th …, 2019 – aclweb.org

… While these problems may be tolerable to some extent in some applications, such as chatbots, bad text cannot be accepted in an argumentative or … We approach the selection as a language modeling task where each ADU is a “word” of our language model and each …

Topic-aware chatbot using Recurrent Neural Networks and Nonnegative Matrix Factorization

Y Guo, N Haonian, Z Lin, N Liskij, H Lyu… – arXiv preprint arXiv …, 2019 – arxiv.org

… One example of an application of deep learning in language modeling is conversational chatbots … There are two main approaches for building chatbots: retrieval-based methods and generative methods. A retrieval-based chatbot is one that has a predefined set of responses …

AI-Chatbot Using Deep Learning to Assist the Elderly

G Tascini – Systemics of Incompleteness and Quasi-Systems, 2019 – Springer

… The language modeling uses n-grams—groups of words—and processes the n-grams … tools, analyzed the understanding of natural language aimed to chatbot training. We have analyzed deep learning, Recurrent Neural Networks, Seq2Seq and Sec2Vet language models …

Do Massively Pretrained Language Models Make Better Storytellers?

A See, A Pappu, R Saxena, A Yerukola… – arXiv preprint arXiv …, 2019 – arxiv.org

… In particular, the OpenAI GPT2 language model (Radford et al., 2019) achieves state-of-the-art performance on several language modeling benchmarks, even in a zero-shot setting. While GPT2’s performance as a language model is undeniable, its performance as …

Transfertransfo: A transfer learning approach for neural network based conversational agents

T Wolf, V Sanh, J Chaumond, C Delangue – arXiv preprint arXiv …, 2019 – arxiv.org

… Introduction Non-goal-oriented dialogue systems (chatbots) are an interesting test-bed for … Transfer learning from language models have been recently shown to bring strong empirical … One Billion Word Benchmark for Measuring Progress in Statistical Language Modeling …

Fast compression and optimization of deep learning models for natural language processing

M Pietron, M Karwatowski… – … on Computing and …, 2019 – ieeexplore.ieee.org

… networks (CNN) play a major role in a lot of natural language domains like text document categorization, part of speech tagging, chatbots, language modeling or language … LANGUAGE MODELING A statistical language model is a probability distribution over sequences of words …

Survey of Textbased Chatbot in Perspective of Recent Technologies

B Som, S Nandi – … Conference, CICBA 2018, Kalyani, India, July …, 2019 – books.google.com

… Neural probabilistic language model [12] and Neural machine translation [13] can be … These includes language modeling [12], paraphrase detection [24] and word embedding extraction [25] … Language Interpretation: Inconsistency in interpretation is one main issue of chatbot …

Deep Learning Language Modeling Workloads: Where Time Goes on Graphics Processors

AH Zadeh, Z Poulos, A Moshovos – 2019 IEEE International …, 2019 – ieeexplore.ieee.org

… Language Modeling (LM) is at the core of many applications in natural language processing (NLP) [1]. Language modeling is a sequence … long short term memory (LSTM) and gated recurrent units (GRU) are two well-known implementation methods for language modeling [3 …

A survey on construction and enhancement methods in service chatbots design

Z Peng, X Ma – CCF Transactions on Pervasive Computing and …, 2019 – Springer

… The response generation can be performed as in standard language modeling: sampling one … The first one is word perplexity originally for probabilistic language models (Bengio et al … summarize the rule-based, retrieval-based and generation based chatbot construction methods …

Comparison of diverse decoding methods from conditional language models

D Ippolito, R Kriz, M Kustikova, J Sedoc… – arXiv preprint arXiv …, 2019 – arxiv.org

… Conditional language models, which have wide applications across machine translation, text simplification … not permit generating multiple samples, it is rarely used in language modelling … search method to help condense and remove meaningless responses from chatbots …

Alternating Recurrent Dialog Model with Large-scale Pre-trained Language Models

Q Wu, Y Zhang, Y Li, Z Yu – arXiv preprint arXiv:1910.03756, 2019 – arxiv.org

… However, due to the limited language modeling capabilities in the previous model, Sequicity (Lei et al … TransferTransfo is also based on large pre-trained language model, but it uses token type … We ask them to select a preferred chat-bot and indicate how much they are willing to …

Self-Attentional Models Application in Task-Oriented Dialogue Generation Systems

M Saffar Mehrjardi – 2019 – era.library.ualberta.ca

… Appendix B. Rule-based Chatbot: Sample of a rule-based chatbot we used in our experiments. 12 Page 24. Chapter 2 … for probabilistic modeling of a sequenced data. Language modeling is one the … (text data). The language model can be used to predict the next token (eg …

AI based Chatbot for Education Management

S Brindha, KRD Dharan, SJJ Samraj, LM Nirmal – ijresm.com

… AI based Chatbot for Education Management … Language Model: Language modeling is used in many natural language processing applications such as speech recognition tries to capture the properties of a language and to predict the next word in the speech sequence …

Movie Recommendation based on User Similarity of Consumption Pattern Change

M Kim, SH Jeon, H Shin, W Choi… – 2019 IEEE Second …, 2019 – ieeexplore.ieee.org

… which mainly learns and predicts sequence data and time series data, is mainly used in language modeling, stock price prediction, and chat bot … Mikolov, T., Karafiat, M., Burget, L. Cernocky J. and Khudanpur, S., “Recurrent neural network based language model,” in Proc …

ARTIFICIAL INTELLIGENCE BASED CHATBOT FOR HUMAN RESOURCE USING DEEP LEARNING A DISSERTATION

SA Sheikh – 2019 – researchgate.net

… human customers or diverse Chatbots that gives through text. The Chatbots which is being proposed for Human Resource is Artificial Intelligence based Chatbot for major measurement profiling of contenders for the explicit task …

Deep Natural Language Processing for Search Systems

W Guo, H Gao, J Shi, B Long – … of the 42nd International ACM SIGIR …, 2019 – dl.acm.org

… Three representative generation tasks [3, 6, 15] are introduced that heavily rely on neural language modeling [1], sequence … of Deep Natural Language Processing in LinkedIn Search System (2) Conversational AI • Chatbot for LinkedIn … A neural probabilistic language model …

OpenAI’s new multitalented AI writes, translates, and slanders

J Vincent – The Verge. The Verge, February, 2019 – fully-human.org

… https://www.theverge.com/2019/2/14/18224704/ai-machine-learning-language-models-read-write … moderators still overlook abusive comments, and the world’s most talkative chatbots can barely … It excels at a task known as language modeling, which tests a program’s ability to …

A topic-driven language model for learning to generate diverse sentences

C Gao, J Ren – Neurocomputing, 2019 – Elsevier

… [7] generate the responses of the chatbot based on … strong performance of our model suggests that combining topic information and variational autoencoders benefits language modelling. Table 3. Language model perplexity performance of all models on Restaurant and Yahoo …

Russian Diminutive Name Generation

D Rodionova, T Malygina – academia.edu

… modeling Introduction Problem of Russian diminutives generation would be very useful in lot of language tasks from diminutive name formation in conversational chatbots to automatic diminutive vocabulary collection … Char-level language model LM for Russian names …

A Multi-Turn Emotionally Engaging Dialog Model

Y Xie, E Svikhnushina, P Pu – arXiv preprint arXiv:1908.07816, 2019 – arxiv.org

… Further human evaluations confirm that our chatbot can keep track of the conversation … Recent development in neural language modeling has generated significant excitement in the open … tasks, such as building emotional dialog systems and affect language models, has inspired …

Automatic generation of diminutive names

D Rodionova, T Malygina – academia.edu

… Russian names · Russian diminutives · Russian diminutives formation · Russian morphology · char-level language modeling … t want to store dictionaries with all diminutive forms (ie dialog chatbots); – Applying of … 2. Then we trained char-level language model L for only xi with …

Stochastic gradient methods with layer-wise adaptive moments for training of deep networks

B Ginsburg, P Castonguay, O Hrinchuk… – arXiv preprint arXiv …, 2019 – arxiv.org

… Transformer-XL (Dai et al., 2019), the state-of-the-art language model on the … We tested NovoGrad on deep models for image classification, speech recognition, translation, and language modeling … Nemade, G., Lu, Y., and Le, QV Towards a human-like open-domain chatbot …

Training neural response selection for task-oriented dialogue systems

M Henderson, I Vulić, D Gerz, I Casanueva… – arXiv preprint arXiv …, 2019 – arxiv.org

… Despite their popularity in the chatbot literature, retrieval-based models have had modest impact on task-oriented dialogue systems … Inspired by the recent success of pretraining in language modelling, we propose an effective method for deploying response selection in task …

A Voice Interactive Multilingual Student Support System using IBM Watson

K Ralston, Y Chen, H Isah… – 2019 18th IEEE …, 2019 – ieeexplore.ieee.org

… are generative recurrent systems like seq2seq [9] which are rooted in language modelling and are … been written in, Watson Knowledge Studio for creating a custom language model, and Tone … This paper investigates the applications of chatbot related API packages offered by …

Social bias frames: Reasoning about social and power implications of language

M Sap, S Gabriel, L Qin, D Jurafsky, NA Smith… – arXiv preprint arXiv …, 2019 – arxiv.org

… This model is a uni-directional language model, which means encoded token representations are only conditioned on past tokens (ie, hi = fe(wi … also compare the full multitask model to a baseline generative inference model trained only on the language modelling loss (L2) …

Deep reinforcement learning for modeling chit-chat dialog with discrete attributes

C Sankar, S Ravi – arXiv preprint arXiv:1907.02848, 2019 – arxiv.org

… dialog contexts. In such scenarios, decoder learns to ignore the context (considering it as noise) and behaves like a regular language model. Such … more turns. We use a 2-layer GRU language model as a baseline for comparison. As …

Understanding EFL Linguistic Models through Relationship between Natural Language Processing and Artificial Intelligence Applications.

MS Keezhatta – Arab World English Journal, 2019 – academia.edu

… The purpose was to assess how their relationship would result in the creation of language models that could be used for … These models relate to machine translation, question answering systems, chatbots, sentiment analysis and other core issues of language modeling …

Dialogpt: Large-scale generative pre-training for conversational response generation

Y Zhang, S Sun, M Galley, YC Chen, C Brockett… – arXiv preprint arXiv …, 2019 – arxiv.org

… dialogue session as a long text and frame the generation task as language modeling … AI systems with transfer learning based on the GPT-2 transformer language model, which achieves … It contains pre-trained models for knowledge-grounded chatbot trained with crowd-sourced …

Do neural dialog systems use the conversation history effectively? an empirical study

C Sankar, S Subramanian, C Pal, S Chandar… – arXiv preprint arXiv …, 2019 – arxiv.org

… generative dialog systems, we treat the problem of generating an appropriate response given a conversation history as a conditional language modeling problem … Sharp nearby, fuzzy far away: How neural language models use context … A deep reinforcement learning chatbot …

Improving speech recognition error prediction for modern and off-the-shelf speech recognizers

P Serai, P Wang… – ICASSP 2019-2019 IEEE …, 2019 – ieeexplore.ieee.org

… [16] adapted a chatbot answer prediction system to … 167–174. [18] Cantab Research, “Cantab-tedlium language model and lexicon … [19] Anthony Rousseau, Paul Deléglise, and Yannick Esteve, “Enhancing the ted-lium corpus with selected data for language modeling and more …

Neural personalized response generation as domain adaptation

WN Zhang, Q Zhu, Y Wang, Y Zhao, T Liu – World Wide Web, 2019 – Springer

… LMP: the state-of-the-art approach for language model personalization, which … Meanwhile, the Perplexity, which is an evaluation metric for language modeling, is also not reasonable for … are judged to be from a volunteer, but are generated by his/her chatbot in testing …

Learning to explain: Answering why-questions via rephrasing

A Nie, ED Bennett, ND Goodman – arXiv preprint arXiv:1906.01243, 2019 – arxiv.org

… statement so our model can also function as a single-round chitchat chatbot that can … We use a publicly available pre-trained BookCorpus language model from Holtzman et al … Language Modeling One Billion This dataset (LM-1B) is currently the largest standard training dataset …

Mix-review: Alleviate Forgetting in the Pretrain-Finetune Framework for Neural Language Generation Models

T He, J Liu, K Cho, M Ott, B Liu, J Glass… – arXiv preprint arXiv …, 2019 – arxiv.org

… the target dialogue response task (conditional NLG) and the pre-training language modeling (LM) objective … knowledge from pre-training opens the possibility of a data-driven knowledgable chat-bot … On the other hand, knowledge-grounded chat-bots (Liu et al., 2018; Zhu et al …

The second conversational intelligence challenge (convai2)

E Dinan, V Logacheva, V Malykh, A Miller… – arXiv preprint arXiv …, 2019 – arxiv.org

… The evaluation of chatbots was performed by human assessors … Seq2Seq + Attention Baseline 29.8 12.6 16.18 Language Model Baseline 46.0 – 15.02 … This setup is closer to real-world chatbot applications than the Mechanical Turk evaluation set-up …

BERT for Open-Domain Conversation Modeling

X Zhao, Y Zhang, W Guo, X Yuan – 2019 IEEE 5th International …, 2019 – ieeexplore.ieee.org

… meaningless responses, VHRED has been proposed to bring variety in language modeling via a … V. CONCLUSIONS In this paper, we explore the large pre-trained language model BERT for … S., Yoshinaga, N., Toyoda, M., Kitsuregawa, M.: Modeling situations in neural chat bots …

I say, you say, we say: using spoken language to model socio-cognitive processes during computer-supported collaborative problem solving

AEB Stewart, H Vrzakova, C Sun, J Yonehiro… – Proceedings of the …, 2019 – dl.acm.org

… Our use of audio also presents novel challenges in language modeling because automated transcription and speech segmentation are imperfect, whereas text chats provide a precise representation of the communication between partners …

LIRMM-advanse at SemEval-2019 Task 3: Attentive conversation modeling for emotion detection and classification

W Ragheb, J Azé, S Bringay, M Servajean – 2019 – hal-lirmm.ccsd.cnrs.fr

… With the increasing number of messaging platforms and with the growing demand of customer chat bot applications, detecting … In natural language processing (NLP), this is done through Language Modeling (LM) … The developing of the Universal Language Model Fine-tuning …

Wide-Ranging Review Manipulation Attacks: Model, Empirical Study, and Countermeasures

P Kaghazgaran, M Alfifi, J Caverlee – Proceedings of the 28th ACM …, 2019 – dl.acm.org

… Also, character-level language models need to capture longer dependencies and learn spelling in addition to syntax and semantics, so they are likely to become … First, the main advantage of character-level over word-level language modeling is its significantly smaller vocabulary …

Inductive Transfer Learning for Detection of Well-Formed Natural Language Search Queries

B Syed, V Indurthi, M Gupta, M Shrivastava… – European Conference on …, 2019 – Springer

… understanding the user’s intent (in case of personal assistants and chatbots [15, 18 … The ULMFiT Architecture: Previous attempts to use inductive transfer through language modeling have resulted in … However, Howard and Ruder [10] showed that if language models (LMs) are fine …

Overview of the sixth dialog system technology challenge: DSTC6

C Hori, J Perez, R Higashinaka, T Hori… – Computer Speech & …, 2019 – Elsevier

… Second, Memory Networks (Sukhbaatar et al., 2015a) are a recent class of models that have also been applied to a range of natural language processing tasks, including question answering (Bordes et al., 2015), language modeling and non-goal … 9, Quantized language model …

A survey of natural language generation techniques with a focus on dialogue systems-past, present and future directions

S Santhanam, S Shaikh – arXiv preprint arXiv:1906.00500, 2019 – arxiv.org

… (9) In the next four subsections, we list the different approaches such as language modeling, encoder-decoder, memory networks and transformer models based approaches that have been applied to the task of language generation. 3.1 Language Models …

Neural Machine Translation with Word Embedding Transferred from Language Model

C Jeong, H Choi – Journal of Digital Contents Society, 2019 – journal.dcs.or.kr

… In neural network based language modeling (NNLM), the conditional probabilities are implemented by … Bengio, R. Ducharme, P. Vincent, “A Neural Probabilistic Language Model”, The Journal … Deep Learning, NLP (Natural Language Processing), Chat-bot …

Evolution of transfer learning in natural language processing

A Malte, P Ratadiya – arXiv preprint arXiv:1910.07370, 2019 – arxiv.org

… has been used to perform a wide variety of tasks including Neural Machine Translation (NMT), Abstractive summarization and chatbot systems … EVOLUTION OF TRANSFER LEARNING AND LANGUAGE MODELING A. ULMFIT Universal Language Model Fine-tuning …

Anniversary article: Then and now: 25 years of progress in natural language engineering

J Tait, Y Wilks – Natural Language Engineering, 2019 – search.proquest.com

… in large part due to much freer access to computer power by that period compared to the 1960s and 1970s, and many chatbots had developed into commercial applications as front ends to travel reservation and customer service sites. One such early chatbot system was …

Conversation Model Fine-Tuning for Classifying Client Utterances in Counseling Dialogues

S Park, D Kim, A Oh – arXiv preprint arXiv:1904.00350, 2019 – arxiv.org

… which consists of a general language model built from an out-of-domain corpus and two role-specific language models built from … Mobile-based psychotherapy programs (Mantani et al., 2017), fully automated chatbots (Ly et al., 2017; Fitzpatrick et al., 2017), and intervention …

Attention-based modeling for emotion detection and classification in textual conversations

W Ragheb, J Azé, S Bringay, M Servajean – arXiv preprint arXiv …, 2019 – arxiv.org

… of messaging platforms and with the growing demand of customer chat bot applications, detecting … In natural language processing (NLP), this is done through Language Modeling (LM) … The developing of the Universal Language Model Fine-tuning (ULMFiT) [Howard and …

Approximating interactive human evaluation with self-play for open-domain dialog systems

A Ghandeharioun, JH Shen, N Jaques… – Advances in Neural …, 2019 – papers.nips.cc

… Yet dialog is more than language modeling, it requires topic and social coherence … of capturing human quality judgments, underscoring the difficulty in effectively training good language models … be calculated on the trajectory of self-play utterances for any chatbot, regardless of …

Multi-attending Memory Network for Modeling Multi-turn Dialogue

J Ren, L Yang, C Zuo, W Kong, X Ma – Proceedings of the 2019 3rd High …, 2019 – dl.acm.org

… via learning what to read and to write, and has been widely applied, such as question answering [14][15][19], language modeling [15], text … A Sequence to Sequence and Rerank based Chatbot Engine … Empower Sequence Labeling with Task-Aware Neural Language Model …

Trouble on the horizon: Forecasting the derailment of online conversations as they develop

JP Chang, C Danescu-Niculescu-Mizil – arXiv preprint arXiv:1909.01362, 2019 – arxiv.org

… rather than prediction: recent work in context-aware dialog generation (or “chatbots”) has proposed … on large amounts of unsupervised data, similarly to how chatbots are trained … We hence view our objective as conversational modeling rather than (only) language modeling …

Keepin’it real: Linguistic models of authenticity judgments for artificially generated rap lyrics

F Karsdorp, E Manjavacas, M Kestemont – PloS one, 2019 – journals.plos.org

… Chatbot interfaces for customers at company websites are but one example of a popular application of this technology … In recent years, such Neural Language Models have shown excellent performance, holding the current state-of-the-art in Language Modeling (see eg …

Recent Advances and Challenges in Design of Non-goal-Oriented Dialogue Systems

A Mehndiratta, K Asawa – International Conference on Big Data Analytics, 2019 – Springer

… arXiv preprint arXiv:1503.01838 (2015). 33. Mikolov, T., et al.: Recurrent neural network based language model … Serban, IV, et al.: A deep reinforcement learning chatbot … Sundermeyer, M., Schlüter, R., Ney, H.: LSTM neural networks for language modeling …

Speech Command Classification System for Sinhala Language based on Automatic Speech Recognition

T Dinushika, L Kavmini… – … Conference on Asian …, 2019 – ieeexplore.ieee.org

… have implemented 3-Gram model with the help of SRI Language Modeling Toolkit (SRILM) … of the ASR utilizes the trained acoustic model and the language model to convert … Bayes Algorithm And Logistic Regression For Intent Classification In Chatbot,” International Conference …

High-performance intent classification in sparse supervised data conditions

K Galli – 2019 – dspace.mit.edu

… Recurrent neural networks will be evaluated as well as attention based language models such as OpenAI Generative … In the following sections, text classification work related to chatbot intent recognition will be examined … If language modeling is used as the objective, the hidden …

Sentiment Analysis through Transfer Learning for Turkish Language

SE Akin, T Yildiz – … on INnovations in Intelligent SysTems and …, 2019 – ieeexplore.ieee.org

… AWD-LSTM method achieved better results in word-level language modeling … Contextual bi-directional long short term memory recurrent neural network language models: A generative … C. Musat, A. Hossman, and M. Baeriswyl, “Goal-Oriented Chatbot Dialog Management …

Natural language understanding for dialogue systems using n-best lists

S Mansalis – MS thesis, 2019 – aueb.gr

… and non-task-oriented dialogue systems (also known as chatbots). Task-oriented dialogue … is the task of predicting the next word given a sequence of words. In masked language modelling instead of predicting every next to … Fig. 2.12 BERT: Masked Language Model …

BertNet: Combining BERT language representation with attention and CNN for reading comprehension

G Limaye, M Pandit, V Sawal – pdfs.semanticscholar.org

… With the prevalence of ChatBots and Conversational AI in a variety of businesses and consumer use-cases, there is a growing … Masked Language Model approach makes it possible for BERT to use bidirectional attention using transformer for a Language Modeling objective …

Decompositional Semantics for Events, Participants, and Scripts in Text

R Rudinger – 2019 – jscholarship.library.jhu.edu

… 139 10 Common Sense and Language Modeling 141 … 145 10.2.1 Inference Generation via Language Models … AI: How did it taste? Figure 1.1: An example of an award-winning chatbot, “Mitsuku,” failing to respond appropriately to a human user …

Making Pepper Understand and Respond in Romanian

D Tufis, VB Mititelu, E Irimia, M Mitrofan… – … on Control Systems …, 2019 – ieeexplore.ieee.org

… ones (microworlds and scenarios based) and this feature differentiates them from the dialogs carried on chatbots … Based on all the sentences from the database a statistical language model was created using tri … [11] A. Stolcke, “SRILM – An Extensible Language Modeling Toolkit …

Emotion-Eliciting Poetry Generation

B Bena, J Kalita – cs.uccs.edu

… natural language generation can be traced to pioneering rule-based simulations of chatbots such as … Sampling As stated by Radford (2019), the core approach of GPT-2 is language modeling. A language model can be thought of as a probability distribution over a sequence of …

Recurrent neural models and related problems in natural language processing

S Zhang – 2019 – papyrus.bib.umontreal.ca

… Supporting facts required for reasoning are also provided to help the model to make explainable predictions. The fourth article tackles the problem of the lack of personality in chatbots … 21 2.3.1 Neural Language Model … 66 6.3.2 Character Level Language Modeling …

Learning from Fact-checkers: Analysis and Generation of Fact-checking Language

N Vo, K Lee – Proceedings of the 42nd International ACM SIGIR …, 2019 – dl.acm.org

… 2.2 Applications of Text Generation Text generation has been used for language modeling [33], question and answering [12], machine translation [1, 28, 49], dialogue generation [43–45, 51, 55], and so on. Recently, it is employed to build chat bots for patients under …

AI-Powered Text Generation for Harmonious Human-Machine Interaction: Current State and Future Directions

Q Zhang, B Guo, H Wang, Y Liang… – 2019 IEEE SmartWorld …, 2019 – ieeexplore.ieee.org

… The experimental results show that the RNN language model outperforms the traditional methods … Microsoft XiaoIce is a typical chatbots … Experiments on language modeling and classification tasks using three different corpora demonstrated the advantages of this method …

An Architecture for Dynamic Conversational Agents for Citizen Participation and Ideation

S Ahmed – researchgate.net

… Typically a language model is trained on labeled utterances (see 2) to identify such … Chatbot is traditionally implemented as a finite state machine with prompts repre … as Named Entity Recognition, language modeling, and sentence level classification. [18] 15 Page 29 …

ConveRT: Efficient and accurate conversational representations from transformers

M Henderson, I Casanueva, N Mrkšić, PH Su… – arXiv preprint arXiv …, 2019 – arxiv.org

… to train models capable of generalization. Pretrained models making use of language-model (LM) based learning objectives have become prevalent across the NLP research community. When it comes to dialog systems …

Classification As Decoder: Trading Flexibility For Control In Neural Dialogue

S Shleifer, M Chablani, N Katariya, A Kannan… – arXiv preprint arXiv …, 2019 – arxiv.org

… data on a combination of two loss functions: next-utterance classification loss and language modeling (next word … (2019) prepend source URLs to documents during language model pretraining in … work to ours is Wan & Chen (2018)’s AirBNB customer service chatbot, which also …

A comparative analysis of machine comprehension using deep learning models in code-mixed hindi language

S Viswanathan, MA Kumar, KP Soman – Recent Advances in …, 2019 – Springer

… LSTM networks are found useful in language modeling tasks [23] … is another important aspect of traditional QA systems which deals with categorizing the type of questions [1]. One of the major application of QA system are conversational models referred as chat-bots, it has a …

Multi-Granularity Representations of Dialog

S Mehri, M Eskenazi – arXiv preprint arXiv:1908.09890, 2019 – arxiv.org

… language model on a large corpus in order to obtain strong contextual representations of words. OpenAI’s GPT (Radford et al., 2018) produces latent representations of language by training a large transformer (Vaswani et al., 2017) with a language modelling objective …

Asking clarifying questions in open-domain information-seeking conversations

M Aliannejadi, H Zamani, F Crestani… – Proceedings of the 42nd …, 2019 – dl.acm.org

… [46] studied the task of question generation for an industrial chatbot … We use the KL-divergence retrieval model [24] based on the language modeling framework [30] with Dirichlet prior … where θt denotes the language model of the original query, and θh,q,a denotes the language …

Explicit contextual semantics for text comprehension

Z Zhang, Y Wu, Z Li, H Zhao – Proceedings of the 33rd Pacific …, 2019 – waseda.repo.nii.ac.jp

… One billion word benchmark for measuring progress in statistical language modeling. arXiv:1312.3005, 2014 … Shenyuan Chen, Hai Zhao, and Rui Wang. Neural network language model for chinese pinyin input method engine …

NER Models Using Pre-training and Transfer Learning for Healthcare

AK Tarcar, A Tiwari, VN Dhaimodker, P Rebelo… – arXiv preprint arXiv …, 2019 – arxiv.org

… Learning, Named Entity Recognition, Natural Language Processing, Pre-Training, Language Modeling, Electronic Health … Language models are capable of adjusting to changes in the textual domain with … widespread, ranging from identifying dates and cities in chatbots to open …

Hierarchical reinforcement learning for open-domain dialog

A Saleh, N Jaques, A Ghandeharioun, JH Shen… – arXiv preprint arXiv …, 2019 – arxiv.org

… could also unlock new, beneficial applications of AI, such as companion chatbots for therapy … Hierarchical models have been investigated extensively in the context of MLE language modeling (Serban et al … We create two language model baselines by training on this dataset …

Conversation Generation with Concept Flow

H Zhang, Z Liu, C Xiong, Z Liu – arXiv preprint arXiv:1911.02707, 2019 – arxiv.org

… The rapid advancements of language modeling and natural language generation (NLG) techniques have enabled fully data-driven conversation … encoded latent concept flow is integrated to the response generation with standard conditional language models: during decoding …

Open-Domain Dialogue Generation: Presence, Limitation and Future Directions

H Yang, W Rong, Z Xiong – Proceedings of the 2019 7th International …, 2019 – dl.acm.org

… it will certainly increase the expression ability and expression diversity of the chatbot.(2) information … [7] Y. Bengio, R. Ducharme, and P. Vincent, “A neural probabilistic language model,” in Proceedings of … [41] B. Liu and I. Lane, “Dialog context language modeling with recurrent …

Adversarial Language Games for Advanced Natural Language Intelligence

Y Yao, H Zhong, Z Zhang, X Han, X Wang… – arXiv preprint arXiv …, 2019 – arxiv.org

… For the judge system, we trained a language model using BERT (Devlin et al., 2019) to … A defender without sense of defending, which is the case of most chatbots, will be … high-perplexity but unreadable sentences that hacks the evaluation method based on language modeling …

Study and Experimentation of Gender Bias in Co-reference Resolution

F Alfaro – 2019 – upcommons.upc.edu

… Theoretical Background BERT is a model trained for masked language modeling (LM) word prediction and sentence prediction using a transformer network (Vaswani et al., 2017a) … Co-reference resolution is vital to tasks like machine translation and tools like chatbots …

HDGS: A Hybrid Dialogue Generation System using Adversarial Learning

H Yu, A Li, R Jiang, Y Jia, X Zhao… – 2019 IEEE Fourth …, 2019 – ieeexplore.ieee.org

… Generative methods can generate new sequences as response by language model, but the … entire body of text is redacted, the generative model becomes a general language modeling … Open-domain chat-bots are usually implemented either by generative methods or retrieval …

Chatting about data

L Martinico – project-archive.inf.ed.ac.uk

… an adversary and mitigations, we conduct some experiments to establish how feasible our protocol is, using current state-of-the-art language models and available … In this private conversation mode, use of chatbots is disabled; the only commercial chatbot platforms that …

Pun generation with surprise

H He, N Peng, P Liang – arXiv preprint arXiv:1904.06828, 2019 – arxiv.org

… ate this principle for pun generation in two ways: (i) as a measure based on the ratio of probabilities under a language model, and (ii) a … et al., 2016), story generation (Meehan, 1977; Peng et al., 2018; Fan et al., 2018; Yao et al., 2019), and social chatbots (Weizenbaum, 1966 …

Classification as Decoder: Trading Flexibility for Control in Multi Domain Dialogue

S Shleifer, M Chablani, N Katariya, A Kannan… – 2019 – openreview.net

… data on a combination of two loss functions: next-utterance classification loss and language modeling (next word … (2019) prepend source URLs to documents during language model pretraining in … work to ours is Wan & Chen (2018)’s AirBNB customer service chatbot, which also …

Generating question-answer hierarchies

K Krishna, M Iyyer – arXiv preprint arXiv:1906.02622, 2019 – arxiv.org

… or SPECIFIC. We then use these labels as input to a pipelined system centered around a conditional neural language model. We extensively … 3.6 Leveraging pretrained language models Recently, pretrained language models …

Dynamic transfer learning for named entity recognition

P Bhatia, K Arumae, EB Celikkaya – International Workshop on Health …, 2019 – Springer

… [21] leverage unsupervised pretraining in the form of forward and backward language modeling to initialize … was also used for semantic parsing [6], and co training language models [15 … our model on other sequential problems such as translation, summarization, chat bots as well …

Automatic Speech Recognition for Real Time Systems

R Singh, H Yadav, M Sharma… – 2019 IEEE Fifth …, 2019 – ieeexplore.ieee.org

… Tools: IRSTLM (or the language modeling toolkit of your … RNN Acoustic model with language model(5 Gram) and prefix beam search … Chatbots are becoming more sophisticated and contextually-aware, making them able to anticipate and respond to individual customer needs …

AInix: An open platform for natural language interfaces to shell commands

D Gros – 2019 – cs.utexas.edu

… from the beginning to be able to be eventually extended to a diverse range of tasks like checking the weather, purchasing something online, or interacting with Internet of Things (IoT) devices, and be able to support varying interfaces such as voice assistants, chatbots, or video …

Self-attention architectures for answer-agnostic neural question generation

T Scialom, B Piwowarski, J Staiano – … of the 57th Annual Meeting of the …, 2019 – aclweb.org

… improvements over the state of the art in several tasks such as language modelling and machine … cover a broad range of scenarios, such as Information Retrieval, chat-bots, AI-supported … to other QA datasets, and adapting it to use pre-trained language models such as BERT …

Deep learning based emotion recognition system using speech features and transcriptions

S Tripathi, A Kumar, A Ramesh, C Singh… – arXiv preprint arXiv …, 2019 – arxiv.org

… language processing solutions, such as voice-activated systems, chatbots, etc … a speech from different users using probabilistic acoustic and language models [3], which … Word embedding describes feature learning and language modelling techniques where words and phrases …

Dialogue quality and nugget detection for short text conversation (STC-3) based on hierarchical multi-stack model with memory enhance structure

HE Cherng, CH Chang – NTCIR14. p. to appear, 2019 – research.nii.ac.jp

… a plenty of time and human resources, and provide a 24-hour chatbot to answer … in NTCIR-12 as the first step toward natural language conversation for chatbots … semi-supervised sequence learning, multi-task training, bi-directional transformer [18], and masked language model …

Identifying facts for chatbot’s question answering via sequence labelling using recurrent neural networks

M Nuruzzaman, OK Hussain – Proceedings of the ACM Turing …, 2019 – dl.acm.org

… the order of the word of the user input sentences and language modelling or semantics … recurrent neural networks, an architecture for the development of an AI chatbot system with … at, M., Burget, L., Černocký, J., Khudanpur, S.: Recurrent neural network based language model …

PT-CoDE: Pre-trained Context-Dependent Encoder for Utterance-level Emotion Recognition

W Jiao, MR Lyu, I King – arXiv preprint arXiv:1910.08916, 2019 – arxiv.org

… Unlike these feature-based approaches, another trend is to pre-train some architecture through a language model objective, and then fine-tune the … Language modeling (LM) (Józefowicz et al., 2016; Melis et al., 2018) is the most prevalent way to pre-train universal sentence …

Dynamic Working Memory for Context-Aware Response Generation

Z Xu, C Sun, Y Long, B Liu, B Wang… – … on Audio, Speech …, 2019 – ieeexplore.ieee.org

… conversations with human beings [4], [6]–[8]. In other words, responses from Chat-bots are ex … in dialog are similar to the long distance dependency issue in language modeling, and are … a hidden vector, and generate responses conditional on the recurrent language model [30] …

A multi-task hierarchical approach for intent detection and slot filling

M Firdaus, A Kumar, A Ekbal… – Knowledge-Based Systems, 2019 – Elsevier

… Also, by handling intent and slot together, we can build an end-to-end natural language understanding (NLU) module for any task-oriented chatbot … In [38], a pre-trained language model was employed in an RNN framework for the slot filling task …

Pre-screening Textual Based Evaluation for the Diagnosed Female Breast Cancer (WBC).

M Alhlffee – Revue d’Intelligence Artificielle, 2019 – researchgate.net

… The core is consisting of several tool techniques includes LSTM neural networks model, bi-gram and tri-gram language modelling, python language model, flask framework, SQL Alchemy toolkit and … Chatbots: An overview types, architecture, tools and future possibilities …

Keep calm and switch on! preserving sentiment and fluency in semantic text exchange

SY Feng, AW Li, J Hoey – arXiv preprint arXiv:1909.00088, 2019 – arxiv.org

… Another use of STE is in building emotionally aligned chatbots and virtual assistants … 2.4 Review Generation Hovy (2016) generates fake reviews from scratch using language models … to the original, and may be desired in cases such as creating a lively chatbot or correcting …

ntuer at semeval-2019 task 3: Emotion classification with word and sentence representations in rcnn

P Zhong, C Miao – arXiv preprint arXiv:1902.07867, 2019 – arxiv.org

… With the rise of social media platforms such as Twitter, as well as chatbots such as Amazon Alexa … embedding named BERT by extending the context to both directions and training on the masked language modelling task … Universal language model fine-tuning for text classification …

WAAC: An End-to-End Web API Automatic Calls Approach for Goal-Oriented Intelligent Services

Y Li, S Liu, T Jin, H Gao – International Journal of Software …, 2019 – World Scientific

… to either predict a token from the standard vocabulary of the language model thereby ensuring … and challenges of programming in current and future eras of chatbot … to a variety of applications including statistical machine translation, language modeling, paraphrase generation …

A deep look into neural ranking models for information retrieval

J Guo, Y Fan, L Pang, L Yang, Q Ai, H Zamani… – Information Processing …, 2019 – Elsevier


Towards Evaluating the Complexity of Sexual Assault Cases with Machine Learning

B Mammo, P Narwelkar, R Giyanani – engineering.lehigh.edu

… These QA systems (often one of the underlying implementations of a chatbot aimed at answering customer enquiries … BERT creates its language model by defining the prediction target using two unsupervised training strategies, “Masked Language Modelling” (MLM) and …

Curriculum Learning Strategies for IR: An Empirical Study on Conversation Response Ranking

G Penha, C Hauff – arXiv preprint arXiv:1912.08555, 2019 – arxiv.org

… that curriculum strategies can benefit neural network training with experimental results on different tasks such as shape recognition and language modelling … The recent breakthroughs of these large and heavily pre-trained language models have also benefited IR [55,54,57] …

Standardization of Robot Instruction Elements Based on Conditional Random Fields and Word Embedding

H Wang, Z Zhang, J Ren, T Liu – Journal of Harbin Institute of …, 2019 – hit.alljournals.cn

… about destination, transportation, accommodation, etc., and also different from ordinary chatbots which usually have … [10], Mnih A, Hinton G. Three new graphical models for statistical language modelling … [11], Mnih A, Hinton G. A scalable hierarchical distributed language model …

Semantic and Discursive Representation for Natural Language Understanding

D Sileo – 2019 – tel.archives-ouvertes.fr

… Understanding the needs of humans paves the way for their automatic fulfilment (as in chatbot systems, robotics or information retrieval) … erating costs. Some tasks can rely on other tasks; for instance, a chatbot system (1-1c) can be decomposed into modules …

Natural Language Processing, Understanding, and Generation

A Singh, K Ramasubramanian, S Shivam – Building an Enterprise Chatbot, 2019 – Springer

… Some chatbots are heavy on generative responses, and others are built for retrieving information and … Since this book is about building an enterprise chatbot, we will focus more on … All the language models in spaCy are trained using deep learning, which provides high accuracy …

Does gender matter? towards fairness in dialogue systems

H Liu, J Dacon, W Fan, H Liu, Z Liu, J Tang – arXiv preprint arXiv …, 2019 – arxiv.org

… Chatbots should talk politely with human users … Language Modeling. In [38] a measurement is introduced for measuring gender bias in a text generated from a language model that is trained on a text corpus along with measuring the bias in the training text itself …

Viana: Visual interactive annotation of argumentation

F Sperrle, R Sevastjanova, R Kehlbeck… – … IEEE Conference on …, 2019 – ieeexplore.ieee.org

… is improved over time by incorporating linguistic knowledge and language modeling to learn a … linguistically-informed applications like semantic search engines, chatbots or human … locution suggestion workflow combines linguistic annotations with a language model and learns …

Neural architectures for natural language understanding

Y Tay – 2019 – dr.ntu.edu.sg

… Firstly, it is common to completely dispense with handcrafted features, relying on distributed representations pre-trained in an unsupervised manner from large corpora (eg, word embeddings [16] or pretrained language models [15, 17]). Secondly, a multitude of neural com …

Development of Large Scale English-Urdu Machine Translation Corpus for Statistical and Neural Machine Translation Systems

M Hussain – 2019 – dspace.cuilahore.edu.pk

… tion extraction, authoring tools, chatbots, information retrieval, language learn … phrases, and (3) Ordering the Output using language model. PB-SMT model is … An MT technique that is based on syntactic language modelling and focuses on …

Commonsense reasoning for natural language understanding: A survey of benchmarks, resources, and approaches

S Storks, Q Gao, JY Chai – arXiv preprint arXiv:1904.01172, 2019 – researchgate.net

Commonsense Reasoning for Natural Language Understanding: A Survey of Benchmarks, Resources, and Approaches. Shane Storks, Qiaozi Gao …

A Text Abstraction Summary Model Based on BERT Word Embedding and Reinforcement Learning

Q Wang, P Liu, Z Zhu, H Yin, Q Zhang, L Zhang – Applied Sciences, 2019 – mdpi.com

… Multi-Turn Chatbot Based on Query-Context Attentions and Dual Wasserstein Generative … a single unified model, which combines the advantages of universal language model BERT, extraction … So self-attention is widely used in language modeling [19], sentiment analysis [20 …

Dialogue Systems and Conversational Agents for Patients with Dementia: The Human–Robot Interaction

A Russo, G D’Onofrio, A Gangemi, F Giuliani… – Rejuvenation …, 2019 – liebertpub.com

… incoming speech signal. The vast majority of speech recognizers adopt a stochastic/probabilistic approach by combining an acoustic model and a language model for a given language (eg, English, Italian, French, etc.). In a …

Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language …

K Inui, J Jiang, V Ng, X Wan – Proceedings of the 2019 Conference on …, 2019 – aclweb.org

EMNLP-IJCNLP 2019: 2019 Conference on Empirical Methods in Natural Language Processing and 9th International Joint Conference on Natural Language Processing. Proceedings of the Conference, November 3–7, 2019, Hong Kong, China …

From Monolingual to Multilingual FAQ Assistant using Multilingual Co-training

M Patidar, S Kumari, M Patwardhan… – Proceedings of the 2nd …, 2019 – aclweb.org

Proceedings of the 2nd Workshop on Deep Learning Approaches for Low-Resource NLP (DeepLo), pages 115–123, Hong Kong, China, November 3, 2019. © 2019 Association for Computational Linguistics https://doi.org/10.18653/v1/P17 115 …

An Analysis Tool for Spoken Language in VR

G Gilbert – idc.ac.il

… Bengio et al. [3], taking the statistical language modeling approach, suggested a trained model to reduce the … 5. TickTock and IRIS from WOCHAT (Workshops and Session Series on Chatbots and Conversational Agents) (http://workshop.colips.org/ wochat/data/index.html) …

Knowledge-Aware Self-Attention Networks for Document Grounded Dialogue Generation

X Tang, P Hu – … Conference on Knowledge Science, Engineering and …, 2019 – Springer

… (2) non-task-oriented dialog system (also known as chat bots): it does … the fluency of the models, we use perplexity, which is generally used in statistical language modeling … Chollet, M., Laksana, E., Morency, LP, Scherer, S.: Affect-LM: a neural language model for customizable …

The speech interface as an attack surface: an overview

M Bispham, I Agrafiotis, M Goldsmith – International Journal On …, 2019 – ora.ox.ac.uk

… Current task-based dialogue systems have some similarity with chatbots in that they are … Gaussian Mixture Models (GMMs) for the acoustic modelling and n-grams for the language modelling … particular type of DNN, have replaced n-grams to extract language model probabilities …

Troubling trends in machine learning scholarship

ZC Lipton, J Steinhardt – Queue, 2019 – dl.acm.org

… Phil Blunsom demonstrated that a series of published improvements in language modeling, originally attributed … as in Cotterell et al., which discovers that existing language models handle inflectional … Note that recent interest in chatbot startups co-occurred with anthropomorphic …

Ensemble-based method of answers retrieval for domain specific questions from text-based documentation

I Safiulin, N Butakov, D Alexandrov… – Procedia Computer …, 2019 – Elsevier

… Many companies want or prefer to use chatbot systems to provide smart assistants for … The Dirichlet prior based retrieval method represents language modeling approaches [16] … method uses the Dirichlet prior smoothing method to smooth a document language model and then …

Unsupervised Text Representation Learning with Interactive Language

H Cheng – 2019 – digital.lib.washington.edu

… neural language model (NLM) with multiple subvectors, each of which captures a different salient factor of the interactive language … language modeling [9], etc … (aka chatbots) have been developed for entertainment, companionship and education purpose …

The dialogue dodecathlon: Open-domain knowledge and image grounded conversational agents

K Shuster, D Ju, S Roller, E Dinan, YL Boureau… – arXiv preprint arXiv …, 2019 – arxiv.org

… Several large language modeling projects have been undertaken in order to show prowess in multi-tasking ability (Radford et al., 2019; Keskar et al., 2019), and transformer-based approaches have been adapted to language and vision tasks as well (Lu et al., 2019; Tan and …

Detecting offensive language using transfer learning

A de Bruijn, V Muhonen, T Albinonistraat, W Fokkink… – 2019 – science.vu.nl

… A better understanding of language models could help to build a chatbot, or a … NN), like the Feedforward Neural Network (FFNN), and RNN have been introduced for language modelling … (Bengio, Ducharme, Vincent, & Jauvin, 2003) presented the first FFNN Language Model …

Towards Fast and Unified Transfer Learning Architectures for Sequence Labeling

P Bhatia, K Arumae, B Celikkaya – 2019 18th IEEE …, 2019 – ieeexplore.ieee.org

… leverage unsupervised pre-training in the form of forward and backward language modeling to initialize … was also used for semantic parsing [18], and co training language models [37] … our model on other sequential problems such as translation, summarization, chat bots as well …

Sémantické porozumění konverzaci

P Lorenc – 2019 – dspace.cvut.cz

… Each conversation has 20 turns on average. It sounds like very promising data for future development in chatbots’ field. Usually chatbot gets a query q and system returns a response r. Respond model has usually two options …

Recent advances in natural language inference: A survey of benchmarks, resources, and approaches

S Storks, Q Gao, JY Chai – arXiv preprint arXiv:1904.01172, 2019 – arxiv.org

Recent Advances in Natural Language Inference: A Survey of Benchmarks, Resources, and Approaches. Shane Storks …

Listening between the lines: Learning personal attributes from conversations

A Tigunova, A Yates, P Mirza, G Weikum – The World Wide Web …, 2019 – dl.acm.org

… will then be a distant source of background knowledge for personalization in downstream applications such as Web-based chatbots and agents in … General-purpose chatbot-like agents show decent performance in benchmarks (eg, [13, 20, 37]), but critically rely on sufficient train …

Emotional Conversation Generation Based on a Bayesian Deep Neural Network

X Sun, J Li, X Wei, C Li, J Tao – ACM Transactions on Information …, 2019 – dl.acm.org

… However, traditional neural language models tend to generate a generic reply with poor semantic logic and no emotion … In application scenarios such as chatbots and virtual personal assistants, to give the machine a more natural and friendly ability to communicate with humans …

A logical-based corpus for cross-lingual evaluation

F Salvatore, M Finger, R Hirata Jr – arXiv preprint arXiv:1905.05704, 2019 – arxiv.org

… it can be used to improve consistency in real case applications, such as chat-bots Welleck et al … (2018), when we pre-trained a Transformer network in the language modeling task and … This bidirectional model, pre-trained as a masked language model and as a next sentence …

Improving Low Resource Turkish Speech Recognition with Data Augmentation and TTS

R Gokay, H Yalcin – … Multi-Conference on Systems, Signals & …, 2019 – ieeexplore.ieee.org

… These systems are used for information services, chat-bots, dialog systems or smart … y). The coefficient ? controls the relative contributions of the language model and the … from chaotically synthesized data.” [8] E. Arısoy, “Statistical and discriminative language modeling for Turkish …

Humour-in-the-loop: Improvised Theatre with Interactive Machine Learning Systems

KW Mathewson – 2019 – era.library.ualberta.ca

… 2.2.3 Language Modelling … 7.3.3 Tuning Language Models with Rewards … 7.3.4 Adapting Language Models in Real-Time … 7.3.5 Evaluating Conversational Dialogue … B.8 Chatbot Competitions …

BoFGAN: Towards A New Structure of Backward-or-Forward Generative Adversarial Nets

MKS Chen, X Lin, C Wei, R Yan – The World Wide Web Conference, 2019 – dl.acm.org

… 2 RELATED WORK 2.1 RNN and Sequence-to-Sequence An RNN is widely used in language modeling, which is capable of capturing sequential dependency … Character-level RNN language model [32] has been applied to various generation tasks at early times …

Towards Emotion Intelligence in Neural Dialogue Systems

C Huang – 2019 – era.library.ualberta.ca

… NNLM: Neural Net Language Model … RNNLM: Recurrent Neural Network Language Modeling … humans and chatbots. Figure 1.1 illustrates an example of a conversation session between a human and a chatbot system …

Paraphrase generation and evaluation: a view from the trenches

W Franus, B Twardowski… – … , Industry, and High …, 2019 – spiedigitallibrary.org

… makes it more attractive for real-life application, eg conversation systems like chat-bots … issues, eg ignoring the latent code completely and regressing to a generic language model … contains the evaluation of presented method in few scenarios: (1) language modeling for Penn …

Example-Driven Question Answering

D Wang – 2019 – lti.cs.cmu.edu

… B Chatbots Interview: bJFK vs bNixon … C Chatbots Interview: bStarWars vs bTrump vs bHillary … Bibliography … List of Figures: 1.1 Workflow Diagram of the Example-driven Question Answering …

Neural Approaches for Syntactic and Semantic Analysis

S Kurita – 2019 – repository.kulib.kyoto-u.ac.jp

… matical analyses of texts to natural language understanding and application systems such as machine translation systems and chatbots … as machine translation, question-answering, chatbots and searching systems are called application studies …

Stick to the Facts: Learning towards a Fidelity-oriented E-Commerce Product Description Generation

Z Chan, X Chen, Y Wang, J Li, Z Zhang, K Gai… – Proceedings of the …, 2019 – aclweb.org

… Greenberg et al., 2018; Katiyar and Cardie, 2018). In (Ji et al., 2017), they proved that adding entity related information can improve the performance of language modeling. Building upon this work, in (Clark et al., 2018), they …

Black-box attacks via the speech interface using linguistically crafted input

MK Bispham, AJ van Rensburg, I Agrafiotis… – … on Information Systems …, 2019 – Springer