Notes:

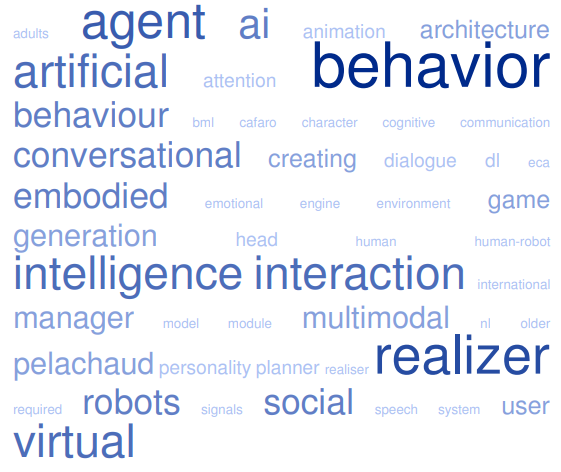

A behavior realizer (also spelled behaviour realiser) is a behavior generator, often driven by Behavior Markup Language (BML). Its task is to realize the behaviors scheduled by the behavior planner: it turns the planner's blueprint into concrete actions. Concretely, the behavior realizer takes BML behavior specifications and transforms them into overt behaviors of an embodied conversational agent, such as speech, gestures, and facial expressions. A behavior realizer may also execute these communicative actions in a game engine.
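The core job described above, turning a BML block into a timed sequence of behaviors, can be sketched in Python. This is a minimal sketch under stated assumptions, not the algorithm of any particular realizer such as AsapRealizer or Greta: the `schedule_bml` function, the `DEFAULT_DURATIONS` table, and the example lexemes are hypothetical, and only plain `id:start` / `id:end` sync references are supported (BML also allows offsets and other sync points such as `stroke`).

```python
import xml.etree.ElementTree as ET

# Hypothetical default durations in seconds per behavior type. A real realizer
# would obtain these from its TTS engine and animation library.
DEFAULT_DURATIONS = {"speech": 1.5, "gesture": 0.8, "head": 0.5, "face": 1.0}

EXAMPLE_BML = """
<bml xmlns="http://www.bml-initiative.org/bml/bml-1.0" id="bml1" characterId="agent">
  <speech id="s1" start="0"><text>Hello there!</text></speech>
  <gesture id="g1" lexeme="BEAT" start="s1:start"/>
  <head id="h1" lexeme="NOD" start="s1:end"/>
</bml>
"""


def local_name(tag):
    """Drop an XML namespace prefix: '{http://...}speech' -> 'speech'."""
    return tag.rsplit("}", 1)[-1]


def schedule_bml(bml_text):
    """Return a list of (start, end, type, id) tuples sorted by start time."""
    root = ET.fromstring(bml_text.strip())
    # Map each behavior id to its type and raw 'start' attribute.
    behaviors = {el.get("id"): (local_name(el.tag), el.get("start", "0")) for el in root}
    resolved = {}  # id -> (start, end)

    def resolve(bid, visiting=()):
        if bid in resolved:
            return resolved[bid]
        if bid in visiting:
            raise ValueError(f"cyclic sync reference involving {bid!r}")
        btype, raw = behaviors[bid]
        if ":" in raw:
            # Sync reference such as "s1:start" or "s1:end".
            ref_id, sync = raw.split(":", 1)
            ref_start, ref_end = resolve(ref_id, visiting + (bid,))
            if sync == "start":
                start = ref_start
            elif sync == "end":
                start = ref_end
            else:
                raise ValueError(f"unsupported sync point {sync!r}")
        else:
            start = float(raw)  # absolute time in seconds
        end = start + DEFAULT_DURATIONS.get(btype, 1.0)
        resolved[bid] = (start, end)
        return resolved[bid]

    return sorted((*resolve(bid), btype, bid) for bid, (btype, _) in behaviors.items())


if __name__ == "__main__":
    for start, end, btype, bid in schedule_bml(EXAMPLE_BML):
        print(f"{start:.1f}-{end:.1f}s {btype} {bid}")
```

For the example block, the beat gesture and the speech both start at 0.0 s, and the head nod is scheduled at 1.5 s, when the speech ends. A real realizer would then hand each scheduled behavior to the animation engine and report progress back to the behavior planner.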

The architecture of an agent may include six basic blocks:

- Human Behavior Detector

- Human Behavior Interpreter

- Attention Tracker

- Intention Planner

- Behavior Planner

- Behavior Realizer

The Human Behavior Detector is responsible for detecting and recognizing human behavior, such as facial expressions, body language, and vocal cues. The Human Behavior Interpreter then interprets the detected behavior to infer the human’s intentions or emotional state. The Attention Tracker monitors the attention of the human the agent is interacting with, as well as the agent’s own focus of attention. The Intention Planner combines the interpreted human behavior with the agent’s goals to decide what the agent intends to communicate. The Behavior Planner turns these intentions into the specific behaviors the agent will display, and the Behavior Realizer executes them.
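To make the data flow between the six blocks concrete, here is a toy pipeline in Python. Every function name, field, and rule in it is a hypothetical stub chosen for illustration; real detectors and planners rely on perception models and dialogue managers, and a real realizer drives an animation engine or robot rather than returning strings.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """Output of the (hypothetical) Human Behavior Detector."""
    smiling: bool
    looking_at_agent: bool
    utterance: str


def detect(frame: dict) -> Percept:
    # Human Behavior Detector: 'frame' holds pre-computed sensor features (assumed).
    return Percept(
        smiling=frame.get("smile", 0.0) > 0.5,
        looking_at_agent=frame.get("gaze") == "agent",
        utterance=frame.get("asr", ""),
    )


def interpret(p: Percept) -> dict:
    # Human Behavior Interpreter: infer emotional state and intention.
    emotion = "happy" if p.smiling else "neutral"
    intent = "greet" if p.utterance.lower().startswith(("hi", "hello")) else "other"
    return {"emotion": emotion, "intent": intent}


def track_attention(p: Percept) -> str:
    # Attention Tracker: this stub only considers the human's gaze.
    return "engaged" if p.looking_at_agent else "distracted"


def plan_intention(interp: dict, attention: str) -> str:
    # Intention Planner: choose what the agent wants to communicate.
    if attention == "distracted":
        return "regain_attention"
    return "greet_back" if interp["intent"] == "greet" else "acknowledge"


def plan_behavior(intention: str, interp: dict) -> list:
    # Behavior Planner: map the intention to abstract (modality, lexeme) pairs.
    behaviors = {
        "regain_attention": [("speech", "Excuse me?"), ("gesture", "WAVE")],
        "greet_back": [("speech", "Hello!"), ("head", "NOD")],
        "acknowledge": [("head", "NOD")],
    }[intention]
    if interp["emotion"] == "happy":
        behaviors.append(("face", "SMILE"))
    return behaviors


def realize(behaviors: list) -> list:
    # Behavior Realizer: a real one would animate the agent; this returns commands.
    return [f"{modality}:{value}" for modality, value in behaviors]


def step(frame: dict) -> list:
    p = detect(frame)
    interp = interpret(p)
    intention = plan_intention(interp, track_attention(p))
    return realize(plan_behavior(intention, interp))


if __name__ == "__main__":
    print(step({"smile": 0.9, "gaze": "agent", "asr": "Hello robot"}))
    # prints ['speech:Hello!', 'head:NOD', 'face:SMILE']
```

Keeping each block behind its own function mirrors the modular split described above: any stub can be replaced by a real component without touching the others.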

- Behavior Planner is a component of an agent’s architecture that is responsible for planning the specific actions that the agent will take based on its goals, the interpreted human behavior, and the attention of the human it is interacting with.

- Behavior Realizer Module is a component of an agent’s architecture that is responsible for executing the actions planned by the Behavior Planner. It converts the planned actions into physical or virtual actions, such as moving a robotic arm or generating speech.

- BML (Behavior Markup Language) is an XML-based language used to describe the behavior of an agent, mainly in animation and virtual agents. It specifies an agent’s speech, gestures, facial expressions, and other actions, together with the synchronization constraints between them, in a form that a Behavior Realizer can execute. In the SAIBA framework it is complemented by FML (Function Markup Language), which describes the agent’s communicative intent rather than its surface behavior.

- BMLR (Behavior Markup Language Realizer) is a software tool that is responsible for interpreting and executing the behavior descriptions written in BML (Behavior Markup Language). It converts the BML code into actions, gestures, facial expressions, and speech that can be performed by an agent, whether it’s a virtual agent or a robotic agent.

- FAPs (Facial Animation Parameters) are a set of parameters defined in the MPEG-4 standard for describing facial movement. Each parameter encodes an elementary deformation of the face, such as raising an inner eyebrow or opening the jaw, and combinations of them produce full expressions, for example raised eyebrows for surprise. A behavior realizer can compute FAP frames to animate a virtual character’s face, and FAPs can also serve as a reference for analyzing human facial expressions.

- SAIBA Framework is a framework for building embodied conversational agents that interact with humans in a natural, human-like way. SAIBA stands for “Situation, Agent, Intention, Behavior, Animation”. It divides multimodal behavior generation into three stages (intent planning, behavior planning, and behavior realization) connected by two interface languages: FML between intent planning and behavior planning, and BML between behavior planning and behavior realization. Because these interfaces are shared, modules such as BML realizers and FAP-based animation engines can be developed independently and combined.
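As an illustration of how a realizer might turn sparse facial keyframes into per-frame FAP values, the sketch below linearly interpolates between keyframes. The parameter names imitate the MPEG-4 naming style, while the amplitudes, the 25 fps frame rate, and the `interpolate_faps` helper are assumptions made for illustration; production systems may use other interpolation curves.

```python
def interpolate_faps(keyframes, fps=25):
    """Linearly interpolate sparse FAP keyframes into one dict per animation frame.

    keyframes: list of (time_in_seconds, {fap_name: value}) with at least two
    entries, sorted by time, each setting the same FAP names.
    """
    if len(keyframes) < 2:
        raise ValueError("need at least two keyframes")
    n_frames = int(round(keyframes[-1][0] * fps)) + 1
    frames, k = [], 0
    for f in range(n_frames):
        t = f / fps
        # Advance to the keyframe segment [t0, t1] that contains t.
        while k < len(keyframes) - 2 and t > keyframes[k + 1][0]:
            k += 1
        (t0, v0), (t1, v1) = keyframes[k], keyframes[k + 1]
        alpha = 0.0 if t1 == t0 else min(max((t - t0) / (t1 - t0), 0.0), 1.0)
        frames.append({name: v0[name] + alpha * (v1[name] - v0[name]) for name in v0})
    return frames


# Illustrative eyebrow raise (values in FAP units are made up):
# rise for 0.2 s, hold, then relax by 1.0 s.
BROWS_UP = {"raise_l_i_eyebrow": 200, "raise_r_i_eyebrow": 200}
BROWS_REST = {"raise_l_i_eyebrow": 0, "raise_r_i_eyebrow": 0}
KEYFRAMES = [(0.0, BROWS_REST), (0.2, BROWS_UP), (0.8, BROWS_UP), (1.0, BROWS_REST)]

if __name__ == "__main__":
    frames = interpolate_faps(KEYFRAMES)
    print(len(frames), round(frames[2]["raise_l_i_eyebrow"], 3))  # prints 26 80.0
```

Linear interpolation keeps the sketch short; easing curves would give a more natural onset and release of the expression.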

Resources:

- asap-project.org: AsapRealizer

- Greta: real-time three-dimensional embodied conversational agent

- embots.dfki.de/embr: EMBR (Embodied Agents Behavior Realizer)

References:

- Artificial intelligence moving serious gaming: Presenting reusable game AI components (2019)

- Toward incorporating emotions with rationality into a communicative virtual agent (2011)

See also:

Behavior Realization & Artificial Intelligence | BML (Behavior Markup Language) & Dialog Systems | Psyclone AIOS (Artificial Intelligence Operating System) | SmartBody

Artificial intelligence moving serious gaming: Presenting reusable game AI components

W Westera, R Prada, S Mascarenhas… – Education and …, 2020 – Springer

… Computer games have been linked with artificial intelligence (AI) since the first program was designed to play chess (Shannon 1950) … 4.8.3 AI approach … The behaviour planner that produces the BML also gets information back from the behaviour realizers about the success and …

Flipper 2.0: a pragmatic dialogue engine for embodied conversational agents

J van Waterschoot, M Bruijnes, J Flokstra… – Proceedings of the 18th …, 2018 – dl.acm.org

… Flipper provides a technically stable and robust dialogue management system to integrate with other components of ECAs such as behaviour realisers … Commercial cloud-based services such as LUIS.AI, Wit.ai, DialogFlow, Watson, Lex and Recast.ai all use a neural network …

A novel unity-based realizer for the realization of conversational behavior on embodied conversational agents

I Mlakar, Z Kacic, M Borko, M Rojc – International Journal of Computers, 2017 – iaras.org

… The task of the behaviour realizer is then to realize the behaviour on some targeted virtual entity [13] … conversational agent EVA, based on a novel conversational-behavior generation algorithm. Engineering Applications of Artificial Intelligence, 57, 80-104 …

Generative Model of Agent’s Behaviors in Human-Agent Interaction

S Dermouche, C Pelachaud – 2019 International Conference on …, 2019 – dl.acm.org

… For example, the “behavior realizer” computes FAPs (Facial Animation Parameters) frames to animate agent’s facial expression and BAPs (Body Animation Parameters) frame for agent’s body movement like head and torso movements …

Advanced Content and Interface Personalization through Conversational Behavior and Affective Embodied Conversational Agents

M Rojc, Z Kačič, I Mlakar – Artificial Intelligence: Emerging Trends …, 2018 – books.google.com

… Artificial Intelligence-Emerging Trends and Applications 78 The model builds on the notion that verbal to co-verbal alignment and synchronization are driving forces … Both are maintained within the EVA behavior realizer and implemented as a series of standalone modules …

Virtual general game playing agent

HE Helgadóttir, S Jónsdóttir, AM Sigurdsson… – … on Intelligent Virtual …, 2016 – Springer

… Finally the behavior realizer schedules and executes BML chunks, such as moving pieces, changing poses … AI Games 1(1), 4–15 (2009). 5. Cafaro, A … In: Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, IJCAI 2015 …

Non-Verbal Behaviour of a VR Agent Playing a Board Game

GI Baldursdóttir – 2018 – skemman.is

… emotion (eg being surprised by move of the player, see [1]), personality (the Big Five personality traits explained in [1]), and a behaviour realizer (see figure … It has three listeners, one to listen for changes in the state of the board-game and two for the GGP AI [1]. The InputModule …

An embodied virtual agent platform for emotional Stroop effect experiments: A proof of concept

A Oker, N Glas, F Pecune, C Pelachaud – Biologically inspired cognitive …, 2018 – Elsevier

… Behavior realizer describes multimodal behaviors as they are to be realized by the final stage of the generation process by the … in real time, such as gestures, mimics, head nods, and emotional speech, then by triggering appropriate action with artificial intelligence modules like …

Pedagogical Agents as Team Members: Impact of Proactive and Pedagogical Behavior on the User

M Barange, J Saunier, A Pauchet – Proceedings of the 16th …, 2017 – aamas2017.org

… Audio /text Control Flow Data Flow Virtual World Avatar Audio /text Dialogue Manager Behavior Realizer Perception Decision Making Task Planner Turn Handler NLU NLG Pedagogical Module Pedagogical Library Interaction layer Cognitive layer …

Nonverbal Behavior in

A Cafaro, C Pelachaud… – The Handbook of …, 2019 – books.google.com

… multimodal signals. The internal behavior realizer instantiates the corresponding multimodal behaviors, it handles the synchronization with speech, and procedurally generates animations for the ECA. …

Nonverbal behavior in multimodal performances

A Cafaro, C Pelachaud, SC Marsella – The Handbook of Multimodal …, 2019 – dl.acm.org

6 Nonverbal Behavior in Multimodal Performances. Angelo Cafaro, Catherine Pelachaud, Stacy C. Marsella. 6.1 Introduction: The physical, nonverbal behaviors that accompany face-to-face interaction convey a wide variety …

Creating new technologies for companionable agents to support isolated older adults

CL Sidner, T Bickmore, B Nooraie, C Rich… – ACM Transactions on …, 2018 – dl.acm.org

17 Creating New Technologies for Companionable Agents to Support Isolated Older Adults. CANDACE L. SIDNER, Worcester Polytechnic Institute; TIMOTHY BICKMORE, Northeastern University; BAHADOR NOORAIE …

Towards self-explaining social robots. Verbal explanation strategies for a needs-based architecture

S Stange, H Buschmeier, T Hassan, C Ritter, S Kopp – 2019 – pub.uni-bielefeld.de

… that explanations vary by context and specifically the knowledge of the person requesting the explanation [3]. For the most part, this work on ‘explainable AI’ is aimed at … Action Planner Behavior Realizer … In Proceedings of the 15th National Conference on Artificial Intelligence …

Biologically Inspired Cognitive Architectures

A Oker, N Glas, F Pecune, C Pelachaud – researchgate.net

… Behavior realizer describes multimodal behaviors as they are to be realized by the final stage of the generation process by the … in real time, such as gestures, mimics, head nods, and emotional speech, then by triggering appropriate action with artificial intelligence modules …

An architecture for a socially adaptive virtual recruiter in job interview simulations

A Ben-Youssef, M Chollet, H Jones… – Proceedings of the …, 2015 – gipsa-lab.grenoble-inp.fr

… $\text{Dominance} = \frac{1}{n}\sum_{i=1}^{n}\left(\mathrm{val}(a_i) \times \mathrm{AxisDominance}(a_i)\right)$ (14) … This BML message is then interpreted by our virtual agent platform’s Behaviour Realizer, which creates an animation for the virtual recruiter … Artificial Intelligence (2005), 0–7. 16 …

A novel realizer of conversational behavior for affective and personalized human machine interaction-EVA U-Realizer

I MLAKAR, Z KAČIČ, M BORKO… – WSEAS Trans. Environ …, 2018 – academia.edu

… Key-Words: – embodied conversational agents, personalized interaction, co-verbal behavior, behavior realizer, animation, virtual reality, mixed reality, multimodal interaction … The second component is the EVA Behavior Realizer, which is also the focus of this paper …

Non-participatory user-centered design of accessible teacher-teleoperated robot and tablets for minimally verbal autistic children

J Li, D Davison, A Alcorn, A Williams… – Proceedings of the 13th …, 2020 – dl.acm.org

… The architecture of the child-robot interaction component of the DE-ENIGMA system (same as [34], except ours adds tablets) features a Dialogue Manager, Behavior Realizer and Agent Control Engine (Figure 2). The dialogue manager responds to user events and generates …

A reference architecture for social head gaze generation in social robotics

V Srinivasan, RR Murphy, CL Bethel – International Journal of Social …, 2015 – Springer

This article outlines a reference architecture for social head gaze generation in social robots. The architecture discussed here is grounded in human communication, based on behavioral robotics…

Usability assessment of interaction management support in LOUISE, an ECA-based user interface for elders with cognitive impairment

P Wargnier, S Benveniste, P Jouvelot… – Technology and …, 2018 – content.iospress.com

… Once the behavior that should be produced by the character is determined by the interaction manager, its BML description is sent to the behavior realizer, composed of a behavior controller, a voice synthesizer and a game engine … 3.4 Behavior realizer …

Fostering user engagement in face-to-face human-agent interactions: a survey

C Clavel, A Cafaro, S Campano… – Toward Robotic Socially …, 2016 – Springer

… A direct link between this module and the Behavior Realizer allows the agent to exhibit reactive behaviors by quickly producing the behavior to … multi-party group interaction required a more powerful framework that integrated the Greta platform with the Impulsion AI Engine [101] …

An Intelligent Architecture for Autonomous Virtual Agents Inspired by Onboard Autonomy

K Hassani, WS Lee – Intelligent Systems’ 2014, 2015 – Springer

… It consists of intent planner, behavior planner and behavior realizer … 31–36. IEEE Press (2013) 6. Muscettola, N., Nayak, P., Pell, B., Williams, B.: Remote Agent: To Boldly Go Where no AI System has Gone Before … In: 21st National Conference on Artificial Intelligence, pp …

Towards attention monitoring of older adults with cognitive impairment during interaction with an embodied conversational agent

P Wargnier, A Malaisé, J Jacquemot… – 2015 3rd IEEE VR …, 2015 – ieeexplore.ieee.org

… Note that the interaction manager requires feedback from the behavior realizer, mostly to know when the previous utterance is over. Behavior realizer Attention estimator … Explorations in engagement for humans and robots. Artificial Intelligence, 166(12):140–164, Aug. 2005 …

Generation of communicative intentions for virtual agents in an intelligent virtual environment: application to virtual learning environment

B Nakhal – 2017 – tel.archives-ouvertes.fr

… 3.4.1 Implementation of Behavior Planner and Behavior Realizer interfaces; 3.4.2 Integrating ECA platforms … ILE: Interactive Learning Environments; AI: Artificial Intelligence …

Leveraging the Dynamics of Non-Verbal Behaviors For Social Attitude Modeling

S Dermouche, C Pelachaud – IEEE Transactions on Affective …, 2020 – ieeexplore.ieee.org

Using Biosocial Theory as a Model for Agent Emotion

RJ van Dinther – 2016 – dspace.library.uu.nl

… 2014). Though targeted at clinical therapy and psychological disorders, it makes some assumptions and claims that are interesting from an AI perspective … situation. From an AI perspective, this is rather like a filtering heuristic …

Offloading cognitive load for expressive behaviour: small scale HMMM with help of smart sensors

BT Manen – 2019 – essay.utwente.nl

… A speech recognition module together with a synthesizer module are required for the dialogue manager and servos for the behaviour realizer … Without any soldering required, it enables the user to use artificial intelligence pre-loaded on a SD card to create a smart google …

Territoriality and Visible Social Commitment for Virtual Agents

J Rossi, L Veroli, M Massetti – skemman.is

… FML Function Markup Language AI Artificial Intelligence ECA Embodied Conversational Agents … This definition leads to a need of an Artificial Intelligence (AI) to simulate human behaviours and, in fact, there are a lot of studies on this topic: the one just mentioned …

Model for verbal interaction between an embodied tutor and a learner in virtual environments

R Querrec, J Taoum, B Nakhal… – Proceedings of the 18th …, 2018 – dl.acm.org

… The content of the sentences uttered by the user is parsed using Artificial Intelligence Markup Language (AIML)1. In this … communicative intentions, the Behavior Planner, which transforms the agent intentions in multi-modal signals and the Behavior Realizer, which realizes the …

D4.7 1st Expressive Virtual Characters

F Yang, C Peters – 2016 – prosociallearn.eu

… EC: European Commission; NPC: Non-player character; RPG: Role-playing game; AI: Artificial intelligence; ECA: Embodied Conversational Agent; PSGM: Prosocial Skill Game Model … 29/06/2016 | ProsocialLearn | D4.7 1st Expressive Virtual Characters …

A User Perception–Based Approach to Create Smiling Embodied Conversational Agents

M Ochs, C Pelachaud, G Mckeown – ACM Transactions on Interactive …, 2017 – dl.acm.org

… lexicon. The morphological and dynamic characteristics to adjust the face for the appropriate signals are described in the Facelibrary. Finally the behavior realizer outputs the animation parameters for each of these signals. Our …

of deliverable Final prototype description and evaluations of the virtual coaches

G Huizing, B Donval, M Barange, R Kantharaju… – 2019 – council-of-coaches.eu

… Council of Coaches Technical Prototype”). • ASAP: A behaviour realiser for choreographing and realising multimodal behaviours of multiple agents (eg robots, chatbots, embodied virtual agents). It was developed specifically …

Topic management for an engaging conversational agent

N Glas, C Pelachaud – International Journal of Human-Computer Studies, 2018 – Elsevier

Virtual human technologies for cognitively-impaired older adults’ care: the LOUISE and Virtual Promenade experiments

P Wargnier – 2016 – pastel.archives-ouvertes.fr

… I also thank the interns whom I had the pleasure of supervising and/or who contributed to this work, namely, in order of appearance: Julien Jacquemot, Adrien Malaisé, Michel Bala, Edmond Phuong, Paul-Emile Fauquet and Chenyang Wang … 5.4.4 Behavior realizer …

Human-robot joint action: Coordinating attention, communication, and actions

CM Huang – 2015 – search.proquest.com

… Acknowledgments: “A year spent in artificial intelligence is enough to make one believe in God.” (Alan Perlis) … Jerry and Mark have particularly provided artificial intelligence and machine learning perspectives to strengthen my research …

Virtual Movement from Natural

H Sarma – 2019 – d-nb.info

… Understanding natural language is too hard that it falls under AI-complete [Yampolskiy, 2013] … makes it difficult to understand textual languages [Linell, 2004]. In the field of artificial intelligence, teaching machines how to understand text is extremely im …

Speech-driven animation with meaningful behaviors

N Sadoughi, C Busso – Speech Communication, 2019 – Elsevier

… from the data. By constraining on prototypical behaviors (eg, head nods), the approach can be embedded in a rule-based system as a behavior realizer creating trajectories that are timely synchronized with speech. The study …

Creating a toolkit for human-robot interaction

J Oosterkamp – 2015 – essay.utwente.nl

… robotic head. The first goal of this project is to create a toolkit for agile human-robot interaction with a focus on modularity and expandability which can be connected to an external inputs such as a social behavior realizer. The …

Field evaluation with cognitively-impaired older adults of attention management in the embodied conversational agent louise

P Wargnier, G Carletti… – … on Serious Games …, 2016 – ieeexplore.ieee.org

… Fig. 1. The Louise virtual character Behavior realizer Attention estimator Dialog script Kinect data Interaction manager … The course of the dialog is automatically interrupted to perform attention prompting when a loss of user’s attention is detected. • Behavior realizer …

A Human Robot Interaction Toolkit with Heterogeneous Multilevel Multimodal Mixing

B Vijver – 2016 – essay.utwente.nl

… autonomous and reactive behavior patterns, executed automatically, external inputs for deliberate behavior by a behavior realizer must be … It was proposed in opposition to traditional symbolic artificial intelligence: instead of guiding behavior by symbolic mental representations …

A robotic social actor for persuasive Human-Robot Interactions

R Cobos Mendez – 2018 – essay.utwente.nl

A robotic social actor for persuasive Human-Robot Interactions. R. (Reynaldo) Cobos Mendez, MSc Report. Committee: Prof.dr.ir. GJM Krijnen, Dr.ir. EC Dertien, Dr.ir. D. Reidsma. March 2018, 006RAM2018, Robotics and …

Things that Make Robots Go HMMM: Heterogeneous Multilevel Multimodal Mixing to Realise Fluent, Multiparty, Human-Robot Interaction

NCEDD Reidsma – researchgate.net

… The BML scripts are then communicated to the robot platform by a Behaviour Realiser (in this project: AsapRealizer [8]), which interprets the BML in terms of the available controls of the robotic embodiment … Dialogue manager Behaviour realizer Agent control engine …

A methodology for the automatic extraction and generation of non-verbal signals sequences conveying interpersonal attitudes

M Chollet, M Ochs, C Pelachaud – IEEE Transactions on …, 2017 – ieeexplore.ieee.org

A Methodology for the Automatic Extraction and Generation of Non-Verbal Signals Sequences Conveying Interpersonal Attitudes. Mathieu Chollet, Magalie Ochs, and Catherine Pelachaud. Abstract: In many applications …