Notes:

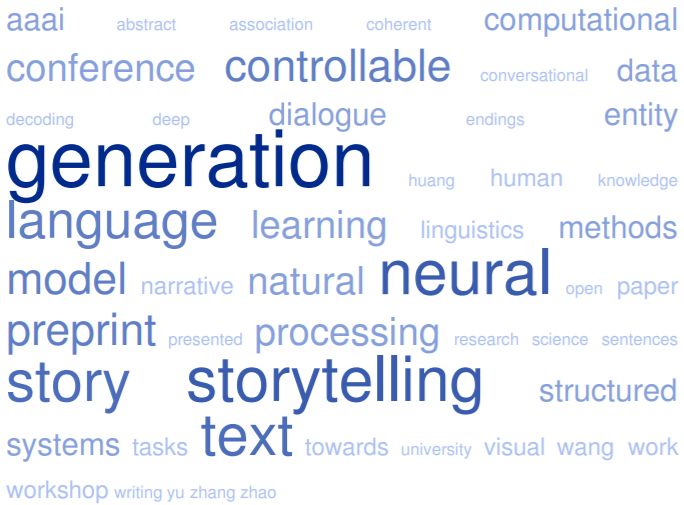

Neural generation is a natural language processing (NLP) technique that uses artificial neural networks to generate text or other natural language content. A network is first trained on a large corpus of text, such as a collection of stories or novels, and is then used to generate new content based on the patterns and structures it learned from that data.

Neural generation is often applied to storytelling, where the goal is narrative content that is coherent and consistent and that follows the rules and conventions of natural language. A network trained on a large collection of stories can generate new stories that resemble the training data in style and content.

Neural generation can also augment existing stories. For example, a network trained on a collection of detective stories might generate new plot twists or characters for a story, or suggest alternative endings or scenarios, giving the reader new and unexpected experiences.
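
The train-then-generate loop described above can be sketched in a few lines. This is a toy illustration only, not the method of any paper listed below: it assumes a one-layer softmax network over characters (real systems use far larger recurrent or transformer networks), a made-up miniature corpus, and hypothetical function names (`train_char_model`, `generate`).

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def train_char_model(text, epochs=200, lr=0.5, seed=0):
    """Fit a one-layer network that predicts the next character from the current one."""
    rng = random.Random(seed)
    vocab = sorted(set(text))
    index = {ch: i for i, ch in enumerate(vocab)}
    size = len(vocab)
    # weights[i][j] is the logit for "character j follows character i".
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(size)] for _ in range(size)]
    pairs = [(index[a], index[b]) for a, b in zip(text, text[1:])]
    for _ in range(epochs):
        for prev, nxt in pairs:
            probs = softmax(weights[prev])
            # Cross-entropy gradient w.r.t. the logits is (probs - one_hot(nxt)).
            for j in range(size):
                target = 1.0 if j == nxt else 0.0
                weights[prev][j] -= lr * (probs[j] - target)
    return vocab, index, weights

def generate(model, prompt, length=40, temperature=1.0, seed=0):
    """Continue `prompt` by sampling one character at a time from the model."""
    vocab, index, weights = model
    if not prompt or prompt[-1] not in index:
        raise ValueError("prompt must end with a character seen in training")
    rng = random.Random(seed)
    out = list(prompt)
    for _ in range(length):
        probs = softmax(weights[index[out[-1]]], temperature)
        out.append(rng.choices(vocab, weights=probs, k=1)[0])
    return "".join(out)

# Hypothetical miniature "story corpus", used only for illustration.
corpus = "the cat sat on the mat. the dog sat on the log. "
model = train_char_model(corpus)
print(generate(model, "the ", length=40))
```

Passing an existing opening as `prompt` corresponds to the augmentation use case: the model continues text it was given rather than starting from nothing. Varying `seed` or `temperature` yields alternative continuations.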

- An entity relationship diagram (ER diagram) is a graphical representation of the entities, relationships, and attributes within a database or information system. It models the structure of the data and illustrates how the entities and their attributes relate to one another. ER diagrams are often used when designing and developing database systems, where they help to visualize and understand a system's data model.

- Generative text is text produced by a computer using algorithms and other computational processes. This includes natural language generation algorithms that produce coherent, consistent text, and generative models that produce text similar in style and content to a given input. Applications include generating stories, news articles, and other narrative content.

- Neural story generation is an NLP technique that uses artificial neural networks to generate narrative content, such as stories or novels. A network is trained on a large corpus of text and then generates new content that is coherent and consistent and that follows the conventions and structures of natural language. It can be used to create new stories or to augment existing ones.

- Neural text generation is closely related to neural story generation but covers any form of text rather than narrative content specifically. It can generate news articles, reports, and other non-narrative text using the same underlying principles and techniques.

- Story generation is the process of creating narrative content, such as stories, novels, or other forms of fiction. It can be done manually by a writer, or automatically using algorithms and computational processes such as neural generation. Story generation can create new stories or augment existing ones, and is applied in contexts such as entertainment and education.

See also:

Neural Dialog Systems | Text Generation

GLACNEt+: Towards Vivid Storytelling by Combining Visual and Dynamic Entity Representations

S Son, BT Zhang, V GLACNet – bi.snu.ac.kr

Neural text generation in stories using entity representations as context

E Clark, Y Ji, NA Smith – Proceedings of the 2018 Conference of the …, 2018 – aclweb.org

… Neural Text Generation in Stories Using Entity Representations as Context … In this work, we incorporate entities into neural text generation models; each entity in a story is given its own vector representation, which is updated as the story unfolds …

My Way of Telling a Story: Persona based Grounded Story Generation

K Chandu, S Prabhumoye, R Salakhutdinov… – … on Storytelling, 2019 – aclweb.org

… Abstract Visual storytelling is the task of generating stories based on a sequence of images. Inspired by the recent works in neural generation focusing on controlling the form of text, this paper explores the idea of generating these stories in different personas …

Plan-and-write: Towards better automatic storytelling

L Yao, N Peng, R Weischedel, K Knight, D Zhao… – Proceedings of the AAAI …, 2019 – aaai.org

… We posit that storytelling systems can benefit from storyline planning to generate more coherent and on-topic stories … Methods We adopt neural generation models to implement our plan-and-write framework, as they have been shown effective in many text generation tasks such …

A hybrid model for globally coherent story generation

F Zhai, V Demberg, P Shkadzko, W Shi… – … Workshop on Storytelling, 2019 – aclweb.org

Proceedings of the Second Storytelling Workshop, pages 34–45 Florence, Italy, August 1, 2019 … Neural text generation models have been shown to perform well at generating fluent sentences from data, but they usually fail to keep track of the overall coherence of the story after …

Automatic text generation in macedonian using recurrent neural networks

I Milanova, K Sarvanoska, V Srbinoski… – … Conference on ICT …, 2019 – Springer

… Abstract. Neural text generation is the process of a training neural network to generate a human understandable text (poem, story, article) … Text generation Storytelling Poems RNN Macedonian language NLP Transfer learning ROUGE-N. Download conference paper PDF …

Storytelling from structured data and knowledge graphs: An NLG perspective

A Mishra, A Laha, K Sankaranarayanan… – Proceedings of the 57th …, 2019 – aclweb.org

… Storytelling from Structured Data and Knowledge Graphs: An NLG Perspective. Abhijit Mishra, Anirban Laha, Karthik Sankaranarayanan, Parag Jain, Saravanan Krishnan … 2016. Neural text generation from structured data with application to the biography domain. In EMNLP …

Generating Diverse Story Continuations with Controllable Semantics

L Tu, X Ding, D Yu, K Gimpel – arXiv preprint arXiv:1909.13434, 2019 – arxiv.org

… Compared to previous work that only seeks to control the values of sentiment (Hu et al., 2017) and length (Kikuchi et al., 2016), we further explore neural text generation with particular verbal predicates, semantic frames, and automatically-induced clusters …

Neural text generation with unlikelihood training

S Welleck, I Kulikov, S Roller, E Dinan, K Cho… – arXiv preprint arXiv …, 2019 – arxiv.org

… ABSTRACT Neural text generation is a key tool in natural language applications, but it is well known there are major problems at its core … 1 INTRODUCTION Neural text generation is a vital tool in a wide range of natural language applications …

Learning to write stories with thematic consistency and wording novelty

J Li, L Bing, L Qiu, D Chen, D Zhao, R Yan – Proceedings of the AAAI …, 2019 – aaai.org

… Introduction Story writing is the new frontier in the research of text generation, and it involves various challenges that exist in the current neural generation systems (Wiseman, Shieber, and Rush 2017). Conventionally, case-based reasoning (Gervás et al …

Image-grounded conversations: Multimodal context for natural question and response generation

N Mostafazadeh, C Brockett, B Dolan, M Galley… – arXiv preprint arXiv …, 2017 – arxiv.org

… et al., 2015) have enabled much interdisciplinary research in vision and language, from video transcription (Rohrbach et al., 2012; Venugopalan et al., 2015), to answering questions about images (Antol et al., 2015; Malinowski and Fritz, 2014), to storytelling around series of …

The curious case of neural text degeneration

A Holtzman, J Buys, L Du, M Forbes, Y Choi – arXiv preprint arXiv …, 2019 – arxiv.org

… Our work addresses the challenges faced by neural text generation with this increased level of freedom, but we note that some tasks, such as goal-oriented dialog, may fall somewhere in between open-ended and directed generation. 3 LANGUAGE MODEL DECODING …

Hierarchically structured reinforcement learning for topically coherent visual story generation

Q Huang, Z Gan, A Celikyilmaz, D Wu, J Wang… – Proceedings of the AAAI …, 2019 – aaai.org

… Our work is also related to (Wang et al. 2018a; 2018b), which uses adversarial training and inverse RL, respectively, for storytelling … 2018) describe the benefits of fine-tuning neural generation systems with policy gradient methods on sentence-level scores (e.g. BLEU or CIDEr) …

Storyboarding of recipes: grounded contextual generation

KR Chandu, E Nyberg, A Black – 2019 – openreview.net

… Sidi Lu, Yaoming Zhu, Weinan Zhang, Jun Wang, and Yong Yu. Neural text generation: past, present and beyond. arXiv preprint arXiv:1803.07133, 2018 … Plan-and-write: Towards better automatic storytelling. arXiv preprint arXiv:1811.05701, 2018 …

Evaluating Image-Inspired Poetry Generation

CC Wu, R Song, T Sakai, WF Cheng, X Xie… – … Conference on Natural …, 2019 – Springer

… While it is not difficult to devise nondeterministic neural generation models, e.g., Cheng et al … With respect to AI based creation like storytelling, poetry generation and writing lyrics, the lack of ground-truth makes the BLEU score less suitable …

Story Quality as a Matter of Perception: Using Word Embeddings to Estimate Cognitive Interest

M Behrooz, J Robertson, A Jhala – Proceedings of the AAAI Conference on …, 2019 – aaai.org

… In Interactive Storytelling. Springer. Bickmore, T., and Cassell, J. 1999. Small talk and conversational storytelling in embodied conversational interface agents … Clark, E.; Ji, Y.; and Smith, NA 2018. Neural text generation in stories using entity representations as context …

Guided neural language generation for automated storytelling

P Ammanabrolu, E Tien, W Cheung, Z Luo… – … on Storytelling, 2019 – aclweb.org

Proceedings of the Second Storytelling Workshop, pages 46–55, Florence, Italy, August 1, 2019. © 2019 Association for Computational Linguistics. Guided Neural Language Generation for Automated Storytelling …

Controlling linguistic style aspects in neural language generation

J Ficler, Y Goldberg – arXiv preprint arXiv:1707.02633, 2017 – arxiv.org

Accepted as a long paper in Stylistic Variation Workshop 2017. Controlling Linguistic Style Aspects in Neural Language Generation. Jessica Ficler and Yoav Goldberg, Computer Science Department, Bar-Ilan University, Israel, {jessica.ficler, yoav.goldberg}@gmail.com …

A Character-Centric Neural Model for Automated Story Generation.

D Liu, J Li, MH Yu, Z Huang, G Liu, D Zhao, R Yan – AAAI, 2020 – aaai.org

… To fill this gap, we propose a character-centric neural storytelling model, where a story is created encircling the given character … previous story generation methods with prior knowledge about story genres, we attempt to explicitly combine deep neural generation networks with …

Entity Skeletons for Visual Storytelling

KR Chandu, RP Dong, AW Black – alvr-workshop.github.io

Entity Skeletons for Visual Storytelling. Khyathi Raghavi Chandu, Ruo-Ping Dong, Alan W Black … 1 Introduction “You’re never going to kill storytelling because it’s built in the human plan. We come with it.” – Margaret Atwood …

Exploring Controllable Text Generation Techniques

S Prabhumoye, AW Black, R Salakhutdinov – arXiv preprint arXiv …, 2020 – arxiv.org

… We don’t divide the generation pipeline into several sub-tasks but we divide the neural text generation process into modules all of which … Xie (2017) provides a practical guide to the neural generation process describing it in terms of initialization, optimization, regularization and …

Narrative Generation in the Wild: Methods from NaNoGenMo

J van Stegeren, M Theune – … of the Second Workshop on Storytelling, 2019 – aclweb.org

Proceedings of the Second Storytelling Workshop, pages 65–74 Florence, Italy, August 1, 2019 … In neural text generation, it is less easy to impose a narrative structure on the generated texts – unless the task is split into two steps, like in the work of Yao et al. (2019) …

Do Massively Pretrained Language Models Make Better Storytellers?

A See, A Pappu, R Saxena, A Yerukola… – arXiv preprint arXiv …, 2019 – arxiv.org

… Decoding algorithms Inspired by Neural Machine Translation, most early attempts at open-ended neural text generation (such as conversational … maximizes P(y|x). However, researchers have shown that for open-ended generation tasks (including storytelling), beam search …

Induction and Reference of Entities in a Visual Story

RP Dong, KR Chandu, AW Black – arXiv preprint arXiv:1909.09699, 2019 – arxiv.org

… In this paper, we address these two stages of introducing the right entities at seemingly reasonable junctures and also referring them coherently in the context of visual storytelling … 1 Introduction “You’re never going to kill storytelling because its built in the human plan …

Generating Narrative Text in a Switching Dynamical System

N Weber, L Shekhar, H Kwon… – arXiv preprint arXiv …, 2020 – arxiv.org

… arXiv:2004.03762v1 [cs.CL] 8 Apr 2020 … Neural generation has since helped scale to open domains (Roemmele and Gordon, 2015; Khalifa et al., 2017) but not with the same level of control over the narrative. Several …

Trading Off Diversity and Quality in Natural Language Generation

H Zhang, D Duckworth, D Ippolito… – arXiv preprint arXiv …, 2020 – arxiv.org

… Neelakantan 4 Abstract For open-ended language generation tasks such as storytelling and dialogue, choosing the right decoding algorithm is critical to controlling the tradeoff between generation quality and diversity. However, there …

Data-to-text generation with entity modeling

R Puduppully, L Dong, M Lapata – arXiv preprint arXiv:1906.03221, 2019 – arxiv.org

… Modeling entities and their communicative actions has also been shown to improve system output in interactive storytelling (Cavazza et al., 2002; Cavazza and Charles, 2005) and dialogue generation (Walker et al., 2011) …

What makes a good conversation? how controllable attributes affect human judgments

A See, S Roller, D Kiela, J Weston – arXiv preprint arXiv:1902.08654, 2019 – arxiv.org

… In this work, we examine two controllable neural text generation methods, conditional training and weighted decoding, in order to control four important attributes for … Neural generation models for dialogue, despite their ubiquity in current research, are still poorly understood …

Hierarchical Summary-to-Article Generation

W Zhou, T Ge, K Xu, F Wei, M Zhou – 2019 – openreview.net

… Among the neural text generation models, most of them decompose text generation by either constructing intermediary output of roughly the same length of the final output (Fan et al., 2019; Xu et al., 2018), or generating a … Plan-and-write: Towards better automatic storytelling …

Strategies for structuring story generation

A Fan, M Lewis, Y Dauphin – arXiv preprint arXiv:1902.01109, 2019 – arxiv.org

Strategies for Structuring Story Generation. Angela Fan (FAIR, Paris; LORIA, Nancy; angelafan@fb.com), Mike Lewis (FAIR, Seattle; mikelewis@fb.com), Yann Dauphin (Google AI; ynd@google.com). Abstract: Writers often rely …

Data-dependent gaussian prior objective for language generation

Z Li, R Wang, K Chen, M Utiyama, E Sumita… – International …, 2019 – openreview.net

… results show that the proposed method makes effective use of a more detailed prior in the data and has improved performance in typical language generation tasks, including supervised and unsupervised machine translation, text summarization, storytelling, and image …

Pun generation with surprise

H He, N Peng, P Liang – arXiv preprint arXiv:1904.06828, 2019 – arxiv.org

… between two word senses in WordNet. 3 Pronouns are mapped to the synset person.n.01. tions with a simple retrieval baseline and a neural generation model (Yu et al., 2018) (Section 4.3). We show that the local-global surprisal …

Unsupervised hierarchical story infilling

D Ippolito, D Grangier, C Callison-Burch… – Proceedings of the First …, 2019 – aclweb.org

… Elizabeth Clark, Yangfeng Ji, and Noah A Smith. 2018a. Neural text generation in stories using entity representations as context … 2018. Towards controllable story generation. In Proceedings of the First Workshop on Storytelling, pages 43–49 …

Story Realization: Expanding Plot Events into Sentences.

P Ammanabrolu, E Tien, W Cheung, Z Luo, W Ma… – AAAI, 2020 – aaai.org

… testing sets, respectively. 5 Experiments We perform two sets of experiments, one set evaluating our models on the event-to-sentence problem by itself, and another set intended to evaluate the full storytelling pipeline. Each of …

A knowledge-grounded multimodal search-based conversational agent

S Agarwal, O Dusek, I Konstas, V Rieser – arXiv preprint arXiv:1810.11954, 2018 – arxiv.org

… With recent progress in deep learning, there is continued interest in the tasks involving both vision and language, such as image captioning (Xu et al., 2015; Vinyals et al., 2015; Karpathy and Fei-Fei, 2015), visual storytelling (Huang et al., 2016), video description …

Posterior Control of Blackbox Generation

XL Li, AM Rush – arXiv preprint arXiv:2005.04560, 2020 – arxiv.org

… Data and Metrics We consider two standard neural generation benchmarks: E2E (Novikova et al., 2017) and WikiBio (Lebret et al., 2016a) datasets, with examples shown in Figure 1. The E2E dataset contains approximately 50K examples with 8 distinct fields and 945 distinct …

A skeleton-based model for promoting coherence among sentences in narrative story generation

J Xu, X Ren, Y Zhang, Q Zeng, X Cai, X Sun – arXiv preprint arXiv …, 2018 – arxiv.org

… 4.1 Dataset We use the recently introduced visual storytelling dataset (Huang et al., 2016) in our experiments. This dataset contains the pairs of photo sequences and the associated coherent narrative of events through time written by humans …

Notes on NAACL 2018

Z Zhu – Machine Learning – ziningzhu.me

… ACL demo 2017) RNNs for storytelling • How to memorize a random 60-bit string … (EMNLP 2016). • Towards controllable story generation? (Peng et al., NAACL 2018 storytelling workshop) • Paper abstract writing through abstract mechanism (Wang et al., ACL 2018) …

Controllable Text Generation

S Prabhumoye – 2020 – cs.cmu.edu

… learning solutions to controlling these variables in neural text generation. I first outline the … I define style as a group of natural language sentences that belong to a particular class or label. I focus on controlling the neural generation process to adhere to a specific style …

Grounding Conversations with Improvised Dialogues

H Cho, J May – arXiv preprint arXiv:2004.09544, 2020 – arxiv.org

Grounding Conversations with Improvised Dialogues. Hyundong Cho and Jonathan May, Information Sciences Institute, University of Southern California, {jcho, jonmay}@isi.edu. Abstract: Effective dialogue involves grounding …

Retrieval-guided Dialogue Response Generation via a Matching-to-Generation Framework

D Cai, Y Wang, W Bi, Z Tu, X Liu, S Shi – Proceedings of the 2019 …, 2019 – aclweb.org

Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, pages 1866–1875, Hong Kong, China, November 3–7, 2019 …

WriterForcing: Generating more interesting story endings

P Gupta, VB Kumar, M Bhutani, AW Black – arXiv preprint arXiv …, 2019 – arxiv.org

arXiv:1907.08259v1 [cs.LG] 18 Jul 2019. WriterForcing: Generating more interesting story endings. Prakhar Gupta, Vinayshekhar Bannihatti Kumar, Mukul Bhutani, Alan W Black. School of Computer Science, Carnegie …

Combining search with structured data to create a more engaging user experience in open domain dialogue

KK Bowden, S Oraby, J Wu, A Misra… – arXiv preprint arXiv …, 2017 – arxiv.org

… Integrating information across multiple sources could also be further explored [4]. Recent work on hybrid neural generation approaches that use knowledge of sentence and discourse planning … In International Conference on Interactive Digital Storytelling, ICIDS’16, 2016 …

Is artificial data useful for biomedical Natural Language Processing algorithms?

Z Wang, J Ive, S Velupillai, L Specia – … of the 18th BioNLP Workshop and …, 2019 – aclweb.org

… 2 Related Work Natural Language Generation. Natural language generation is an NLP area with a range of applications such as dialogue generation, question-answering, machine translation (MT), summarisation, simplification, storytelling, etc …

Learning rhyming constraints using structured adversaries

H Jhamtani, SV Mehta, J Carbonell… – arXiv preprint arXiv …, 2019 – arxiv.org

… We refer to our model as RHYME-GAN. 2.1 Neural Generation Model Our … Xin Wang, Wenhu Chen, Yuan-Fang Wang, and William Yang Wang. 2018. No metrics are perfect: Adversarial reward learning for visual storytelling. In …

From plots to endings: A reinforced pointer generator for story ending generation

Y Zhao, L Liu, C Liu, R Yang, D Yu – CCF International Conference on …, 2018 – Springer

… Experimental results demonstrate that our model outperforms previous basic neural generation models in both automatic evaluation and human evaluation … Stede, M.: Scott R. Turner, the creative process. A computer model of storytelling and creativity …

Enabling Language Models to Fill in the Blanks

C Donahue, M Lee, P Liang – arXiv preprint arXiv:2005.05339, 2020 – arxiv.org

… Elizabeth Clark, Yangfeng Ji, and Noah A Smith. 2018. Neural text generation in stories using entity representations as context … 2019. Plan-and-write: Towards better automatic storytelling. In Association for the Advancement of Artificial Intelligence (AAAI) …

Hybrid retrieval-generation reinforced agent for medical image report generation

Y Li, X Liang, Z Hu, EP Xing – Advances in neural information …, 2018 – papers.nips.cc

Middle-out decoding

S Mehri, L Sigal – Advances in Neural Information Processing …, 2018 – papers.nips.cc

… Generating captions without looking beyond objects. In Workshop on Storytelling with Images and Videos, 2016. S. Hochreiter … L. Huang, K. Zhao, and M. Ma. When to finish? optimal beam search for neural text generation (modulo beam size) …

Narrative context-based data-to-text generation for ambient intelligence

J Jang, H Noh, Y Lee, SM Pantel, H Rim – Journal of Ambient Intelligence …, 2019 – Springer

Journal of Ambient Intelligence and Humanized Computing, https://doi.org/10.1007/s12652-019-01176-7. Original research: Narrative context-based data-to-text generation for ambient intelligence …

The design and implementation of xiaoice, an empathetic social chatbot

L Zhou, J Gao, D Li, HY Shum – Computational Linguistics, 2020 – MIT Press

… users accomplish specific tasks. Over the last 5 years XiaoIce has developed more than 230 skills, ranging from answering questions and recommending movies or restaurants to comforting and storytelling. The most important …

Effective Anchoring for Multimodal Narrative Intelligence

KR Chandu – 2020 – cs.cmu.edu

… With respect to its timeline, neural generative storytelling has gained traction only recently. Similarly, for the factual domain, I present … generating text end to end. Neural text generation models like Radford, Wu, Child, Luan, Amodei …

Bridging the structural gap between encoding and decoding for data-to-text generation

C Zhao, M Walker, S Chaturvedi – Proceedings of the 58th …, 2020 – pdfs.semanticscholar.org

Bridging the Structural Gap Between Encoding and Decoding for Data-To-Text Generation (draft). Chao Zhao†, Marilyn Walker‡ and Snigdha Chaturvedi†. † Department of Computer Science, University of North Carolina …

Stick to the Facts: Learning towards a Fidelity-oriented E-Commerce Product Description Generation

Z Chan, X Chen, Y Wang, J Li, Z Zhang, K Gai… – Proceedings of the …, 2019 – aclweb.org

Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, pages 4960–4969, Hong Kong, China, November 3–7, 2019 …

How to tell Real From Fake? Understanding how to classify human-authored and machine-generated text

D Chakraborty – 2019 – users.cecs.anu.edu.au

… Neural text generation has been able to achieve outstanding outcomes in the past few years [Wu et al., 2016; Hassan et al., 2018]. Some applications of such text generation can be seen in • Creative writing e.g. storytelling, poetry-generation [Ghazvininejad et al., 2016] …

Paraphrase generation with latent bag of words

Y Fu, Y Feng, JP Cunningham – Advances in Neural Information …, 2019 – papers.nips.cc

A knowledge-enhanced pretraining model for commonsense story generation

J Guan, F Huang, Z Zhao, X Zhu, M Huang – Transactions of the …, 2020 – MIT Press

The garden of forking paths: Towards multi-future trajectory prediction

J Liang, L Jiang, K Murphy, T Yu… – Proceedings of the …, 2020 – openaccess.thecvf.com

The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction. Junwei Liang (Carnegie Mellon University), Lu Jiang (Google Research), Kevin Murphy (Google Research), Ting Yu (Google Cloud AI), Alexander Hauptmann (Carnegie Mellon University) …

Deep Learning Approaches to Text Production

S Narayan, C Gardent – Synthesis Lectures on Human …, 2020 – morganclaypool.com

Deep Learning Approaches to Text Production. Synthesis Lectures on Human Language Technologies …

Controlling Contents in Data-to-Document Generation with Human-Designed Topic Labels

K Aoki, A Miyazawa, T Ishigaki, T Aoki, H Noji… – Proceedings of the 12th …, 2019 – aclweb.org

Proceedings of The 12th International Conference on Natural Language Generation, pages 323–332, Tokyo, Japan, 28 Oct – 1 Nov, 2019. © 2019 Association for Computational Linguistics. Controlling Contents in …

Open Domain Event Text Generation.

Z Fu, L Bing, W Lam – AAAI, 2020 – aaai.org

Open Domain Event Text Generation. Zihao Fu (1), Lidong Bing (2), Wai Lam (1). (1) Department of Systems Engineering and Engineering Management, The Chinese University of Hong Kong, Hong Kong; (2) DAMO Academy …

INSET: Sentence Infilling with Inter-sentential Generative Pre-training

Y Huang, Y Zhang, O Elachqar, Y Cheng – arXiv preprint arXiv:1911.03892, 2019 – arxiv.org

… Natural Language Generation. The field of neural text generation has received considerable attention. Popular tasks include machine translation (Sutskever et al., 2014), text summarization (Paulus et al., 2018), and dialogue generation (Rajendran et al., 2018) …

Select and attend: Towards controllable content selection in text generation

X Shen, J Suzuki, K Inui, H Su, D Klakow… – arXiv preprint arXiv …, 2019 – arxiv.org

Select and Attend: Towards Controllable Content Selection in Text Generation. Xiaoyu Shen (1,2), Jun Suzuki (3,4), Kentaro Inui (3,4), Hui Su (5), Dietrich Klakow (1) and Satoshi Sekine (4). (1) Spoken Language Systems (LSV), Saarland …

Ask to Learn: A Study on Curiosity-driven Question Generation

T Scialom, J Staiano – arXiv preprint arXiv:1911.03350, 2019 – arxiv.org

… cently presented by (See et al., 2019). Using controllable neural text generation methods, the authors control important attributes for chit-chat dialogues, including question-asking behavior. Among the take-away messages of …

A Dynamic Emotional Session Generation Model Based on Seq2Seq and a Dictionary-Based Attention Mechanism

Q Guo, Z Zhu, Q Lu, D Zhang, W Wu – Applied Sciences, 2020 – mdpi.com

With the development of deep learning, the method of large-scale dialogue generation based on deep learning has received extensive attention. The current research has aimed to solve the problem of the quality of generated dialogue content, but has failed to fully consider the emotional …

Open-Domain Conversational Agents: Current Progress, Open Problems, and Future Directions

S Roller, YL Boureau, J Weston, A Bordes… – arXiv preprint arXiv …, 2020 – arxiv.org

… One solution is the use of controllable neural text generation methods, in particular conditional training (Fan, Grangier, and Auli, 2018; Kikuchi et al., 2016; Peng et al., 2018) and weighted decoding (Ghazvininejad …

Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing

M Palmer, R Hwa, S Riedel – Proceedings of the 2017 Conference on …, 2017 – aclweb.org

EMNLP 2017: The Conference on Empirical Methods in Natural Language Processing. Proceedings of the Conference, September 9-11, 2017, Copenhagen, Denmark. © 2017 The Association for Computational Linguistics …

KnowSemLM: A Knowledge Infused Semantic Language Model

H Peng, Q Ning, D Roth – Proceedings of the 23rd Conference on …, 2019 – aclweb.org

Proceedings of the 23rd Conference on Computational Natural Language Learning, pages 550–562, Hong Kong, China, November 3-4, 2019. © 2019 Association for Computational Linguistics. KnowSemLM: A Knowledge Infused Semantic Language Model …

The soft edge: Where great companies find lasting success

R Karlgaard – 2014 – books.google.com

… Story Gone Wrong: Dell Leadership and Storytelling Why Cirrus Pioneered the Whole-Airframe Parachute Good Stories and Storytelling When Your Customers Tell a Better Story Than You Can Technology Changes But Stories Endure Data Storytelling Gaining the Edge Notes …

Curating Interest in Open Story Generation

M Behrooz – 2019 – escholarship.org

… This deep cognitive link not only speaks to the reasons behind the influence of stories, but it also outlines specific cognitive processes involved in storytelling between humans … enable a storyteller to tell better stories, to the point that Herman’s Storytelling and the Sciences …

Narrative paths and negotiation of power in birth stories

M Antoniak, D Mimno, K Levy – Proceedings of the ACM on Human …, 2019 – dl.acm.org

… 2.2 Stories and Datasets Storytelling and expressive writing about traumatic experiences can have physical, emotional, and social health benefits [71, 76, 78, 79] and have been studied for their role in therapy and the establishment of social norms …

Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics

D Jurafsky, J Chai, N Schluter, J Tetreault – … of the 58th Annual Meeting of …, 2020 – aclweb.org

ACL 2020: The 58th Annual Meeting of the Association for Computational Linguistics. Proceedings of the Conference, July 5 – 10, 2020 …

Understanding and Generating Multi-Sentence Texts

RK Kedziorski – 2019 – digital.lib.washington.edu

Understanding and Generating Multi-Sentence Texts. Rik Koncel-Kedziorski. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, University of Washington, 2019. Reading Committee: Hannaneh Hajishirzi, Chair …

Creating an Emotion Responsive Dialogue System

A Vadehra – 2018 – uwspace.uwaterloo.ca

Creating an Emotion Responsive Dialogue System, by Ankit Vadehra. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Mathematics in Computer Science. Waterloo, Ontario, Canada, 2018 …

Clinicians’ Experiences of the Application of Interpersonal Neurobiology as a Framework for Psychotherapy

KJ Myers – 2011 – vtechworks.lib.vt.edu

… Cozolino, 2008; Siegel, 2006, 2010). With the IPNB frame, age is not a definitive factor for neural generation or integration, and thus for therapeutic intervention. Research shows that the nervous system creates generative and regenerative change within the brain from birth until …

Treating trauma in adolescents: Development, attachment, and the therapeutic relationship

MB Straus – 2018 – books.google.com

Treating Trauma in Adolescents: Development, Attachment, and the Therapeutic Relationship. Martha B. Straus. The Guilford Press, New York, London …

A survey of the usages of deep learning for natural language processing

DW Otter, JR Medina, JK Kalita – IEEE Transactions on Neural …, 2020 – ieeexplore.ieee.org

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination. IEEE Transactions on Neural Networks and Learning Systems. A Survey of the Usages of Deep Learning for …