Notes:

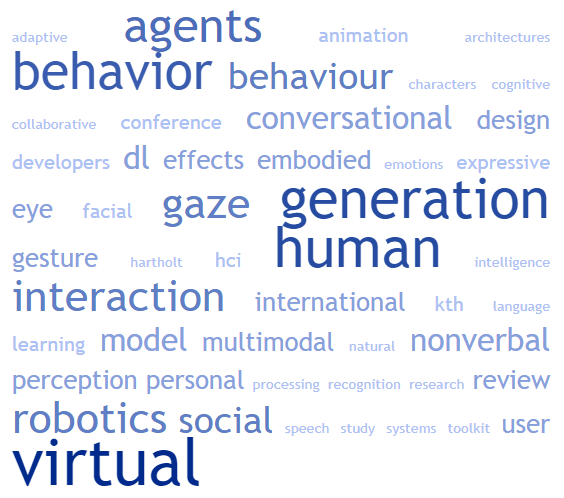

The text discusses the use of virtual humans in a range of applications, from engagement and large-scale social simulations to the design of embodied virtual humans. Virtual humans are supported by technologies such as speech recognition, natural language processing, and nonverbal behavior generation. One line of research generates empathic behavior in embodied conversational agents through the interaction of perceptual and behavior generation modules with a central behavior controller. Other work uses engines such as Unreal to design increasingly realistic, human-like avatars, or builds a deep neural architecture for emotion perception and behavior generation that is trained with reinforcement learning. The text also covers virtual humans in persuasive communication, their effects on human behavior (for example, people disclosing more personal information to a virtual human), and how a virtual agent's personality is linked to its mood and emotions. Further topics include dialogue processing, multimodal perception, and nonverbal behavior generation; charismatic behaviors and emotional facial expressions; and eye animation for social virtual avatars, cognitive architectures, and collaborative human-robot interaction.

A central behavior controller is the component of a virtual human system that coordinates and controls the virtual human's actions and behaviors. It typically receives input from perception modules, which use sensors to observe the environment and the actions and behaviors of other agents. Based on this input, the controller decides on an appropriate response and passes it to behavior generation modules for the virtual human to execute. Its specific design and implementation depend on the goals and requirements of the system in which it is used.
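To make the controller's role concrete, here is a minimal sketch of one possible design, assuming a simple rule-based arbitration scheme. The `Percept`, `Rule`, and `CentralBehaviorController` names, the percept labels, and the confidence threshold are all hypothetical illustrations, not the API of the Virtual Human Toolkit or of any system cited below. Perception modules are represented only by the percepts they emit, and behavior generation modules only by the behavior name the controller returns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Percept:
    label: str         # hypothetical percept label, e.g. "user_smiling"
    confidence: float  # score in [0, 1] reported by a perception module


@dataclass(frozen=True)
class Rule:
    trigger: str   # percept label that activates this rule
    behavior: str  # behavior request handed to the generation modules
    priority: int  # higher priority wins when several rules fire


class CentralBehaviorController:
    """Hypothetical controller: filters percepts, arbitrates, dispatches one behavior per step."""

    def __init__(self, rules, threshold=0.5, default="idle"):
        # Sort once so the first matching rule is always the highest-priority one.
        self.rules = sorted(rules, key=lambda r: r.priority, reverse=True)
        self.threshold = threshold
        self.default = default
        self.log = []  # behaviors dispatched so far, in order

    def step(self, percepts):
        # Ignore percepts the perception modules are not confident about.
        active = {p.label for p in percepts if p.confidence >= self.threshold}
        # Choose the highest-priority rule whose trigger is currently perceived.
        behavior = next((r.behavior for r in self.rules if r.trigger in active), self.default)
        # A real system would forward this to animation, speech, and gaze generators here.
        self.log.append(behavior)
        return behavior


if __name__ == "__main__":
    controller = CentralBehaviorController([
        Rule("user_smiling", "smile_back", priority=1),
        Rule("user_speaking", "attend_and_nod", priority=2),
        Rule("user_frowning", "show_concern", priority=3),
    ])
    print(controller.step([Percept("user_smiling", 0.9), Percept("user_speaking", 0.8)]))  # attend_and_nod
    print(controller.step([Percept("user_frowning", 0.3)]))  # idle (below threshold)
```

Fixed priorities keep the arbitration easy to inspect; a production controller would more likely blend several behaviors at once (for example, nodding while smiling) and schedule them over time rather than emitting a single label per step.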

The exact shape of a deep neural architecture for emotion perception in virtual humans depends on the design and goals of the system. In general, though, such an architecture uses deep neural networks to process data from which emotions can be inferred, such as facial expressions, body language, and speech patterns. It may also include components that recognize patterns and correlations between different emotional indicators (for example, combining what the face and the voice each suggest), as well as components that generate responses or behaviors based on the perceived emotions. In practice it would run on a mix of software and hardware: sensors such as cameras and microphones to capture the signals, and processors capable of the required computation.
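One simple way to combine several emotional indicators is late fusion: each modality gets its own classifier, and their probability outputs are merged afterwards. The sketch below shows only that fusion step, assuming the per-modality neural networks already exist; their outputs are replaced by made-up numbers, and the emotion labels, modality names, and weights are hypothetical. It is one illustrative technique, not the architecture of any specific system listed below.

```python
# Hypothetical emotion labels; a real system would use its own label set.
EMOTIONS = ("happy", "sad", "angry", "neutral")


def late_fusion(modality_scores, weights):
    """Combine per-modality emotion probabilities by a weighted average.

    modality_scores: {"face": {"happy": 0.7, ...}, "voice": {...}, ...}
    weights: {"face": 0.5, ...}; modalities without a weight contribute nothing.
    """
    fused = {e: 0.0 for e in EMOTIONS}
    for modality, scores in modality_scores.items():
        w = weights.get(modality, 0.0)
        for e in EMOTIONS:
            fused[e] += w * scores.get(e, 0.0)
    total = sum(fused.values())
    if total == 0:
        # No usable evidence: fall back to a uniform distribution.
        return {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}
    # Renormalize so the fused scores form a probability distribution.
    return {e: s / total for e, s in fused.items()}


def top_emotion(distribution):
    """Most probable emotion in a fused distribution."""
    return max(distribution, key=distribution.get)


if __name__ == "__main__":
    # Made-up outputs standing in for per-modality neural network classifiers.
    outputs = {
        "face": {"happy": 0.7, "neutral": 0.3},
        "voice": {"happy": 0.4, "sad": 0.1, "neutral": 0.5},
        "text": {"happy": 0.6, "neutral": 0.4},
    }
    fused = late_fusion(outputs, {"face": 0.5, "voice": 0.3, "text": 0.2})
    print(top_emotion(fused))  # happy (fused score about 0.59)
```

Late fusion is easy to extend with new modalities. The trade-off is that it cannot learn cross-modal interactions (such as a smile paired with a sarcastic tone), which is one reason deep architectures often fuse features earlier, inside the network.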

Reinforcement learning is a type of machine learning in which an agent learns to take actions in an environment so as to maximize a cumulative reward. In the context of a deep neural architecture for emotion perception in virtual humans, reinforcement learning could be used to train the virtual human to recognize and respond appropriately to different emotions in a way that maximizes a reward signal.

For example, the virtual human might be trained to respond appropriately to a user's emotions during a conversation, with the reward signal based on the user's level of engagement or satisfaction. It might learn to recognize facial expressions, tone of voice, or other cues associated with different emotions, and then adjust its own behavior (such as its facial expressions, tone of voice, or choice of words) in the way most likely to maximize the reward.
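The engagement-reward idea can be sketched in its simplest form as a contextual bandit, a one-step simplification of reinforcement learning: the perceived emotion is the context, the response style is the action, and engagement is the reward. Everything here is a hypothetical stand-in. The emotion labels, response styles, and `ENGAGEMENT` table are invented, the table simulates the user, and a lookup table of value estimates replaces the deep network a real architecture would use.

```python
import random

# Hypothetical labels: perceived user emotions and candidate response styles.
EMOTIONS = ["happy", "sad", "angry"]
RESPONSES = ["mirror", "comfort", "calm"]

# Hypothetical engagement reward for each (emotion, response) pair. A real system
# would measure engagement or satisfaction from the user; this table simulates it.
ENGAGEMENT = {
    ("happy", "mirror"): 1.0, ("happy", "comfort"): 0.3, ("happy", "calm"): 0.2,
    ("sad", "mirror"): 0.0, ("sad", "comfort"): 1.0, ("sad", "calm"): 0.4,
    ("angry", "mirror"): 0.0, ("angry", "comfort"): 0.5, ("angry", "calm"): 1.0,
}


def train(episodes=3000, epsilon=0.2, seed=0):
    """Epsilon-greedy contextual bandit: learn a value estimate per (emotion, response)."""
    rng = random.Random(seed)
    q = {(e, r): 0.0 for e in EMOTIONS for r in RESPONSES}
    counts = {key: 0 for key in q}
    for _ in range(episodes):
        emotion = rng.choice(EMOTIONS)  # emotion reported by the perception side
        if rng.random() < epsilon:
            response = rng.choice(RESPONSES)  # explore a random response
        else:
            response = max(RESPONSES, key=lambda r: q[(emotion, r)])  # exploit best so far
        key = (emotion, response)
        reward = ENGAGEMENT[key]
        counts[key] += 1
        # Incremental sample average: q moves toward the mean observed reward.
        q[key] += (reward - q[key]) / counts[key]
    return q


def policy(q):
    """Greedy response for each perceived emotion."""
    return {e: max(RESPONSES, key=lambda r: q[(e, r)]) for e in EMOTIONS}


if __name__ == "__main__":
    print(policy(train()))
```

With enough exploration, the learned policy should mirror happiness, comfort sadness, and calm anger, because those pairs carry the highest simulated engagement. Full reinforcement learning would additionally account for how a response changes the user's later emotional state, which a one-step bandit ignores.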

Such an architecture would likely combine neural network models with reinforcement learning algorithms to learn how to recognize and respond to different emotions. One plausible approach is to first train the virtual human on a large dataset of human emotional expressions paired with appropriate responses, and then fine-tune the model through reinforcement learning so that it recognizes and responds to emotions well in real time.
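The two-stage recipe (supervised training on examples, then reinforcement learning fine-tuning) can be illustrated with a small warm-start sketch. Stage 1 turns hypothetical human demonstrations into initial value estimates (a simple form of behavior cloning). Stage 2 refines them with epsilon-greedy updates toward a simulated engagement reward. The demonstrations, labels, `ENGAGEMENT` table, and step size `alpha` are all invented for illustration, and a table again stands in for a neural network. The demonstrations are deliberately imperfect for "angry", so fine-tuning has something to correct.

```python
import random
from collections import Counter

# Hypothetical labels: perceived user emotions and candidate response styles.
EMOTIONS = ["happy", "sad", "angry"]
RESPONSES = ["mirror", "comfort", "calm"]

# Hypothetical labelled demonstrations: (perceived emotion, response a human chose).
# For "angry", demonstrators mostly chose "comfort", which the simulated user likes less.
DEMOS = ([("happy", "mirror")] * 8 + [("sad", "comfort")] * 8
         + [("angry", "comfort")] * 6 + [("angry", "calm")] * 2)

# Hypothetical engagement reward observed after deployment (simulated user).
ENGAGEMENT = {
    ("happy", "mirror"): 1.0, ("happy", "comfort"): 0.3, ("happy", "calm"): 0.2,
    ("sad", "mirror"): 0.0, ("sad", "comfort"): 1.0, ("sad", "calm"): 0.4,
    ("angry", "mirror"): 0.0, ("angry", "comfort"): 0.5, ("angry", "calm"): 1.0,
}


def pretrain(demos):
    """Stage 1 (supervised): initial value = share of demonstrations choosing each response."""
    counts = Counter(demos)
    q = {}
    for e in EMOTIONS:
        total = sum(counts[(e, r)] for r in RESPONSES) or 1
        for r in RESPONSES:
            q[(e, r)] = counts[(e, r)] / total
    return q


def fine_tune(q, episodes=3000, epsilon=0.2, alpha=0.1, seed=0):
    """Stage 2 (RL): epsilon-greedy updates of a copy of q toward observed engagement."""
    q = dict(q)  # leave the pretrained estimates untouched
    rng = random.Random(seed)
    for _ in range(episodes):
        e = rng.choice(EMOTIONS)
        if rng.random() < epsilon:
            r = rng.choice(RESPONSES)  # explore
        else:
            r = max(RESPONSES, key=lambda a: q[(e, a)])  # exploit
        # Constant step size lets the estimate move away from the demonstration prior.
        q[(e, r)] += alpha * (ENGAGEMENT[(e, r)] - q[(e, r)])
    return q


def policy(q):
    """Greedy response for each perceived emotion."""
    return {e: max(RESPONSES, key=lambda a: q[(e, a)]) for e in EMOTIONS}


if __name__ == "__main__":
    pre = pretrain(DEMOS)
    print("after pretraining:", policy(pre))
    print("after fine-tuning:", policy(fine_tune(pre)))
```

After pretraining, the policy simply copies the demonstrators, including their choice of "comfort" for anger. Fine-tuning should shift that choice to "calm", which the simulated user rewards more. Warm-starting from demonstrations spares the agent from exploring blindly, while the reward signal corrects the demonstrators' mistakes.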

See also:

Behavior Generation & Virtual Humans 2010 (41x) | Behavior Generation & Virtual Humans 2011 (70x) | Behavior Generation & Virtual Humans 2012 (45x) | Behavior Generation & Virtual Humans 2013 (60x) | Behavior Generation & Virtual Humans 2014 (59x) | Behavior Generation & Virtual Humans 2015 (47x) | Behavior Generation & Virtual Humans 2016 (49x) | Behavior Generation & Virtual Humans 2017 (46x) | Behavior Generation & Virtual Humans 2018 (42x) | Behavior Generation & Virtual Humans 2019 (53x)

Real-time simulation of virtual humans’ emotional facial expressions, harnessing autonomic physiological and musculoskeletal control

Y Tisserand, R Aylett, M Mortillaro… – Proceedings of the 20th …, 2020 – dl.acm.org

… As with other virtual human animation issues, it is not clear that purely physically-based modelling produces the most graphically-convincing … illustrate an implementation of these effects in Unity3d, as part of our current development of the Geneva Virtual Humans toolkit, which is …

Multi-Platform Expansion of the Virtual Human Toolkit: Ubiquitous Conversational Agents

A Hartholt, E Fast, A Reilly, W Whitcup… – … Journal of Semantic …, 2020 – World Scientific

… relevant technologies available in support of developing virtual humans, from individual … creation of virtual human conversational characters.” The Virtual Human Toolkit supports … recognition [20], natural language processing [27], nonverbal behavior generation [25], nonverbal …

The Design of Charismatic Behaviors for Virtual Humans

N Wang, L Pacheco, C Merchant, K Skistad… – Proceedings of the 20th …, 2020 – dl.acm.org

… NVBG [30] and its later incarnation, Cerebella [31], for real-time nonverbal behavior generation using syntactic … The Design of Charismatic Behaviors for Virtual Humans … Figure 3: The participants’ ratings on the virtual human’s charismatic behaviors, including the use of vivid and …

Introducing Canvas: Combining Nonverbal Behavior Generation with User-Generated Content to Rapidly Create Educational Videos

A Hartholt, A Reilly, E Fast, S Mozgai – Proceedings of the 20th ACM …, 2020 – dl.acm.org

… 2013. All together now, Introducing the Virtual Human Toolkit … 2006. Nonverbal behavior generator for embodied conversational agents … 2015. Cerebella: automatic generation of nonverbal behavior for virtual humans. In Twenty-Ninth AAAI Conference on Artificial Intelligence …

The Passive Sensing Agent: A Multimodal Adaptive mHealth Application

S Mozgai, A Hartholt, A Rizzo – 2020 IEEE International …, 2020 – ieeexplore.ieee.org

… Virtual humans have demonstrated efficacy in the domains of engagement, truthful … researchers and developers with the creation of virtual human conversational characters … speech recognition, natural language processing, nonverbal behavior generation, nonverbal behavior …

An Adaptive Agent-Based Interface for Personalized Health Interventions

S Mozgai, A Hartholt, AS Rizzo – … of the 25th International Conference on …, 2020 – dl.acm.org

… Virtual humans have demonstrated efficacy in the domains of engagement, truthful … Human modeling Four-Count Tactical Breathing D. Virtual Human performance feedback … speech recognition, natural language processing, nonverbal behavior generation, nonverbal behavior …

Music-Driven Animation Generation of Expressive Musical Gestures

A Bogaers, Z Yumak, A Volk – … of the 2020 International Conference on …, 2020 – dl.acm.org

… While audio-driven face and gesture motion synthesis has been studied before, to our knowledge no research has been done yet for automatic generation of musical gestures for virtual humans … Non-Verbal Behavior Generation for Virtual Characters in Group Conversations …

Spontaneous Facial Behavior Revolves Around Neutral Facial Displays

PA Blomsma, J Vaitonyte, M Alimardani… – Proceedings of the 20th …, 2020 – dl.acm.org

… and Unreal engines) allow developers to design increasingly realistic, human-like avatars, both in academic and industrial virtual human projects [38 … 1.2 Facial Action Coding System The second factor that makes natural facial behavior generation for ECAs difficult is the nearly …

Agent-Based Dynamic Collaboration Support in a Smart Office Space

Y Wang, RC Murray, H Bao, C Rose – … of the 21th Annual Meeting of the …, 2020 – aclweb.org

… et al., 2003) and the Azure Speech Recognizer for speech recognition, the USC Institute for Creative Technologies Virtual Human Toolkit (VHT) to present an embodied conversational agent (Hartholt et al., 2013), OpenFace for face recognition … 2.1.4 Agent Behavior Generation …

Towards a New Computational Affective System for Personal Assistive Robots

EB Sönmez, H Köse, DE Barkana – 2020 28th Signal …, 2020 – ieeexplore.ieee.org

… personality of the agent with its mood and emotions are linked and the emotions expressed by the virtual human are evolved … A deep neural architecture for emotion perception and behavior generation has also been developed in where the model uses reinforcement learning to …

Adapting a virtual advisor’s verbal conversation based on predicted user preferences: A study of neutral, empathic and tailored dialogue

H Ranjbartabar, D Richards, AA Bilgin, C Kutay… – Multimodal …, 2020 – mdpi.com

… Expressing Empathy Using Virtual Humans … [14] shows that people tend to disclose more personal information when they interact with a virtual human rather than … The core functionalities are dialogue processing, multimodal perception and nonverbal behaviour generation [25] …

Empathy framework for embodied conversational agents

ÖN Yalçın – Cognitive Systems Research, 2020 – Elsevier

… behaviors. The implementation achieves levels of empathic behavior through the interaction between the components of perceptual and behavior generation modules with the central behavior controller … 4.3. Behavior generation. The …

Navigating the Combinatorics of Virtual Agent Design Space to Maximize Persuasion

D Parmar, S Ólafsson, D Utami, P Murali… – Proceedings of the …, 2020 – researchgate.net

… studied the effects of an animated and static virtual human in a medical virtual … In a study comparing virtual humans, virtual characters, and real actors giving persuasive … the animated behaviors were generated by an automatic nonverbal behavior generator [8] synchronized with …

Emergence of agent gaze behavior using interactive kinetics-based gaze direction model

R Satogata, M Kimoto, S Yoshioka, M Osawa… – Companion of the 2020 …, 2020 – dl.acm.org

… 2007. The rickel gaze model: A window on the mind of a virtual human. In International Workshop on Intelligent Virtual Agents. Springer, 296–303 … 2015. A review of eye gaze in virtual agents, social robotics and hci: Behaviour generation, user interaction and perception …

Development and Evaluation of Agent’s Adaptive Gaze Behaviors

K Horie, T Koda – Proceedings of the 8th International Conference on …, 2020 – dl.acm.org

… [4] Lee J., Marsella S., Traum D., Gratch J., Lance B. 2007. The Rickel Gaze Model: A Window on the Mind of a Virtual Human … [5] K. Ruhland et al. 2015. A Review of Eye Gaze in Virtual Agents, Social Robotics and HCI: Behaviour Generation, User Interaction and Perception …

Inspiring Robots: Developmental trajectories of gaze following in humans

R Fadda, S Congiu, G Doneddu, T Striano – Rivista internazionale di Filosofia …, 2020 – rifp.it

… M. GLEICHER, B. MUTLU, R. MCDONNEL, A review of eye gaze in virtual agents, social robotics and HCI: Behaviour generation, user interaction and … H. MITAKE, S. HASEGAWA, Y. KOIKE, M. SATO, Reactive virtual human with bottom-up and top-down visual attention for gaze …

Towards Socially Interactive Agents: Learning Generative Models of Social Interactions Via Crowdsourcing

D Feng – 2020 – search.proquest.com

… Dr. Stacy Marsella, Adviser Modeling socially interactive agents (SIAs) has become critical in an increasingly wide range of applications, from large-scale social simulations to the design of embodied virtual humans … social simulations to the design of embodied virtual humans …

Incremental learning of an open-ended collaborative skill library

D Koert, S Trick, M Ewerton, M Lutter… – International Journal of …, 2020 – World Scientific

Incremental Learning of an Open-Ended Collaborative Skill Library Dorothea Koert, Susanne Trick, Marco Ewerton, Michael Lutter and Jan Peters, Intelligent Autonomous Systems Group, Department of Computer …

Embedding Conversational Agents into AR: Invisible or with a Realistic Human Body?

J Reinhardt, L Hillen, K Wolf – Proceedings of the Fourteenth …, 2020 – dl.acm.org

… cel-shading) to avoid the uncanny effect [33]. Schwind et al. investigated the negative effects of the Uncanny Valley on the facial characteristics of virtual human faces [29, 30]. They indicated that the most important factors to …

The security-utility trade-off for iris authentication and eye animation for social virtual avatars

B John, S Jörg, S Koppal, E Jain – IEEE transactions on …, 2020 – ieeexplore.ieee.org

Manuscript received 10 Sept. 2019; accepted 5 Feb. 2020 …

Using Human-Inspired Signals to Disambiguate Navigational Intentions

J Hart, R Mirsky, X Xiao, S Tejeda, B Mahajan… – … Conference on Social …, 2020 – Springer

… Lynch, SD, Pettré, J., Bruneau, J., Kulpa, R., Crétual, A., Olivier, AH: Effect of virtual human gaze behaviour during an orthogonal collision … Ruhland, K., et al.: A review of eye gaze in virtual agents, social robotics and HCI: behaviour generation, user interaction and perception …

Using Human-Inspired Signals to Disambiguate Navigational Intentions

P Stone – Social Robotics: 12th International Conference, ICSR … – books.google.com

… Lynch, SD, Pettré, J., Bruneau, J., Kulpa, R., Crétual, A., Olivier, AH: Effect of virtual human gaze behaviour during an orthogonal collision … Ruhland, K., et al.: A review of eye gaze in virtual agents, social robotics and HCI: behaviour generation, user interaction and perception …

Gesticulator: A framework for semantically-aware speech-driven gesture generation

T Kucherenko, P Jonell, S van Waveren… – Proceedings of the …, 2020 – dl.acm.org

Gesticulator: A framework for semantically-aware speech-driven gesture generation Taras Kucherenko KTH, Stockholm, Sweden tarask@kth.se Patrik Jonell KTH, Stockholm, Sweden pjjonell@kth.se Sanne van Waveren KTH, Stockholm, Sweden sannevw@kth.se …

“Let me explain!”: exploring the potential of virtual agents in explainable AI interaction design

K Weitz, D Schiller, R Schlagowski, T Huber… – Journal on Multimodal …, 2020 – Springer

While the research area of artificial intelligence benefited from increasingly sophisticated machine learning techniques in recent years, the resulting sys.

Interpreting and Generating Gestures with Embodied Human Computer Interactions

J Pustejovsky, N Krishnaswamy, R Beveridge… – openreview.net

… 2004. APML, a markup language for believable behavior generation. In Life-like characters. Springer, 65–85 … 2006. Situated interaction with a virtual human perception, action, and cognition. Situat Commun 166 (2006), 287 …

Intrapersonal dependencies in multimodal behavior

PA Blomsma, GM Linders, J Vaitonyte… – Proceedings of the 20th …, 2020 – dl.acm.org

… of their dialogue systems. 2 BACKGROUND 2.1 Embodied Conversational Agents An important part of ECAs’ multimodal behavior generation is the temporal coordination of speech and gestures. Gesture plays a prominent …

Council of Coaches in Virtual Reality

T Petersen – 2020 – essay.utwente.nl

… Figure 8: ASAP (men) and GRETA (women) ECAs in one scene; Figure 9: Examples of generated Virtual Humans called NEONS [19] … platforms are used for multimodal behaviour generation and for visualising …

Gaze by Semi-Virtual Robotic Heads: Effects of Eye and Head Motion

M Vázquez, Y Milkessa, MM Li, N Govil – ras.papercept.net

… JB Badler, NI Badler, M. Gleicher, B. Mutlu, and R. McDonnell, “A review of eye gaze in virtual agents, social robotics and hci: Behaviour generation, user interaction … 36] M. Thiebaux, B. Lance, and S. Marsella, “Real-time expressive gaze animation for virtual humans,” in AAMAS …

Affect-driven modelling of robot personality for collaborative human-robot interactions

N Churamani, P Barros, H Gunes… – arXiv preprint arXiv …, 2020 – arxiv.org

… Yet, current approaches for affective behaviour generation in robots focus on instantaneous perception to generate a one-to-one mapping between observed human expressions and static robot actions. In this paper, we propose …

40 years of cognitive architectures: core cognitive abilities and practical applications

I Kotseruba, JK Tsotsos – Artificial Intelligence Review, 2020 – Springer

In this paper we present a broad overview of the last 40 years of research on cognitive architectures. To date, the number of existing architectures has reached several hundred, but most of the…

Understanding the Digital Future: Applying the Decomposed Theory of Planned Behaviour to the Generation Y’s Online Fashion Purchase Intention while Creating …

E Lancere de Kam, J Diefenbach – 2020 – diva-portal.org

… Keywords – Virtual fitting, virtual fashion, virtual avatar, customised avatar, Theory of Planned Behaviour, Decomposed Theory of Planned Behaviour, Generation Y, online buying … textiles and virtual garments (Kalbaska et al., 2019). Virtual human bodies and clothing are widely …

Context-Aware Robot Behavior Learning for Practical Human-Robot Interaction

??? – 2020 – s-space.snu.ac.kr

… Context-aware behavior generation techniques will become an essential component for implementing future service robots which coexist and interact with real people … 2 Personalized Behavior Generation; 2.1 Related Works …